Hey there,

Let's begin with a story.

The real-life story of Colonel Harland Sanders (KFC), who was disappointed umpteen times and still made his dream come true late in life, is truly inspiring.

He was a seventh-grade dropout who tried many ventures and tasted failure every time. He started selling chicken at the age of 40, but his dream of running a restaurant was set back many times by conflicts and wars.

Later, he tried to franchise his restaurant. His recipe was rejected 1,009 times before it was finally accepted. Soon the secret recipe, “Kentucky Fried Chicken”, became a huge hit worldwide. KFC expanded globally, the company was sold for 2 million dollars, and his face is still celebrated in its logo.

Have you ever given up on a venture just because you were rejected or failed a few times? Could you accept failing 1,009 times? This story inspires everyone to work hard and believe in themselves until they succeed, no matter how many times they have failed.

Main Content From Here

As we mentioned before, several models have performed very well in the ImageNet competition, such as:

- AlexNet

- Inception

- ResNet

These neural networks tend to be very deep and contain millions, even tens of millions, of parameters. The large number of parameters allows the networks to learn more complex patterns and therefore achieve higher accuracy. However, it also means that the models take a long time to train and to make predictions, use a lot of memory, and are computationally expensive.
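To make the memory cost concrete, here is a rough back-of-envelope sketch, assuming every weight is stored as a 32-bit float (4 bytes). The two parameter counts are hypothetical round numbers chosen to show the scaling, not measurements of the models listed above, and a real model also needs memory for activations and, during training, for gradients and optimizer state.

```python
# Rough estimate of the memory needed just to store a model's weights.
# Assumption: each parameter is a 32-bit float (4 bytes). Activations,
# gradients, and optimizer state are NOT included.

def weight_memory_mb(num_params: int, bytes_per_param: int = 4) -> float:
    """Return weight storage in megabytes (1 MB = 1,000,000 bytes)."""
    return num_params * bytes_per_param / 1_000_000


# Hypothetical parameter counts, used only to illustrate the scaling.
for name, params in [("small model", 3_000_000), ("large model", 60_000_000)]:
    print(f"{name}: {params:,} params -> {weight_memory_mb(params):.0f} MB")
```

Memory grows linearly with the parameter count, and so does the work per prediction, which is why a 20× larger model is noticeably harder to run on a phone.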

In this lesson, we will use a state-of-the-art convolutional neural network called MobileNet.

MobileNet uses a very efficient neural network architecture that minimizes the memory and computational resources needed while maintaining a high level of accuracy.

This makes MobileNet ideal for mobile devices, which have limited memory and computational resources. MobileNet was developed by Google and was trained on the ImageNet dataset.
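Much of MobileNet's efficiency comes from depthwise separable convolutions, as described in the MobileNet papers: a standard convolution is split into a per-channel (depthwise) filter followed by a 1 × 1 (pointwise) convolution that mixes channels. The sketch below only counts the weights of a single layer (biases ignored); the kernel size and channel counts are illustrative assumptions, not MobileNet's actual layer sizes.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k convolution mapping c_in channels to c_out channels."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Depthwise k x k filter per input channel, then a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in   # one k x k filter for each input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes the channels
    return depthwise + pointwise


k, c_in, c_out = 3, 32, 64  # illustrative layer size (assumption)
std = standard_conv_params(k, c_in, c_out)
sep = depthwise_separable_params(k, c_in, c_out)
print(f"standard: {std:,}  separable: {sep:,}  ratio: {std / sep:.1f}x")
# prints "standard: 18,432  separable: 2,336  ratio: 7.9x"
```

The ratio works out to k²·c_out / (k² + c_out), so with 3 × 3 kernels the savings approach 9× as the number of output channels grows.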

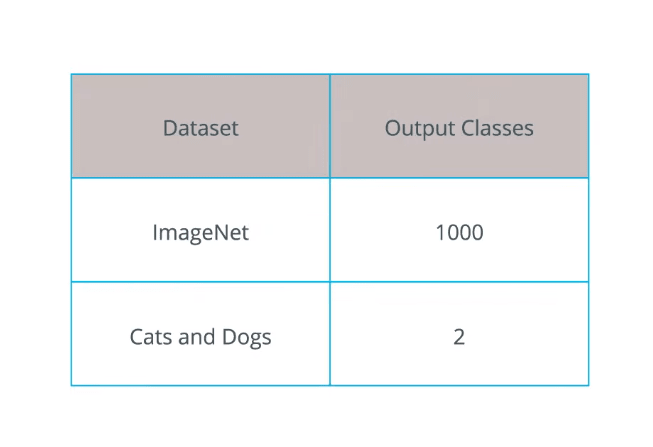

Since it was trained on ImageNet, it has 1,000 output classes, far more than the two in our cats and dogs dataset. To perform transfer learning, we'll download MobileNet's feature extractor, that is, the network without its final classification layer.
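Swapping the 1,000-class layer for a 2-class one also shrinks the part of the network we have to train. The sketch below counts the weights and biases of a fully connected classification layer. The feature size of 1,280 is an assumption (it matches the standard MobileNet v2 feature vector); substitute whatever size your feature extractor actually outputs.

```python
def dense_params(n_inputs: int, n_outputs: int) -> int:
    """Weights plus biases of a fully connected (Dense) layer."""
    return n_inputs * n_outputs + n_outputs


FEATURE_DIM = 1280  # assumed size of the MobileNet v2 feature vector

imagenet_head = dense_params(FEATURE_DIM, 1000)  # original ImageNet classifier
cats_dogs_head = dense_params(FEATURE_DIM, 2)    # replacement 2-class classifier
print(f"ImageNet head: {imagenet_head:,} params")   # 1,281,000
print(f"Cats/dogs head: {cats_dogs_head:,} params")  # 2,562
```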

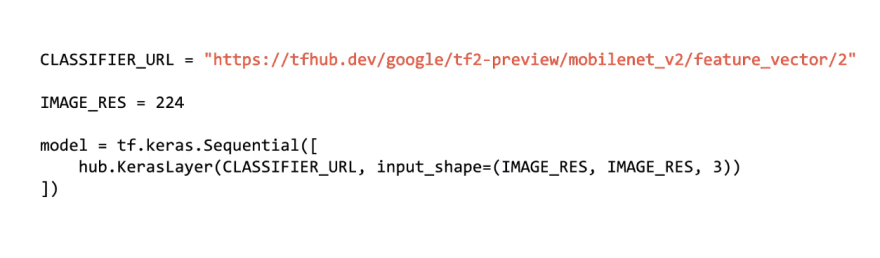

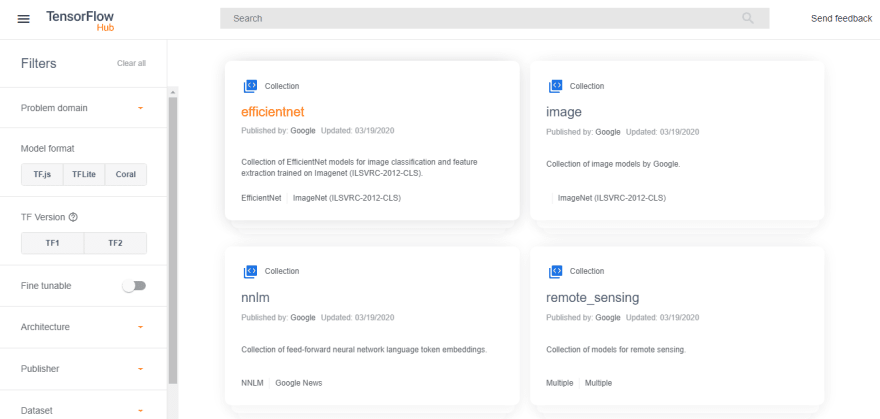

In TensorFlow, the downloaded features can be used as a regular Keras layer (a `KerasLayer`) with a particular input size. Since MobileNet was trained on ImageNet, our inputs have to match the size used during training: in this case, RGB images of 224 by 224 pixels. TensorFlow has a repository of pretrained models called TensorFlow Hub.
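Matching the training input size means every image must be resized to 224 × 224 before it reaches MobileNet. Here is a NumPy-only sketch, assuming images arrive as H × W × 3 `uint8` arrays. It uses nearest-neighbour resizing for simplicity (in practice you would typically call `tf.image.resize`, which defaults to bilinear interpolation) and scales pixels to [0, 1], the range TensorFlow Hub image models generally expect; check the model's page for its exact input convention.

```python
import numpy as np

IMAGE_RES = 224  # MobileNet's expected height and width


def preprocess(image: np.ndarray, size: int = IMAGE_RES) -> np.ndarray:
    """Resize an H x W x 3 uint8 image to size x size (nearest neighbour)
    and scale pixel values from [0, 255] to [0.0, 1.0] as float32."""
    h, w, _ = image.shape
    rows = np.arange(size) * h // size  # source row for each output row
    cols = np.arange(size) * w // size  # source column for each output column
    resized = image[rows][:, cols]
    return resized.astype(np.float32) / 255.0


# A random 300 x 400 "photo" stands in for a real cat or dog image.
rng = np.random.default_rng(0)
photo = rng.integers(0, 256, size=(300, 400, 3)).astype(np.uint8)
x = preprocess(photo)
print(x.shape, x.dtype)  # (224, 224, 3) float32
```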

TensorFlow Hub also offers pretrained models whose last classification layer has been stripped out. Here, you can see one of them: MobileNet version 2.

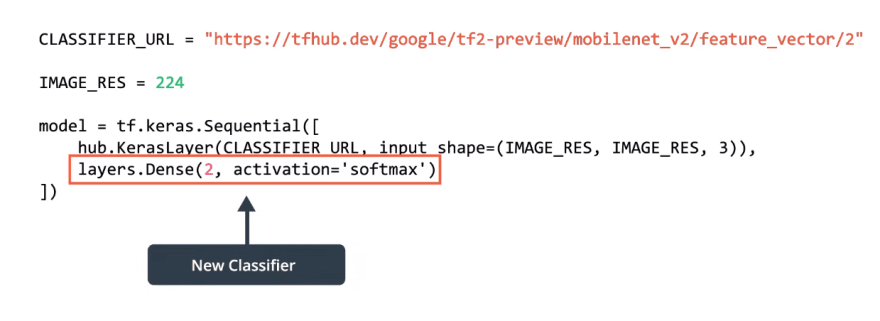

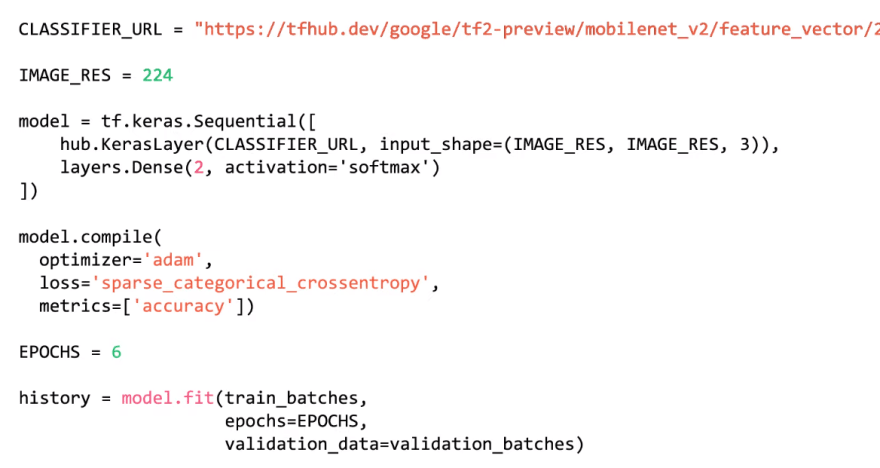

Using TensorFlow Hub from code is simple: you refer to the model's URL and embed the pretrained model as a `KerasLayer` in a `Sequential` model.

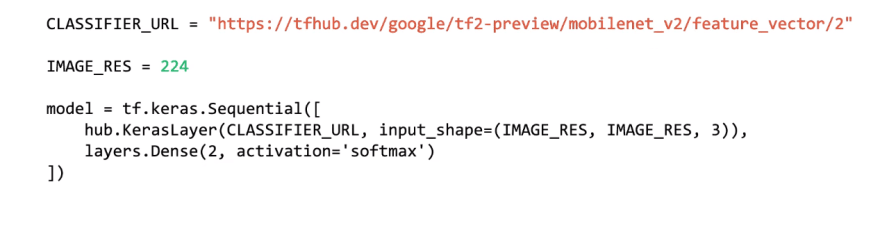

Then, we simply add our classification layer to the end of our model. Since our cat and dog dataset has only two classes, we'll add a dense layer with two outputs and a softmax activation function to our sequential model.

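This dense layer is our new classifier, and it is the part that needs to be trained; the pretrained MobileNet layers are kept frozen (in Keras, by setting the layer's `trainable` attribute to `False`) so that the features they learned on ImageNet are preserved.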
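Conceptually, the new classifier is just a matrix multiplication followed by softmax. This NumPy sketch shows what a dense layer with two outputs and a softmax activation computes on a batch of feature vectors. The 1,280-dimensional features and the random weights are placeholder assumptions, not values from a trained model.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax; subtracting the row max keeps exp() from overflowing."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exps = np.exp(shifted)
    return exps / exps.sum(axis=1, keepdims=True)


def dense_softmax(features: np.ndarray, W: np.ndarray, b: np.ndarray) -> np.ndarray:
    """What a Dense(2) layer with softmax computes: softmax(features @ W + b)."""
    return softmax(features @ W + b)


rng = np.random.default_rng(42)
FEATURE_DIM, NUM_CLASSES = 1280, 2            # assumed sizes
features = rng.normal(size=(4, FEATURE_DIM))  # stand-in for MobileNet outputs
W = rng.normal(scale=0.01, size=(FEATURE_DIM, NUM_CLASSES))
b = np.zeros(NUM_CLASSES)

probs = dense_softmax(features, W, b)
print(probs.shape)        # (4, 2): one probability per class for each image
print(probs.sum(axis=1))  # each row sums to 1 (up to rounding)
```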

We can then train our `Sequential` model just as we did previously, using the `fit` method.
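`fit` hides the training loop. As a rough illustration of what happens to the new layer, here is a NumPy sketch of plain gradient descent on softmax cross-entropy that updates only the dense weights while the input features stay fixed, as they would behind a frozen feature extractor. The synthetic features, learning rate, and step count are assumptions, and Keras uses its own optimizers (for example Adam) rather than this hand-written loop.

```python
import numpy as np


def softmax(logits):
    shifted = logits - logits.max(axis=1, keepdims=True)
    exps = np.exp(shifted)
    return exps / exps.sum(axis=1, keepdims=True)


def cross_entropy(probs, labels):
    """Mean negative log-probability of the correct class."""
    return float(-np.log(probs[np.arange(len(labels)), labels] + 1e-12).mean())


def train_head(features, labels, num_classes=2, lr=0.1, steps=200, seed=0):
    """Gradient descent on a Dense + softmax head; `features` are never updated."""
    rng = np.random.default_rng(seed)
    n, d = features.shape
    W = rng.normal(scale=0.01, size=(d, num_classes))
    b = np.zeros(num_classes)
    one_hot = np.eye(num_classes)[labels]
    losses = []
    for _ in range(steps):
        probs = softmax(features @ W + b)
        losses.append(cross_entropy(probs, labels))
        grad_logits = (probs - one_hot) / n  # gradient of the loss w.r.t. logits
        W -= lr * features.T @ grad_logits   # only the head's weights change
        b -= lr * grad_logits.sum(axis=0)
    return W, b, losses


# Synthetic "frozen features": two clusters standing in for cats and dogs.
rng = np.random.default_rng(1)
cats = rng.normal(loc=-1.0, size=(50, 8))
dogs = rng.normal(loc=+1.0, size=(50, 8))
X = np.vstack([cats, dogs])
y = np.array([0] * 50 + [1] * 50)

W, b, losses = train_head(X, y)
accuracy = (softmax(X @ W + b).argmax(axis=1) == y).mean()
print(f"loss {losses[0]:.3f} -> {losses[-1]:.3f}, train accuracy {accuracy:.2f}")
```

Because only `W` and `b` are updated, training touches a few thousand parameters at most instead of the millions inside the feature extractor, which is what makes transfer learning fast.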

Goodbye. See you later.
