The story of a big bang migration that we had in one of the companies where I worked!

What will you read in this article?

- About the application and the team

- Why we had to migrate the technology

- What is Dgraph? Could a Database replace the Backend?

- How We Did the Migration with a New Data Structure

- The problem of Caching in GraphQL

- Security Issues in GraphQL

- How we Built a Custom UI using GraphQL

- How We Used Elasticsearch Directly on Dgraph

- Regrets and Experiences

About the application

Before getting into the details, I want to clarify what the application was. It was a video streaming application, and users also had access to a selected archive of the content (each piece of content was stored in the platform based on its program’s priority). Most people used this application to watch important football games or famous local TV series. The application had about 14M users at that time, and at peak times we were seeing around 800K-900K concurrent users.

Our Backend Before Migration: Java/MySQL Microservice Architecture

After Migration: Dgraph, with Elixir Phoenix for Authentication

Our Frontend was Angular and remained the same.

Size of the Team

1 Backend Developer (me), 2 Frontend Developers, 3 Site Reliability Engineers, an Engineering Manager and the CTO: 8 people overall.

Why We Had to Migrate the Technology?

If you are reading this expecting to hear that the performance issues came from the programming language, you are in the wrong place: Java was definitely not the problem.

In my personal opinion, these were the problems:

1- Lack of coherence in the Backend Repository

This was the result of having a small team. Most of the time one person, or in some cases two, worked on the backend of this project, and the code base ended up mixing different strategies. Because each developer was usually the only one working on the code base, it was hard to standardize it. Also, in teams without enough resources to grow, there are lots of tasks and bugs that are expected to be tackled before other important things like documentation, tests or standardization.

2- Lack of Documentation

There was no documentation about the code, how it worked, or even why some features existed. We had to ask different people to find out whether something was needed or not. Management had never asked for documentation in the first place, so no one had written any.

3- Inflexibility

Judging by the estimates the backend team (with different seniority levels and a range of skills) gave for small features and bug fixes, it was obvious that the code had lost its flexibility and that the team was not able to change it and ship fast.

4- No Knowledge Transfer

After the last backend developer left the company, we had to deal with a backend repository without documentation or any clues. Unfortunately, there had been no knowledge-transfer process, so we had no choice but to go into maintenance mode for a while.

What is Dgraph? Could a Database Replace the Backend?

We wanted to ship a lot of features fast, and the number of users was increasing, so we also needed a scalable solution. We finally decided to rewrite the whole backend, but there were some problems:

- It was huge and had a lot of logic

- We had only a few months at most to rewrite the whole thing, and the team was super small

- At the same time, new features were expected

A year before this migration, our CTO told me about an interesting database called Dgraph. I started following its newsletter and joined the community forum.

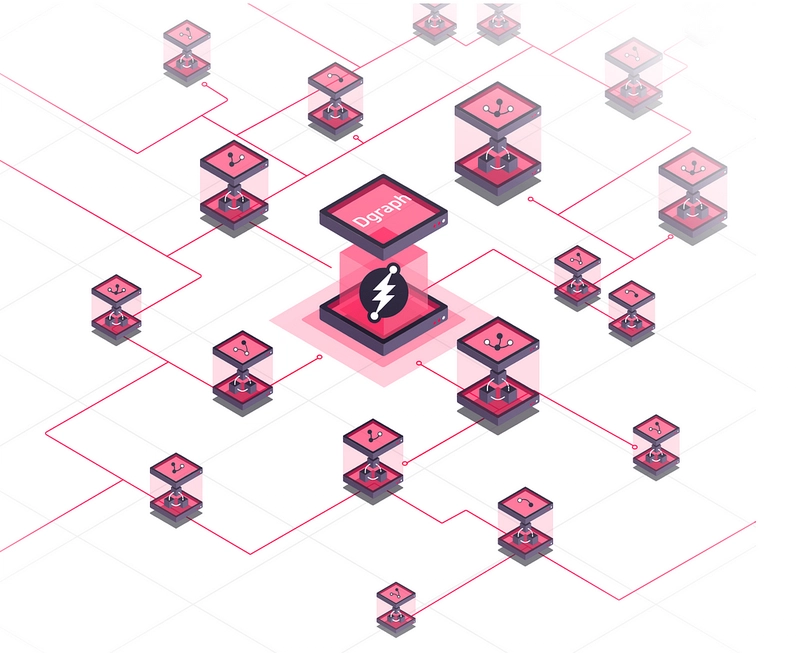

At that time it had just introduced its GraphQL API, which was amazing. If you’ve never heard of Dgraph, it is a graph database written in Go. Besides being a database, it offers APIs generated from your schema. This schema is a plain GraphQL schema with some extra Dgraph-specific directives. As soon as you define your schema, you already have the APIs.

For example, suppose you define this schema as your Dgraph GraphQL schema (the environment here is Dgraph Cloud):

Dgraph GraphQL Schema Deployment and Definition

ID is a unique identifier type, and its values are generated by Dgraph itself.

Now we have a GraphQL API that we can use:

GraphQL APIs Based on GraphQL Schema

For each type, these APIs are generated by default:

1- aggregate[TypeName]

2- get[TypeName]

3- query[TypeName]

4- add[TypeName]

5- update[TypeName]

6- delete[TypeName]
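To make the naming convention concrete, here is a small TypeScript sketch. The schema string is hypothetical, because the article's own schema was only shown as an image. buildQueryRequest is an illustrative helper and not part of any Dgraph client. It only builds the JSON body you would POST to the GraphQL endpoint for the generated query operation, using its first/offset pagination arguments.

```typescript
// Hypothetical schema for illustration; not the schema used in the article.
const exampleSchema = `
type Content {
  id: ID!
  title: String! @search(by: [term])
  tags: [Tag] @hasInverse(field: contents)
}

type Tag {
  id: ID!
  name: String! @id
  contents: [Content]
}
`;

// Dgraph derives these operation names from each type name:
// three queries (aggregate, get, query) and three mutations (add, update, delete).
function generatedOperations(typeName: string): string[] {
  return ["aggregate", "get", "query", "add", "update", "delete"].map(
    (prefix) => `${prefix}${typeName}`,
  );
}

// Hypothetical helper: builds the request body for the generated query<Type>
// operation with first/offset pagination.
function buildQueryRequest(
  typeName: string,
  fields: string[],
  first: number,
  offset = 0,
): { query: string; variables: { first: number; offset: number } } {
  const query = `query ($first: Int, $offset: Int) {
  query${typeName}(first: $first, offset: $offset) { ${fields.join(" ")} }
}`;
  return { query, variables: { first, offset } };
}

console.log(generatedOperations("Content").join(", "));
console.log(JSON.stringify(buildQueryRequest("Content", ["id", "title"], 10)));
```

Deploying exampleSchema would give you queryContent, getContent, addContent and so on without writing any resolver code. This is the time saving the article relies on.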

When I started following Dgraph, it did not support authorization or customized access to these operations. Six months later they introduced the @auth directive for the GraphQL schema. In my view that made it production-ready, and it let us handle a lot of cases.

Why Did Our Team Think Dgraph Was a Good Solution?

1- Time Restriction: First of all, we did not have much time. We had to do this migration in a few months, and in the meantime a big cyber attack happened and moved the deadline even earlier. So it was not possible to implement more than a hundred different routes with high test coverage. Using Dgraph's GraphQL API, on the other hand, could save us a lot of time.

2- Our Application Behavior: Our application had more reads than writes, and most of these reads were complicated queries joining more than 3 tables. The many joins and heavy queries signaled that we might benefit from a graph database.

Another important thing that motivated us was that Dgraph had introduced something called Custom DQL. DQL is the query language of the Dgraph database. This feature lets us implement custom, complicated queries (something like a MongoDB aggregation pipeline) and put them behind GraphQL APIs. Pagination was also supported without any problem. In our case, a lot of the existing backend APIs were just returning the result of a complicated MySQL query, so this feature was also saving us a lot of time.

3- Lambda Resolvers for Our Custom Logic: We knew that we would need to implement some custom logic. For this, Dgraph offered Lambda Resolvers. They are equivalent to lambda functions, with the difference that Lambda Resolvers are web workers.
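A Lambda resolver is a JavaScript function registered under a "Type.field" key. In the schema, the field is marked with Dgraph's @lambda directive, and the function receives the parent object. The sketch below assumes a hypothetical Content.durationLabel field computed from a hypothetical durationSeconds field. In a real Dgraph Lambda script the resolver map would be passed to self.addGraphQLResolvers. Here the resolver is called directly so the example runs on its own.

```typescript
// Input a field resolver receives (only the parts used here): `parent`
// holds the already-fetched fields of the parent object.
interface ResolverInput {
  parent: Record<string, unknown>;
  args?: Record<string, unknown>;
}

type FieldResolver = (input: ResolverInput) => unknown;

// Zero-pads a number to two digits.
const twoDigits = (n: number): string => (n < 10 ? "0" : "") + String(n);

// Hypothetical resolver map keyed by "Type.field".
// In a Dgraph Lambda script you would register it with:
//   self.addGraphQLResolvers(resolvers);
const resolvers: Record<string, FieldResolver> = {
  // Formats a hypothetical durationSeconds field as "mm:ss".
  "Content.durationLabel": ({ parent }) => {
    const total = Math.max(0, Math.floor(Number(parent.durationSeconds ?? 0)));
    return `${twoDigits(Math.floor(total / 60))}:${twoDigits(total % 60)}`;
  },
};

// Local stand-in for the Lambda runtime: look up a resolver and call it.
function resolveField(key: string, input: ResolverInput): unknown {
  const resolver = resolvers[key];
  if (!resolver) throw new Error(`no resolver registered for ${key}`);
  return resolver(input);
}

console.log(resolveField("Content.durationLabel", { parent: { durationSeconds: 125 } })); // 02:05
```

Keeping resolvers as plain functions of their input also makes the custom logic easy to unit-test outside Dgraph.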

Could Dgraph Database Replace the Backend?

The answer is yes and no! There are more options these days that work the same way as Dgraph. Neo4j picked up the same good idea (in my opinion, maybe from Dgraph or PostGraphile) and now offers a super nice GraphQL API for the database with a lot of features. It seems easier for graph databases to offer a GraphQL API, but Hasura offers one on top of relational databases.

The point here is that people are using APIs from these databases (or platforms) instead of implementing every single route in a backend. Each of the platforms I mentioned lets us add our own custom logic. Even if we are not satisfied with how the database handles custom logic, we can have a small backend on the side that works with the database and does some of the complicated things for us.

How We Did the Migration with a New Data Structure

We started with a proof of concept to see whether we could do this at all and how some of our complicated queries would perform.

Dgraph provides a tool for migrating SQL data, but we did not want to use it because we also had to change the data structure.

We were providing video content, and everything was organized in a category hierarchy.

Each of these categories had some subcategories, and each piece of content was attached to one of the leaves of this tree. We decided to go with the concept of tags instead of a tree (or both at the same time). We had a lot of tables and structures to assign different attributes, categories and subcategories to each piece of content. From a tag perspective, though, all of these attributes could be considered tags assigned to the content, and this drastically reduced the complexity.

In the old system, content pointed to a leaf such as PrimaryLeague. In the new system it had a tags field containing all of the content's parent categories:

```json
{
  "tags": [
    { "uid": "0x1", "name": "primaryLeague" },
    { "uid": "0x2", "name": "football" },
    { "uid": "0x3", "name": "international" }
  ]
}
```
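Converting a leaf of the old category tree into a flat list of tags can be sketched as a walk up the tree. The CategoryNode shape is an assumption about the legacy MySQL tables, which the article does not show: each row points at its parent through a parentId. The walk also guards against cycles, which a hand-maintained tree can accidentally contain.

```typescript
// Assumed shape of a legacy category row: every node points at its parent.
interface CategoryNode {
  id: number;
  name: string;
  parentId: number | null;
}

// Walks from a content's leaf category up to the root and returns the leaf
// plus all of its ancestors; these become the content's tags.
function leafToTagNames(leafId: number, nodes: Map<number, CategoryNode>): string[] {
  const names: string[] = [];
  const visited = new Set<number>();
  let current = nodes.get(leafId);
  while (current !== undefined) {
    if (visited.has(current.id)) {
      throw new Error(`cycle in category tree at id ${current.id}`);
    }
    visited.add(current.id);
    names.push(current.name);
    current = current.parentId === null ? undefined : nodes.get(current.parentId);
  }
  return names;
}

// Hypothetical hierarchy mirroring the tags example above.
const categories = new Map<number, CategoryNode>([
  [1, { id: 1, name: "international", parentId: null }],
  [2, { id: 2, name: "football", parentId: 1 }],
  [3, { id: 3, name: "primaryLeague", parentId: 2 }],
]);

console.log(leafToTagNames(3, categories).join(", ")); // primaryLeague, football, international
```

Once every ancestor is an explicit tag, "all football content" becomes a single tag lookup instead of a recursive walk over the tree.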

Database Converter and Challenges

To migrate the data, we used Node.js and Prisma. We chose Prisma for its database pull feature. We had the production database structure, and with the command npx prisma db pull I was able to convert all the tables into a Prisma schema.

After that, running npx prisma generate updated the Prisma Client, and we had access to all tables and their relations in TypeScript. So I was able to fetch the data, convert it to the new structure and insert it into the new database. For that we used Dgraph's official Node.js client library, which supports DQL queries and mutations.

One of the biggest challenges was connecting Dgraph nodes and creating the reverse edges. To connect graph nodes you need their UIDs, and the problem was that you cannot set a UID yourself: Dgraph generates it. So I had to create both nodes in one step, then fetch them, and finally run an update query to connect them. This was the main reason the database converter was not very fast.
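The two-phase approach described above can be sketched as two pure functions that only build Dgraph JSON mutation payloads. The legacyId predicate, the Content/Tag field names and the uid maps are assumptions for illustration. In the real converter, the rows came from Prisma Client, the payloads were sent with Dgraph's Node.js client, and the generated uids were fetched back between the two phases. None of those calls are shown here.

```typescript
// Assumed shape of a legacy content row, with its tag ids already resolved.
interface LegacyContent {
  id: number;
  title: string;
  tagIds: number[];
}

interface UidRef {
  uid: string;
}

// Phase 1: one node per row. Dgraph assigns the uid, so we keep the MySQL
// primary key (hypothetical "legacyId" predicate) to find the node again.
function contentNode(row: LegacyContent): Record<string, unknown> {
  return { "dgraph.type": "Content", legacyId: row.id, title: row.title };
}

// Phase 2: after fetching the generated uids by legacyId, connect
// content -> tags and also write the reverse edge tag -> contents.
function edgeMutations(
  rows: LegacyContent[],
  contentUids: Map<number, string>,
  tagUids: Map<number, string>,
): { contents: { uid: string; tags: UidRef[] }[]; tags: { uid: string; contents: UidRef[] }[] } {
  const contents: { uid: string; tags: UidRef[] }[] = [];
  const reverse = new Map<string, UidRef[]>();
  for (const row of rows) {
    const uid = contentUids.get(row.id);
    if (uid === undefined) throw new Error(`content ${row.id} has no uid yet`);
    const tagRefs = row.tagIds.map((tagId): UidRef => {
      const tagUid = tagUids.get(tagId);
      if (tagUid === undefined) throw new Error(`tag ${tagId} has no uid yet`);
      return { uid: tagUid };
    });
    contents.push({ uid, tags: tagRefs });
    for (const ref of tagRefs) {
      const list = reverse.get(ref.uid) ?? [];
      list.push({ uid });
      reverse.set(ref.uid, list);
    }
  }
  const tagNodes = Array.from(reverse.entries()).map(([uid, list]) => ({ uid, contents: list }));
  return { contents, tags: tagNodes };
}

const exampleRows: LegacyContent[] = [{ id: 10, title: "Cup final", tagIds: [1, 2] }];
console.log(JSON.stringify(contentNode(exampleRows[0])));
console.log(JSON.stringify(edgeMutations(exampleRows, new Map([[10, "0xa"]]), new Map([[1, "0x1"], [2, "0x2"]]))));
```

The extra round trip (create, fetch uids, connect) is exactly what made the converter slow. Batching many rows per phase is one way to reduce the number of round trips.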

The Problem of Caching in GraphQL

Caching a GraphQL API is usually not easy, since most caching layers key on the URL rather than on the details of the request. Parsing the request body was expensive for us because the number of concurrent requests was always high (most of the time more than 10K). So we came up with the idea of hashing the queries on the client side. For each common query, we hashed the query content and added the hash to the endpoint as a queryId, which made caching possible. We could also set the cache duration of each endpoint with the @cache directive in the Dgraph schema.
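Here is a sketch of the client side of that idea. SHA-256 and the whitespace normalization are assumptions, since the article does not say how queries were hashed. The variables parameter and the example endpoint URL are also hypothetical. Only the queryId name comes from the text.

```typescript
import { createHash } from "node:crypto";
import { URL } from "node:url";

// Hash of the query text. Whitespace is collapsed first (an assumption) so
// that the same query formatted differently gets the same id.
function queryId(query: string): string {
  const normalized = query.trim().replace(/\s+/g, " ");
  return createHash("sha256").update(normalized).digest("hex");
}

// Builds a URL that a URL-keyed cache (CDN or reverse proxy) can use as its
// key without parsing the GraphQL body. Variables are included as well,
// because the same query with different variables must not share an entry.
function cacheKeyUrl(endpoint: string, query: string, variables: Record<string, unknown>): string {
  const url = new URL(endpoint);
  url.searchParams.set("queryId", queryId(query));
  url.searchParams.set("variables", JSON.stringify(variables));
  return url.toString();
}

console.log(cacheKeyUrl("https://example.com/graphql", "query { queryContent(first: 10) { id } }", {}));
```

Hashing happens on the client, so the cache layer only compares URLs. This is the point of the approach: the expensive body parsing never happens on a cache hit.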

Security Issues in GraphQL

Failure to Appropriately Rate-limit

This is one of the queries that could cause problems:

```graphql
query Recurse {
  allUsers {
    posts {
      author {
        posts {
          author {
            posts {
              author {
                posts {
                  id
                }
              }
            }
          }
        }
      }
    }
  }
}
```

An attacker can nest this query much deeper, and as a result it puts a lot of pressure on the database.

To solve this issue, we put a minimal shield in front of Dgraph to protect against this kind of query. We limited the size of both the query and the response to small numbers, so a query that got through could not be very big. We also set the query timeout in Dgraph to 1 second, which means Dgraph drops queries that take longer than that.

Dgraph also has a rate-limiting feature that we used alongside these options. You can check the details here: https://dgraph.io/docs/deploy/cli-command-reference/#limit-superflag
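The article's shield was written in Go. To keep all examples in one language, the same idea is sketched here in TypeScript. The limit values are hypothetical. The depth check is an extra, commonly used technique that the article does not explicitly describe. It uses naive brace counting rather than a real GraphQL parser.

```typescript
// Hypothetical limits; the article does not state the values it used.
const MAX_QUERY_BYTES = 2048;
const MAX_DEPTH = 6;

// Naive nesting depth: counts "{" and "}" outside string literals.
// Comments and input-object literals are not treated specially; a real
// shield should use a proper GraphQL parser.
function selectionDepth(query: string): number {
  let depth = 0;
  let maxDepth = 0;
  let inString = false;
  for (let i = 0; i < query.length; i++) {
    const ch = query[i];
    if (ch === '"' && query[i - 1] !== "\\") inString = !inString;
    if (inString) continue;
    if (ch === "{") {
      depth++;
      maxDepth = Math.max(maxDepth, depth);
    } else if (ch === "}") {
      depth--;
    }
  }
  return maxDepth;
}

type ShieldResult = { ok: true } | { ok: false; reason: string };

// Rejects a query before it reaches Dgraph if it is too large or too deep.
function checkQuery(query: string): ShieldResult {
  const bytes = Buffer.byteLength(query, "utf8");
  if (bytes > MAX_QUERY_BYTES) return { ok: false, reason: `query too large: ${bytes} bytes` };
  const depth = selectionDepth(query);
  if (depth > MAX_DEPTH) return { ok: false, reason: `query too deep: ${depth} levels` };
  return { ok: true };
}

console.log(checkQuery("{ allUsers { id } }"));
```

The Recurse query above nests 9 levels of braces, so this check would reject it with the hypothetical limit of 6, before Dgraph ever sees it.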

Users Could Get All Documents from the Dgraph GraphQL API

Another issue was that the client could set the page size. Because we had limited the response size, no one could easily pull all the data, but they could still hurt database performance. So we had to handle this in our Go shield as well. We set the page size there, so clients cannot pass their own page size to the system.
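Fixing the page size in the shield can be sketched as overwriting the client's first variable. This is shown in TypeScript rather than the shield's Go. The fixed value and the assumption that paginated queries pass first as a variable are both hypothetical. A page size written directly into the query text would bypass that override, so the sketch also detects it.

```typescript
// Hypothetical fixed page size; the article does not give the real value.
const FIXED_PAGE_SIZE = 20;

// Overwrites any client-supplied `first` variable so clients cannot ask for
// huge pages. Assumes paginated queries take `first` as a variable.
function enforcePageSize(
  variables: Record<string, unknown> | undefined,
  pageSize: number = FIXED_PAGE_SIZE,
): Record<string, unknown> {
  return { ...(variables ?? {}), first: pageSize };
}

// Detects a literal page size in the query text (e.g. `first: 5000`),
// which the variable override above would not catch.
function hasInlinePageSize(query: string): boolean {
  return /\bfirst\s*:\s*\d/.test(query);
}

console.log(enforcePageSize({ first: 100000, offset: 40 })); // first is forced to 20
```

Rejecting inline page sizes keeps the rule simple: pagination is only possible through variables, and the shield owns the value.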

How We Built a Custom UI

One of our pains was the large number of UI changes in the application, both on the website and in the mobile apps. They were not major changes, mostly moving components around, and we already had some well-implemented components. We came up with a page/component system to handle these changes, and at the same time introduced custom pages for different channels and events. These custom pages usually took a lot of our frontend developers' time, so having this feature could save them a lot of it.

This is a simplified version to show you the idea of how this UI was implemented:

Our frontend developers and our mobile developer used the same component names, and the design system was set up so that both the web and mobile apps could read our page definitions and generate the UI. Support users were able to do all the customization with different kinds of components.
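The page/component idea can be sketched as a small data model that both clients interpret. All component names and fields below are hypothetical, because the article's own model was shown only in a diagram. The renderPlan helper illustrates one reasonable client-side rule: skip component types the client does not know yet, and render the rest in the configured order.

```typescript
// A component instance placed on a page by the support team.
interface PageComponent {
  type: string; // must match a component name shared by web and mobile
  order: number;
  props: Record<string, unknown>;
}

// A page, e.g. the home page or a custom page for a channel or event.
interface PageDefinition {
  slug: string;
  components: PageComponent[];
}

// Hypothetical component names known to this client.
const KNOWN_COMPONENTS = new Set<string>(["Hero", "Carousel", "LiveGrid", "Banner"]);

// Returns the component types to render, in order. Unknown types are
// skipped so older app versions do not break when a new component appears.
function renderPlan(page: PageDefinition): string[] {
  return page.components
    .filter((c) => KNOWN_COMPONENTS.has(c.type))
    .sort((a, b) => a.order - b.order)
    .map((c) => c.type);
}

const derbyPage: PageDefinition = {
  slug: "derby-day",
  components: [
    { type: "Carousel", order: 2, props: { tag: "football" } },
    { type: "Hero", order: 1, props: { title: "Derby day" } },
    { type: "PollWidget", order: 3, props: {} },
  ],
};

console.log(renderPlan(derbyPage).join(", ")); // Hero, Carousel
```

Because the page is just data, moving a component around means editing order values, not shipping a new app release.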

How We Used Elasticsearch Directly on Dgraph

At first, the new version used Dgraph fuzzy matching and later some complicated queries to get related content. It was not efficient enough, and we could not get related content when the search phrase was inaccurate or longer than one word. As a result, we decided to use Elasticsearch for our search functionality. One of my teammates implemented a small Elixir service that synced our Dgraph database with Elasticsearch (not the whole database, just 2 types).

Now we had to find a way to run our search queries. The first idea was to implement a minimal backend for these search queries. But then we would have to think about scaling that small service and would face all the old problems again. In the end, we exposed a single GraphQL API that requested data from Elasticsearch.

With Dgraph custom queries, you can make HTTP requests to other endpoints, parse the result and return it from a GraphQL resolver.
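The request that reaches Elasticsearch, and the reshaping of its response into a GraphQL type, can be sketched as follows. The index fields title and description are hypothetical. multi_match with fuzziness "AUTO" is a standard Elasticsearch query, used here because the article mentions inaccurate and multi-word search phrases. It is not necessarily the query the team used. Where the reshaping runs (a Lambda resolver or a thin proxy) is also an assumption.

```typescript
// Hypothetical search body: fuzzy multi-field match, title boosted x2.
function buildSearchBody(term: string, size = 10) {
  return {
    size,
    query: {
      multi_match: {
        query: term,
        fields: ["title^2", "description"],
        fuzziness: "AUTO",
      },
    },
  };
}

// Minimal typing of the parts of an Elasticsearch search response used here.
interface EsHit<T> {
  _id: string;
  _score: number;
  _source: T;
}

interface EsSearchResponse<T> {
  hits: { hits: EsHit<T>[] };
}

// Flattens hits into the list shape a GraphQL type expects, using the
// document id as the GraphQL id.
function toGraphQLResults<T extends object>(res: EsSearchResponse<T>): Array<T & { id: string }> {
  return res.hits.hits.map((hit) => ({ ...hit._source, id: hit._id }));
}

console.log(JSON.stringify(buildSearchBody("derby highlights")));
```

Fuzziness is what lets a misspelled word still match. The multi-field setup lets longer phrases match across both title and description.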

Check out this article on Dgraph for the details of how we connected Dgraph to Elasticsearch. Below you can see a schematic of how this is handled.

How Dgraph Is Connected to Elasticsearch

Regrets and Experiences

Not enough Documentation

Dgraph's documentation was not great at the time, and I had to learn all the tricks from the Dgraph forum.

Investors Fired the CEO (Founder) of Dgraph

This happened when we had almost finished our migration, and the whole Dgraph team resigned. It was super scary for me. Now Dgraph has another CEO, they are on a good path, and they are steadily improving features and fixing bugs.

Read more about this:

https://www.reddit.com/r/golang/comments/sfl7kj/quitting_dgraph_labs/

https://discuss.dgraph.io/t/going-out-of-business/16631

A Lot of Trial and Error to Optimize Queries Based on Data

After a while, some of our queries changed because of the shape of our data. Some types had more than a few million records, and we had to avoid regex and full-text search in queries as much as possible.

An example of a design change made to optimize a query:

At first we had Content and Tag, but querying contents with a specific tag was really expensive because we had to touch all contents. We made the relation two-way, and then we were able to get the contents from the tag (indexes are omitted for simplicity).

Two Different Approaches for Getting Contents That Have a Specific Tag

With the first design, getting the contents that have a specific tag means touching all contents (over a few million nodes). With the second approach, we go directly to the tag, and the contents are already attached to that node, so it is much faster than the first approach.

Two Different Queries for Getting Contents That Have a Specific Tag
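The difference between the two designs can be shown with an in-memory sketch. The data shapes are hypothetical, and in Dgraph the reverse edge would come from @hasInverse in the GraphQL schema (or @reverse in DQL) rather than a hand-built map. The first lookup visits every content node. The second reads only the tag's own neighbours.

```typescript
// Hypothetical content node holding only outgoing edges to tags.
interface ContentNode {
  uid: string;
  tagUids: string[];
}

// Design 1: only Content -> Tag edges. Finding the contents of a tag means
// visiting every content node: cost grows with the number of contents.
function contentsByScan(all: ContentNode[], tagUid: string): string[] {
  return all.filter((c) => c.tagUids.includes(tagUid)).map((c) => c.uid);
}

// Design 2: the edge is also stored in the other direction, so each tag
// already knows its contents (built here once, kept up to date by the DB).
function buildReverseIndex(all: ContentNode[]): Map<string, string[]> {
  const byTag = new Map<string, string[]>();
  for (const c of all) {
    for (const t of c.tagUids) {
      const list = byTag.get(t) ?? [];
      list.push(c.uid);
      byTag.set(t, list);
    }
  }
  return byTag;
}

// Lookup cost depends only on how many contents the tag has.
function contentsByReverseEdge(byTag: Map<string, string[]>, tagUid: string): string[] {
  return byTag.get(tagUid) ?? [];
}

const sampleContents: ContentNode[] = [
  { uid: "c1", tagUids: ["t1"] },
  { uid: "c2", tagUids: ["t1", "t2"] },
];
console.log(contentsByReverseEdge(buildReverseIndex(sampleContents), "t1").join(", ")); // c1, c2
```

Both functions return the same answer. The gain comes from how much of the graph each one has to touch, which matters once there are millions of content nodes.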

Some Dgraph Issues That Slowed Us Down

https://discuss.dgraph.io/t/dgraph-manual-reverse-index-is-gone-after-each-graphql-schema-upload/14829

https://discuss.dgraph.io/t/cannot-query-after-deleting-an-entity-that-is-used-in-a-union-type/15483

https://discuss.dgraph.io/t/bug-cannot-limit-number-of-results-using-auth-directive-to-prevent-malicious-queries/14828/5

In the end, kudos to the teammates who made this migration possible!


Level Up Coding
