Exploring Parallelism and Concurrency in Node.js: Child Processes, Multithreading, Cluster, and More

In this article, we delve into the world of parallelism and concurrency in Node.js, exploring the concepts of child processes, multithreading, and their practical implementations.

What you will read in this article

This article covers the Node.js child process and how to spawn one, then fork() and how it differs from the Unix fork. After that we look at clusters and worker threads, and we finish with a short discussion of parallelism versus concurrency in Node.js.

What is a Child Process?

A child process in computing is a process created by another process (the parent process). This technique pertains to multitasking operating systems and is sometimes called a subprocess or traditionally a subtask.

Each process can have multiple child processes but only one parent. A process that has no parent was most likely created directly by the kernel.

In Node.js specifically, each child process (whether created with spawn or fork) has its own memory, and each child that runs Node.js has its own V8 instance.

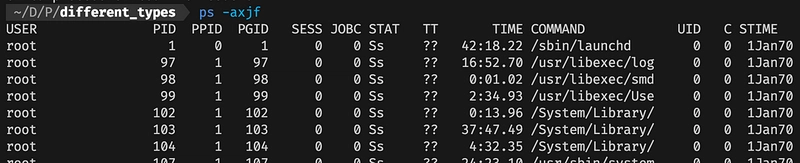

You can see the process tree using this command on Mac or Linux:

ps -axjf

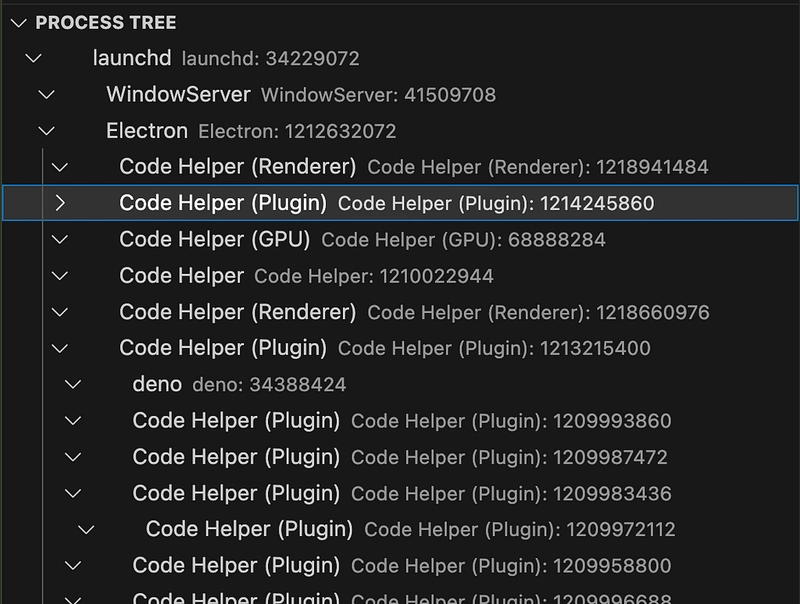

Here PID is the process ID and PPID is the parent process ID. If you want to see it visualized as a tree, you can use this VS Code extension or install pstree with brew install pstree.

For example, with the VS Code extension you can see the current process tree of your machine.
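From inside a Node.js program, the same two IDs are available on the process object. A minimal sketch (the variable name ids is only for illustration):

```javascript
// process.pid is this process's ID; process.ppid is the ID of the process
// that started it (usually the shell you launched node from).
const ids = { pid: process.pid, ppid: process.ppid };

console.log(`PID: ${ids.pid}, PPID: ${ids.ppid}`);
```

Looking up the printed PPID in the ps output should lead you to the parent of the node process.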

Spawn

Spawn is one of the ways to create a child process: the child_process.spawn() method spawns a new process using the given command.

Let's create a subprocess in our Node.js app and check it out. To keep the processes from terminating right away, I am going to use the Unix sleep command so we can see the parent and child processes in the console.

This is the code, using spawn:

const { spawn } = require('node:child_process');

// Run `sleep 10` so the child stays alive long enough to inspect it.
const sleep = spawn('sleep', ['10']);

console.log(`Current process PID: ${process.pid}`);
console.log(`Child process PID: ${sleep.pid}`);

sleep.stdout.on('data', (data) => {
  console.log(`stdout: ${data}`);
});

sleep.stderr.on('data', (data) => {
  console.error(`stderr: ${data}`);
});

// Fires once the child has exited and its stdio streams are closed.
sleep.on('close', (code) => {
  console.log(`child process exited with code ${code}`);
});

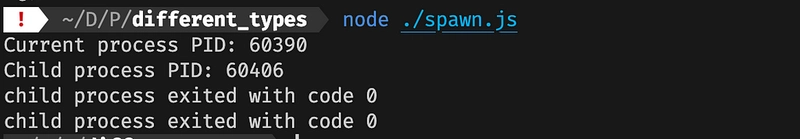

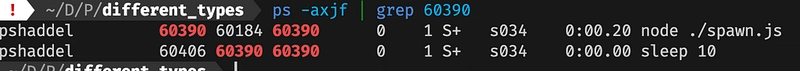

With spawn we have created a new process. Before the sleep command finishes, I log the PID and PPID in the console. The script logs the current process and the child process.

As we can see, the sleep 10 command has a parent, which is the process node ./spawn.js.
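The sleep command prints nothing, so the stdout handler in the example never fires. To see output flowing back from a child, here is a small sketch that wraps spawn in a promise. The helper name run is hypothetical, and the child is the node binary itself (process.execPath) so the sketch does not depend on a Unix command being installed:

```javascript
const { spawn } = require('node:child_process');

// Hypothetical helper: run a command and collect its stdout, stderr and exit code.
function run(command, args) {
  return new Promise((resolve, reject) => {
    const child = spawn(command, args);
    let stdout = '';
    let stderr = '';
    child.stdout.on('data', (chunk) => { stdout += chunk; });
    child.stderr.on('data', (chunk) => { stderr += chunk; });
    child.on('error', reject); // e.g. the command could not be started
    child.on('close', (code) => resolve({ code, stdout, stderr }));
  });
}

// process.execPath is the absolute path of the node binary that is running this script.
run(process.execPath, ['-e', 'console.log("hello from child")'])
  .then(({ code, stdout }) => {
    console.log(`exit code ${code}, stdout: ${stdout.trim()}`);
  });
```

Output arrives in chunks, which is why the handlers append to a string instead of assuming one 'data' event per line.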

Fork

According to the official docs, the fork() method is a special case of spawn() that is used specifically to spawn new Node.js processes. The child process returned by fork() has an additional built-in communication channel that allows messages to be passed back and forth between the parent and child. The forked Node.js process is independent of the parent and has its own V8 instance.

Let’s see a simple example of a parent and forked child:

// Parent.js
const { fork } = require('node:child_process');

// Start the child script with an IPC channel back to this process.
const forkSort = fork('./fork_sort');

const numbers = [1, 5, 2, 3, 4];
forkSort.send(numbers);

forkSort.on('message', (sortedNumbers) => {
  console.log(`Sorted numbers: ${sortedNumbers}`);
  // Close the IPC channel so the child can exit and the 'close' handler runs.
  // (Calling process.exit(0) here would end the parent before that log.)
  forkSort.disconnect();
});

forkSort.on('close', (code) => {
  console.log(`Child process exited with code ${code}`);
});

// Child.js (the script loaded by fork('./fork_sort'))
process.on('message', (msg) => {
  console.log('CHILD: message received from parent process', msg);
  const sortedNumbers = msg.sort((a, b) => Number(a) - Number(b));
  process.send(sortedNumbers);
});

Running parent.js creates a forked process, does the sorting in child.js, and sends the result back to the parent.

Difference between UNIX Fork and Nodejs Child Process Fork

In UNIX, forking a process gives the child a copy of the parent's memory. The two processes share the same physical pages as long as they only read from them, and a page is copied only when one of them writes to it. This technique is called copy-on-write.

Here comes the big difference: the Node.js fork is not a clone of the parent process. It is like spawn (fresh memory) with a built-in communication channel!
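One way to observe this is to set a value in the parent and ask a forked child whether it can see it. The sketch below is illustrative only: the names parentOnlyValue, childSource and askChild are hypothetical, and the child script is written to a temporary file just to keep everything in one listing.

```javascript
const { fork } = require('node:child_process');
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');

// A value that exists only in the parent's memory.
globalThis.parentOnlyValue = 'set in parent';

// Hypothetical child script: reports the type of the parent's global as it sees it.
const childSource = `
  process.on('message', () => {
    process.send({ seenInChild: typeof globalThis.parentOnlyValue });
  });
`;

function askChild() {
  const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'fork-demo-'));
  const file = path.join(dir, 'child.js');
  fs.writeFileSync(file, childSource);
  return new Promise((resolve, reject) => {
    const child = fork(file);
    child.on('error', reject);
    child.on('message', (reply) => {
      child.disconnect();                               // lets the child exit
      fs.rmSync(dir, { recursive: true, force: true }); // remove the temp script
      resolve(reply);
    });
    child.send('report');
  });
}

askChild().then((reply) => console.log(reply)); // { seenInChild: 'undefined' }
```

With a Unix fork() the child would start with a copy of that global. Here the child starts from a fresh Node.js runtime, so the only way to share the value is to send it over the channel.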

Cluster

Using Cluster, we can run multiple instances of Node.js that can distribute workloads among their application threads.

The important thing about cluster is that it lets us easily create child processes that share the same port.

Here is an example (from the official docs) that uses cluster to create multiple child processes that all listen on the same port.

import cluster from 'node:cluster';
import http from 'node:http';
import { availableParallelism } from 'node:os';
import process from 'node:process';

const numCPUs = availableParallelism();

if (cluster.isPrimary) {
  console.log(`Primary ${process.pid} is running`);

  // Fork workers.
  for (let i = 0; i < numCPUs; i++) {
    cluster.fork();
  }

  cluster.on('exit', (worker, code, signal) => {
    console.log(`worker ${worker.process.pid} died`);
  });
} else {
  // Workers can share any TCP connection
  // In this case it is an HTTP server
  http.createServer((req, res) => {
    res.writeHead(200);
    res.end('hello world\n');
  }).listen(8000);

  console.log(`Worker ${process.pid} started`);
}

As you can see, the cluster module has created several child processes using cluster.fork().

The cluster module supports two ways of distributing incoming connections. In the first one (the default on all platforms except Windows), the primary process listens on the port, accepts new connections, and distributes them to the workers in a round-robin fashion.

In the second approach, the primary process creates the listen socket and sends it to interested workers, which then accept incoming connections directly. (In practice, distribution with this method tends to be very unbalanced due to operating system scheduler vagaries.)
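The choice between these two approaches is exposed as cluster.schedulingPolicy. A minimal sketch of selecting round-robin explicitly in the primary process:

```javascript
const cluster = require('node:cluster');

// Must be assigned before the first cluster.fork() call; the setting is
// effectively frozen once the first worker has been spawned.
// cluster.SCHED_RR   -> the primary accepts connections and hands them out round-robin
// cluster.SCHED_NONE -> leave the distribution to the operating system
cluster.schedulingPolicy = cluster.SCHED_RR;

console.log(cluster.schedulingPolicy === cluster.SCHED_RR); // true
```

The same setting can also be supplied through the NODE_CLUSTER_SCHED_POLICY environment variable, with the values rr or none.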

Routing with the cluster module: Node.js does not provide routing logic, so out of the box you cannot assign specific incoming requests to specific workers based on custom logic. It is therefore important to design an application so that it does not rely too heavily on in-memory data objects for things like sessions and login.

Worker Threads

Threads are smaller units of execution within a process. Multithreading is a technique used to achieve parallelism by splitting a process into multiple threads that can execute concurrently.

The worker_threads module in Node.js does not create new processes; instead, it provides a way to create and manage multiple threads within the same Node.js process. This allows you to perform parallel computation without spawning separate processes.

Three important things about worker threads:

- Workers (threads) are useful for performing CPU-intensive JavaScript operations.

- They do not help much with I/O-intensive work.

- The Node.js built-in asynchronous I/O operations are more efficient than Workers can be.

Let’s see a simple example before getting into the details.

We want to pass our sorting operation to a worker thread. To do that we can use two files:

1- A function that creates the worker and gets the computation result back.

2- A worker thread that calls the sort method on the array.

Here is the first file. It creates a new worker thread and resolves the promise when it gets the result back from the worker thread.

const { Worker } = require('node:worker_threads');

// Sort an array in a worker thread and resolve with the sorted result.
function sortAsyncUsingWorkerThreads(array) {
  return new Promise((resolve, reject) => {
    // A relative path is resolved against the current working directory.
    const worker = new Worker('./sort_work_thread.js', {
      workerData: array,
    });
    worker.on('message', resolve);
    worker.on('error', reject);
    worker.on('exit', (code) => {
      if (code !== 0) {
        reject(new Error(`Worker stopped with exit code ${code}`));
      }
    });
  });
}

const numbers = [1, 5, 2, 3, 4];

const main = async () => {
  const sortedNumbers = await sortAsyncUsingWorkerThreads(numbers);
  console.log(`Sorted numbers: ${sortedNumbers}`);
};

main().catch(console.error);

Here is the implementation of this worker file:

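// sort_work_thread.js
const { parentPort, workerData } = require('node:worker_threads');

// workerData is this thread's copy of the array passed by the parent.
const array = workerData;

// Use a numeric comparator: the default sort() compares elements as strings.
parentPort.postMessage(array.sort((a, b) => a - b));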

By just calling the postMessage method we can pass the data to the parent.
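It helps to know that postMessage sends a copy of the data (a structured clone), not a reference to the original object. The sketch below shows this with a MessageChannel, whose ports are the same MessagePort type as parentPort. The helper name roundTrip is hypothetical.

```javascript
const { MessageChannel } = require('node:worker_threads');

// Hypothetical helper: send a value through a message port and return what arrives.
function roundTrip(value) {
  const { port1, port2 } = new MessageChannel();
  return new Promise((resolve) => {
    port2.on('message', (received) => {
      port1.close(); // closing one side closes the channel
      resolve(received);
    });
    port1.postMessage(value);
  });
}

const original = { numbers: [3, 1, 2] };

roundTrip(original).then((copy) => {
  console.log(copy);              // { numbers: [ 3, 1, 2 ] }
  console.log(copy === original); // false: the receiver gets a clone
});
```

Because of the copy, sorting the array in the worker does not change the array held by the parent. The parent only sees the sorted version that comes back as a message.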

In this example, every call to the function creates a new thread, which is not a good idea: creating a thread has a cost, and we do not have an unlimited number of worker threads available. In practice a pool of reusable workers is the usual approach. More generally, working with threads can be tricky. Take a look at this resource from Stanford University:

https://web.stanford.edu/~ouster/cgi-bin/papers/threads.pdf
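One common way to avoid creating a thread per call is to keep a small, fixed set of workers and queue the jobs. What follows is a rough sketch of that idea, not a production pool: the class name SortPool and its methods are hypothetical, the worker body is passed as a string with eval: true only to keep the listing in one file, and a crashed worker is not replaced.

```javascript
const { Worker } = require('node:worker_threads');

// Worker body as a string so the sketch fits in one file (loaded with eval: true).
const workerSource = `
  const { parentPort } = require('node:worker_threads');
  parentPort.on('message', (numbers) => {
    parentPort.postMessage(numbers.sort((a, b) => a - b));
  });
`;

// Hypothetical minimal pool: a fixed set of workers plus a FIFO queue of jobs.
class SortPool {
  constructor(size) {
    this.queue = [];
    this.idle = [];
    this.workers = [];
    for (let i = 0; i < size; i++) {
      const worker = new Worker(workerSource, { eval: true });
      this.workers.push(worker);
      this.idle.push(worker);
    }
  }

  // Queue a job and resolve with the sorted array once a worker has handled it.
  sort(numbers) {
    return new Promise((resolve, reject) => {
      this.queue.push({ numbers, resolve, reject });
      this.dispatch();
    });
  }

  // Hand queued jobs to idle workers until one of the two runs out.
  dispatch() {
    while (this.idle.length > 0 && this.queue.length > 0) {
      const worker = this.idle.pop();
      const job = this.queue.shift();
      const onMessage = (sorted) => {
        worker.off('error', onError);
        this.idle.push(worker); // the worker is free again
        job.resolve(sorted);
        this.dispatch();        // pick up the next queued job, if any
      };
      const onError = (err) => {
        worker.off('message', onMessage);
        job.reject(err);        // this sketch does not replace a crashed worker
      };
      worker.once('message', onMessage);
      worker.once('error', onError);
      worker.postMessage(job.numbers);
    }
  }

  // Stop all workers so the process can exit.
  close() {
    return Promise.all(this.workers.map((worker) => worker.terminate()));
  }
}

const pool = new SortPool(2);
Promise.all([pool.sort([3, 1, 2]), pool.sort([10, 9]), pool.sort([5, 4])])
  .then((results) => console.log(results)) // [ [ 1, 2, 3 ], [ 9, 10 ], [ 4, 5 ] ]
  .finally(() => pool.close());
```

Three jobs are submitted to two workers here, so the third waits in the queue until a worker becomes idle. The number of threads stays fixed no matter how many times sort is called.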

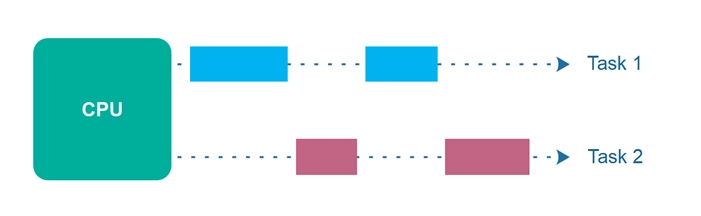

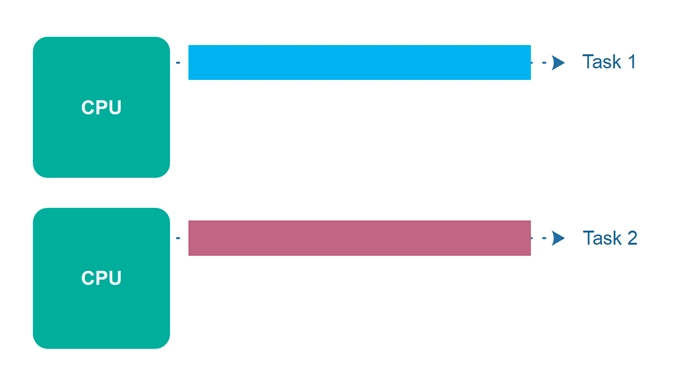

Parallelism vs Concurrency in Node.js

Concurrency in Node.js

Concurrency in Node.js refers to the ability of the system to make progress on multiple tasks during overlapping periods of time, without necessarily running them at the same instant. Node.js does this with the event loop: while one request is waiting on non-blocking I/O, the loop is free to handle other requests. The async/await pattern that we use daily as Node.js developers is a way to achieve this concurrency.
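A small sketch can make the interleaving visible. Two tasks are started one after the other on the same thread, each waits on a timer (standing in for non-blocking I/O), and the order of the log entries shows that neither blocks the other. The helper names delay, task and runBoth are hypothetical.

```javascript
// Hypothetical helper: a promise that resolves after `ms` milliseconds.
const delay = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function task(name, ms, events) {
  events.push(`${name} start`);
  await delay(ms); // yields to the event loop; no thread sits blocked while waiting
  events.push(`${name} done`);
}

async function runBoth() {
  const events = [];
  // Both tasks are started before either one finishes, on a single thread.
  await Promise.all([task('A', 50, events), task('B', 10, events)]);
  return events;
}

runBoth().then((log) => console.log(log));
// [ 'A start', 'B start', 'B done', 'A done' ]
```

Task B finishes first even though it started second, because A was only waiting. This is concurrency without parallelism: at any instant only one piece of JavaScript is running.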

Parallelism in Node.js

Parallelism refers to the simultaneous execution of multiple tasks or processes to achieve better performance by utilizing multiple CPU cores. Node.js runs your JavaScript on a single main thread by default. However, Node.js can still achieve parallelism through mechanisms such as:

- Child Processes: Node.js can spawn or fork multiple child processes to take advantage of multiple CPU cores. These processes communicate through inter-process communication mechanisms.

- Worker Threads: The worker_threads module in Node.js allows developers to create and manage multiple threads within a single Node.js process. Each thread runs independently with its own V8 instance and event loop, and threads can share memory explicitly (for example through a SharedArrayBuffer), which makes them useful for CPU-bound tasks.
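To illustrate the shared-memory point from the list above, here is a sketch in which several worker threads increment one counter stored in a SharedArrayBuffer. The function name countInParallel is hypothetical, and the worker body is passed as a string with eval: true only to keep the listing in one file.

```javascript
const { Worker } = require('node:worker_threads');

// Worker body as a string (eval: true) so the sketch stays in one file.
const workerSource = `
  const { workerData } = require('node:worker_threads');
  const counter = new Int32Array(workerData.shared);
  for (let i = 0; i < workerData.increments; i++) {
    Atomics.add(counter, 0, 1); // atomic, so concurrent increments are not lost
  }
`;

function countInParallel(threads, increments) {
  const shared = new SharedArrayBuffer(4); // room for one 32-bit integer
  const counter = new Int32Array(shared);
  const jobs = [];
  for (let i = 0; i < threads; i++) {
    jobs.push(new Promise((resolve, reject) => {
      const worker = new Worker(workerSource, {
        eval: true,
        workerData: { shared, increments }, // the buffer is shared, not copied
      });
      worker.on('error', reject);
      worker.on('exit', resolve);
    }));
  }
  // Read the final value once every worker has exited.
  return Promise.all(jobs).then(() => Atomics.load(counter, 0));
}

countInParallel(4, 10000).then((total) => console.log(total)); // 40000
```

Ordinary objects passed to a worker are copied, so shared state has to live in a SharedArrayBuffer like this one. Atomics.add is used because a plain counter[0]++ from several threads could lose updates.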

Conclusion

This was a quick review of some related topics. Spawn gives you a fresh, isolated process. Fork gives you a new Node.js process with a built-in communication channel. Cluster uses fork to distribute a workload across processes that can share a port. Worker threads are good for CPU-intensive tasks inside a single process, with the option of sharing memory.

References

- Cluster | Node.js v20.5.0 Documentation

- Performance and reliability when using multiple Docker containers VS standard Node cluster

- Node.js clustering vs multiple Docker containers

- Difference between processes and threads (YouTube)

- Building a non-blocking multi-processes Web Server (Node JS fork example) (YouTube)

- https://web.stanford.edu/~ouster/cgi-bin/papers/threads.pdf
