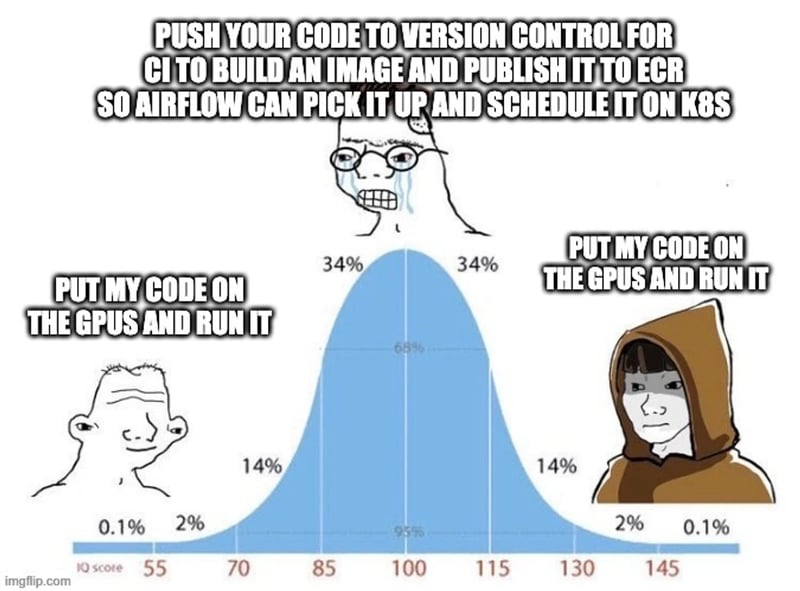

The simple act of “send my code to my cluster and run it” is surprisingly challenging for most AI teams. Numerous AI deployment systems have been built around model checkpoints or containerized pipelines, but if you just have some Python training functions to run, you’re out of luck. Doing this collaboratively on a shared fleet of compute is even harder, especially if boxes in the pool are coming up and down all the time.

“Obviously, the ideal case here is some kind of orchestration layer that is specially built to send jobs to different servers. I believe many big AI-first companies generally have some sort of infrastructure in place to improve the quality of life for AI researchers. However, building this type of sophisticated & fancy ML training infrastructure is not really possible for a lean and new startup at the beginning.”

– Yi Tay, Training great LLMs entirely from ground zero in the wilderness as a startup

The solutions to this problem generally fall into two categories:

- Use only GPUs from a single provider and use their custom interface, like a notebook client, integrated IDE, or deployment SDK. Tying your stack to the infrastructure is risky, as GPUs are scarce and you never know where you’ll need to find scale. Most teams I speak with bring up new compute wherever their credits, discounts, or quota allow, and end up with a mix of GPUs cobbled together from different providers. Tools embedded within infrastructure also tend to carry opinions about how AI should be done, to avoid being seen as a mere compute wrapper, and these opinions often include a deliberate code migration between research and production.

- Allocate a much bigger pool of GPUs than you need, string them together into a Kubernetes cluster, and then build special DevOps tooling to build and deploy containers to the cluster from CI or to allocate sub-clusters (e.g. with Ray) out of it on demand. Putting aside the lift to build a platform like this, keeping a generous pool of GPUs warm is extremely expensive and, by definition, resource-inefficient. Teams of any size, even well-resourced ones, want to maximize the juice they get out of their compute. Further, Kubernetes clusters do not work well across regions or providers, so here too all the compute must be centralized, and you’re betting on being able to expand in place.

“In the current world, having multiple clusters of accelerator pools seems inevitable unless one specially builds for a large number in a single location. More specifically, GPU supply (or lack thereof) also naturally resulted in this pattern of cluster procurement in which things are fragmented by nature.”

– Yi Tay

At Runhouse, we don’t think this is an AI problem, but a DevOps problem. For too long, the tools to deploy and execute code on remote compute have been bundled with opinions about how AI should be done or about the underlying infrastructure.

“Send my code to my cluster, that’s it”

With Runhouse, it’s easy to send code to your compute no matter where it lives, and efficiently utilize your resources across multiple callers scheduling jobs (e.g. researchers, pipelines, inference services, etc). We believe less is more when it comes to AI DevOps, so we don’t make any assumptions about the structure of your code or the infrastructure to which you’re sending it.

Within your existing Python code (no DSLs, YAML, or magic CLI incantations), just tell us:

- The Python function or class you want to run,

- in which environment,

- on which compute,

and we do the rest. It looks like this:

```python
import runhouse as rh

my_remote_callable = rh.function(my_function).to(my_cluster, env=my_env)
res = my_remote_callable(my_arg, my=kwarg)
```

Let’s break that down in more detail, starting with the compute.

Specifying Compute

To work with your compute, all we need is for you to point us to where it is and how to access it. We’ll start a lightweight HTTP server on the cluster to communicate with it, and use Ray for scheduling, inter-process communication, and parallelism. You don’t need to know anything about Ray or write any Ray-specific code; Runhouse sets it up and manages it for you. If you already use Ray, we’ll use your existing Ray instance rather than start a new one. Pointing us to the compute is easy:

It can be a single server or the head node of an existing Ray cluster:

```python
cluster = rh.cluster(
    name="rh-cluster",
    host="example-cluster",  # hostname or IP address
    ssh_creds={"ssh_user": "ubuntu", "ssh_private_key": "~/.ssh/id_rsa"},
)
```

You can also omit `ssh_creds` if `host` is a host alias defined in your `~/.ssh/config` file.
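When `ssh_creds` is omitted, the connection details come from your SSH configuration instead. As a rough illustration of what resolving a `Host` alias involves, here is a simplified parser over `~/.ssh/config`-style text. It is a sketch under stated assumptions, not how Runhouse or OpenSSH implement the lookup: it ignores `Match` blocks, `Include`, quoting, and token expansion, and it uses `fnmatch` for `*`/`?` wildcards. The `resolve_ssh_host` helper is hypothetical.

```python
import fnmatch
import re
from typing import Dict

# "Key value" or "Key=value", optionally with spaces around "=".
_OPTION = re.compile(r"^(\w+)\s*(?:=|\s)\s*(.*)$")


def resolve_ssh_host(alias: str, config_text: str) -> Dict[str, str]:
    """Collect the options that apply to `alias`; as in OpenSSH, the first value wins."""
    resolved: Dict[str, str] = {}
    applies = True  # options before the first Host line apply to every host
    for raw_line in config_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        match = _OPTION.match(line)
        if not match:
            continue
        key, value = match.group(1).lower(), match.group(2).strip()
        if key == "host":
            patterns = value.split()
            excluded = any(fnmatch.fnmatchcase(alias, p[1:]) for p in patterns if p.startswith("!"))
            included = any(fnmatch.fnmatchcase(alias, p) for p in patterns if not p.startswith("!"))
            applies = included and not excluded
        elif applies and key not in resolved:
            resolved[key] = value
    resolved.setdefault("hostname", alias)  # without HostName, the alias itself is dialed
    return resolved


if __name__ == "__main__":
    example = """
Host example-cluster
    HostName 192.0.2.10
    User ubuntu
    IdentityFile ~/.ssh/id_rsa
"""
    print(resolve_ssh_host("example-cluster", example))
```

The "first value wins" rule is why specific `Host` entries conventionally come before a catch-all `Host *` block.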

It can be a list of servers, which we’ll tie together into a Ray cluster:

```python
cluster = rh.cluster(
    name="rh-cluster",
    host=["192.27.147.223", "192.139.95.104", "192.222.209.190", "192.153.102.187"],
    ssh_creds={"ssh_user": "ubuntu", "ssh_private_key": "~/.ssh/id_rsa"},
)
```

Anyone who’s used Ray knows that this is a pretty nice little service.

It can be a fresh cloud box or Ray cluster that we’ll bring up for you via SkyPilot:

```python
cluster = rh.ondemand_cluster(
    name="rh-cluster",
    instance_type="A10G:4+",
    provider="aws",
).up_if_not()
```

You can use arbitrary instance types with as many nodes as you like. Note that SkyPilot simply uses the cloud SDKs and your local cloud credentials here, such as your `~/.aws/config` file. Everything runs locally and requires no prior setup, though it helps to run `sky check` to confirm that your local cloud SDKs and credentials are set up correctly.

It can even be a pod in Kubernetes (also via SkyPilot):

```python
cluster = rh.ondemand_cluster(
    name="rh-cluster",
    instance_type="A10G:4+",
    provider="kubernetes",
).up_if_not()
```

We even support AWS SageMaker and local execution, and we keep adding new compute types (e.g. serverless).
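To make the “lightweight HTTP server” idea from the start of this section more concrete, here is a minimal standard-library sketch of the general pattern: a server that imports a function named as `module:qualname` and calls it with JSON-encoded arguments. It is not Runhouse’s actual server or wire format; the `/call` route, the payload shape, and the `resolve`/`serve`/`call_remote` helpers are assumptions made for this example. It has no authentication or error handling, so it should never be exposed on a real network.

```python
import importlib
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def resolve(path: str):
    """Import an object named as "module:qualname" (a format assumed for this sketch)."""
    module_name, _, qualname = path.partition(":")
    obj = importlib.import_module(module_name)
    for attr in qualname.split("."):
        obj = getattr(obj, attr)
    return obj


class CallHandler(BaseHTTPRequestHandler):
    """Handles POST /call with a JSON body {"fn": ..., "args": [...], "kwargs": {...}}."""

    def do_POST(self):
        if self.path != "/call":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length))
        fn = resolve(payload["fn"])
        # No auth, sandboxing, or error handling: this is an illustration only.
        result = fn(*payload.get("args", []), **payload.get("kwargs", {}))
        body = json.dumps({"result": result}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging for the example


def serve(host: str = "127.0.0.1", port: int = 0) -> ThreadingHTTPServer:
    """Run the server on a background thread; port 0 lets the OS pick a free port."""
    server = ThreadingHTTPServer((host, port), CallHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


# Talk to the server directly, ignoring any proxy settings in the environment.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def call_remote(base_url: str, fn: str, *args, **kwargs):
    """Client side: describe the call as JSON, POST it, and decode the result."""
    data = json.dumps({"fn": fn, "args": list(args), "kwargs": kwargs}).encode()
    request = urllib.request.Request(
        f"{base_url}/call", data=data, headers={"Content-Type": "application/json"}
    )
    with _opener.open(request) as response:
        return json.loads(response.read())["result"]


if __name__ == "__main__":
    server = serve()
    url = f"http://127.0.0.1:{server.server_address[1]}"
    print(call_remote(url, "math:sqrt", 16))  # 4.0
    server.shutdown()
```

Because the caller only sends a name and arguments, the function itself must already be importable on the server side, which is the same requirement described for Runhouse functions later in this post.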

Specifying the Environment

Sharing a cluster among your team means allowing various jobs to coexist with different dependencies, secrets, resources, and setup instructions. Runhouse “envs” are a succinct but powerful mechanism that serves two purposes: they specify the setup a job needs in order to run, including local packages, directories, or secrets to sync over, and they act as a native unit of parallelism and scheduling.

Each env runs in its own process, so you can put many jobs into a single env (multiple calls into the env are multithreaded by default, via Ray), replicate a job across several envs to parallelize it, or even have an application in one env call into an application in another when the two have different dependencies.
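Process isolation is the key property here: a crash in one process cannot take down another. As a rough sketch of that property only, assuming nothing about how Runhouse actually launches env processes (it uses Ray for this), the hypothetical `run_in_env` helper below runs each job in a fresh Python interpreter with its own environment variables, and reports failures as exit codes rather than as exceptions in the caller.

```python
import os
import subprocess
import sys
from typing import Dict, Optional, Tuple


def run_in_env(code: str, env_vars: Optional[Dict[str, str]] = None,
               timeout: float = 60.0) -> Tuple[int, str]:
    """Run `code` in a separate Python process with extra environment variables.

    Returns (exit_code, stdout). A crash in the child never raises in the caller;
    it only shows up as a non-zero exit code.
    """
    child_env = {**os.environ, **(env_vars or {})}
    proc = subprocess.run(
        [sys.executable, "-c", code],
        env=child_env,
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    return proc.returncode, proc.stdout


if __name__ == "__main__":
    # Two hypothetical "envs" with different variables; the second one crashes.
    print(run_in_env("import os; print(os.environ['RUN_NAME'])", {"RUN_NAME": "env_a"}))  # (0, 'env_a\n')
    exit_code, _ = run_in_env("raise RuntimeError('training diverged')", {"RUN_NAME": "env_b"})
    print(exit_code != 0)  # True: the failure stayed inside its own process
    print("caller still running")
```

Starting a fresh interpreter per call is far heavier than a long-lived env process; the sketch trades efficiency for the simplest possible demonstration of isolation.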

An env can be a simple list of packages to install, or include detailed settings, data sync instructions, and installation steps. We won’t go into the full range of capabilities here, but to give you a preview:

```python
env = rh.env(
    name="fn_env",
    reqs=["numpy", "torch", "~/my/local/data", my_custom_package],
    working_dir="./",  # current git root, or any other local path
    env_vars={"USER": "*****"},
    secrets=["aws", "openai", my_custom_secret],
    setup_cmds=["mkdir -p ~/results"],
    compute={"CPU": 0.5},
)
```

We also support conda_envs for dependency isolation or alternate Python versions.

Sending and calling your Python code remotely

You can dispatch code to remote compute from anywhere in Python, be it inside a notebook, orchestrator node, script, or another Runhouse function or class (nesting can have big design advantages and is encouraged).

```python
from torch.cuda import is_available

is_cuda_available_on_cluster = rh.function(is_available).to(cluster, env=env)
is_cuda_available_on_cluster()
```

You can pass arbitrary inputs into the function and return arbitrary outputs. Generators, async, interactive printing and logging, and detached execution are all natively supported. All we need is for the function or class to be importable, so we can import it on the cluster inside its env’s process.
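Since the function or class must be importable, it helps to see what that means mechanically. A minimal sketch, assuming a hypothetical `module:qualname` addressing scheme (not necessarily how Runhouse identifies callables), that checks whether an object can be recovered by importing its module and walking its qualified name:

```python
import importlib


def import_path(obj) -> str:
    """Describe a function or class as "module:qualname" (a format assumed for this sketch)."""
    return f"{obj.__module__}:{obj.__qualname__}"


def is_importable(obj) -> bool:
    """Return True if `obj` can be found again by importing its module and walking its qualname.

    Lambdas and functions defined inside other functions fail, since their qualnames
    contain "<lambda>" or "<locals>". Objects defined in a script's __main__ pass this
    local check, but a remote process would have a different __main__, so a real system
    needs extra handling for them.
    """
    module_name, _, qualname = import_path(obj).partition(":")
    if "<" in qualname:
        return False
    try:
        target = importlib.import_module(module_name)
        for attr in qualname.split("."):
            target = getattr(target, attr)
    except (ImportError, AttributeError):
        return False
    return target is obj


if __name__ == "__main__":
    import json

    print(import_path(json.dumps), is_importable(json.dumps))  # json:dumps True
    print(is_importable(lambda x: x))  # False
```

This is also why defining remote functions and classes at module level, in a package the cluster can install, tends to work best.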

Often you may find that keeping state on the cluster gives your application persistence, reusability, or performance advantages, such as pinning objects to GPU memory. In that case, a class (wrapped by a Runhouse Module) is ideal:

```python
import runhouse as rh

# my_lib is your own package; my_model_load_fn is a placeholder for your model loader.
from my_lib import my_loss_fn, my_model_load_fn


class MyTraining:
    def __init__(self, model_load_fn, device="cuda"):
        self._model = model_load_fn().to(device)
        self._optimizer = self._initialize_optimizer()

    def _initialize_optimizer(self):
        ...

    def train_epoch(self, trn_batches, tst_batches):
        ...
        return my_loss_fn(self.predict(tst_batches["x"]), tst_batches["y"])

    def save_checkpoint(self, path):
        ...

    def predict(self, batches):
        ...


if __name__ == "__main__":
    gpu = rh.ondemand_cluster(name="rh-a10x", instance_type="A10G:1", provider="cheapest").up_if_not()
    trn_env = rh.env(reqs=["torch", "~/my_lib"], name="train_env", working_dir="./")

    MyTrainingA10 = rh.module(MyTraining).to(gpu, env=trn_env)
    load_model = rh.function(my_model_load_fn).to(gpu, env=trn_env.name)
    my_training_app = MyTrainingA10(load_model, name="my_training")  # Only loads the model remotely!

    # epochs, my_trn_set, my_tst_set, and path are placeholders for your own values.
    for epoch in range(epochs):
        test_loss = my_training_app.train_epoch(my_trn_set, my_tst_set)
        print(test_loss)

    my_training_app.save_checkpoint(path)
```

I can also get the existing training instance from the cluster at the start of my script, so I can interactively iterate and debug it without re-initializing the training. The statefulness of this training on the cluster means that I or my collaborators can continually access and monitor it over time, and the process isolation of my env means that if my training fails, it doesn’t take down other jobs running on the cluster.
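Reusing an existing instance instead of re-initializing it boils down to a get-or-create lookup keyed by name inside a long-lived process. Here is a minimal in-process sketch of that pattern; the `InstanceRegistry` class and the toy `Counter` are hypothetical stand-ins, not the bookkeeping the cluster’s server actually does.

```python
import threading
from typing import Any, Callable, Dict


class InstanceRegistry:
    """Keeps named, stateful objects alive so later callers can reuse them."""

    def __init__(self) -> None:
        self._instances: Dict[str, Any] = {}
        self._lock = threading.Lock()  # concurrent callers must not double-initialize

    def get_or_create(self, name: str, factory: Callable[[], Any]) -> Any:
        with self._lock:
            if name not in self._instances:
                self._instances[name] = factory()  # expensive init happens only once
            return self._instances[name]

    def get(self, name: str) -> Any:
        return self._instances[name]  # KeyError if nobody created it yet


class Counter:
    """Toy stand-in for a training object whose state should survive between calls."""

    def __init__(self) -> None:
        self.epochs_run = 0

    def train_epoch(self) -> int:
        self.epochs_run += 1
        return self.epochs_run


if __name__ == "__main__":
    registry = InstanceRegistry()
    first = registry.get_or_create("my_training", Counter)
    first.train_epoch()
    first.train_epoch()
    # A later script (or a collaborator) picks up the same object and continues.
    print(registry.get("my_training").train_epoch())  # 3
```

Holding the lock across `factory()` keeps the example simple; a real server would likely avoid blocking lookups of other names while one slow model loads.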

Collaboration - Bringing it All Together

Efficient resource utilization is all about sharing. Runhouse Den is a complementary service to Runhouse OSS that allows you to share, view, and manage any of your resources, making your AI stack collaborative and scalable. Compare that to cycling through cloud consoles to find all the live clusters in your fleet, let alone trying to understand what’s running on them.

By logging into Den (`runhouse login`), you can save and load any remote cluster, env, or Python app by a global name, directly in Python:

```python
my_cluster.save()
my_reloaded_cluster = rh.cluster(name="/yourusername/rh-cluster")
```

That means saving significant cost and improving reproducibility, since researchers and pipelines performing the same activity can reuse the same clusters and jobs instead of each launching duplicates. There is much more to that story, and we’ll discuss it further in a subsequent post.
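Saving a resource by name generally means persisting a description of it (its configuration) rather than the machine itself, so another user or pipeline can rebuild a handle to the same cluster. The sketch below illustrates that idea with a local JSON file standing in for a shared store; the file layout, the `save_config`/`load_config` helpers, and the config fields are all assumptions, not Den’s actual API or storage format.

```python
import json
import tempfile
from pathlib import Path
from typing import Any, Dict


def qualified_name(owner: str, name: str) -> str:
    """Mirror the "/yourusername/rh-cluster" naming used above."""
    return f"/{owner}/{name}"


def save_config(store: Path, owner: str, name: str, config: Dict[str, Any]) -> str:
    """Record a resource's config under its qualified name and return that name."""
    data = json.loads(store.read_text()) if store.exists() else {}
    key = qualified_name(owner, name)
    data[key] = config  # saving again under the same name overwrites the old entry
    store.write_text(json.dumps(data, indent=2, sort_keys=True))
    return key


def load_config(store: Path, key: str) -> Dict[str, Any]:
    """Look a config up by qualified name (KeyError if it was never saved)."""
    return json.loads(store.read_text())[key]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        store = Path(tmp) / "shared_registry.json"  # stand-in for a shared service
        key = save_config(store, "yourusername", "rh-cluster",
                          {"host": "example-cluster", "ssh_user": "ubuntu"})
        # A collaborator rebuilds a handle from the saved description
        # instead of launching a duplicate cluster.
        print(key, load_config(store, key))
```

Storing only the description keeps saving cheap, and it means reloading by name is safe to do from any script, notebook, or pipeline that can reach the store.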

Get in touch

If you’re an AI team that wants to improve costs, ergonomics, and reproducibility, or that simply wants to modernize its AI stack and free it from infrastructure or AI opinionation, Runhouse can be a great option. If you have questions or feedback, feel free to open a GitHub issue, message us on Discord, or email donny@run.house.
