Which three concepts lie at the heart of deep learning? - Loss Functions, Optimization algorithms, and Backpropagation. Without them, we would have been 'conceptually' stuck at the perceptron model of the 1950s.

If you are completely unfamiliar with these terms, feel free to go through CS231n Lecture Notes 1, 2, 3 or/and watch this and subsequent videos by Andrew Ng.

Different problems require different loss functions, most of them are well known to us, also several new ones shall be arriving in the future.

Backpropagation, proposed by Hinton et al. in 1986, drives the Neural Networks.

But the choice of Optimization algorithms has always been the center of discussions and research inside the ML community. Researchers have been working for years on building an optimizer algorithm which

1. performs equally well on all major tasks - image classification, speech recognition, machine translation, and language modeling

2. is robust to learning rate (LR) and weight initialization

3. has strong regularization properties

If you are a beginner and merely started coding Neural Network following online available notebooks, you might be most familiar with ADAM optimizer (2015). It's the default optimizer algorithm for almost all DL frameworks including the popular one - Keras. There's a reason why it's kept default - it's robust to different learning rates and weight initialization. If you want to train your architecture on any data and worry less about hyperparameter tuning just use the defaults and it will give you good enough result for a start.

But if you are an experienced one and already familiar with deep learning research papers, you know that except while training GANs and Reinforcement learning (both doesn't solve optimization problems), experts mostly use SGD with momentum or Nesterov as their preferred choice of the optimization algorithm.

So, which one should I choose? 🤔

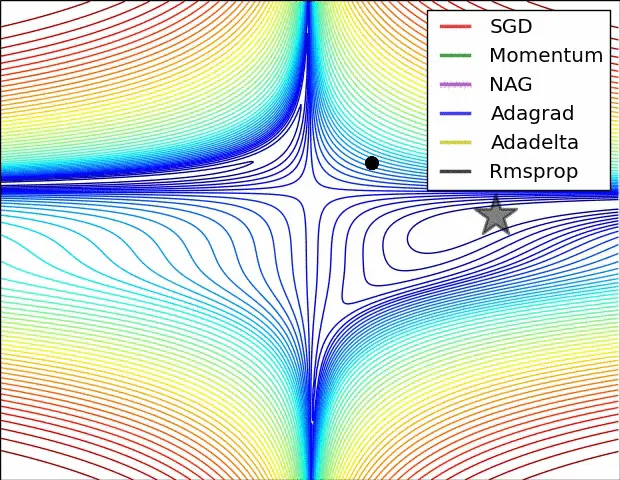

Non-adaptive methods

Stochastic Gradient Descent, as we know now, arrived from its initial form of Stochastic Approximation (1951) a landmark paper in the history of numerical optimization methods by Robbins and Monro. Here it wasn't proposed as a gradient method, but as a Markov chain. The form of SGD we are familiar with can be recognized in a subsequent paper by Kiefer and Wolfowitz. Programmers now mostly use the variant Mini-batch gradient descent with a batch size often between 32 and 512 (intervals in the exponent of 2) for training NN. But the key challenge faced by SGD is that it gets trapped in numerous suboptimal local minima and saddle points in loss function's landscape. So arrived the idea of implementing momentum, borrowed from a Polyak (1964) by Hinton et al. in their famous Backpropagation paper. Momentum helps accelerate SGD in the relevant direction and dampens its oscillations. But it's still vulnerable to vanishing and exploding gradients. Hazan et al in 2015 showed that the direction of the gradient is sufficient for convergence, thus proposing Stochastic Normalized Gradient Descent Optimizers. But it's still sensitive to 'noisy' gradients, especially during an initial training phase. In 2013, Hinton et al. conclusively demonstrated that SGD with momentum or Nesterov accelerated gradient (1983)(a variant of SNGD) and simple learning rate annealing schedule combined with well-chosen weight initialization schemes achieved better convergence rates and resulted in surprisingly good accuracy for several architectures and tasks.

Adaptive methods

Parameter updates by the above-described methods do not adapt with different learning rates, proper choice of which is crucial. This gave rise to several adaptive learning rate-based optimizers within next 4-5 years to improve SNGD robustness. They generally updated the parameters via an exponential moving average of past squared gradients. But even they weren't problem-free; such as for Adam it is the vanishing or exploding of the second moment, especially during the initial phase of training, and requirement of double the optimizer memory compared to SGD with momentum for storing the second moment. In 2018, Wilson et al. extensively studied how optimization relates to generalization and concluded three major findings:

with the same amount of hyperparameter tuning, SGD and SGD with momentum outperform adaptive methods on the development/test set across all evaluated models and tasks (even if the adaptive methods achieve the same training loss or lower than non-adaptive methods.)

adaptive methods often display faster initial progress on the training set, but their performance quickly plateaus on the development/test set

the same amount of hyperparameter tuning was required for all methods, including adaptive methods

Check out this beautiful python implementation of some common optimizers.

The Way Ahead

Last year Loshchilov et al. pointed out the major factor for the poor generalization of Adam - L2regularization being not nearly as effective as for SGD. The author explains that major deep learning libraries implement only L2regularization, and not the original weight decay (most people confuse them as identical). To improve regularization in Adam they proposed AdamW - which decouples the weight decay from the gradient-based update. Although for achieving this they used a cosine learning rate annealing with Adam. 😩

This year NVIDIA team proposed a new member of SNGD family - NovoGrad an adaptive stochastic gradient descent method with layer-wise gradient normalization and decoupled weight decay. They combined SGD’s and Adam’s strengths by implementing the following three ideas while building it:

1. starting with Adam, replaced the element-wise second moment with the layer-wise moment

2. computed the first-moment using gradients normalized by the layer-wise second moment

3. decoupled weight decay (WD)from normalized gradients (similar to AdamW)

Not only it generalizes well or better than Adam/AdamW and SGD with momentum on all major tasks, but also is more robust to the initial learning rate and weight initialization than them. In addition,

1. It works well without LR warm-up (annealing)

2. It performs exceptionally well for large batch training (for ResNet-50 up to 32K)

3. It requires half the memory compared to Adam

A few days back, Zhao et al. theoretically proved and empirically showed that SNGD with momentum can achieve the SOTA accuracy with a large batch size (much faster training).

Hope you realize that we are at a brink of a breakthrough after a long search for a dataset/task/hyperparameter-tuning independent better-generalizing optimizer algorithm.

Top comments (0)