Picture of Christina @ wocintechchat.com on Unsplash

Note: a lot has changed since this article was released in January 2023.

Everything described below still works (as of 2023.05.22), but I would suggest using Azure OpenAI Service instead of OpenAI directly, because with Azure OpenAI Service you keep control over your data.

Motivation

While working with bots, you must consider two points:

how to present the answer, and

where the answer comes from.

With all the articles you might have read in the last few days about OpenAI's ChatGPT, you could think: why not integrate this into my installation as a last line of defense that answers when my system is incapable of doing so, at least for chitchat?

This article demonstrates the "how". But do not forget to read the entire article and find some thoughts about the "why" in the conclusions.

OpenAI

To use OpenAI, you need to create an account with them at www.openai.com and generate an API token.

After creating an account and adding some funds as pocket money:

Click on your account at the top right and then on "View API Keys".

Generate a new API key.

Power Virtual Agents

Activate the Fallback topic in Power Virtual Agents

Power Virtual Agents has a built-in topic that can serve as a hook to the outside world: the Fallback system topic.

To activate the Fallback system topic, go to Settings within the editing canvas (click on the cog symbol), then System fallback, and then +Add.

Edit the fallback topic

Add a message box with a meaningful message, then add a new action.

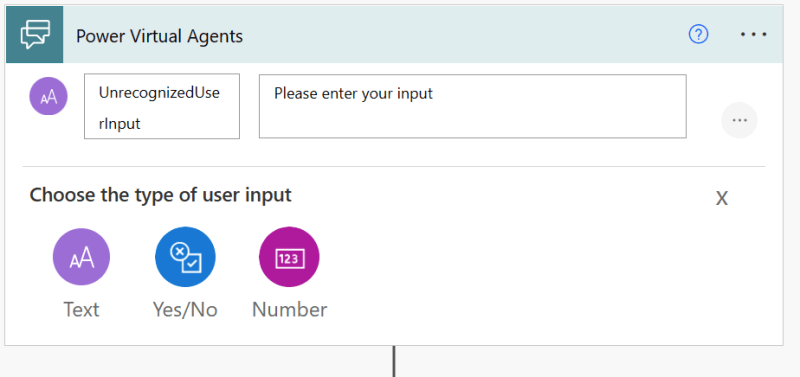

In Power Automate, create a new text-based variable, "UnrecognizedUserInput", and an HTTP node.

The request syntax for OpenAI is straightforward. We authenticate ourselves in the header and pass the model, the question, the temperature, and max_tokens in the body (see the OpenAI documentation).

temperature: Higher values mean the model will take more risks. Try 0.9 for more creative applications, and 0 (argmax sampling) for ones with a well-defined answer.
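
To give a feel for what the temperature does, here is a minimal sketch of temperature-scaled sampling probabilities. It illustrates the general technique only; how OpenAI implements it internally is not something this article can confirm. The function name and the scores are made up for the example.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        # Treat temperature 0 as argmax sampling: all probability on the top score.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
greedy = softmax_with_temperature(logits, 0)      # always the top candidate
focused = softmax_with_temperature(logits, 0.2)   # strongly favors the top candidate
creative = softmax_with_temperature(logits, 0.9)  # spreads probability more evenly
```

The lower the temperature, the more probability mass lands on the most likely candidate, which is why a value of 0 suits questions with a well-defined answer.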

I translated this to Power Automate; it will look like the following in the HTTP node.

The token you created above goes directly behind Bearer in the header.

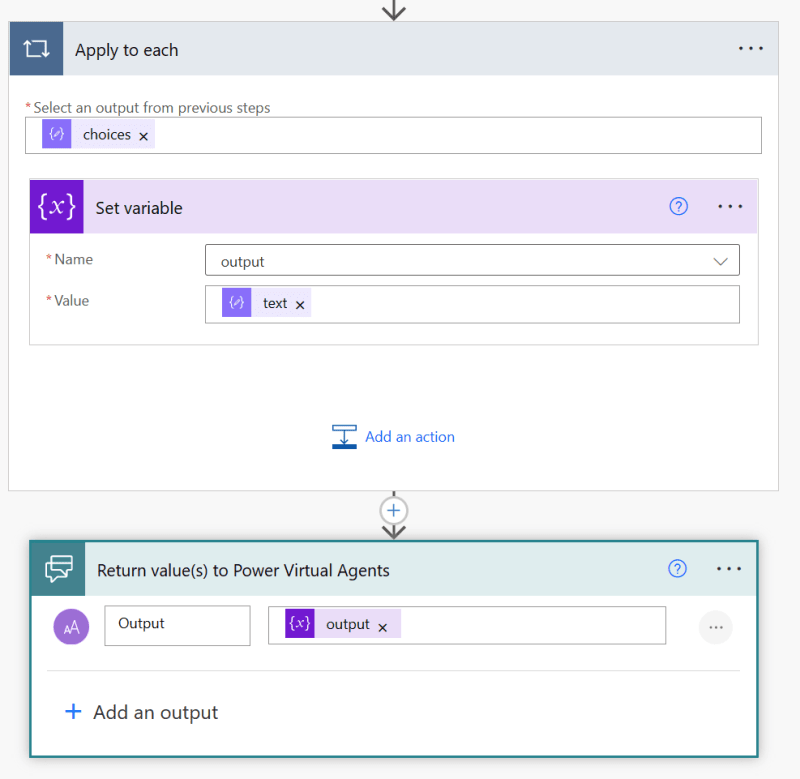
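
For readers who prefer to see the request outside of Power Automate, the following is a rough Python sketch of what the HTTP node sends. The endpoint URL, the model name `text-davinci-003`, the default `max_tokens`, and the helper name `build_completion_request` are assumptions for illustration, since the exact values live in the screenshot; check them against the current OpenAI documentation. The sketch only builds the request and does not send it.

```python
import json
import urllib.request

# Assumed endpoint of OpenAI's text completions API; verify against the docs.
OPENAI_URL = "https://api.openai.com/v1/completions"


def build_completion_request(api_key, question, model="text-davinci-003",
                             temperature=0.9, max_tokens=150):
    """Build (but do not send) the POST request the HTTP node would issue."""
    body = {
        "model": model,          # placeholder model name, an assumption
        "prompt": question,      # the unrecognized user input
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        OPENAI_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The API token goes directly behind "Bearer".
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_completion_request("sk-your-key-here",
                                   "What is the capital of France?")
```

Sending it would be a call to `urllib.request.urlopen(request)`, which needs a valid key and network access.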

As a next step, we create a String variable.

Create a "Parse JSON" node to parse the body of the response we get from OpenAI.

You can use the following as a schema, or generate it from the body returned by the previous step.

    {
      "type": "object",
      "properties": {
        "id": {
          "type": "string"
        },
        "object": {
          "type": "string"
        },
        "created": {
          "type": "integer"
        },
        "model": {
          "type": "string"
        },
        "choices": {
          "type": "array",
          "items": {
            "type": "object",
            "properties": {
              "text": {
                "type": "string"
              },
              "index": {
                "type": "integer"
              },
              "logprobs": {},
              "finish_reason": {
                "type": "string"
              }
            },
            "required": [
              "text",
              "index",
              "logprobs",
              "finish_reason"
            ]
          }
        }
      }
    }
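
To make the schema more tangible, here is a small Python sketch that parses a response body and checks the fields the schema marks as required for each choice. The sample body is hypothetical and merely shaped to fit the schema; it is not a recorded API response, and `check_completion_body` is an illustrative helper, not part of any library.

```python
import json

# The keys the schema lists as required for every entry in "choices".
REQUIRED_CHOICE_KEYS = ("text", "index", "logprobs", "finish_reason")


def check_completion_body(raw_body):
    """Parse the response body and confirm each choice has the required keys."""
    body = json.loads(raw_body)
    choices = body.get("choices", [])
    if not isinstance(choices, list):
        raise ValueError("'choices' must be an array")
    for position, choice in enumerate(choices):
        missing = [key for key in REQUIRED_CHOICE_KEYS if key not in choice]
        if missing:
            raise ValueError(f"choice {position} is missing {missing}")
    return body


# Hypothetical sample body, shaped to fit the schema; not a real API response.
sample_body = json.dumps({
    "id": "cmpl-example",
    "object": "text_completion",
    "created": 1674000000,
    "model": "example-model",
    "choices": [
        {"text": "\n\nParis.", "index": 0, "logprobs": None,
         "finish_reason": "stop"},
    ],
})

parsed = check_completion_body(sample_body)
```

Note that `logprobs` has an empty schema, so any value is accepted for it, including null, but the key itself must be present.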

After this, we create an "Apply to each" node containing a "Set variable" action that writes the answer text into the String variable, and we close by creating an output value.
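
The loop can be sketched in Python as follows. This is an approximation of the flow logic, not an export of it: trimming the whitespace and the fallback sentence are my own additions for illustration, and `extract_answer` is a hypothetical helper name.

```python
def extract_answer(parsed_body, default="Sorry, I did not understand that."):
    """Mimic 'Apply to each' over the choices with a 'Set variable' inside."""
    answer = ""  # the String variable, initialized before the loop
    for choice in parsed_body.get("choices", []):
        # Each pass overwrites the variable, so the last choice wins.
        # strip() is an assumption: it removes surrounding line breaks.
        answer = choice["text"].strip()
    # Return the hypothetical fallback sentence if nothing usable came back.
    return answer or default


reply = extract_answer({"choices": [{"text": "\n\nParis."}]})
empty_reply = extract_answer({"choices": []})
```

With a single choice in the response, the variable simply ends up holding that one answer, which is then handed back to the bot as the output value.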

Back in Power Virtual Agents, we adjust the created action to the correct input and output values and create a new message box to speak or display the answer from OpenAI.

Test the result

Let's test the result within our configured channels, as text chat or as voice with Dynamics 365 Customer Service or AudioCodes VoiceAI.

Conclusion

ChatGPT gives us answers, most of the time correct answers, and the models are getting better and better.

Is there a downside? Why should we integrate it, or why should we not do so?

Will the users, or we, "like" the answer, and is it appropriate? Here we have no control; we do not own the model.

If you check the logs of your bots, you will see questions asked by users you would not even expect in your darkest dreams.

There are situations where a technically correct answer is not the answer you would give your users, for example when they are in a highly emotional situation.
