About a month ago, vector database Weaviate landed 50 million dollars in series B funding. About three weeks ago, Chroma, an open source project with only 5k stars raised 18 million for its embeddings database and about two weeks ago, Pinecone DB announced a $100 million Series B investment on a $750 million post valuation. Naturally, a question arises, what is a vector database?

To talk about vector databases, we first need to know what a vector is. Vector is just an array of numbers. However, they can represent more complex objects such as words, sentences, images, or audio files in a continuous high dimensional space called an embedding.

Embeddings map the semantic meaning of words together or similar features in virtually any other data type. These embeddings can then be used for recommendation systems, search engines, and even text generation such as ChatGPT. But once you have your embeddings, the real question becomes: Where do you store them and how do you query them?

That's where vector databases come in. In a relational database, you have rows and columns. In a document database, you have documents and collections. However, in a vector database, you have arrays of numbers clustered together based on similarity which can later be queried with ultra low latency, making it an ideal choice for AI driven applications.

Relational databases such as PostgreSQL have tools like PGVector to support this type of functionality and Redis also has its first class vector support such as Redisearch. Bunch of new native vector databases are popping up, too. Weaviate and Milvus are open source options written in Go. Chroma, based on Clickhouse under the hood, is also an another open source option. Another extremely popular option is PineconeDB, but it is not open source.

Let's jump into some code to see what it looks like. I will be using PineconeDB and Python. Using the official guide, I will be implementing the Abstractive Question Answering program using the ELI5 BART model. Abstractive question answering focuses on the generation of multi-sentence answers to open-ended questions. It usually works by searching massive document stores for relevant information and then using this information to synthetically generate answers.

Our source data will be taken from the Wiki Snippets dataset, which contains over 17 million passages from Wikipedia. We will only utilize 5,000 passages that include "History" in the "section title" column (due to memory issues, you can utilize the complete dataset if you want; the official guide used 50,000 passages).

import pandas as pd

# create a pandas dataframe with the documents we extracted

df = pd.DataFrame(docs)

df.head()

To build our vector index, we must first establish a connection with Pinecone. Then, we create a new index. An index is the highest-level organizational unit of vector data in Pinecone. It accepts and stores vectors, serves queries over the vectors it contains, and does other vector operations over its contents. We specify the metric type as "cosine" and dimension as 768 because the retriever we use to generate context embeddings is optimized for cosine similarity and outputs 768-dimension vectors. Other metrics are "euclidean" and "dotproduct."

import pinecone

# connect to pinecone environment

pinecone.init(

api_key="YOUR_API_KEY",

environment="us-central1-gcp"

)

index_name = "abstractive-question-answering"

# check if the abstractive-question-answering index exists

if index_name not in pinecone.list_indexes():

# create the index if it does not exist

pinecone.create_index(

index_name,

dimension=768,

metric="cosine"

)

# connect to abstractive-question-answering index we created

index = pinecone.Index(index_name)

We will use a SentenceTransformer model based on Microsoft's MPNet as our retriever. Also, we will be using ELI5 BART for the generator which is a Sequence-To-Sequence model trained using the "Explain Like I'm 5" (ELI5) dataset. Sequence-To-Sequence models can take a text sequence as input and produce a different text sequence as output. You can download these models from the Huggingface hub.

import torch

from sentence_transformers import SentenceTransformer

# set device to GPU if available

device = 'cuda' if torch.cuda.is_available() else 'cpu'

# load the retriever model from huggingface model hub

retriever = SentenceTransformer("flax-sentence-embeddings/all_datasets_v3_mpnet-base", device=device)

retriever

from transformers import BartTokenizer, BartForConditionalGeneration

# load bart tokenizer and model from huggingface

tokenizer = BartTokenizer.from_pretrained('vblagoje/bart_lfqa')

generator = BartForConditionalGeneration.from_pretrained('vblagoje/bart_lfqa').to(device)

Next, we should upload our data to the pinecone database using the

index.upsert()command. If the operation was successful, you should see the following output.

Then let's right some helper functions to retrieve context passages from Pinecone index and to format the query in the way the generator expects the input.

def query_pinecone(query, top_k):

# generate embeddings for the query

xq = retriever.encode([query]).tolist()

# search pinecone index for context passage with the answer

xc = index.query(xq, top_k=top_k, include_metadata=True)

return xc

def format_query(query, context):

# extract passage_text from Pinecone search result and add the <P> tag

context = [f"<P> {m['metadata']['passage_text']}" for m in context]

# concatinate all context passages

context = " ".join(context)

# contcatinate the query and context passages

query = f"question: {query} context: {context}"

return query

query = "when was the first electric power system built?"

result = query_pinecone(query, top_k=1)

result

Lastly, we'll write a helper function that generates the answer given a query.

from pprint import pprint

def generate_answer(query):

# tokenize the query to get input_ids

inputs = tokenizer([query], max_length=1024, return_tensors="pt")

# use generator to predict output ids

ids = generator.generate(inputs["input_ids"], num_beams=2, min_length=20, max_length=40)

# use tokenizer to decode the output ids

answer = tokenizer.batch_decode(ids, skip_special_tokens=True, clean_up_tokenization_spaces=False)[0]

return pprint(answer)

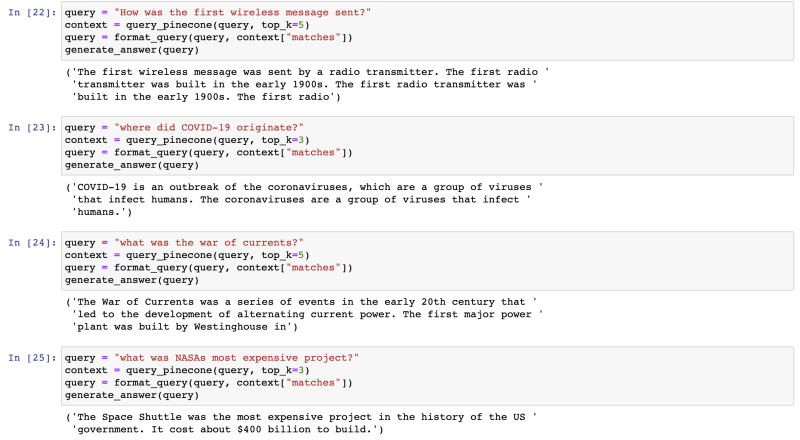

We use this function to test different queries as shown below.

Note that the answers are not complete since we only utilized 5,000 passages. You can adjust the numbers of passages and observe results.

The real reason that these databases are so hot right now is that they can extend LLMs with long-term memory. You start with a general purpose model like OpenAI's GPT-4, Meta's LLaMA, or Google's LaMDA then provide your own data in a vector database. When the user makes a prompt, you can then query relevant documents from your own database to update the context which will customize the final response and it can also retrieve historical data to give the AI long-term memory. They also integrate with tools like Langchain that combine multiple LLMs together.

Some parts transcribed from Fireship's video: Vector databases are so hot right now. WTF are they?

Official abstractive-question-answering guide: https://docs.pinecone.io/docs/abstractive-question-answering

Top comments (1)

I created a simple wrapper to pgvector that is inspired by Pinecone's simplicity: github.com/UpMortem/simple-pgvecto...