With approximately 4.54 billion internet users worldwide (June 2020) generating an unprecedented quantity of content, moderation is becoming a heavier task by the day! And the number of active users and apps on the market keeps growing. Left unsupervised, users can freely share any kind of content and create tons of digital garbage.

Naturally, content moderation is becoming a mandatory feature for every web or mobile application. But manual moderation is extremely time-consuming and stressful, and Machine Learning solutions can be quite pricey. So many drawbacks! Our team loves nothing more than a good challenge, so we rolled up our sleeves, determined to aid developers in this heavy task and offer a solution.

Here, we are presenting the final piece of our simple, affordable, and, most importantly, effective Content Moderation Service. It is fully functional, built entirely with open-source libraries, and can be integrated into any Parse Node.JS project and hosted anywhere.

Agenda

1. Background

2. The Problem

3. The Solution

4. Moderation Preferences

5. DB Schema

6. Admin Panel

7. Configuration and Deployment

8. Fin

Background

We decided to release the project in three parts, each more advanced than the previous one. We chose this approach to give you the opportunity to pick the piece you need and integrate it into your application without any hassle. Nothing less, nothing more!🙂 So far we’ve released:

1. Image Classification REST API - The first part of this service is an Image Classification REST API that works with NSFW.JS. NSFW.JS is an awesome library developed by Gant Laborde that uses TensorFlow pre-trained ML models. The API has quite plain logic - given a URL, it returns predictions of how likely the image is to fall into each of the following classes - Drawing, Neutral, Sexy, Porn and Hentai.

You can find more details here:

- Content Moderation Service with Node.JS and TensorFlow. Part 1: REST API. A blog post that gives details on our team’s inspiration, how the API works, and the tech stack used for all three project stages.

- Image Classification REST API GitHub - In case your project needs only REST API image classification, clone the repo, and follow the README.md.

2. REST API + Automation Engine - The Automation Engine is tightly coupled with the REST API. Basically, its work consists of checking how the classification of a certain image compares to the parameters you pre-define as safe for your project. The process is automated with a Parse Server afterSave trigger.

For further insight check:

- Content Moderation Service with Node.JS and TensorFlow. Part 2: Automation Engine - An article explaining the purpose and settings of the Automation Engine.

- Content Moderation Automation GitHub - For all folks who want to build a moderation interface of their own, but could take advantage of the moderation automation.

Our blog offers a condensed explanation of the logic behind the REST API and the Automation Engine, so we strongly recommend having a look at the articles before continuing.

The Problem

With the REST API and Automation Engine already in place, the problem is almost solved. After all, the decision-making process for a great deal of user-generated content is already automated, and the number of pictures that require manual moderation has drastically decreased.

Unfortunately, manual moderation still means going through photos one by one. For each image, you need to decide whether it is safe or toxic for your specific audience and apply your decision. All that combined may once again become a tedious task.

If you look at Instagram, with its 100 million+ photos uploaded per day, or Facebook, with more than 120 million fake profiles, you can imagine that the daily count of inappropriate photos can also reach seven-digit numbers. Even if your project can’t compare with these giants yet and your pile of images for moderation is significantly smaller, it still remains a boring burden.

The final touch of our Content Moderation Service puts an end to this issue by providing a super user-friendly interface that makes manual moderation all fun and games.

The Solution

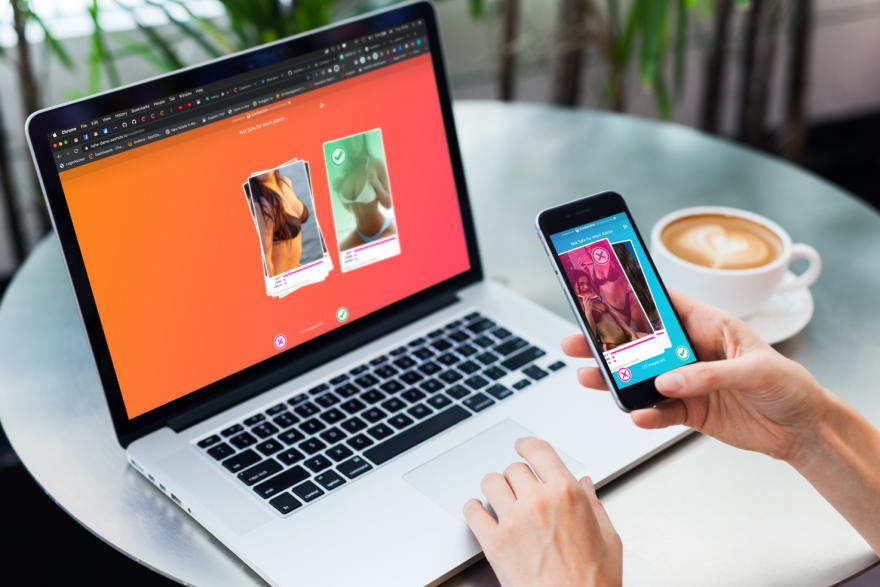

To cope with the situation and make the most of it, we'll take all photos flagged by the Automation Engine for moderation and display them in an eye-pleasing Admin Panel that empowers you to approve or reject a picture with just a click.

We combine the REST API and Automation Engine with an exquisite Admin Application interface, where all users’ images that need manual moderation are stacked up for approval. It’s desktop and mobile-friendly, easy to operate, and empowers you to go through a huge pile of photos in just a few brief moments.

As in the previous releases, we’ll once again use a SashiDo app for all examples. Because it is easy, fun, and all infra hassles are left behind. 😊

Moderation Preferences

Hopefully, you’ve already gone through our Part 2: Moderation Automation article and you can skip this section. However, if you didn’t have the chance to read the previous blog post, we’ll go over the Moderation Preferences briefly once more, as they are an essential component.

In a few words, the moderation preferences are the criteria, set specifically for your app, that determine which images the Automation Engine automatically approves as safe and which it rejects.

Therefore, you need to carefully review which classes are dangerous or unwanted for your users. Photos classified above the max values you set are automatically marked as deleted, the ones below the min values are auto-approved, and everything in between goes to our Admin App for manual moderation.

For example, if you’ve created an innovations app, where enthusiastic engineers present their AI robot ideas, you’ll most probably consider Sexy, Hentai, and Porn classified images as inappropriate. Drawing is a bit of a grey area, as you might want to allow robot sketches. Here is one example of how such moderation preferences can be adjusted:

    {
      "Sexy": { "min": 0.4, "max": 0.8 },
      "Drawing": { "min": 0.4, "max": 0.9 },
      "Porn": { "min": 0.4, "max": 0.8 },
      "Hentai": { "min": 0.2, "max": 0.8 }
    }
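To make the three outcomes concrete, here is a minimal sketch of how such preferences could be applied to a set of predictions. It is not the Automation Engine's actual code. The `decide` helper is hypothetical, and the prediction shape (`className`, `probability`) follows what NSFW.JS returns. Two things are assumptions of this sketch: a single class above its max is enough to reject, and a value sitting exactly on a limit goes to manual moderation.

```javascript
// Hypothetical helper for illustration, not the Automation Engine's code.
const moderationScores = {
  Sexy: { min: 0.4, max: 0.8 },
  Drawing: { min: 0.4, max: 0.9 },
  Porn: { min: 0.4, max: 0.8 },
  Hentai: { min: 0.2, max: 0.8 },
};

// Returns the three flags described in the DB Schema section.
function decide(predictions, scores) {
  let needsReview = false;
  for (const { className, probability } of predictions) {
    const limits = scores[className];
    if (!limits) continue; // classes without preferences (e.g. Neutral) are ignored
    if (probability > limits.max) {
      // Assumption: one class above its max is enough to reject.
      return { isSafe: false, deleted: true, moderationRequired: false };
    }
    if (probability >= limits.min) needsReview = true;
  }
  return needsReview
    ? { isSafe: false, deleted: false, moderationRequired: true }
    : { isSafe: true, deleted: false, moderationRequired: false };
}

// Made-up predictions for a robot sketch: Drawing sits between min and max.
const sketch = [
  { className: 'Drawing', probability: 0.55 },
  { className: 'Neutral', probability: 0.3 },
  { className: 'Sexy', probability: 0.1 },
  { className: 'Porn', probability: 0.03 },
  { className: 'Hentai', probability: 0.02 },
];
console.log(decide(sketch, moderationScores).moderationRequired); // true
```

Raising the Drawing min above 0.55 in this example would auto-approve the same picture, which is the kind of fine-tuning the preferences are meant for.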

Let’s set these params and upload some suspicious pictures like the one below, which falls between the min and max values.

See, it’s directly stacked up for approval into the Admin App. Cool, right?! Still, in the long run, it all depends on your particular use-case and judgment.

No worries, we’ve made sure to provide you with an easy way of adjusting the moderation preferences on the fly.😉 We’ll save those as a moderationScores config setting for the project, but more on that in the Configuration and Deployment section.

The right moderation preferences leave more room for the automation to make the decisions and free your hands for cooler stuff!

DB Schema

The Database Schema is the other must-mention unit and it is also related to the Automation Engine and explained in detail within our previous blog post on Moderation Automation. The idea is that you need to keep the naming and add a few columns to your DB to store the Automation Engine’s results and NSFW predictions.

- The afterSave automation is hooked to a collection named UserImage by default. To use it straight away after deployment, you should either keep the same class name or replace it with the respective one in your production code.

- To keep a neat record of the REST API predictions and Automation Engine results, you will need to add a few columns to your Database collection that holds user-generated content.

- isSafe(Boolean) - If an image is below the min value of your moderation preferences, it’s marked isSafe - true.

- deleted(Boolean) - Likewise, the Automation Engine will mark the inappropriate pictures as deleted - true. Those pictures won’t be automatically deleted from the file storage.

- moderationRequired(Boolean) - Those are loaded in the Admin Panel for manual moderation.

- NSFWPredictions(Array) - Stores the NSFW predictions as json for this image.

- Last but not least, you need to add an isModerator column to the predefined _User class. It’s again of type Boolean and allows you to manage access to the Admin Panel for different users.
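As a rough illustration of how these columns fit together, the sketch below uses plain objects in place of Parse objects. The sample records, the ids, and the helper names `moderationQueue` and `canAccessAdminPanel` are all made up for this example. Only the column names come from the schema above.

```javascript
// Plain objects stand in for UserImage records; all values are made up.
const userImages = [
  { id: 'img1', isSafe: true, deleted: false, moderationRequired: false, NSFWPredictions: [] },
  { id: 'img2', isSafe: false, deleted: true, moderationRequired: false, NSFWPredictions: [] },
  { id: 'img3', isSafe: false, deleted: false, moderationRequired: true, NSFWPredictions: [] },
];

// Hypothetical helper: the records a moderator would still have to review.
function moderationQueue(images) {
  return images.filter((image) => image.moderationRequired === true);
}

// Hypothetical helper: only users flagged as moderators may open the Admin Panel.
function canAccessAdminPanel(user) {
  return Boolean(user) && user.isModerator === true;
}

console.log(moderationQueue(userImages).map((image) => image.id)); // [ 'img3' ]
console.log(canAccessAdminPanel({ username: 'guest' })); // false
```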

Well, that’s about all preparations needed to kick it off! Next, we’ll check the rudiments of the last fragment - the Admin Application and move on to deploying in production.

Admin Panel

Our awesome engineering team built a plain and chic ReactJS-based Admin Application to put the final touch. Its primary mission is allowing you to go through all images that require manual moderation and make a decision in the blink of an eye. We’ve chosen ReactJS, as it is one of the most popular front-end libraries and is loved by many developers.

Let’s briefly check the features of the Admin Panel and how it helps:

- First things first, we have built basic login/logout functionality.

- At SashiDo we truly believe that security should be a top priority for every project, so we made sure to provide you with a way of restricting access to the Admin app. You can grant access to the app for your trusted partners by setting isModerator=true in the database.

- All pictures that are flagged by the Automation Engine as moderationRequired=true are displayed in a beautiful interface, which makes manual moderation fun.

- Just below each picture you have a useful scale showing the classification result for the most hazardous classes - Sexy, Porn and Hentai.

- You approve or reject a photo with a simple click or swipe. As easy as it can be!😊

- And it gets even better! The Admin app is desktop and mobile-friendly, which makes it the ultimate time-saver. You can moderate your app’s content even from the subway, while on your way to work, the restaurant, or the bar.
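The scale and the approve/reject action from the list above could be modelled roughly as follows. This is a sketch, not the Admin app's source code: `hazardScale` and `applyDecision` are hypothetical names, and the fields written on approve or reject are an assumption based on the columns from the DB Schema section.

```javascript
const HAZARDOUS_CLASSES = ['Sexy', 'Porn', 'Hentai'];

// Hypothetical helper: percentages for the scale shown below each picture.
function hazardScale(predictions) {
  return HAZARDOUS_CLASSES.map((className) => {
    const match = predictions.find((p) => p.className === className);
    const probability = match ? match.probability : 0;
    return { className, percent: Math.round(probability * 100) };
  });
}

// Hypothetical helper. Assumption: a manual decision sets isSafe or deleted
// and takes the image out of the moderation queue.
function applyDecision(image, approved) {
  return { ...image, isSafe: approved, deleted: !approved, moderationRequired: false };
}

const pending = { id: 'img3', isSafe: false, deleted: false, moderationRequired: true };
console.log(applyDecision(pending, true));
console.log(hazardScale([{ className: 'Porn', probability: 0.5 }]));
```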

All parts of this project are open for collaboration. Fork the repo, apply your awesome ideas, and open a pull request!

Configuration and Deployment

Configuration

Parse Server offers two approaches for app config settings. Parse.Config is very simple, useful, and empowers you to update the configuration of your app on the fly, without redeploying. The trouble is configs are public by design. Environment variables are the second, more secure option, as these settings are private, but each time you change those your app is automatically redeployed. We’ll move on wisely and use both. 😉

Parse.Configs

- moderationScores - save the moderation preferences for your app in a Parse.Config object. That will allow you to update and fine-tune criteria any time you need on the fly.

- moderationAutomation - add this boolean option that makes enabling/disabling content moderation automation an effortless process finished with just a click when needed. For example, when you want to test the new code version without automation.
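For reference, reading these two settings in Cloud Code might look like the sketch below. `readModerationSettings` is a hypothetical helper that accepts anything with a `get(key)` method, which is how a `Parse.Config` object exposes its values. Treating a missing moderationAutomation flag as disabled is an assumption of this example.

```javascript
// Hypothetical helper: normalizes the two config values used by the service.
function readModerationSettings(config) {
  const scores = config.get('moderationScores');
  const enabled = config.get('moderationAutomation');
  return {
    moderationScores: scores && typeof scores === 'object' ? scores : {},
    // Assumption: automation stays off unless the flag is explicitly true.
    moderationAutomation: enabled === true,
  };
}

// In Cloud Code this could be called as:
//   const settings = readModerationSettings(await Parse.Config.get());
// A Map works as a stand-in here because it also has a get(key) method.
const fakeConfig = new Map([
  ['moderationScores', { Sexy: { min: 0.4, max: 0.8 } }],
  ['moderationAutomation', true],
]);
console.log(readModerationSettings(fakeConfig));
```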

To set the configs for your SashiDo application go to the App’s Dashboard -> Core -> Config. The final result looks like:

Environment Variables

For production, we'll set the NSFW model URL, NSFW Model Shape size and Config caching as environment variables.

- TF_MODEL_URL - SashiDo stores three pre-trained, ready-for-integration NSFW models that you can choose from.

- TF_MODEL_SHAPE_SIZE - Each model comes with its shape size.

- CONFIG_CACHE_MS - It will serve us for caching the Parse.Configs, and the value you pass is in milliseconds.

In SashiDo set the environment variables for your projects from the App's Dashboard -> Runtime -> Environment variables. Have a look at all you need:

For this project, we have preset the Mobilenet 93 model. However, you can always choose a different one. Want to test out different options and evaluate each model’s performance? Simply check the NSFWJS Demo page. 🙂
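To show what CONFIG_CACHE_MS is for, here is a minimal sketch of a time-based cache around a config fetch. It is an illustration rather than the project's implementation: `createCachedFetcher` is a hypothetical helper, and dropping a failed fetch from the cache is a design choice of this example.

```javascript
// Hypothetical helper: reuses the last fetch result until ttlMs has passed.
function createCachedFetcher(fetchFn, ttlMs, now = Date.now) {
  let cached;
  let fetchedAt = -Infinity;
  return function get() {
    const time = now();
    if (cached === undefined || time - fetchedAt >= ttlMs) {
      const attempt = Promise.resolve(fetchFn()).catch((err) => {
        if (cached === attempt) cached = undefined; // do not keep a failed fetch
        throw err;
      });
      cached = attempt;
      fetchedAt = time;
    }
    return cached; // always a promise
  };
}

// In Cloud Code this could be wired up as:
//   const ttlMs = Number(process.env.CONFIG_CACHE_MS) || 0;
//   const getConfig = createCachedFetcher(() => Parse.Config.get(), ttlMs);
//   const config = await getConfig();
```

With a TTL of 0, every call fetches a fresh config, so the cache can be switched off without touching the code.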

Deployment

SashiDo has implemented an effortless automatic git deployment process, so simply make sure your SashiDo and GitHub accounts are connected. Next, follow these simple steps:

- Clone the repo from GitHub.

git clone https://github.com/SashiDo/content-moderation-application.git

- Set the configs and env vars in production - checked ✔

- Add your SashiDo app as a remote branch and push changes.

git remote add production git@github.com:parsegroundapps/<your-pg-app-your-app-repo>.git

git push -f production master

Voila!!! You can leave content moderation to the newly integrated service and go grab a beer. Cheers 🍻!

FIN!

All chunks of the Moderation Services are already assembled. We would love to have your feedback on what other ready-to-use Machine Learning services can help your projects grow. Don’t be shy and share your thoughts at hello@sashido.io.

And if you’re still wondering where to host such a project, don’t forget that SashiDo offers an extended 45-day Free trial, no credit card required, as well as an exclusive Free consultation by SashiDo's experts for projects involving Machine Learning.

Happy Coding!
