Introduction

This is the third article in a series that explains how you can back up an inexpensive NAS (built with a Raspberry Pi) to Azure.

In the previous posts we looked at the available cloud storage solutions and their pricing, decided which service to use (Azure Storage), and built the secure infrastructure using Terraform.

In this final article we will focus on the solution used to back up our files to Azure Storage, using the Archive tier to reduce costs.

Backup Solution

Enter Rclone. Rclone is a command-line program to manage files on cloud storage. It is a feature-rich alternative to cloud vendors' web storage interfaces (as described on its web page).

And that is what we need to automate our backups. We don't want to push a button; we want our files to be backed up several times a day.

We also want to focus on security, and for that we will have to open and close the firewall on our storage account whenever a backup runs.

So let's start.

The credentials

First we will need to generate a credentials file.

Open your text editor and paste the following JSON text:

{

"appId": "replaceme",

"displayName": "replaceme",

"password": "replaceme",

"tenant": "replaceme"

}

If you followed my previous post, you will remember that we deployed the infrastructure using Terraform, and that generated some outputs. If you stored them, you can use them to replace the values in the text file:

Changes to Outputs:

+ appId = (known after apply)

+ displayName = "backupapplication"

+ password = (known after apply)

+ tenant = "xxxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxxx"

If you didn't store the values, you can still obtain them either from the Azure portal or via the command line. If you don't remember the password, a new password for the service principal may have to be generated.
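
Since this file holds the service principal secret, it may be worth sanity-checking it before the script relies on it. The following is only a sketch: `checkCredentialsFile` is a hypothetical helper that is not part of the original script, and it assumes the file uses exactly the keys shown in the template above.

```shell
# Hypothetical helper (not part of the original script): sanity-check the
# credentials file before using it.
checkCredentialsFile() {
  local file="$1" key
  if [ ! -r "$file" ]; then
    echo "Credentials file not readable: $file" >&2
    return 1
  fi
  # Fail if a "replaceme" placeholder from the template is still present
  if grep -q 'replaceme' "$file"; then
    echo "Credentials file still contains placeholder values" >&2
    return 1
  fi
  # Make sure the keys the token request needs are present
  for key in appId password tenant; do
    if ! grep -q "\"$key\"" "$file"; then
      echo "Missing key in credentials file: $key" >&2
      return 1
    fi
  done
  # The file holds a secret, so keep it readable by its owner only
  chmod 600 "$file"
}
```

Calling `checkCredentialsFile "/home/pi/azurecredentials.json" || exit 1` at the top of the script would stop early instead of sending placeholder values to Azure.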

Now that we have the file in place let's leave it aside and start with the script.

The script

Let's use our friend bash in order to put our solution together.

Let's start with the security part. We need to be able to open and close the storage firewall in order to back up our files. For that we need to authenticate against Azure (with our credentials file) and add our public IP address to the firewall.

Getting the public IP: let's use AWS! You read that correctly. AWS provides a free endpoint that returns your public IP address.

So our function will look like this:

getPublicIp() {

curl -s https://checkip.amazonaws.com

}
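
Because the value returned by the endpoint ends up in a firewall rule, it can help to check that it really looks like an IPv4 address before using it (an error page or an empty response would otherwise be sent to Azure as-is). This is a minimal sketch; `isValidIpv4` is a hypothetical helper, not part of the original script.

```shell
# Hypothetical helper: succeed only if the argument looks like a
# dotted-quad IPv4 address (four numeric parts, each between 0 and 255).
isValidIpv4() {
  local ip="$1" octet oldIfs
  # Reject empty input, anything other than digits and dots,
  # and leading, trailing or doubled dots
  case "$ip" in
    "" | *[!0-9.]* | .* | *. | *..*) return 1 ;;
  esac
  # Split on dots into the function's positional parameters
  oldIfs="$IFS"
  IFS=.
  set -- $ip
  IFS="$oldIfs"
  [ "$#" -eq 4 ] || return 1
  for octet in "$@"; do
    [ "${#octet}" -le 3 ] && [ "$octet" -le 255 ] || return 1
  done
}

# Usage sketch inside the script:
# publicIp=$(getPublicIp)
# isValidIpv4 "$publicIp" || { echo "Unexpected response: $publicIp" >&2; exit 1; }
```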

Now that we have a way to retrieve the public IP, we need to authenticate. Using the service principal credentials we can easily retrieve an access token that will allow us to interact with the Azure storage account via the REST API. So let's do it:

getAzureToken() {

client_id=$1

client_secret=$2

tenant=$3

scope="https%3A%2F%2Fmanagement.core.windows.net%2F.default"

headers="Content-Type:application/x-www-form-urlencoded"

data1="client_id=${client_id}&scope=${scope}&client_secret=${client_secret}&grant_type=client_credentials"

data2="https://login.microsoftonline.com/${tenant}/oauth2/v2.0/token"

curl -s -X POST -H "$headers" -d "$data1" "$data2"

}

As you can see, the parameters we need are available in the file we generated. We just have to retrieve them from the file and feed them to the function.
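
One detail worth keeping in mind: the request body is form-encoded, so a client secret containing characters such as `&`, `+` or `=` would need to be percent-encoded before it is placed in the body. The sketch below shows one way to do that in plain shell. `urlEncode` is a hypothetical helper that is not part of the original script, and it is only meant for ASCII input.

```shell
# Hypothetical helper: percent-encode a string for use in a
# application/x-www-form-urlencoded body (ASCII input assumed).
urlEncode() {
  local rest="$1" char
  while [ -n "$rest" ]; do
    char=${rest%"${rest#?}"}   # first character of what is left
    rest=${rest#?}             # drop that character
    case "$char" in
      [A-Za-z0-9._~-]) printf '%s' "$char" ;;   # unreserved, keep as-is
      *) printf '%%%02X' "'$char" ;;            # everything else becomes %XX
    esac
  done
  printf '\n'
}

# The scope used in getAzureToken is this same encoding applied by hand:
urlEncode 'https://management.core.windows.net/.default'
# prints https%3A%2F%2Fmanagement.core.windows.net%2F.default
```

Inside `getAzureToken` this could be used as `client_secret=$(urlEncode "$2")`. Alternatively, curl's own `--data-urlencode` option can take care of the encoding.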

Now that we have the token and the public IP, we can start opening and closing the Azure storage firewall.

Let's work on the open function. We need to call the API that lets us interact with our storage account (identified by its resource ID) and pass a JSON payload specifying that the storage account denies all traffic by default but allows our public IP to communicate:

addNetworkException() {

token=$1

subscriptionId=$2

resourceGroupName=$3

accountName=$4

publicIp=$5

headers1="Content-Type:application/json"

headers2="Authorization:Bearer ${token}"

url="https://management.azure.com/subscriptions/${subscriptionId}/resourceGroups/${resourceGroupName}/providers/Microsoft.Storage/storageAccounts/${accountName}?api-version=2021-09-01"

data="{\"properties\":{\"networkAcls\":{\"defaultAction\":\"deny\",\"ipRules\":[{\"action\":\"allow\",\"value\":\"${publicIp}\"}]}}}"

curl -X PATCH -H "$headers1" -H "$headers2" -d "$data" "$url"

}

Closing the firewall is similar. We just have to remove the IP address from the JSON and pass an empty array:

removeNetworkException() {

token=$1

subscriptionId=$2

resourceGroupName=$3

accountName=$4

publicIp=$5

headers1="Content-Type:application/json"

headers2="Authorization:Bearer ${token}"

url="https://management.azure.com/subscriptions/${subscriptionId}/resourceGroups/${resourceGroupName}/providers/Microsoft.Storage/storageAccounts/${accountName}?api-version=2021-09-01"

data="{\"properties\":{\"networkAcls\":{\"defaultAction\":\"deny\",\"ipRules\":[]}}}"

curl -X PATCH -H "$headers1" -H "$headers2" -d "$data" "$url"

}
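
The two payloads differ only in the contents of the `ipRules` array, so they could be produced by a single function. The sketch below is one way to do it; `buildNetworkAclPayload` is a hypothetical helper that is not part of the original script. Note that the payload sets the whole `ipRules` list, so my assumption is that any rule not included in it would be dropped by the request.

```shell
# Hypothetical helper: build the networkAcls payload for zero or more
# IP addresses. With no arguments the ipRules array is empty.
buildNetworkAclPayload() {
  local rules="" ip
  for ip in "$@"; do
    # Add a comma separator once the list already has an entry
    rules="${rules:+$rules,}{\"action\":\"allow\",\"value\":\"$ip\"}"
  done
  printf '{"properties":{"networkAcls":{"defaultAction":"deny","ipRules":[%s]}}}\n' "$rules"
}

# Open: one rule for our public IP (203.0.113.7 is a documentation address)
buildNetworkAclPayload "203.0.113.7"
# Close: no rules at all
buildNetworkAclPayload
```

The output of either call could then be passed to curl with `-d`, exactly as the `data` variable is in the functions above.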

Now that we have taken care of the security functions, let's work on the backup itself.

Let's use our credentials file to add the Azure information to our rclone configuration (this will run only once):

rclone_config() {

echo 'Adding configuration...'

rclone config create "$name" azureblob account="$storageaccountname" access_tier="$accesstier" service_principal_file="$credential_file"

echo 'Config entry added!'

}

What are the function parameters?

- $name - The configuration name

- $storageaccountname - The name of the Azure storage account

- $accesstier - The Archive access tier will be used to store our files in a cheap way

- $credential_file - The filesystem path for our manually created credentials file
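
Since the configuration only needs to be created once, the script has to know whether the remote is already there. One possible check, sketched below, looks for the remote by name using `rclone listremotes`, which prints each configured remote followed by a colon. `remoteExists` is a hypothetical helper, not part of the original script.

```shell
# Hypothetical helper: succeed if a remote with the given name is
# already present in the rclone configuration.
remoteExists() {
  # -x matches the whole line, -F treats the name as a literal string
  rclone listremotes | grep -qxF "$1:"
}

# Usage sketch inside the script:
# if ! remoteExists "$name"; then
#   rclone_config
# fi
```

Checking for the specific name means the configuration is still created when rclone already holds other, unrelated remotes.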

Now we need the function that executes the backup:

rclone_backup() {

echo 'Starting backup...'

rclone sync "$sourcepath" "$name":"$container" "--azureblob-archive-tier-delete" "-v"

if [ $? -eq 0 ]; then

echo 'Backup completed!'

else

echo 'Something went wrong!'

exit 1

fi

}

The parameters once again.

- $sourcepath - The filesystem path to your folder

- $name - The rclone configuration name we used in the previous function

- $container - The Azure storage container name
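
Keep in mind that `rclone sync` makes the destination match the source, which includes deleting files from the container that no longer exist locally. Before the first real run it can be reassuring to preview what would happen with rclone's `--dry-run` flag. The function below is just a sketch using the same variables as `rclone_backup`; the name `rclone_backup_preview` is hypothetical.

```shell
# Hypothetical variant of rclone_backup: report what would be copied or
# deleted without changing anything in the container.
rclone_backup_preview() {
  echo 'Previewing backup (nothing will be transferred)...' >&2
  rclone sync "$sourcepath" "$name:$container" --dry-run -v
}
```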

And now let's put this all together.

getPublicIp() {

curl -s https://checkip.amazonaws.com

}

getAzureToken() {

client_id=$1

client_secret=$2

tenant=$3

scope="https%3A%2F%2Fmanagement.core.windows.net%2F.default"

headers="Content-Type:application/x-www-form-urlencoded"

data1="client_id=${client_id}&scope=${scope}&client_secret=${client_secret}&grant_type=client_credentials"

data2="https://login.microsoftonline.com/${tenant}/oauth2/v2.0/token"

curl -s -X POST -H "$headers" -d "$data1" "$data2"

}

addNetworkException() {

token=$1

subscriptionId=$2

resourceGroupName=$3

accountName=$4

publicIp=$5

headers1="Content-Type:application/json"

headers2="Authorization:Bearer ${token}"

url="https://management.azure.com/subscriptions/${subscriptionId}/resourceGroups/${resourceGroupName}/providers/Microsoft.Storage/storageAccounts/${accountName}?api-version=2021-09-01"

data="{\"properties\":{\"networkAcls\":{\"defaultAction\":\"deny\",\"ipRules\":[{\"action\":\"allow\",\"value\":\"${publicIp}\"}]}}}"

curl -X PATCH -H "$headers1" -H "$headers2" -d "$data" "$url"

}

removeNetworkException() {

token=$1

subscriptionId=$2

resourceGroupName=$3

accountName=$4

publicIp=$5

headers1="Content-Type:application/json"

headers2="Authorization:Bearer ${token}"

url="https://management.azure.com/subscriptions/${subscriptionId}/resourceGroups/${resourceGroupName}/providers/Microsoft.Storage/storageAccounts/${accountName}?api-version=2021-09-01"

data="{\"properties\":{\"networkAcls\":{\"defaultAction\":\"deny\",\"ipRules\":[]}}}"

curl -X PATCH -H "$headers1" -H "$headers2" -d "$data" "$url"

}

rclone_config() {

echo 'Adding configuration...'

rclone config create "$name" azureblob account="$storageaccountname" access_tier="$accesstier" service_principal_file="$credential_file"

echo 'Config entry added!'

}

rclone_backup() {

echo 'Starting backup...'

rclone sync "$sourcepath" "$name":"$container" "--azureblob-archive-tier-delete" "-v"

if [ $? -eq 0 ]; then

echo 'Backup completed!'

else

echo 'Something went wrong!'

exit 1

fi

}

publicIp=$(getPublicIp)

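credentials=$(cat "$credential_file")

# Pull the service principal values out of the credentials file

client_id=$(jq -r '.appId' <<<"$credentials")

client_secret=$(jq -r '.password' <<<"$credentials")

tenant=$(jq -r '.tenant' <<<"$credentials")

azureToken=$(jq -j '.access_token' <<<"$(getAzureToken "$client_id" "$client_secret" "$tenant")")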

addNetworkException "$azureToken" "$subscriptionid" "$resourcegroupname" "$storageaccountname" "$publicIp"

config=$(rclone config dump)

if [ -z "$config" ]; then

rclone_config

rclone_backup

else

rclone_backup

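fi

# Close the firewall again once the backup has finished

removeNetworkException "$azureToken" "$subscriptionid" "$resourcegroupname" "$storageaccountname" "$publicIp"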
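
One caveat with the flow above: `rclone_backup` calls `exit 1` when the sync fails, so anything that comes after it in the script, including a call to `removeNetworkException`, never runs and the firewall rule stays in place. A shell `trap` on `EXIT` is one way around that. The sketch below assumes the variables already used in the script; `closeFirewall` and `closeFirewallOnExit` are hypothetical names.

```shell
# Hypothetical helper: close the firewall using the values the script
# already holds.
closeFirewall() {
  removeNetworkException "$azureToken" "$subscriptionid" "$resourcegroupname" "$storageaccountname" "$publicIp"
}

# Hypothetical helper: register closeFirewall to run when the script
# exits, whether the backup succeeded or rclone_backup called `exit 1`.
closeFirewallOnExit() {
  trap closeFirewall EXIT
}
```

Calling `closeFirewallOnExit` right after `addNetworkException` would be enough. With the trap in place, any explicit call to `removeNetworkException` at the end of the script becomes redundant.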

To make it easier I've compiled it into a bash script available at

You can go over the description of each block of code and also read the script parameter description.

Using the downloaded script we can just make it executable and pass the parameters:

chmod +x linuxFSAzureBackup.sh

And then run it with the command below (this is a dummy example; please replace the values with your own).

./linuxFSAzureBackup.sh -n "raspberry2azure" -k "/home/pi/azurecredentials.json" -s "myspecialstorage" -r "specialresourcegroup" -i "497b54be-ccfb-47be-994d-b4d549a191cb" -a "Archive" -p "/mnt" -c "myspecialcontainer"

The parameter order:

- -n The name that we will assign to the rclone configuration

- -k The path to the Azure credentials file

- -s The Azure storage account name

- -r The name of the resource group where the Azure storage account resides

- -i The Azure subscription ID

- -a The desired access tier for the backed-up files

- -p The path where the items to be backed up reside

- -c The Azure storage account container name

These are the results:

Adding the public ip address

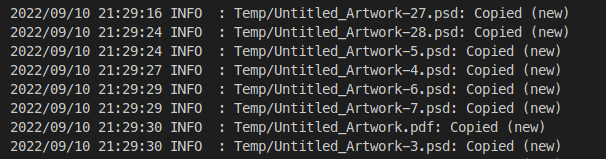

Copying the files

Removed the ip rule

In the end I had to create a filter file for rclone because some files were not getting copied. That will be my next contribution to the script, but please feel free to modify it as you need.

And that's it. You can now add the script to crontab and have a cloud backup solution on your Raspberry Pi.
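
As an illustration of the crontab step, the sketch below prints an entry that runs a command every few hours and appends its output to a log file. Everything in it is an assumption: `cronEntry` is a hypothetical helper, and `/home/pi/run-backup.sh` stands for a small wrapper script of your own that calls the backup script with your parameters.

```shell
# Hypothetical helper: print a crontab line that runs a command every
# N hours, on the hour, and appends its output to a log file.
cronEntry() {
  local everyHours="$1" command="$2" logFile="$3"
  printf '0 */%s * * * %s >> %s 2>&1\n' "$everyHours" "$command" "$logFile"
}

cronEntry 6 /home/pi/run-backup.sh /home/pi/backup.log
# prints 0 */6 * * * /home/pi/run-backup.sh >> /home/pi/backup.log 2>&1
```

The printed line can be pasted into the editor opened by `crontab -e`.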

I hope you enjoyed this series of articles.

See you soon.
