Keywords: ChatGPT, COS, Lookup Table, IRIS, AI

Purpose

Here is another quick note before we move on to GPT-4 assisted automation journey. Below are some "little" helps ChatGPT had already been offering, here and there, during daily works.

And what could be the perceived gaps, risks and traps to LLMs assisted automation, if you happen to explore this path too. I'd also love to hear anyone's use cases and experiences on this front too.

Lookup tables

One of the simplest tasks could be Lookup tables. Frequently we have to turn tedious CSV or Excel code tables into some Lookup tables in XML, manually, particularly there are blanks etc randomly distributed in the values across thousand records, and you had to tidy them quite a few times before go-live deadlines.

Can ChatGPT help a bit? Here is a quick test.

Prompt:

You are a program to automatically convert a CSV file into a XML lookup table file.

The input is a comma delimited CSV file with 3 data columns :

Blood Science ,XCOM,CANCELLED/REJECTED SAMPLE

Blood Science ,ADX ,ADD ON TEST COMMENT

Blood Transfusion,DARA,ALERT FOR DARA/CAMELLIA

Blood Transfusion, BT1 ,REJECT SAMPLE UNLABELLED

The output should be a XML file in the form of:

<?xml version="1.0"?>

<lookupTable>

<entry table="HIE.ICE.FilterOBR4" key="XCOM">Blood Science</entry>

<entry table="HIE.ICE.FilterOBR4" key="ADX ">Blood Science</entry>

<entry table="HIE.ICE.FilterOBR4" key="DARA">Blood Transfusion</entry>

<entry table="HIE.ICE.FilterOBR4" key="BT1">Blood Transfusion</entry>

</lookupTable>

Note: the sequence of the XML attribute in each root should be kept as they are

ChatGPT:

Here's a Python script that reads in the CSV file, processes it, and outputs the resulting XML file:

import csv

def convert_csv_to_xml(input_file, output_file):

"""

Convert a CSV file to an XML lookup table file.

Parameters:

input_file (str): Path to the input CSV file.

output_file (str): Path to the output XML file.

Returns:

int: 0 if the conversion was successful, 1 if there was an error.

"""

try:

# Create a dictionary to hold the lookup table data

lookup_table = {}

# Read in the CSV file and process the data

with open(input_file, "r") as csvfile:

csvreader = csv.reader(csvfile)

for row in csvreader:

category = row[0].strip()

key = row[1].strip()

value = row[2].strip()

lookup_table[key] = category

# Generate the XML file

with open(output_file, "w") as xmlfile:

xmlfile.write('<?xml version="1.0"?>\n')

xmlfile.write('<lookupTable>\n')

for key in lookup_table:

xmlfile.write(f'<entry table="HIE.ICE.FilterOBR4" key="{key}">{lookup_table[key]}</entry>\n')

xmlfile.write('</lookupTable>\n')

return 0

except Exception as e:

print("Error:", e)

return 1This script first reads in the CSV file using the csv module and creates a dictionary to hold the lookup table data. It then processes each row of the CSV file and adds the relevant data to the dictionary.

Finally, the script generates the XML file by iterating through the dictionary and writing the appropriate XML tags and attributes to the output file. Note that the f string syntax is used to dynamically insert the key and category data into the XML tags.

I ran it briefly in a Jupyter notebook, it checked out. It also stripped out the blanks (was going to be a lot manual tidy-ups). It gave a tool handling lengthy CSVs with messy vlues, without being limited to token size.

In this specific case, I'd say it increased the productivity about 20+ times over repeated manual approaches handling many messy code tables.

Now that it save me a bit time, so I was wondering whether it can generate a COS sample too to build Util class for future service BAUs etc.

Prompt:

Could you convert the above Python function to intersystems COS classmethod, by using correct COS specific function please

ChatGPT:

Certainly! Here's the converted code as an InterSystems ObjectScript class method:

ClassMethod ConvertCSVToXML(inputFile As %String, outputFile As %String) As %Integer

{

try {

// Create a dictionary to hold the lookup table data

set lookupTable = {}

// Read in the CSV file and process the data

set csvReader = ##class(%File.CharacterStream).%New()

do csvReader.LinkToFile(inputFile)

while (csvReader.AtEnd = 0) {

set row = csvReader.ReadLine()

set category = $ZSTRIP($PIECE(row, ",", 1), "B")

set key = $ZSTRIP($PIECE(row, ",", 2), "B")

set value = $ZSTRIP($PIECE(row, ",", 3), "B")

set lookupTable(key) = category

}

do csvReader.%Close()

// Generate the XML file

set xmlWriter = ##class(%File.CharacterStream).%New()

do xmlWriter.LinkToFile(outputFile)

do xmlWriter.WriteLine("<?xml version=""1.0""?>")

do xmlWriter.WriteLine("<lookupTable>")

set keys = lookupTable.%GetKeys()

while ('keys.%AtEnd) {

set key = keys.%GetNext()

set category = lookupTable(key)

do xmlWriter.WriteLine("<entry table=""HIE.ICE.FilterOBR4"" key="""_key_""">"_category_"</entry>")

}

do xmlWriter.WriteLine("</lookupTable>")

do xmlWriter.%Close()

quit 0

}

catch (ex) {

Write "Error: "_ex.DisplayString(), !

quit 1

}

}

In InterSystems ObjectScript, we can use the %File.CharacterStream class to read and write files. We use the LinkToFile() method to open the input and output files, and then use the ReadLine() and WriteLine() methods to read and write data to the files.

To strip leading and trailing spaces from each field, we use the $ZSTRIP() function with the "B" flag.

We also use the %GetKeys() method of the lookupTable object to iterate over the keys in the dictionary.

Finally, the method returns a status code of 0 if the conversion was successful, or 1 if there was an error.

It didn't give an accurate tool that can check out as straightaway as Python. %File.CharacterStream should be %Stream.FileCharacter, stripping blanks should use "<>W", and looping through globals should use $ORDER etc etc.

Prompts can be improved, but I am particularly not good at asking for favors, so I rushed up a few tweaks along the lines.

ClassMethod ConvertCSVToXML2(inputFile As %String, outputFile As %String) As %Integer

{

s tSC = $$$OK

try {

set ^lookupTable = ""

// Read in the CSV file and process the data

set csvReader = ##class(%Stream.FileCharacter).%New()

set tSC = csvReader.LinkToFile(inputFile)

while (csvReader.AtEnd = 0) {

set row = csvReader.ReadLine()

set category = $ZSTRIP($PIECE(row, ",", 1), "<>W")

set key = $ZSTRIP($PIECE(row, ",", 2), "<>W")

set value = $ZSTRIP($PIECE(row, ",", 3), "<>W")

set ^lookupTable(key) = category

}

//zw ^lookupTable

do csvReader.%Close()

// Generate the XML file

set xmlWriter = ##class(%Stream.FileCharacter).%New()

set tSC = xmlWriter.LinkToFile(outputFile)

set tSC = xmlWriter.WriteLine("<?xml version=""1.0""?>")

set tSC = xmlWriter.WriteLine("<lookupTable>")

set key = $O(^lookupTable("")) //lookupTable.%GetKeys()

while (key '= "") {

//w key

set category = $GET(^lookupTable(key))

w !,key, " ", category

set tSC = xmlWriter.WriteLine("<entry table=""HIE.ICE.FilterOBR4"" key="""_key_""">"_category_"</entry>")

set key = $O(^lookupTable(key))

}

set tSC = xmlWriter.WriteLine("</lookupTable>")

set tSC = xmlWriter.%Save("</lookupTable>")

set tSC = xmlWriter.%Close()

}

catch (ex) {

Write "Error: "_ex.DisplayString(), !

s tSC = ex

}

return tSC

}

So what are the perceived gaps here?

So some potential implications came into my mind would be:

1. Productivity: The productivity reward would depend on the COS proficiency of the developer. This tool would further amplify any advantages in coding proficiency.

2. Gaps: My wondering would be: how could we drive up its accuracy by few-shot learning via prompts , with or even without fine-tuning? If you happen to explore this front across LLMs - I'd love to hear your thoughts, or dreams.

Unit Tests Auto-generations

By talking about development, we can't get away from testing.

Things seem to be changing now. Very "simple" looking, sleek tools leveraging the power of GPTs, such as RubberDuck, mushroomed out to help.

So I tried RubberDuck extension in VSCode, then set it up with my OpenAI API key.

Then I open the Python function as mentioned above in VSCode, as below:

Then I selected click "Generate Unit Test ..." menu, and it would auto-generate some unit test coverage in a few seconds, at least 100+ times quicker than typing. It gives a quick place holder for tweaks.

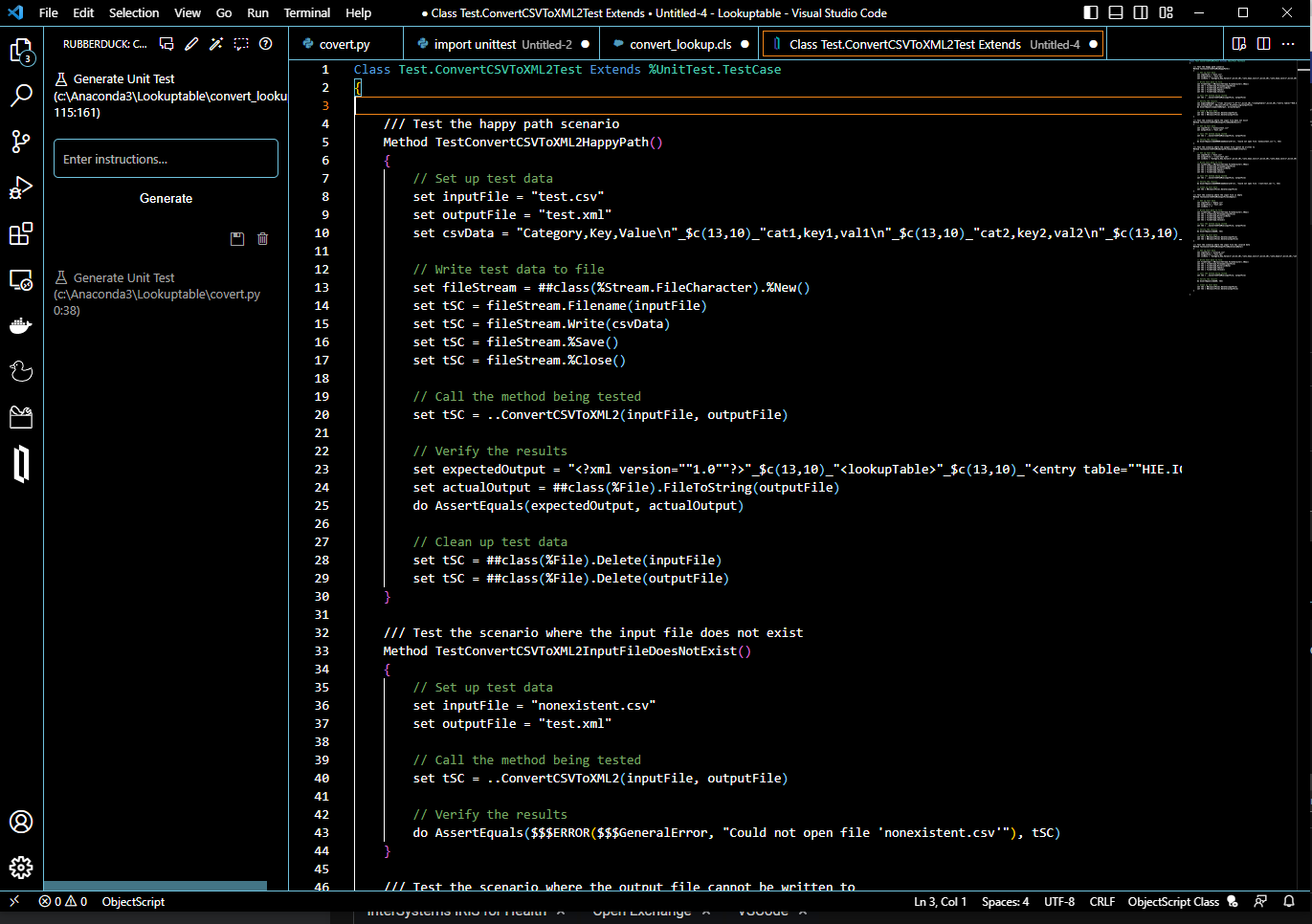

Can we do such for COS code too, even though neither RubberDuck nor ChatGPT truly understood COS yet (and it's not LLM's fault):

And it generated these unit test placeholder without understanding COS much yet - I'll leave its gaps open for advice for now, and particularly, how or will the gaps need to be addressed with a sense of purpose?

It would need some tweaks, but by harvesting ChatGPT, it seems now able to auto generate codes, analyse codes, auto-comment the code, and auto-generate unit test place holders for you, in today's reality. (Well, sort of, depending on what coding language we are using, and what we really want it to do next).

Conclusion?

Again, I don't have quick conclusions, since I am not able to explore its boundaries and traps much yet.

Math foundation??

Maybe one day, just as the concept of "entropy" in information theory was cast in 1948, another math genius might simply come out to enlighten us with another concept to simply quantify the "linguistic" gaps and distances among all sorts of languages, be it human or machine languages. Until then we would not really know the actual potential, limits. risks and traps of these LLMs. Nonetheless it doesn't seem to stop LLMs leap forward on monthly or weekly basis.

Other use cases??

Analytics dashboards driven by human natural language queries: A few weeks ago I was attempting some R&D for an innovation project, and by chance I noticed another use case: it "can" turn clinical queries in human language into a machine query in SQL/MDX. Well, kind of, without any fine-tuning yet by today. It seems to start to bridge the abyss between human languages and machine tones, to a degree?

It would not be difficult to imagine such a scenario: that a clinician just types in a clinical query into a chat in her natural language, and automatically a clinical dashboard is generated, highlighting those patients who might have missed the early detection of heart failure, and grouping and ranking them across regions, gender, and age groups. The differences in care quality would be highlighted in minutes instead of months of engineering efforts.

And certainly, personalised AI care assistant. It seemed to be so remote and so complex last year this time, and with GPT4, it rapidly becomes a reality, it seems. Theoretically, nothing would stop GPT4 alike LLMs looking into my care records, just bits and pieces of structured and semi-structure data (like Lab tests and my medicines), unstructured data (my clinical notes and reports etc) and image data (X-Rays, CTs and MRIs), to start to make the best sense of it, and able to coordinator my cares and appointments in the coming soon.

Disclaimer

Sorry to state the obvious, but please note that the purpose of this post is not about how can we do XML Lookup tables quickly, manually or not, and actually I am pretty good at Excels and Notepad++ too. Instead, it's meant to get into touch with minds who'd like to share use cases, reflections, challenges, risks and experiences on the journey to LLM etc assisted automations.

And LLMs' power came from developers, from every public repository and posting everybody made in public forums. GPTs are not born out of air. This is not being fully appreciated and acknowledged yet in recent months.

There are risks to humanity by AGI at this speed, but personally I felt slightly lucky, relieved and excused since we are in healthcare services.

Other routine risks include data privacy compliances per HIPPA, GDPR and DPAs as usual.

Top comments (1)

I would stress the importance of HIPPA, GDPR, PHI, PII etc. and put that first with a large banner. That said, your blog post with the experimentation is great, however one needs to be really careful on the public LLM providers. Hence, it's always recommended to go with the private instance like the one Azure provides.

Any exposure of personal health information to the Large Language Models would be on a greater risk no matter the LLM providers says that they do not train with our data.

We need to be more cautious in dealing with the compliance and regulatory aspects.