In most cases, Deep Learning (DL) is really just an application of statistical methods to suss out patterns in data using neural network architectures with several layers.

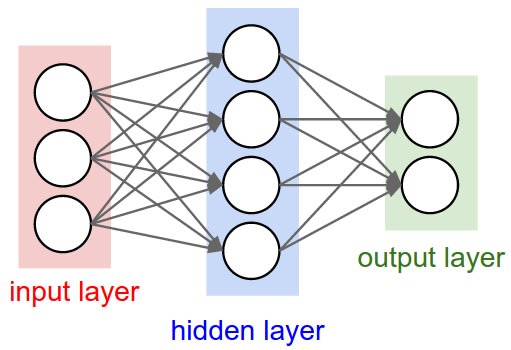

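A neural net usually follows a general design. An input layer represents the data being fed to the network (e.g. the pixels in an image), followed by several layers of hidden nodes. Each hidden node typically computes a weighted sum of its inputs, applies a nonlinear activation function, and passes the result on to the next layer, until an output layer produces the network's answer.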
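A minimal sketch of that design in plain Python, assuming fully connected layers and a ReLU activation (common choices, but not the only ones). The 3-input, 2-hidden-node, 1-output shape and all the weights are arbitrary illustrative values, not a trained network.

```python
def relu(x: float) -> float:
    return max(0.0, x)

def identity(x: float) -> float:
    return x

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each node takes a weighted sum of every input,
    adds its bias, and applies the activation function."""
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the inputs through each layer in turn; the last layer's output is the network's output."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense(activations, weights, biases, activation)
    return activations

# Hypothetical toy network: 3 inputs -> 2 hidden nodes -> 1 output node.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1], relu),  # hidden layer
    ([[1.0, -1.0]], [0.2], identity),                          # output layer
]

pixels = [0.9, 0.1, 0.4]  # e.g. three grayscale pixel intensities
print(forward(pixels, layers))
```

Adding more entries to `layers` is all it takes to make the network "deeper".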

The “deep” in deep learning is really just a reference to these hidden layers that exist between your input and output layers. The architecture is, as you might have inferred from the name, very loosely inspired by that of the brain. Emphasis on loosely.

To train these networks, we present them with numerous examples of labeled sample data (think showing it a picture of a German shepherd with the label “dog”). An algorithm known as back-propagation works out how much each connection between nodes contributed to the network's error, and gradient descent then adjusts those connection weights slightly in the direction that reduces the error. Repeated over many examples, this weakens unhelpful connections and strengthens useful input-output mappings. As we train them, DL-based networks often form interesting internal representations corresponding to sub-components of the inputs, such as lines representing the edges of a table.
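The training loop can be sketched by hand to show the division of labor: back-propagation (the chain rule, applied layer by layer) computes the gradients, and gradient descent uses them to update the weights. This is a toy sketch under several assumptions: the labeled task is logical OR, the network has one hidden sigmoid layer, the loss is binary cross-entropy, and the hyperparameters (`hidden`, `lr`, `epochs`, `seed`) are arbitrary. Real frameworks compute these gradients with automatic differentiation rather than by hand.

```python
import math
import random

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    """Return (hidden activations, output probability) for a 2-input example."""
    w1, b1, w2, b2 = params
    h = [sigmoid(wj[0] * x[0] + wj[1] * x[1] + bj) for wj, bj in zip(w1, b1)]
    p = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2[0])
    return h, p

def train(data, hidden=3, lr=0.5, epochs=3000, seed=0):
    """Full-batch gradient descent with back-propagation on binary cross-entropy.
    Returns the learned parameters and the average loss measured in each epoch."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(hidden)]  # input -> hidden
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]  # hidden -> output
    b2 = [0.0]  # one-element list so it can be updated in place
    params = (w1, b1, w2, b2)
    losses = []
    for _ in range(epochs):
        g_w1 = [[0.0, 0.0] for _ in range(hidden)]
        g_b1 = [0.0] * hidden
        g_w2 = [0.0] * hidden
        g_b2 = 0.0
        loss = 0.0
        for x, y in data:
            h, p = forward(params, x)
            p_safe = min(max(p, 1e-12), 1 - 1e-12)  # keep log() finite
            loss += -(y * math.log(p_safe) + (1 - y) * math.log(1 - p_safe))
            # Backward pass: for a sigmoid output with cross-entropy loss, the error
            # signal at the output node's weighted sum is simply (p - y).
            d_out = p - y
            g_b2 += d_out
            for j in range(hidden):
                g_w2[j] += d_out * h[j]
                # Chain rule through hidden node j: sigmoid'(z) = h * (1 - h).
                d_h = d_out * w2[j] * h[j] * (1 - h[j])
                g_b1[j] += d_h
                g_w1[j][0] += d_h * x[0]
                g_w1[j][1] += d_h * x[1]
        # Gradient descent: nudge every weight against its average gradient.
        n = len(data)
        for j in range(hidden):
            w2[j] -= lr * g_w2[j] / n
            b1[j] -= lr * g_b1[j] / n
            w1[j][0] -= lr * g_w1[j][0] / n
            w1[j][1] -= lr * g_w1[j][1] / n
        b2[0] -= lr * g_b2 / n
        losses.append(loss / n)
    return params, losses

# Labeled examples of logical OR: ((input1, input2), label).
data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
params, losses = train(data)
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
for x, y in data:
    print(x, y, round(forward(params, x)[1], 3))
```

Full-batch updates keep the sketch short; with large datasets, updates are usually computed on small random mini-batches instead.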

There are numerous interesting sub-architectures that provide optimized or incrementally better results, especially in a specific problem space, but we won’t dive too deeply into those here.

If you take away only one thing from this primer, let it be this:

In a universe with infinite data and computational power, DL techniques can approximate essentially any mapping between inputs and outputs (the intuition behind the universal approximation theorem), and this is a very powerful notion. For example, there is an enormous set of problems that involve classifying inputs into categories. Since categories can represent pretty much anything, throw enough data and compute at DL and it can probably learn whatever input-output mapping you give it.
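A hand-built illustration of that intuition (not a proof, and not how networks are actually trained): a single hidden layer of steep sigmoid nodes, each acting like a step, can be stacked into a staircase that tracks a target function. The target (`square` on [0, 1]), the step placement, and the `steepness` rule are all illustrative assumptions; the point is that the error shrinks as hidden nodes are added.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def build_staircase_net(target, n_hidden):
    """Hand-build (not train) a one-hidden-layer network approximating `target` on [0, 1].

    Hidden node k is a steep sigmoid that switches on at threshold t_k, halfway between
    grid points k/n and (k+1)/n. Its outgoing weight is the target's change between
    those grid points, so the output is a smoothed staircase that follows the target.
    """
    grid = [k / n_hidden for k in range(n_hidden + 1)]
    thresholds = [(grid[k] + grid[k + 1]) / 2 for k in range(n_hidden)]
    out_weights = [target(grid[k + 1]) - target(grid[k]) for k in range(n_hidden)]
    out_bias = target(grid[0])
    steepness = 50.0 * n_hidden  # sharper steps as the grid gets finer

    def net(x: float) -> float:
        hidden = [sigmoid(steepness * (x - t)) for t in thresholds]
        return out_bias + sum(w * h for w, h in zip(out_weights, hidden))

    return net

def max_error(target, net, samples=1001):
    """Largest absolute gap between target and net over evenly spaced points in [0, 1]."""
    xs = [i / (samples - 1) for i in range(samples)]
    return max(abs(target(x) - net(x)) for x in xs)

def square(x: float) -> float:
    return x * x

for n in (5, 50, 500):
    print(n, "hidden nodes -> max error", max_error(square, build_staircase_net(square, n)))
```

Each tenfold increase in hidden nodes here cuts the worst-case error substantially, which is the "enough compute" half of the argument; learning such weights from data is the "enough data" half.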

In practice, there are a number of endemic problems, including getting stuck in a local minimum, where your algorithm settles on a solution that looks optimal nearby even though better solutions exist elsewhere. This can usually be mitigated (for example with random restarts, momentum, or the noise of stochastic gradient descent), and it is often not a huge problem when dealing with sufficiently large amounts of data.
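A toy illustration of a local minimum, assuming a made-up one-dimensional loss `f(x) = x**4 - 3*x**2 + x` in place of a real network's loss surface. Plain gradient descent started at 2.0 settles in the shallow valley near x ≈ 1.13 (loss about -1.07), while a start at -2.0 finds the deeper one near x ≈ -1.30 (loss about -3.51). Random restarts, shown at the end, are just one simple mitigation among several.

```python
import random

def f(x: float) -> float:
    """A made-up 1-D 'loss surface' with a shallow valley near x = 1.13
    and a deeper (global) valley near x = -1.30."""
    return x**4 - 3 * x**2 + x

def df(x: float) -> float:
    """Derivative of f: the gradient that gradient descent follows."""
    return 4 * x**3 - 6 * x + 1

def gradient_descent(x0: float, lr: float = 0.01, steps: int = 1000) -> float:
    x = x0
    for _ in range(steps):
        x -= lr * df(x)  # step downhill
    return x

stuck = gradient_descent(2.0)    # settles in the nearer, shallower valley
better = gradient_descent(-2.0)  # settles in the deeper valley
print(f"start  2.0 -> x = {stuck:.3f}, loss = {f(stuck):.3f}")
print(f"start -2.0 -> x = {better:.3f}, loss = {f(better):.3f}")

# Random restarts: run from several random starting points and keep the lowest loss.
rng = random.Random(0)
best = min((gradient_descent(rng.uniform(-3, 3)) for _ in range(10)), key=f)
print(f"best of 10 restarts -> x = {best:.3f}, loss = {f(best):.3f}")
```

Real networks have millions of dimensions rather than one, so this only conveys the shape of the problem, not its scale.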

TL;DR: DL is powerful when given large amounts of data and computational power, and it can solve simple mapping problems effectively.

But how would it do if the goal is to encode causality and intelligence? I’ll examine that soon.

This is Part 1 of a multi-part series introducing Modular Neural Networks with Adaptive Topology, an architecture I think will be enormously successful for complex, hierarchical problems.

You can check out the rest of the series as it comes out here or on technomancy!

Top comments

A TL;DR cannot be something "I will tell you later"; your piece could be more substantial.

My apologies, there should have been a line break somewhere there.

Thanks for the advice. I was trying to keep the piece as short an overview as possible, literally under a 2-minute read. The other stuff will be coming in a much longer-form post, since I’ll be proposing a new architecture!