Abstract: In this post, I experimented with 6 different approaches for caching Docker builds in GitHub Actions (4 caching approaches and 2 baselines) to speed up the build process, and compared the results. After trying out every approach, 10 times each, the results show that using GitHub Packages’ Docker registry as a build cache, rather than GitHub Actions’ built-in cache, yields the largest performance gain.

⚠️ Warning: This article has not been updated since its publication in April 2020. The approaches outlined here are probably out of date. Here are more up-to-date takes on this issue:

- 2021-07-29: Docker's official build-push-action now supports the GitHub Cache API, where caches are saved to the GitHub Actions cache directly, skipping the local filesystem-based cache.
- 2021-03-21: Andy Barnov, Kirill Kuznetsov. “Build images on GitHub Actions with Docker layer caching”, Evil Martians.
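As a rough illustration of the first update above, here is a minimal job using the GitHub Actions cache backend (type=gha) through docker/build-push-action. It is a sketch, not a setup measured in this post. It assumes a Dockerfile at the repository root, the tag my-image:latest is a placeholder, and the action major versions are assumptions.

```yaml
jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      # Creates a buildx builder that can export cache; the default "docker" driver cannot.
      - uses: docker/setup-buildx-action@v3
      - uses: docker/build-push-action@v5
        with:
          context: .
          push: false
          tags: my-image:latest   # placeholder tag
          # Read layers from the GitHub Actions cache...
          cache-from: type=gha
          # ...and write them back; mode=max also exports intermediate layers,
          # not only those that end up in the final image.
          cache-to: type=gha,mode=max
```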

Background and motivation 💬

Sick of waiting for builds on GitHub Actions. 😖

Unlike self-hosted runners like Jenkins, most cloud-hosted build runners are stateless, providing us with a pristine environment each run. We cannot keep files from the previous runs around; anything that needs to be persisted must be externalized.

GitHub Actions has a built-in cache to help with this. But there are many ways of creating that cache (docker save and docker load first come to mind). Will the performance gains outweigh the overhead of saving and loading caches? Are there other approaches besides GitHub Actions’ built-in cache? That’s what this research is about.

Approaches considered 💡

To conduct this experiment, I first came up with some ideas on how we might cache the built Docker image. In total, I experimented with 8 approaches.

- No cache 🤷‍♂️

This is the baseline.

- No cache (BuildKit) 🤷‍♀️

This is another baseline, but with BuildKit.

- Use docker save and docker load with actions/cache 📦

Once an image is created, we can use docker save to export the image to a tarball and cache it with actions/cache. On subsequent runs, we can use docker load to import the image from the cached tarball. Then we can build the image with the --cache-from flag.

Note that this method is not compatible with BuildKit, because BuildKit only supports external caches located on a registry (not pulled or imported images).

- Cache /var/lib/docker with actions/cache 🤯

Maybe we don’t need to do all that import-export work and can just cache the whole /var/lib/docker directory!

- Use a local registry with actions/cache 🐳

We can run a filesystem-backed Docker registry on localhost, push the built image to that registry, and cache the registry's storage with actions/cache. On subsequent runs, we can pull the latest image from the localhost registry and pass it to docker build with the --cache-from flag.

- Use a local registry with actions/cache (BuildKit) 🐋

Same as above, but with BuildKit.

There is a minor difference in the setup: with BuildKit, we do not have to run docker pull, because BuildKit expects the cached image to be located on a registry. While this may seem like a limitation, it also comes with a performance gain: if the cache turns out not to be applicable, BuildKit skips pulling the image.

- Use GitHub Packages’ Docker registry as a cache 🐙

GitHub provides a package registry already, so we might not need to run our own filesystem-backed registry at all!

- Use GitHub Packages’ Docker registry as a cache (BuildKit) 🦑

Same as above, but with BuildKit.

Failed approaches 💥

- Approach 🤯, cache /var/lib/docker with actions/cache, failed because of many permission errors when generating the cache. Even with a sudo chmod, a proper cache could not be created.

- Approach 🦑, use GitHub Packages as a cache (BuildKit), failed because, at the time of writing, GitHub Package Registry did not support requesting a cache manifest. Subsequent builds fail with this message when trying to reuse a cached image:

ERROR: httpReaderSeeker: failed open: could not fetch content descriptor

So we are left with 4 caching approaches (📦, 🐳, 🐋, 🐙), plus the 2 baselines (🤷‍♂️ and 🤷‍♀️).

Image setup 🏛

When optimizing builds, we can reduce the time it takes to run docker build 🤩, but unfortunately some overhead is introduced 😞.

Overhead time includes:

- restoring a saved cache (approaches 📦, 🐳, 🐋);

- importing the image from the tarball restored from the cache (approach 📦);

- running a local Docker registry (approaches 🐳, 🐋);

- pulling from a Docker registry (approaches 🐳, 🐋, 🐙);

- exporting the built image to a tarball (approach 📦);

- pushing an image to a registry (approaches 🐳, 🐋, 🐙); and

- saving a cache (approaches 📦, 🐳, 🐋).

From my experience,

- The build time grows mostly with project complexity, i.e. the CPU time to build.

- The overhead time grows mostly with the size of things being cached, i.e. the IO time to serialize and transmit stuff.

For this experiment, we want an image that’s large enough for both the build time and the overhead to show up clearly in our measurements.

This resulted in the following Dockerfile, which generates a somewhat bloated image 🤪:

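```dockerfile
# Deliberately bloated: several large RUN layers, so that both the build time
# and the cache size are big enough to measure.
FROM node:12
RUN yarn create react-app my-react-app
RUN cd my-react-app && yarn build
RUN npm install -g @vue/cli && (yes | vue create my-vue-app --default)
RUN cd my-vue-app && yarn build
RUN mkdir -p my-tests && cd my-tests && yarn add playwright
```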

Methodology 🔬

I created a GitHub Actions workflow which runs all the approaches. I ran them 20 times.

- 10 times, each time changing the Dockerfile, which invalidates the cache (actions/cache). This is the cache MISS scenario.

- 10 times, each time keeping the Dockerfile the same. This is the cache HIT scenario.

After that, the results for each approach and scenario were averaged.

There are a few exceptions:

- The baselines don't have a cache, so all 20 builds count as cache MISS.

- Approach 🐙 uses an external registry, so images are cached and invalidated layer by layer. Due to the way I set up this experiment, all 20 builds ended up being cache HITs. I am too lazy to perform 10 more builds, so I assume the cache MISS scenario takes as long as the no-cache baseline plus the time it takes to push the image the first time.

Results 📊

After all the builds had run, I used the GitHub Actions API to retrieve the timing of each step. I threw the results into Google Sheets, built some pivot tables, and summarized the results.
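The post doesn't show how the timings were fetched. Here is a sketch of one way to do it. It assumes the GitHub CLI (gh), which is preinstalled on GitHub-hosted runners, and the REST endpoint that lists a workflow run's jobs, each with per-step started_at and completed_at timestamps. The job ids under needs are hypothetical.

```yaml
# Hypothetical extra job under `jobs:`; list the ids of the jobs to time in `needs`.
timings:
  needs: [no-cache, no-cache-buildkit]
  if: always()            # collect timings even if one of the measured jobs failed
  runs-on: ubuntu-latest
  permissions:
    actions: read         # needed to read this run's jobs through the API
  steps:
    - name: Print start and end time of every step in this run
      env:
        GH_TOKEN: ${{ github.token }}
      run: |
        # One tab-separated line per step: job name, step name, start, end.
        gh api --paginate \
          "repos/${{ github.repository }}/actions/runs/${{ github.run_id }}/jobs" \
          --jq '.jobs[] | .name as $job | .steps[] | "\($job)\t\(.name)\t\(.started_at)\t\(.completed_at)"'
```

The tab-separated output can be pasted into a spreadsheet for a pivot-table summary like the one described above.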

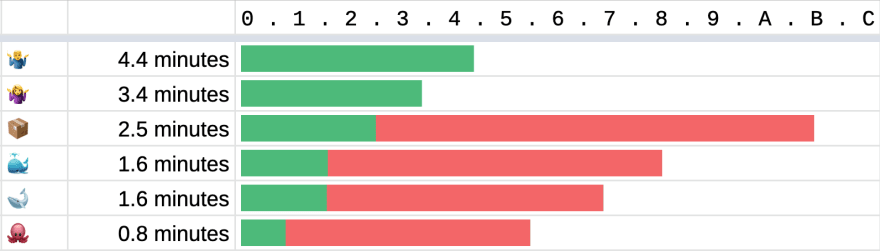

🎖 The showdown

The green bar represents the time taken in the cache HIT scenario. The red bar represents the extra time the cache MISS scenario costs on top of that.

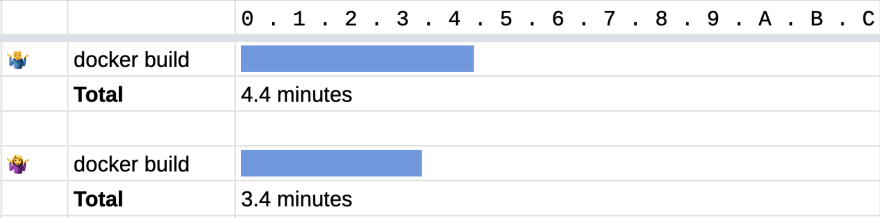

🤷‍♂️ The baselines 🤷‍♀️

You can see that just using BuildKit, without any cache, already gives a performance gain: one whole minute saved.
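For reference, here is a minimal sketch of the two baselines as separate jobs, so neither benefits from the other's local layers. The job ids and the tag my-image:latest are placeholders. Since Docker 23.0, docker build on Linux uses BuildKit by default, so on current runners the non-BuildKit baseline has to opt out explicitly with DOCKER_BUILDKIT=0. This assumes the runner's Docker still ships the deprecated classic builder.

```yaml
jobs:
  no-cache:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Build with the classic builder, nothing cached
        env:
          DOCKER_BUILDKIT: 0   # force the pre-BuildKit builder
        run: docker build -t my-image:latest .
  no-cache-buildkit:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Build with BuildKit, nothing cached
        env:
          DOCKER_BUILDKIT: 1
        run: docker build -t my-image:latest .
```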

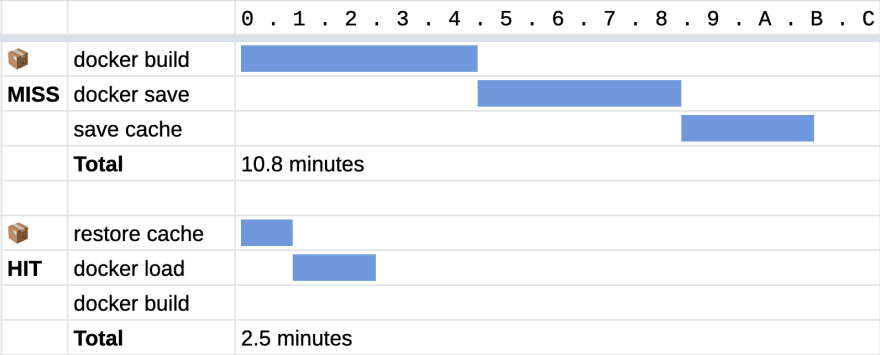

📦 The tarball

The cache size is 846 MB. It turns out that docker save is very slow, and uploading that tarball to the cache also takes a long time.
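Here is a sketch of roughly what the 📦 steps look like, under a few assumptions. The cache key is derived from the Dockerfile hash, matching the methodology where changing the Dockerfile invalidates the cache. The path /tmp/docker-image and the tag are placeholders. DOCKER_BUILDKIT=0 keeps the classic builder, since this method does not work with BuildKit. actions/cache only uploads at the end of the job when the exact key missed, so the export step is skipped on a hit.

```yaml
steps:
  - uses: actions/checkout@v4
  - name: Restore the image tarball
    id: cache
    uses: actions/cache@v4
    with:
      path: /tmp/docker-image
      key: docker-tarball-${{ hashFiles('Dockerfile') }}
  - name: Import the cached image
    if: steps.cache.outputs.cache-hit == 'true'
    run: docker load -i /tmp/docker-image/image.tar
  - name: Build, reusing layers of the imported image
    env:
      DOCKER_BUILDKIT: 0   # the classic builder can use a loaded image as --cache-from
    # On a miss the image doesn't exist yet; the classic builder just skips it.
    run: docker build --cache-from my-image:latest -t my-image:latest .
  - name: Export the image so actions/cache uploads it after the job
    if: steps.cache.outputs.cache-hit != 'true'
    run: |
      mkdir -p /tmp/docker-image
      docker save my-image:latest -o /tmp/docker-image/image.tar
```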

🐳 The local registry

The cache size is 855 MB. It turns out that a docker push to a filesystem-backed registry running on localhost (inside Docker) is faster than docker save. The same is true for docker pull versus docker load.
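Here is a sketch of the 🐳 steps, assuming the stock registry:2 image, which stores its data under /var/lib/registry. That directory is bind-mounted from a host path so actions/cache can save and restore it. The host path, port, image name, and readiness loop are illustrative choices, not taken from the original workflow.

```yaml
steps:
  - uses: actions/checkout@v4
  - name: Restore the registry's storage directory
    uses: actions/cache@v4
    with:
      path: /tmp/docker-registry
      key: docker-registry-${{ hashFiles('Dockerfile') }}
  - name: Start a filesystem-backed registry on localhost:5000
    run: |
      docker run -d -p 5000:5000 --name registry \
        -v /tmp/docker-registry:/var/lib/registry registry:2
      # Wait (up to ~30 s) until the registry API answers.
      for i in $(seq 1 30); do
        curl -fs http://localhost:5000/v2/ > /dev/null && break
        sleep 1
      done
  - name: Pull the previous image (fails harmlessly on a cache miss)
    run: docker pull localhost:5000/my-image:latest || true
  - name: Build, reusing layers of the pulled image
    env:
      DOCKER_BUILDKIT: 0   # classic builder, as in this approach
    run: docker build --cache-from localhost:5000/my-image:latest -t localhost:5000/my-image:latest .
  - name: Push, so the post-job cache save includes the new layers
    run: docker push localhost:5000/my-image:latest
```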

🐋 The local registry, with BuildKit

The cache size is 855 MB. When you run docker build, BuildKit pulls the image from the local registry itself, so unlike approach 🐳, there is no separate docker pull step.
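Here is a sketch of how the 🐋 build step could differ. The cache-restore and registry-start steps are the same as for approach 🐳 and are omitted, and there is no docker pull step. The BUILDKIT_INLINE_CACHE=1 build argument is my assumption about the setup: BuildKit only reuses a registry image through --cache-from when that image carries inline cache metadata.

```yaml
# ...restore-cache and start-registry steps as for approach 🐳, without docker pull...
- name: Build with BuildKit, using the registry image as cache
  env:
    DOCKER_BUILDKIT: 1
  run: |
    # BuildKit fetches only the layers it can actually reuse from localhost:5000.
    # BUILDKIT_INLINE_CACHE=1 embeds cache metadata in the new image so the
    # next run can use it as --cache-from.
    docker build \
      --cache-from localhost:5000/my-image:latest \
      --build-arg BUILDKIT_INLINE_CACHE=1 \
      -t localhost:5000/my-image:latest .
- name: Push the image (with its inline cache metadata) to the local registry
  run: docker push localhost:5000/my-image:latest
```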

🐙 The GitHub Package Registry

Although we cannot take advantage of BuildKit, using an external registry means that builds can be cached on a layer-by-layer basis. Out of all approaches I’ve tried, this approach gives the best result. 🏆

However, you also get a “build-cache” package listed in your repository’s published packages. 😂
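A sketch of approach 🐙 follows. The post used the original GitHub Packages Docker registry (docker.pkg.github.com), which has since been superseded by the GitHub Container Registry. So this sketch assumes ghcr.io instead, and it assumes the workflow's GITHUB_TOKEN is allowed to publish the package. ghcr.io/my-org/build-cache is a placeholder and must be lowercase.

```yaml
jobs:
  build:
    runs-on: ubuntu-latest
    permissions:
      contents: read
      packages: write            # lets GITHUB_TOKEN push to ghcr.io
    env:
      CACHE_IMAGE: ghcr.io/my-org/build-cache:latest   # placeholder
      DOCKER_BUILDKIT: 0         # classic builder, as in the post
    steps:
      - uses: actions/checkout@v4
      - uses: docker/login-action@v3
        with:
          registry: ghcr.io
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}
      - name: Pull the previous image (fails harmlessly the first time)
        run: docker pull "$CACHE_IMAGE" || true
      - name: Build, reusing any layers that still match
        run: docker build --cache-from "$CACHE_IMAGE" -t "$CACHE_IMAGE" .
      - name: Push, so the next run can reuse the layers
        run: docker push "$CACHE_IMAGE"
```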

An example closer to the real world

To test the potential gain in practice, I tried running docker-compose build on DEV Community’s repository. Without any caching, building the web image took 9 minutes and 5 seconds. Using GitHub Package Registry as a cache, the build time dropped to 37 seconds.
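One way to point docker-compose build at a registry image is the cache_from build option in the Compose file. The post does not say how the DEV build was wired up, so the service and image names below are hypothetical.

```yaml
# docker-compose.yml (hypothetical service and image names)
version: "3.4"   # cache_from needs file format 3.2+ on docker-compose v1; Compose v2 treats this key as obsolete
services:
  web:
    build:
      context: .
      # Images whose layers the builder may reuse. The classic builder only
      # sees images already present locally, so pull this one before building.
      cache_from:
        - ghcr.io/my-org/web:latest
    image: ghcr.io/my-org/web:latest
```

In a workflow, this would be preceded by a docker pull ghcr.io/my-org/web:latest || true step and followed by docker-compose push web.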

Conclusions

You can find the code implementing each of these approaches here:

When it comes to improving build performance, there are many articles, each recommending different approaches with different examples. It’s hard to see how the approaches fare against one another.

While working on this, I also noticed that trying different approaches side by side leads to discovering new ones. For example, had I not tried Approach 🐳 (local Docker registry), I wouldn’t have thought “Oh! GitHub Package Registry is a thing! Can I use that and skip Actions’ cache entirely? Let’s try!” and come up with Approach 🐙.

So, I’d say this is the kind of article I wish had existed when I wanted to set up a CI workflow with Docker. I wish we had more showdowns for more approaches and more tools.

Thanks for reading!

Top comments (15)

Thanks a lot for this article and the research!

I've spent some time on the topic as well and I thought I'd share my thoughts/findings here.

As someone already pointed out before, the official Docker build actions changed significantly since this article was released. Namely, they split up the action into smaller ones (they separated login and some setup actions) and added buildx support.

I added a buildx example using GitHub's cache action to the linked repository based on the official buildx cache example: github.com/dtinth/github-actions-d...

After running it a couple times, it turned out to be as fast as the GitHub Package Registry approach. To be completely fair: I couldn't actually run the GPR example myself, because it became outdated since GitHub introduced the new Container Registry, but I compared it to the numbers posted in this article.

So it looks like using buildx with GitHub cache can be a viable alternative.

Thinking further about the method used to compare the approaches, I came up with the following theories:

- Using ${{ hashFiles('Dockerfile') }} in the cache key might have an adverse effect on performance when using the GitHub cache. Dockerfiles usually don't change, but code and dependencies do, so relying on the Dockerfile in the cache key will result in invalid caches most of the time. When working with large caches, downloading the cache itself takes time as well, which is wasted when the cache is invalid. This is why the official example uses the commit SHA with a fallback key instead. Builds for the same SHA will always be fast. When no cache is found, the latest cache matching the fallback key is used.

I haven't actually verified these thoughts, hence they are just theories, but I strongly suspect they are correct, so take them into account when choosing a caching strategy.

Last, but not least: as I pointed out above, the Docker Package Registry example became outdated. See this announcement blog post for more details.
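Here is a sketch of the SHA-with-fallback key scheme from the comment above, combined with buildx's local cache export (also what the next comment proposes). It is modeled on Docker's documented local-cache example for GitHub Actions. The tag and the action major versions are assumptions.

```yaml
steps:
  - uses: actions/checkout@v4
  - uses: docker/setup-buildx-action@v3
  - name: Restore the BuildKit cache
    uses: actions/cache@v4
    with:
      path: /tmp/.buildx-cache
      # An exact hit only happens when re-running the same commit...
      key: ${{ runner.os }}-buildx-${{ github.sha }}
      # ...otherwise the most recently saved cache with this prefix is restored.
      restore-keys: |
        ${{ runner.os }}-buildx-
  - uses: docker/build-push-action@v5
    with:
      context: .
      push: false
      tags: my-image:latest   # placeholder tag
      cache-from: type=local,src=/tmp/.buildx-cache
      # Export into a fresh directory so stale layers don't pile up in the cache.
      cache-to: type=local,dest=/tmp/.buildx-cache-new,mode=max
  - name: Replace the old cache directory with the new one
    run: |
      rm -rf /tmp/.buildx-cache
      mv /tmp/.buildx-cache-new /tmp/.buildx-cache
```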

Great article and replies. At the risk of sounding stupid: How do you get docker-compose to use the cache? It doesn't have a --cache-from option.

Hi all,

Great article. I have been experimenting a bit while porting some workflows from GitLab.

What about this? Using buildx's ability to export the cache to local files, and then caching those files with the standard GitHub Actions cache.

Example:

gist.github.com/alvistar/5a5d241bf...

Thanks for your comment. I have never used buildx before, and I saw that it was an experimental feature, so it might be subject to change as the feature develops.

If you are curious how it fares against the other approaches in this article, I would encourage you to try adding the buildx approach to the showdown workflow file. This workflow file contains all the approaches used in this article.

When you fork, edit, and commit, GitHub Actions runs the workflow file automatically. Each approach runs in a separate job, and the jobs run in parallel. So it’s like starting a race, and you can see how long each approach takes.

Let me know if you find an interesting result!

Fantastic article. Thank you for going to the effort of testing all these methods. I've implemented the GitHub Package Registry solution and it cut our Actions run times by 50%, which is very impressive.

Happy to hear it is useful to you! Cheers 😁

Awesome insight. Although I'm not sure what I would need Docker builds for, other than running them locally. But I'll keep this in mind when the time comes. (Perhaps this is for CI, which I have yet to explore. 😁 I'd better start learning to test.)

Hi. Thanks for putting all the legwork into this. Just a heads up that—according to my cursory search (so don't quote me on this)—GHCR + BuildKit is now possible: github.community/t/cache-manifest-...
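If the comment above is right, approach 🦑 could be revived with buildx's registry cache backend, which stores the cache manifest under its own tag. Here is a sketch, assuming GHCR accepts that cache manifest and the workflow's GITHUB_TOKEN may publish the package. ghcr.io/my-org/my-image:buildcache is a placeholder.

```yaml
jobs:
  build:
    runs-on: ubuntu-latest
    permissions:
      contents: read
      packages: write            # lets GITHUB_TOKEN push the cache to ghcr.io
    steps:
      - uses: actions/checkout@v4
      - uses: docker/setup-buildx-action@v3
      - uses: docker/login-action@v3
        with:
          registry: ghcr.io
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}
      - uses: docker/build-push-action@v5
        with:
          context: .
          push: false
          tags: my-image:latest  # placeholder tag
          # The cache lives in a dedicated tag, separate from any published image.
          cache-from: type=registry,ref=ghcr.io/my-org/my-image:buildcache
          cache-to: type=registry,ref=ghcr.io/my-org/my-image:buildcache,mode=max
```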

Thank you, well written! I was trying to build images with BuildKit, store them in GitHub Packages, and then use them as a cache in later builds. But I bumped into a problem with this approach. If you are a team of people pushing branches directly to this repo, it's alright. But if someone else forks the repo and submits a PR, their pull request CI run will not have access to your repository secrets. To use GitHub Packages, ideally you'd use the GITHUB_TOKEN secret, but it's not available from forked repositories. This is often discussed; see this thread, for example: github.community/t/token-permissio...

I am planning to try my luck with github.com/actions/cache instead.

Thanks for doing all the research.

I built a GitHub Action to cache images pulled from Docker Hub using Podman. It saves about 30 seconds for two images that are about 1.3 GB.

github.com/jamesmortensen/cache-co...

I started to use this: github.com/whoan/docker-build-with...

Does the job very well :)

Great article. Thanks for documenting your findings. I still wish that "Conclusion" had more of a conclusion to it. What do you recommend? What are you doing with your images?

I've registered at dev.to only to say this is incredible research!