This tutorial shows how to get started building Deep Learning baselines with PyTorch Lightning Flash.

What is PyTorch Lightning Flash?

PyTorch Lightning Flash is a new library from the creators of PyTorch Lightning to enable quick baselining of state-of-the-art Deep Learning tasks on new datasets in a matter of minutes.

PyTorchLightning/lightning-flash

Consistent with PyTorch Lightning’s goal of getting rid of boilerplate, Flash aims to make it easy to train, fine-tune, and run inference with deep learning models.

Flash is built on top of PyTorch Lightning and abstracts away the unnecessary boilerplate of common Deep Learning tasks, which makes it ideal for:

- Data science

- Kaggle Competitions

- Industrial AI

- Applied research

Flash also provides seamless support for distributed training and inference of Deep Learning models.

Because Flash is built on top of PyTorch Lightning, you can override parts of your Task code with Lightning or plain PyTorch as you learn more, so you can find the right level of abstraction for your scenario.

For the remainder of this post, I will walk you through the 5 steps of building Deep Learning applications with an inline Flash Task code example.

Creating your first Deep Learning Baseline with Flash

All code for this tutorial can be found in the Flash repo under Notebooks.

I will present five repeatable steps that you will be able to apply to any Flash Task on your own data.

- Choose a Deep Learning Task

- Load Data

- Pick a State of the Art Model

- Fine-tune the Task

- Predict

Now let’s get started!

Step 1: Choose a Deep Learning Task

The first step of the applied Deep Learning process is to choose the task we want to solve. Out of the box, Flash supports common deep learning tasks such as image, text, and tabular classification. It also supports more complex scenarios such as image embedding, object detection, document summarization, and text translation. New tasks are being added all the time.

In this tutorial, we’ll build a Text Classification model using Flash for sentiment analysis on movie reviews. The model will be able to tell us that a review such as “This is the worst movie in the history of cinema.” is negative and that a review such as “This director has done a great job with this movie!” is positive.

To get started, let’s first install Flash (`pip install lightning-flash`) and import the required Python libraries for the Text Classification task.

Step 2: Load Data

Now that we have installed Flash and imported our dependencies, let’s talk about data. To build our first model, we will use the IMDB movie review dataset, stored in CSV format. Here are some sample reviews from the dataset:

review, sentiment

"I saw this film for the very first time several years ago - and was hooked up in an instant. It is great and much better than J. F. K. cause you always have to think 'Can it happen to me? Can I become a murderer?' You cannot turn of the TV or your VCR without thinking about the plot and the end, which you should'nt miss under any circumstances.", positive

"Winchester 73 gets credit from many critics for bringing back the western after WWII. Director Anthony Mann must get a lot of credit for his excellent direction. Jimmy Stewart does an excellent job, but I think Stephen McNalley and John McIntire steal the movie with their portrayal of two bad guys involved in a high stakes poker game with the treasured Winchester 73 going to the winner. This is a good script with several stories going on at the same time. Look for the first appearance of Rock Hudson as Young Bull. Thank God, with in a few years, we would begin to let Indians play themselves in western films. The film is in black and white and was shot in Tucson Arizona. I would not put Winchester 73 in the category of Stagecoach, High Noon or Shane, but it gets an above average recommendation from me.<br /><br />.", positive

The first thing we need to do is download the dataset.
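If you would rather fetch the files yourself, here is a minimal standard-library sketch of this step. It downloads a zip archive and extracts it into a local folder. The helper name `download_and_extract` is hypothetical, and the dataset URL is left as a parameter. Flash also ships its own download helper, but its import path has moved between releases, so check the docs for your installed version.

```python
import os
import urllib.request
import zipfile
from typing import List


def download_and_extract(url: str, dest_dir: str) -> List[str]:
    """Download a zip archive from `url` and extract it into `dest_dir`.

    Hypothetical helper for illustration. Returns the names of the
    extracted archive members.
    """
    os.makedirs(dest_dir, exist_ok=True)
    # Keep the archive next to the extracted files; fall back to a generic
    # name if the URL does not end in a file name.
    archive_name = os.path.basename(url) or "download.zip"
    archive_path = os.path.join(dest_dir, archive_name)
    # Skip the download if the archive is already on disk.
    if not os.path.exists(archive_path):
        urllib.request.urlretrieve(url, archive_path)
    with zipfile.ZipFile(archive_path) as archive:
        archive.extractall(dest_dir)
        return archive.namelist()


# Example (IMDB_URL is a placeholder for the dataset link used in the Flash notebook):
# download_and_extract(IMDB_URL, "data/")
```

Checking for an existing archive first means re-running the notebook does not download the dataset again.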

Once we have downloaded the IMDB dataset, Flash provides a convenient TextClassificationData module. It handles the complexity of loading text classification data stored in CSV format and converting it into the representation that Deep Learning models need for training.
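`TextClassificationData` does this work for you. Its constructors and arguments have changed between Flash releases, so the sketch below is not its API. It only illustrates, with the standard library, the first part of that conversion: reading a `review`/`sentiment` CSV like the sample reviews above and turning string labels into integer class ids. The function name `load_text_classification_csv` and the `<br />` clean-up are assumptions made for illustration. Turning the text itself into model inputs is left to the backbone's tokenizer.

```python
import csv
import io
from typing import Dict, List, Tuple


def load_text_classification_csv(
    source, input_col: str = "review", target_col: str = "sentiment"
) -> Tuple[List[str], List[int], Dict[str, int]]:
    """Read a review/sentiment CSV and encode string labels as integer ids.

    `source` is any text-mode file-like object. The default column names
    match the IMDB CSV.
    """
    # skipinitialspace also handles headers and rows written as "...", positive
    reader = csv.DictReader(source, skipinitialspace=True)
    texts: List[str] = []
    raw_labels: List[str] = []
    for row in reader:
        # IMDB reviews contain HTML line breaks; replace them with spaces.
        texts.append(row[input_col].replace("<br />", " ").strip())
        raw_labels.append(row[target_col].strip())
    # Sort the label names so every run assigns the same ids.
    label_to_id = {name: i for i, name in enumerate(sorted(set(raw_labels)))}
    labels = [label_to_id[name] for name in raw_labels]
    return texts, labels, label_to_id


sample = io.StringIO(
    "review, sentiment\n"
    '"This director has done a great job with this movie!", positive\n'
    '"This is the worst movie in the history of cinema.", negative\n'
)
texts, labels, label_to_id = load_text_classification_csv(sample)
print(label_to_id)  # {'negative': 0, 'positive': 1}
print(labels)  # [1, 0]
```

Sorting the label names keeps the label-to-id mapping stable, which matters when you later map predictions back to `positive` or `negative`.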

Step 3: Pick a State-of-the-Art Model for Our Task

Once we have loaded our dataset, we need to pick a model to train. Each Flash Task comes preloaded with support for State of the Art model backbones for you to experiment with instantly.

By default, the TextClassifier task uses the tiny-bert model, a small, fast backbone that gives a reasonable baseline on many text classification tasks. You can also use any text classification model from the Hugging Face Transformers model repository, or even bring your own. In Flash, loading a backbone takes just one line of code.

Step 4: Fine-tune the Task

Now that we have chosen the model and loaded our data, it’s time to train the model on our classification task, which takes just two lines of code.

Because the Flash Trainer is built on top of PyTorch Lightning, distributing training across multiple GPUs, cluster nodes, and even TPUs is seamless.

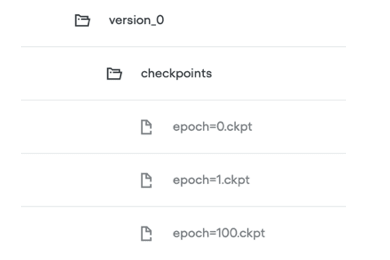

Additionally, you get many features that are otherwise difficult to implement, built in without any hassle. These include automatic model checkpointing and logging integrations with platforms such as TensorBoard, Neptune.ai, and MLflow.

Tasks also come with built-in support for standard task metrics. For our classification task, the Flash Trainer can evaluate the model on standard classification metrics such as precision, recall, and F1 with just one line of code.
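Flash computes these metrics for you, but it helps to have the definitions at hand when reading the results. Here is a plain-Python sketch of binary precision, recall, and F1 for the positive class. It illustrates the formulas only and is not Flash's own metric code; the function name `precision_recall_f1` is hypothetical.

```python
from typing import Sequence, Tuple


def precision_recall_f1(
    y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1
) -> Tuple[float, float, float]:
    """Binary precision, recall and F1, treating `positive` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false positives
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # false negatives
    # Guard against division by zero when a class never appears.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# 1 = positive review, 0 = negative review
y_true = [1, 1, 0, 0, 1]
y_pred = [1, 0, 0, 1, 1]
print(precision_recall_f1(y_true, y_pred))  # each value is 2/3 here
```

F1 is the harmonic mean of precision and recall. It only gets close to 1 when the model both avoids false alarms and finds most of the positive reviews.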

Trained Task models can be checkpointed with the Flash Trainer and shared seamlessly.

Step 5: Predict

Once we’ve finished training our model, we can use it to make predictions on new data with just one line of code.
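Under the hood, a classifier's prediction step turns the model's raw per-class scores (logits) into a label. Flash takes care of this for you. The sketch below only illustrates the idea in plain Python with a softmax followed by an argmax. The logits are made-up numbers, and the mapping assumes `negative` is class 0 and `positive` is class 1.

```python
import math
from typing import Dict, List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one row of class scores."""
    largest = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(x - largest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decode_predictions(
    batch_logits: Sequence[Sequence[float]], id_to_label: Dict[int, str]
) -> List[Tuple[str, float]]:
    """Map each row of raw scores to (predicted label, its probability)."""
    results = []
    for logits in batch_logits:
        probs = softmax(logits)
        best = max(range(len(probs)), key=probs.__getitem__)  # argmax
        results.append((id_to_label[best], probs[best]))
    return results


# Made-up scores for the two example reviews from Step 1.
id_to_label = {0: "negative", 1: "positive"}
batch_logits = [
    [2.0, -1.0],  # "This is the worst movie in the history of cinema."
    [-0.5, 3.0],  # "This director has done a great job with this movie!"
]
for label, prob in decode_predictions(batch_logits, id_to_label):
    print(label, round(prob, 3))
```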

Additionally, we can use the Flash Trainer to scale and distribute model inference for production.

Scaling inference to 32 GPUs takes just one line of code.
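Spreading inference across GPUs mostly comes down to giving each device its own slice of the inputs. Lightning, and therefore Flash, typically handles this by wrapping the dataloader in a distributed sampler. The standard-library sketch below only shows the round-robin split that this idea boils down to. The `shard` function is hypothetical. Real samplers also shuffle and may pad the data so every worker gets the same number of items.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def shard(items: Sequence[T], world_size: int, rank: int) -> List[T]:
    """Return the items that worker `rank` (out of `world_size`) should process.

    Round-robin assignment: worker r gets items r, r + world_size, r + 2 * world_size, ...
    """
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in [0, world_size)")
    return list(items[rank::world_size])


reviews = [f"review {i}" for i in range(10)]
world_size = 4  # e.g. 4 GPUs; the same logic applies to 32
for rank in range(world_size):
    print(rank, shard(reviews, world_size, rank))
```

Each worker runs the same model on its own shard, and the per-worker predictions are then gathered back together.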

You can even export models to ONNX or TorchScript for inference on edge devices.

Putting it All Together

How fun was that! The five steps above condense into one short script, and the same pattern applies to any Flash deep learning task.

Next Steps

Now that you have the tools to get started with building quick Deep Learning Baselines, I can’t wait for you to show us what you can build. If you liked this tutorial, feel free to Clap Below and give us a Star on GitHub.

We are working tirelessly on adding more Flash tasks, so if you have any must-have tasks, comment below or reach out to us on Twitter @pytorchlightnin or in our Slack channel.

About the Author

Aaron (Ari) Bornstein is an AI researcher with a passion for history, engaging with new technologies and computational medicine. As Head of Developer Advocacy at Grid.ai, he collaborates with the Machine Learning Community to solve real-world problems with game-changing technologies that are then documented, open-sourced, and shared with the rest of the world.
