The Question

What if we want to understand the impact of the tweet by a user on particular topic. let's say a user tweeted about a particular product like shoe laces on twitter, how likely are his followers going to buy that product based on his tweet.

let's analyze this scenario using machine learning by constructing a simple model. we'll get data from twitter directly and try to filter and clean the data to train our model. let's see how much can we learn from this.

We'll break down the entire process into the following steps:

- In

Part 1we'll focus on gathering and cleaning the data,

Understanding the Flow

Gathering Data

The main aspect of analyzing twitter data is to get the data. How can we get twitter data in large amount, like 10 million tweets on a particular topic.

- we can access twitter data from Twitter's Developer access token authorization.

- we can scrape twitter directly and get the data.

Accessing from twitter's developer access token

you can simply apply and get access token, which is useful for getting tweets using twitter api. we can use tweepy for that.

The Problem

The problem with using tweepy and twitter's api is, there is a rate limit of number of twitter calls from a particular user per hour. if we want large amount of data like a 10 million tweets this will take forever. Searching through tweets between a particular period was not effective while using twitter's api for me. Under these circumstances I've decided to scrape the twitter's data using an amazing library in python called twitterscraper.

Scraping Twitter directly

let's install twitterscraper

The best thing about twitterscraper is we can give the topic name, period and limit of tweets and the output format in which the tweets are to be obtained.

for the sake of understanding let's download 1000 tweets and try to clean them.

# twitterscraper <topic> --limit <count> --lang <en> --output filename.json

twitterscraper python --limit 1000 --lang en --output ~/backups/today\'stweets.json

the output format from the twitterscraper is in the form of json. let's try to convert the data we've obtained into a dataframe and clean it.

Cleaning Data

loading the downloaded json to a pandas dataframe

import codecs

import json

import pandas as pd

pd.options.mode.chained_assignment = None

# this enables us for rewriting dataframe to previous variable

from typing import List, Dict

json_twitter_data = pd.read_json(open("<path to json file>"))

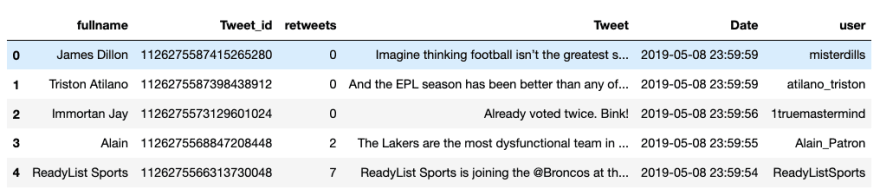

json_twitter_data.head()

let's clean the data now, from the head() we can eliminate url, html and replies and also likes for now. we'll get back to likes afterwards.

# dropping html, url, likes and replies

json_twitter_data.drop(columns=['html', 'url', 'likes', 'replies'], inplace=True)

We need to add user and fullname columns. and get user_ids of the user.

# renaming column names

json_twitter_data.columns = ['fullname', 'Tweet_id', 'retweets', 'Tweet', 'Date', 'user']

twitter_data_backup = json_twitter_data

json_twitter_data.head()

- Note the

retweetcolumn in thedataframewe can assume that the post having retweets will have larger impact on the users. so let's filter the tweets with tweets more thanzero

json_twitter_data = json_twitter_data[json_twitter_data.retweets != 0]

json_twitter_data.head()

- in the data we can have one user tweeting multiple tweets, we need to seperate users based on the tweet count.

# first remove date column

twitter_data_with_date = json_twitter_data

json_twitter_data.drop(columns=['Date', 'Tweet'], inplace=True)

json_twitter_data.head()

- now group the dataframe based on

users

# rather than dropping duplicated we can `groupby` in pandas

# twitter_data.duplicated(subset='user', keep='first').sum()

tweet_count = twitter_data.groupby(twitter_data.user.tolist(),as_index=False).size()

# tweet_count['mastercodeonlin']

-

tweet_countis simply a dictionary and we can access now, the tweets count of a particular user

- we can add the no of tweets column to the dataframe

json_twitter_data['no_of_tweets'] = json_twitter_data['user'].apply(lambda x: get_tweet_count(x))

twitter_data_without_tweet_count = json_twitter_data.drop_duplicates(subset='user', keep="first")

twitter_data_without_tweet_count.reset_index(drop=True, inplace=True)

twitter_data_without_tweet_count.head()

In the next part we'll focus on getting the user_ids of particular user, and analyzing the dataframe by converting it into numerical format.

Stay tuned, we'll have some fun...

Top comments (4)

Scraping the Twitter website is against the Terms of Service and may lead to your IP address being blocked, so I would not recommend this method. The API also provides much more detail, depending on what you're trying to achieve.

waiting for the Part - 2 of this ..

i wanna ask, do know how to solve my problem? i couldn't get the data after scaping.

the result are always:

"INFO:twitterscraper:Got 0 tweets (0 new)

i use Windows 10. thanks before

Hi monitayusriabbas,

sorry for the late reply.. I'm wondering if could give me the Python version you are using and the query for the tweet.

you could also search here if you need more info

github.com/taspinar/twitterscraper...