There is a great book called “High Performance Browser Networking” by Ilya Grigorik that covers the fundamentals of networking and data transfer optimization. In this book, the author writes the following about CDNs:

Content delivery network (CDN) services provide many benefits, but chief among them is the simple observation that distributing the content around the globe, and serving that content from a nearby location to the client, enables us to significantly reduce the propagation time of all the data packets.

We may not be able to make the packets travel faster, but we can reduce the distance by strategically positioning our servers closer to the users! Leveraging a CDN to serve your data can offer significant performance benefits.

When you think about it, you will probably agree that CDNs improve the user experience because they speed up website loading. But... wait a minute! How does a CDN actually do it?

You might say, “Well, when a user loads files from a CDN, they are served from the server nearest to that user. Easy-peasy.” Yeah, but how does that work if we use one and only one domain name for the content?

At Uploadcare, we offer our clients a CDN along with other optimizations, and we know a lot about the technologies that make content delivery fast, reliable, and secure. In this short series of articles, we are going to share this knowledge with you and explain the magic behind CDNs.

Wiring the things up

Let’s start with basic networking. Say we have two computers that need to communicate with each other:

The solution is simple — wire them and the job is done:

Yeah, it works. When computer A wants to send a message to computer B, it transfers the data through the port the cable is connected to. It’s easy to detect when the communication channel is occupied, because there are only two users of this channel. For the same reason, there is no addressing problem: a message can only be meant for the computer at the other end of the cable.

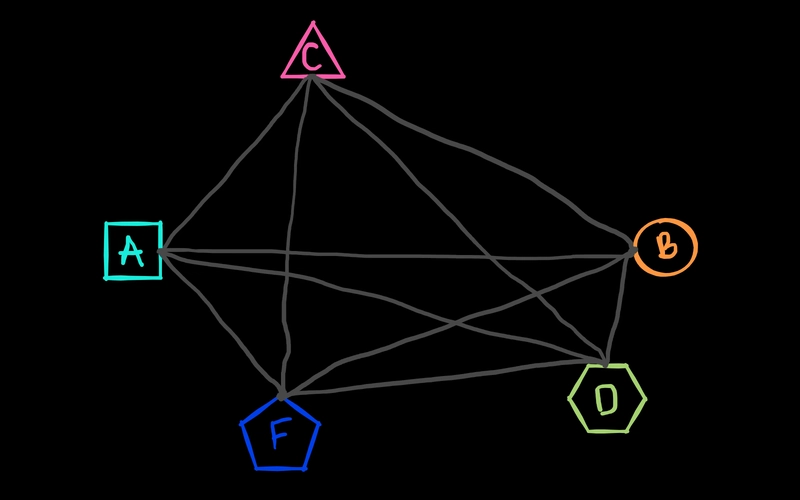

However, this solution is hard to scale. What should we do when we have five computers instead of two? Sure, we can connect them to each other, but...

You can see that communication quickly becomes a problem. It’s easier to summon Satan with these wires than to build a clear network. Not only do we need a lot more cables now, but each computer also needs four ports. Moreover, when we want to add a new computer to this network, we have to find a way to add a new port to every existing computer! The topology of this network is way too complex.
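To put numbers on this, here is a small Python sketch of the arithmetic behind a fully connected network (the function names are ours, purely for illustration). With n computers, every pair needs its own cable, which gives n × (n - 1) / 2 cables, and each computer needs n - 1 ports.

```python
def full_mesh_cables(n: int) -> int:
    """Cables needed to wire each of n computers directly to every other one."""
    return n * (n - 1) // 2  # one cable per unordered pair of computers


def full_mesh_ports(n: int) -> int:
    """Ports each computer needs in a full mesh: one per other computer."""
    return n - 1


for n in (5, 6, 50):
    print(f"{n} computers: {full_mesh_cables(n)} cables, {full_mesh_ports(n)} ports each")
```

Going from five to six computers adds five new cables and one extra port on each of the five existing machines, which is exactly the scaling problem described above.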

In communication networks, topology is the arrangement of the network’s elements. There are physical and logical topologies: physical topology is the placement of the network’s components, while logical topology is how data flows within the network.

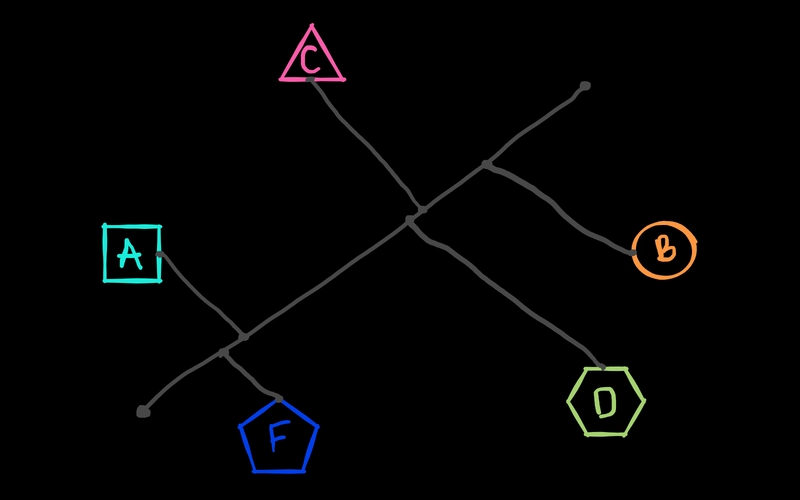

In our case, every device is connected to every other device, which means that we have a fully connected network, both physically and logically. To reduce the number of cables, we may choose a different topology. For example, each computer may be connected only to its neighbors on the left and on the right:

This is called a ring network. As you can see, this type of network needs far fewer cables, and each computer needs only two ports. The tradeoff is that data has to pass through intermediate computers on its way to the destination.

Even though relaying data through intermediate computers isn’t that bad, a ring network has a much more important flaw: the connection depends on every node being available. If one node of the ring breaks, the signal can’t be transferred properly.
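Here is a toy Python model of that flaw. It assumes a one-directional ring, where each node only forwards data to its right-hand neighbor; the names `ring_path` and `broken` are hypothetical, and a real ring might also be able to send traffic the other way around.

```python
from typing import Collection, List, Optional


def ring_path(n: int, src: int, dst: int,
              broken: Collection[int] = ()) -> Optional[List[int]]:
    """Nodes a message visits travelling around a one-way ring of n nodes
    (numbered 0..n-1) from src to dst, or None if a broken node is in the way."""
    path = [src]
    node = src
    while node != dst:
        node = (node + 1) % n  # assumption: data only moves to the right-hand neighbor
        if node in broken:
            return None        # a dead node cannot relay (or receive) the signal
        path.append(node)
    return path


print(ring_path(5, 0, 3))              # [0, 1, 2, 3]
print(ring_path(5, 0, 3, broken={2}))  # None
```

With every node alive, the message reaches node 3 after passing through nodes 1 and 2; as soon as node 2 fails, node 3 becomes unreachable from node 0.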

Different topologies solve different problems. For example, instead of a ring network, we might use one central wire to which all the computers are connected. That gives us a bus network:

Now our network no longer relies on the individual computers, but it breaks if the central wire (usually called a bus, a backbone, or a trunk) does not work properly. This type of network is harder to maintain, because you have to be able to check and repair every inch of the backbone.

To solve the maintenance problem described above, we may use a “helper device” that receives data from a computer and distributes it through the network. This device is called a hub, and the topology of this kind of network is called a star:

Even though this network may be broken by shutting down the hub, it’s much easier to maintain — instead of controlling a long cable, we have to control only one device. Also, it’s much easier to connect new nodes — just plug the cable into the hub, and it works.
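A hub’s job can be sketched in a few lines of Python. This is a simplified model with a made-up `Hub` class: whatever arrives on one port is repeated out of every other port, and it is up to each computer to decide whether the data was meant for it.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Hub:
    """Toy hub: data arriving on one port is repeated on all the other ports."""
    ports: Dict[int, List[str]] = field(default_factory=dict)  # port -> data received

    def plug(self, port: int) -> None:
        # Connecting a new node is just plugging a cable into a free port.
        self.ports[port] = []

    def receive(self, in_port: int, frame: str) -> None:
        for port, inbox in self.ports.items():
            if port != in_port:  # don't echo the data back to the sender
                inbox.append(frame)


hub = Hub()
for p in range(4):
    hub.plug(p)
hub.receive(0, "hello from the computer on port 0")
print({p: len(inbox) for p, inbox in hub.ports.items()})  # {0: 0, 1: 1, 2: 1, 3: 1}
```

Adding a fifth computer is a single `plug` call, and nothing changes for the existing machines. The flip side is also visible: every transfer goes through the one `Hub` object.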

In real life, we use star networks at home. All the devices are usually connected to one router that receives messages and distributes them when necessary. The Internet itself is a hybrid network made up of myriad smaller networks, each of which may use any physical or logical topology.

The Internet hierarchy

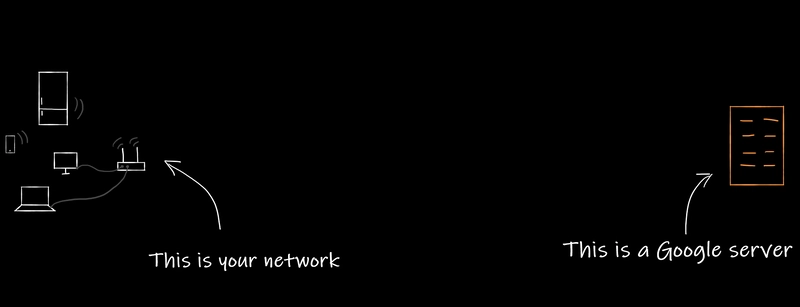

Alright, we have a network at home that may look like this:

But what happens at the next level? What path does your request to Google take? Let’s see.

Ultimately, your request will land on the computer that processes it, a Google server:

But the request doesn’t go straight to the Google server.

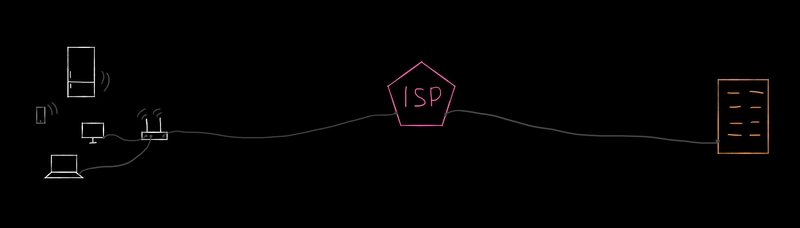

Your router connects you to a larger network owned by your Internet Service Provider (ISP). The same goes for Google’s computer:

Each of those ISPs maintains a network that serves many local networks, not only yours and Google’s.

If you are connected to the same ISP as the Google server, you’re lucky, because the data can stay inside the provider’s network, which is fast, secure, and reliable:

But in the real world, it is not possible to connect everyone through a single ISP. There are thousands of providers, and they all have to communicate with each other to make the Internet work properly.

So, an example closer to real life may look like this:

You might see the problem here. If every ISP had to connect directly to every other ISP, we would end up in the same situation as at the start of this article: tons of wire! That’s why ISPs connect through hubs called Internet Exchange Points, or IXPs.
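Here is a rough Python comparison. It makes the deliberately unrealistic assumption that a single IXP serves every ISP (in reality there are many IXPs, and some ISPs also link to each other directly); the function names are hypothetical. Direct links grow quadratically with the number of ISPs, while links to a shared exchange point grow linearly.

```python
def direct_links(isps: int) -> int:
    # Every ISP wired to every other ISP: a full mesh.
    return isps * (isps - 1) // 2


def ixp_links(isps: int) -> int:
    # Simplifying assumption: every ISP plugs once into one shared IXP (a star).
    return isps


for n in (10, 100, 1000):
    print(f"{n} ISPs: {direct_links(n)} direct links vs {ixp_links(n)} links to an IXP")
```

For 1000 ISPs, that is 499 500 direct links versus 1000 links to the exchange point.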

Our network is already complicated, but there is one more problem — geography.

What if you live in Spain, while the Google computer “lives” in the USA? Well, they should be connected in some way, right?

The answer is undersea cables! You may think this is a joke, but no, it is not. The continents are connected to each other by undersea cables. It may seem unreliable, and in fact sometimes it is. But it’s still the best way to connect everything:

Now it looks like we know how to connect two computers over the Internet. But this is where things get really crazy.

First, there are lots of ISPs.

Second, there are lots of IXPs.

Third, there are lots of undersea cables! Check out this map of them from 2021:

Finally, not every ISP is connected directly to an IXP. Some are connected through “parent” ISPs instead.

Even that is not the end. The cables differ: some of them transmit the signal faster, others slower. More importantly, some of them cost more to use than others. You don’t think that the connections between ISPs and IXPs are free of charge, do you? ISPs pay each other, and pay IXPs, to use their networks. Some providers charge more, others charge less.

That’s why the signal your computer sends usually does not take the shortest path. It takes the cheapest one.
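To illustrate the difference, here is a toy Python example that runs Dijkstra’s shortest-path algorithm over a made-up network twice: once weighting links by distance and once by price. All node names, distances, and prices are invented for illustration. Real routing between ISPs is driven by BGP policies and business agreements rather than a single algorithm like this, so treat it only as an analogy.

```python
import heapq
from typing import Dict, List, Tuple

# Hypothetical links: (node, node, distance in km, price per unit of traffic).
LINKS = [
    ("Home ISP", "IXP A", 500, 5),
    ("IXP A", "Google ISP", 6700, 20),
    ("Home ISP", "Parent ISP", 300, 1),
    ("Parent ISP", "IXP B", 2000, 1),
    ("IXP B", "Google ISP", 7500, 2),
]


def best_path(src: str, dst: str, weight: str) -> Tuple[int, List[str]]:
    """Dijkstra's algorithm; weight is either "distance" or "price"."""
    column = {"distance": 2, "price": 3}[weight]
    graph: Dict[str, List[Tuple[str, int]]] = {}
    for link in LINKS:
        a, b, w = link[0], link[1], link[column]
        graph.setdefault(a, []).append((b, w))  # links carry traffic both ways
        graph.setdefault(b, []).append((a, w))

    queue = [(0, src, [src])]  # (total weight so far, node, path taken)
    visited = set()
    while queue:
        total, node, path = heapq.heappop(queue)
        if node == dst:
            return total, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, w in graph.get(node, []):
            if neighbor not in visited:
                heapq.heappush(queue, (total + w, neighbor, path + [neighbor]))
    raise ValueError(f"no route from {src} to {dst}")


print(best_path("Home ISP", "Google ISP", "distance"))
# (7200, ['Home ISP', 'IXP A', 'Google ISP'])
print(best_path("Home ISP", "Google ISP", "price"))
# (4, ['Home ISP', 'Parent ISP', 'IXP B', 'Google ISP'])
```

The shortest route is 7200 km through IXP A, but the cheapest one goes through the parent ISP and IXP B, even though it is 2600 km longer.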

Transmission medium

A transmission medium is a system or substance we use to transfer the signal. When devices are connected to each other using Wi-Fi, the medium is air. When your PC is connected to your router by cable, the medium is usually copper wire. The backbone of the Internet, the undersea cables, is made of optical fiber.

The medium determines the conditions under which communication can take place.

For instance, copper wires are susceptible to electrical noise. That’s why twisted pair cable is actually “twisted”: twisting the wires together reduces electromagnetic interference. Such cables may also be wrapped in a special material that “shields” the transmitted signal.

At the same time, optical fiber is immune to electrical noise, because it’s just a “light pipe.” What’s more, thanks to the techniques used to transfer data over it, optical fiber may carry data at a much higher rate than copper wire!

Now you may say, “The Internet is based on undersea cables and this is kinda sick. Why not use satellites if we can transmit data through the air?”

Sure, it is possible to connect computers using satellites; GPS, for example, relies on satellites, and it works. But, first of all, it’s cheaper to simply wire the computers up. Besides, a network lying on the ground (or in the sea) is much easier to repair.

Moreover, let’s do some quick math. Recall the connection we described earlier, and say that the orange dot is Nashville, USA, while the white dot is Zaragoza, Spain:

The distance between these cities is about 7200 kilometers. If we connect them with a direct wire, the signal transmitted from Zaragoza will reach Nashville in 36 milliseconds, because the speed of light in optical fiber is around 200 000 km/s (about 1.5 times lower than in a vacuum).

Now imagine a satellite somewhere in the sky between Zaragoza and Nashville. Historically, communication satellites have been placed in geostationary orbit, at an altitude of 35 786 kilometers. Our signal has to go from Zaragoza up to the satellite and then from the satellite down to Nashville.

Let’s skip the trigonometry and say that the total distance is 2 × 35 786 kilometers, which is 71 572 km. (The real path is a bit longer, because neither city sits directly below the satellite.)

The signal that goes up to the satellite and back down travels at approximately 300 000 km/s, roughly the speed of light in a vacuum, because it is carried by radio waves. So the total trip time is 71 572 / 300 000 ≈ 0.239 s, or about 239 ms.

So, the signal takes 36 milliseconds to travel from Zaragoza to Nashville over a direct wire, and about 239 ms via the satellite.
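Here is the same back-of-the-envelope math in Python, using the rounded speeds from the text and the same simplification that the satellite sits straight above both cities (the constant and function names are our own):

```python
SPEED_IN_FIBER_KM_S = 200_000  # light in optical fiber (~1.5x slower than in a vacuum)
SPEED_OF_RADIO_KM_S = 300_000  # radio waves travel at roughly the speed of light in a vacuum
GEO_ALTITUDE_KM = 35_786       # altitude of geostationary orbit


def one_way_delay_ms(distance_km: float, speed_km_s: float) -> float:
    """Time for a signal to cover distance_km at speed_km_s, in milliseconds."""
    return distance_km * 1000 / speed_km_s


fiber = one_way_delay_ms(7200, SPEED_IN_FIBER_KM_S)                     # Zaragoza -> Nashville by cable
satellite = one_way_delay_ms(2 * GEO_ALTITUDE_KM, SPEED_OF_RADIO_KM_S)  # up to GEO and back down
print(f"fiber: {fiber:.0f} ms, GEO satellite: {satellite:.0f} ms")
```

Both numbers cover propagation only; the delays added by routers, ISPs, and IXPs along the way come on top.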

And we haven’t even mentioned that all those intermediate points, such as ISPs, IXPs, and the rest of them, slow the signal down, making the real transfer time longer than 36 ms. But we also haven’t said anything about the quality of the signal, which is usually worse for transmissions over the air.

So you can see why the world still uses cables. In the foreseeable future, the status quo may change due to the massive deployment of satellites (for example, by Starlink) in low Earth orbit. Their lower altitude allows these satellites to provide connections with much lower latency.

However, it’s highly unlikely that the current wired infrastructure will disappear, which means that if you run an Internet service, you have to use services like CDNs to serve data closer to your users.
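To see why serving data closer to users matters, here is a rough Python estimate of the delay that propagation alone adds to fetching a file. The setup is hypothetical: a user in Zaragoza, an origin server in Nashville (7200 km away), a CDN edge server about 300 km away, fiber at 200 000 km/s, and a fresh HTTPS connection that needs about three round trips (TCP handshake, TLS 1.3 handshake, then the request itself). Processing and queuing delays are ignored.

```python
FIBER_KM_S = 200_000  # approximate speed of light in optical fiber


def round_trip_ms(distance_km: float) -> float:
    """Propagation-only round-trip time over fiber, in milliseconds."""
    return 2 * distance_km * 1000 / FIBER_KM_S


ROUND_TRIPS = 3  # assumed: TCP handshake + TLS 1.3 handshake + HTTP request/response

# Hypothetical distances from a user in Zaragoza.
for label, km in (("origin in Nashville", 7200), ("nearby CDN edge", 300)):
    rtt = round_trip_ms(km)
    print(f"{label}: RTT {rtt:.0f} ms, ~{ROUND_TRIPS * rtt:.0f} ms until the first byte")
```

Under these assumptions, the origin needs about 216 ms before the first byte of the response arrives, while the nearby edge needs about 9 ms, even though the signal travels at exactly the same speed in both cases.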

In the next article of this series we will see how the signal is encoded during the transfer and figure out how to intercept requests to inspect them.

Originally published by Igor Adamenko in Uploadcare Blog
