Wolf Fact: Wolves and domestic dogs share about 99.8% of their DNA, making them closely related.

Prerequisites: C#, basic knowledge of ASP.NET Core Web API, Visual Studio, Docker Desktop

Publish and Subscribe Pattern:-

The publish/subscribe (pub/sub) pattern defines brokers, publishers, and subscribers, and how they interact to create a loosely coupled, scalable system. The publisher forwards messages to the broker, and subscribers receive these messages from the broker.

The pub/sub pattern can be implemented in a variety of ways. For example, the broker may push messages to subscribers, or subscribers may pull messages from the broker. Likewise, how each step works depends on the implementation.

The broker determines which content each subscriber receives by filtering messages. There are two mainstream filtering methods.

Topic-based: Topics are named logical channels. A subscriber receives all the messages published to the topics it subscribes to. The publisher is responsible for defining the topics.

Content-based: The subscriber specifies which content it wants to receive and gets only the messages whose attributes or content match those constraints. So the subscriber is responsible for defining the constraints.

The pub/sub pattern makes an application more scalable because publishers are not directly bound to subscribers; the broker handles message delivery. Publishers don't have to change anything as the number of subscribers grows. That's the broker's job.
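To make the two filtering styles concrete, here is a toy in-memory broker. It is a hypothetical illustration only (`InMemoryBroker`, `Subscribe`, and `Publish` are my own names, not part of Dapr or any library): subscribers register for a topic (topic-based filtering) and may optionally add a predicate on the message content (content-based filtering).

```csharp
using System;
using System.Collections.Generic;

// Toy, in-process broker that illustrates the pattern only.
// It is NOT how Dapr or a real message broker is implemented.
public sealed class InMemoryBroker
{
    private readonly Dictionary<string, List<(Func<string, bool> Filter, Action<string> Handler)>> _subscriptions = new();

    // Topic-based subscription, optionally narrowed by a content-based filter.
    public void Subscribe(string topic, Action<string> handler, Func<string, bool>? filter = null)
    {
        if (!_subscriptions.TryGetValue(topic, out var list))
        {
            list = new();
            _subscriptions[topic] = list;
        }
        Func<string, bool> predicate = filter ?? (_ => true); // no filter: accept everything
        list.Add((predicate, handler));
    }

    // The publisher only knows the topic name, never the subscribers.
    // Returns how many subscribers received the message.
    public int Publish(string topic, string message)
    {
        if (!_subscriptions.TryGetValue(topic, out var list)) return 0;
        var delivered = 0;
        foreach (var (filter, handler) in list)
        {
            if (filter(message))
            {
                handler(message);
                delivered++;
            }
        }
        return delivered;
    }
}
```

Notice that `Publish` never references a subscriber directly, so adding subscribers requires no change on the publisher side.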

Pub/Sub with Dapr:-

Dapr supports the pub/sub pattern. In the Dapr implementation:-

Publisher: It writes messages to an input channel and sends them to a topic, unaware of which application will receive them.

Subscriber: It subscribes to the topic and receives messages from an output channel, unaware of which service produced these messages.

An intermediary message broker copies each message from a publisher's input channel to an output channel for every subscriber interested in that message. Dapr also provides *content-based routing* (a filtering technique) on top of topics.

The pub/sub API in Dapr:

Provides a platform-agnostic API to send and receive messages, e.g., on Windows, Linux, and so on.

Offers at-least-once message delivery guarantee. Dapr considers a message successfully delivered once the subscriber processes the message and responds with a non-error response.
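What "at-least-once" means for your code can be shown with a small hypothetical sketch (`AtLeastOnce` and `IdempotentHandler` are illustrative names, not Dapr APIs; in Dapr, retries are driven by the sidecar, the broker component, and any resiliency policy you configure). A message is re-delivered until the subscriber reports success, so the same message may arrive more than once and the subscriber should be idempotent.

```csharp
using System;
using System.Collections.Generic;

// Illustration only: NOT Dapr's implementation.
public static class AtLeastOnce
{
    // Re-delivers until the subscriber reports success (like a non-error
    // response) or the attempt budget runs out. Returns the successful
    // attempt number, or -1 if delivery gave up.
    public static int Deliver(string message, Func<string, bool> subscriber, int maxAttempts = 5)
    {
        for (var attempt = 1; attempt <= maxAttempts; attempt++)
        {
            if (subscriber(message)) return attempt;
        }
        return -1;
    }
}

// Because duplicates are possible, the handler remembers message ids
// and does the real work only once per id.
public sealed class IdempotentHandler
{
    private readonly HashSet<string> _seenIds = new();
    public int ProcessedCount { get; private set; }

    public bool Handle(string messageId)
    {
        if (_seenIds.Add(messageId)) ProcessedCount++; // first time: do the work
        return true; // duplicates are acknowledged but not reprocessed
    }
}
```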

Integrates with various message brokers and queuing systems, e.g., Apache Kafka, AWS SNS/SQS, Azure Service Bus, Redis Streams, and many more. You can check the complete list here.

Default Values:

Message Routing: Any message that an application sends to a topic through Dapr is automatically wrapped in a CloudEvents envelope. For message routing, Dapr uses the `Content-Type` header value of the publish request as the envelope's `datacontenttype`.

Message Content: If no content type is specified, Dapr assumes `text/plain` for the message content. Developers can change the content type of a message based on their requirements.
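For intuition, the sketch below builds a JSON object shaped like a CloudEvents 1.0 envelope. The field set is a simplified assumption: `specversion`, `id`, `source`, and `type` are the attributes CloudEvents 1.0 requires, while `topic` and `pubsubname` mirror what Dapr adds. The `source` value and `CloudEventSketch` are hypothetical; in practice the sidecar builds the envelope for you, with extra fields such as trace context.

```csharp
using System;
using System.Text.Json;

public static class CloudEventSketch
{
    // Wraps arbitrary data in a simplified CloudEvents-style envelope.
    public static string Wrap(string pubsubName, string topic, object data, string dataContentType = "application/json")
    {
        var envelope = new
        {
            specversion = "1.0",            // required: CloudEvents spec version
            id = Guid.NewGuid().ToString(), // required: unique per event
            source = "serviceone",          // required: hypothetical app id
            type = "com.dapr.event.sent",   // required: event type (assumed Dapr default)
            datacontenttype = dataContentType,
            topic,
            pubsubname = pubsubName,
            data                            // serialized using its runtime type
        };
        return JsonSerializer.Serialize(envelope);
    }
}
```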

Dapr applications can subscribe to published topics via two methods that support the same features:

Declarative: The subscription is defined in an external file (a YAML file).

Programmatic: The subscription is defined in the user code.
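This article uses the programmatic approach, but for comparison, a declarative subscription could look roughly like this. It is a sketch assuming the `dapr.io/v1alpha1` Subscription format; the file name, subscription name, route, and app id `servicetwo` are hypothetical.

```yaml
# Hypothetical declarative subscription, e.g. components/subscription.yaml
apiVersion: dapr.io/v1alpha1
kind: Subscription
metadata:
  name: publishmessage-subscription
spec:
  pubsubname: pubsub        # name of the pub/sub component
  topic: publishmessage     # topic to subscribe to
  route: /publishmessage    # endpoint on the subscriber app that receives messages
scopes:
  - servicetwo              # app id(s) this subscription applies to
```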

So how does Dapr pub/sub work?

1. The Dapr sidecar of the subscriber service calls the `/dapr/subscribe` endpoint on the subscriber app to learn which topics it subscribes to.
2. The subscriber's Dapr sidecar creates the requested subscriptions on the message broker.
3. The publisher sends a message to the `/v1.0/publish/<pub-sub-name>/<topic>` endpoint on its Dapr sidecar.
4. The publisher's sidecar publishes the message to the message broker.
5. The message broker sends a copy of the message to the subscriber's sidecar.
6. The subscriber's sidecar calls the corresponding endpoint on the subscriber service, and the subscriber returns an HTTP response.
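Step 3 can be sketched without the Dapr SDK by calling the sidecar's HTTP API directly. This assumes the sidecar listens on its default HTTP port 3500 on `localhost` (your compose setup may differ); `SidecarPublisher` and its methods are hypothetical names.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Json;
using System.Threading.Tasks;

public static class SidecarPublisher
{
    // Builds /v1.0/publish/<pub-sub-name>/<topic> on the local sidecar.
    public static Uri BuildPublishUri(int daprHttpPort, string pubsubName, string topic) =>
        new Uri($"http://localhost:{daprHttpPort}/v1.0/publish/{Uri.EscapeDataString(pubsubName)}/{Uri.EscapeDataString(topic)}");

    // POSTs the payload as JSON; the sidecar wraps it in a CloudEvent.
    // Returns true for any 2xx response (204 means the message was delivered).
    public static async Task<bool> PublishAsync(HttpClient http, int daprHttpPort, string pubsubName, string topic, object payload)
    {
        using var response = await http.PostAsJsonAsync(BuildPublishUri(daprHttpPort, pubsubName, topic), payload);
        return response.IsSuccessStatusCode;
    }
}
```

Using `DaprClient.PublishEventAsync`, as this article does later, hides this URL construction behind the SDK.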

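The publish endpoint on the sidecar answers the publisher with one of these HTTP status codes:

| HTTP Response Code | Meaning |
| --- | --- |
| 204 | Message delivered |
| 403 | Message forbidden by access controls |
| 404 | No pubsub name or topic given |
| 500 | Delivery failed |

On the subscriber side, a 2xx response such as 200 (Success) tells the sidecar that the message was processed.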
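The subscriber's reply decides what the sidecar does next. As I understand the Dapr pub/sub API reference: a 2xx reply means success unless its JSON body carries `"status": "RETRY"` or `"DROP"`, a 404 drops the message, and any other status causes a retry. The sketch below encodes that reading; `SubscriberReply` and `SidecarAction` are hypothetical names, and treating an unrecognized body status as a retry is my assumption.

```csharp
using System;

public enum SidecarAction { Success, Retry, Drop }

public static class SubscriberReply
{
    // bodyStatus is the optional "status" field already parsed from the reply body.
    public static SidecarAction Interpret(int statusCode, string? bodyStatus = null)
    {
        if (statusCode >= 200 && statusCode < 300)
        {
            return bodyStatus?.ToUpperInvariant() switch
            {
                null or "" or "SUCCESS" => SidecarAction.Success,
                "DROP" => SidecarAction.Drop,
                _ => SidecarAction.Retry // "RETRY" (and, by assumption, anything unrecognized)
            };
        }
        // 404 drops the message; every other status is retried.
        return statusCode == 404 ? SidecarAction.Drop : SidecarAction.Retry;
    }
}
```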

Now let's write some code. I will continue with the code from my previous article. The complete source code can be found here.

I am using the programmatic subscription approach, so the subscription is defined in code. The ServiceOne project acts as the publisher and ServiceTwo as the subscriber. I will use the Redis Streams component as the broker.

Both services need the NuGet packages Dapr.Client and Dapr.AspNetCore.

1. Startup.cs:-

Add these methods to the respective services.

UseCloudEvents(): Dapr uses CloudEvents 1.0 for message routing (sending messages to topics and delivering them to subscribers). This middleware unwraps incoming CloudEvents so the controller receives just the message data. I add it to both Startup classes.

MapSubscribeHandler(): Maps the endpoint that responds to `/dapr/subscribe` requests from the Dapr runtime, so it is needed only in the subscriber service.

Note: If your service acts as both a subscriber and a publisher, add both methods to its Startup.
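A minimal sketch of where these calls go in a classic ASP.NET Core `Startup` class, assuming the `Dapr.AspNetCore` package. The rest of your existing Startup is omitted, and `AddDapr()` is my addition (it registers `DaprClient` for dependency injection); the article itself only names the two methods above.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.DependencyInjection;

public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        // Registers controllers plus Dapr integration (DaprClient, model binding).
        services.AddControllers().AddDapr();
    }

    public void Configure(IApplicationBuilder app)
    {
        app.UseRouting();
        app.UseCloudEvents();                // unwraps incoming CloudEvents request bodies
        app.UseEndpoints(endpoints =>
        {
            endpoints.MapSubscribeHandler(); // serves /dapr/subscribe (subscriber only)
            endpoints.MapControllers();
        });
    }
}
```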

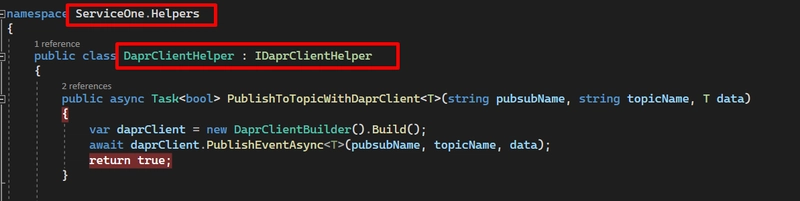

2. ServiceOne IDaprClientHelper and DaprClientHelper:-

This helper is required only in ServiceOne (the publisher). The PublishToTopicWithDaprClient method involves several concepts, so let's go through them.

2.1. topicName: The name of the topic. It is a user-defined name.

2.2. data: Data that needs to be published on the topic.

2.3. daprClient: The Dapr client package lets a .NET application interact with other Dapr applications. daprClient is a DaprClient instance, created with DaprClientBuilder, that is used to call other Dapr services.

2.4. PublishEventAsync: Publishes an event to the specified topic.

2.5. pubsubname: The name of the pub/sub component. It must match the component name, and subscribers use it to subscribe to a topic. We will see it in the ServiceTwo controller.
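The helper described above can be sketched as follows, assuming the `Dapr.Client` package. The exact parameter order and generic signature are my assumption, not necessarily the author's; the Dapr call itself, `DaprClient.PublishEventAsync(pubsubName, topicName, data)`, is the real SDK method.

```csharp
using System.Threading.Tasks;
using Dapr.Client;

public interface IDaprClientHelper
{
    Task PublishToTopicWithDaprClient<T>(string pubsubName, string topicName, T data);
}

public class DaprClientHelper : IDaprClientHelper
{
    private readonly DaprClient _daprClient;

    public DaprClientHelper()
    {
        // DaprClientBuilder builds a DaprClient that talks to the local sidecar.
        _daprClient = new DaprClientBuilder().Build();
    }

    public async Task PublishToTopicWithDaprClient<T>(string pubsubName, string topicName, T data)
    {
        // The sidecar wraps `data` in a CloudEvent and hands it to the broker
        // configured by the component named `pubsubName`.
        await _daprClient.PublishEventAsync(pubsubName, topicName, data);
    }
}
```

Injecting `DaprClient` through the constructor (registered by `AddDapr()`) is a common alternative to building it by hand.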

3. ServiceOne IServiceTwoHelper and ServiceTwoHelper:-

PublishMessageTopic calls the PublishToTopicWithDaprClient method. In this step, ServiceOne (through its sidecar) writes a message to the specified topic.

pubsubname: pubsub

topicName: publishmessage

data: Notice that data is passed as an object rather than as a primitive data type.
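A sketch of `PublishMessageTopic` with these values. `MessageData` is a hypothetical payload type standing in for "an object rather than a primitive", and the helper interface is re-declared so this snippet compiles on its own without the Dapr packages.

```csharp
using System.Threading.Tasks;

// Hypothetical payload: a class/record instead of a bare string.
public record MessageData(string Text);

// Minimal copy of the publisher helper interface so this snippet stands alone.
public interface IDaprClientHelper
{
    Task PublishToTopicWithDaprClient<T>(string pubsubName, string topicName, T data);
}

public interface IServiceTwoHelper
{
    Task PublishMessageTopic(string text);
}

public class ServiceTwoHelper : IServiceTwoHelper
{
    private readonly IDaprClientHelper _daprClientHelper;

    public ServiceTwoHelper(IDaprClientHelper daprClientHelper) => _daprClientHelper = daprClientHelper;

    // "pubsub" must match the component name; "publishmessage" is the topic.
    public Task PublishMessageTopic(string text) =>
        _daprClientHelper.PublishToTopicWithDaprClient("pubsub", "publishmessage", new MessageData(text));
}
```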

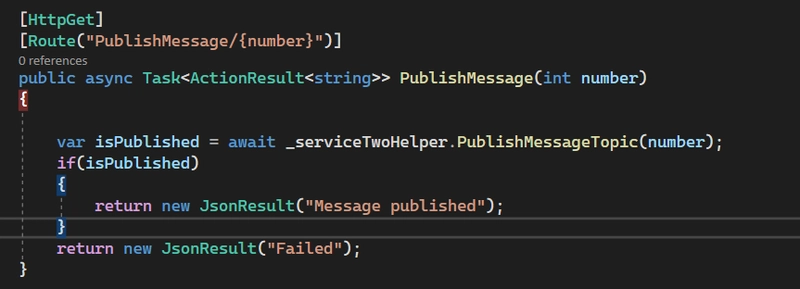

4. HomeController:-

In ServiceOne, this controller method publishes the message to the topic.

In ServiceTwo, this controller method receives the message from the broker/topic. Notice the Topic attribute on the method. It takes two parameters.

First:- pubsubname, which is pubsub.

Second:- topicName, which is publishmessage.

Both are the same as the PublishToTopicWithDaprClient parameters.
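A sketch of the ServiceTwo subscriber action, assuming the `Dapr.AspNetCore` package. `[Topic(pubsubName, topicName)]` is the SDK attribute that `MapSubscribeHandler()` advertises on `/dapr/subscribe`; the route, action name, and `MessageData` payload type are hypothetical.

```csharp
using System;
using Dapr;
using Microsoft.AspNetCore.Mvc;

public record MessageData(string Text); // hypothetical payload shape

[ApiController]
public class HomeController : ControllerBase
{
    // Subscribes this action to topic "publishmessage" on component "pubsub".
    [Topic("pubsub", "publishmessage")]
    [HttpPost("publishmessage")]
    public IActionResult ReceiveMessage(MessageData message)
    {
        // UseCloudEvents() has already unwrapped the envelope, so only the data arrives.
        Console.WriteLine($"Received: {message.Text}");
        return Ok(); // a 2xx tells the sidecar the message was processed
    }
}
```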

Now let's update the docker-compose file. I am using Redis Streams, so I have added the following lines to docker-compose.

It defines a Redis service on host port 6379 with a hostname of your choice (I chose redisstate; you may choose based on your preference).
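A compose excerpt along these lines would do it. This is a sketch: the image tag is an assumption, and the service name `redisstate` doubles as the hostname other containers on the same compose network use.

```yaml
# Excerpt to merge into docker-compose.yml
services:
  redisstate:
    image: redis:alpine   # Redis 5+ is required for Redis Streams
    ports:
      - "6379:6379"       # host port : container port
```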

Hey, we have written a programmatic subscription for pub/sub. OK.

We also added a Redis service to the docker-compose file. OK.

But how will our services know they need to use this Redis stream? Hmm.

Now let’s do this…

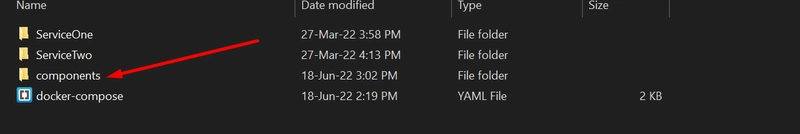

Do you remember the components folder declared for the sidecar in the docker-compose file?

This folder holds the definitions of the components that can be used. We are going to use the Redis Streams component.

Dapr reads the YAML files in the components folder to identify the components to load.

This file defines that we are using a Redis component at port 6379. I am using the dapr.io/v1alpha1 apiVersion. The hostname and port number are the same as in the docker-compose file.
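A component file matching that description might look like this. The file name is an assumption (Dapr loads every component YAML in the folder the sidecar's components path points to); `metadata.name` must equal the `pubsubname` used by the publisher and the `[Topic]` attribute.

```yaml
# components/pubsub.yaml (hypothetical file name)
apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: pubsub               # must match pubsubname in publisher and subscriber
spec:
  type: pubsub.redis         # Redis Streams pub/sub component
  version: v1
  metadata:
    - name: redisHost
      value: redisstate:6379 # compose service name and port
    - name: redisPassword
      value: ""
```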

Now the coding part is done. So let’s run the code.

`docker compose build`

`docker compose up`

With these two commands, our services will be up and running.

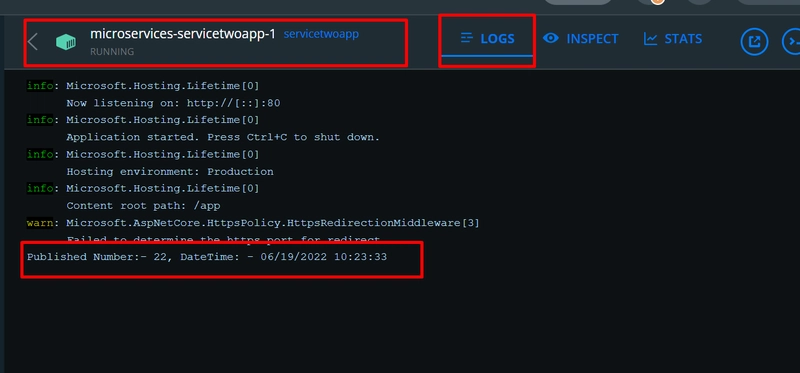

It shows status code 200, Success. You can check the console of ServiceTwo to confirm that the message was published correctly (I write it to the console in the ServiceTwo controller).

The message was published correctly.

So that's how we can perform pub/sub operations with Dapr, docker-compose, and .NET.

Note: You can check out the complete code here. The first commit contains the docker-compose configuration and the service-to-service invocation code. The second commit adds the pub/sub configuration and the pub/sub code.

Any comments or suggestions would be greatly appreciated.
