It’s all about the names!

_“The letter is E …. Start!…” — _One of my brothers said.

We began to crazily write down words that start with E in each category. Everyone wanted to win as many points as possible in that afternoon game.

“Stop!!!!!” — _My sister announced suddenly — “I’m already done with all the categories”_.

We stared at each other with disbelief.

“Ok. Let’s start checking!” — I said.

One by one, we start to enumerate the words we have entered under each category: fruits, places, names…

“What about a color name?” — My other brother asked at one point.

“Emerald…” — my sister said proudly.

“Noo! Noo! That’s not a color name!!!” — My two brothers complained loudly at the same time.

_“Of course it is! If no one put a color name I get double points!” — _She replied happily.

Every time we played Scattergories, or Tutti Frutti as it is commonly called in Argentina, we had the same discussion. The rules about color names changed every time.

It all depended on the players of the day. Something happening to a lot of people playing that game as we learned after.

It is easy for us to put words under categories. We can tell which word is a noun, an adjective or a verb in a text. We can point out the name of a person, an organization or a country.

This is not an easy task for a machine. However, we have come a long way.

Now, we don’t need to spend long hours reading long texts anymore. We can use machine learning algorithms to extract useful information from them.

I remember the first time I read about Natural Language Processing (NLP). It was hard for me to picture how an algorithm can recognize words. How it was able to identify their meaning. Or select which type of words they were.

I was even confused about how to start. It was so much information. After going around in circles, I started by asking myself what exactly I had to do with NLP.

I had several corpora of text coming from different websites I have scraped. My main goal was to extract and classify the names of persons, organizations, and locations, among others.

What I had between my hands was a _Named Entities Recognition (NER) _task.

These names, known as entities, are often represented by proper names. They share common semantic properties. And they usually appear in similar contexts.

Why did I want to extract these entities? Well, there are many reasons for doing it.

We use words to communicate with each other. We tell stories. We state our thoughts. We communicate our feelings. We claim what we like, dislike or need.

Nowadays, we mostly deliver things through written text. We tweet. We write in a blog. The news appears on a website. So written words are a powerful tool.

Let’s imagine that we have the superpower of knowing which people, organizations, companies, brands or locations are mentioned in every news, tweet or post in the web.

We could detect and assign relevant tags for each article or post. This will help us distribute them in defined categories. We could match them specifically with the people that are interested in reading about that type of entities. So we would act as classifiers.

We could also do the reverse process. Anyone can ask us a specific question. Using the keywords, we would also be capable of recommending articles, websites or post very efficiently. Sounds familiar?

We can go even further and recommend products or brands. If someone complains about a particular brand or product, we can easily assign it to the most idoneous department in a matter of seconds. So we are going to be excellent customer support.

As you can guess, NER is a very useful tool. However, everything comes at a price.

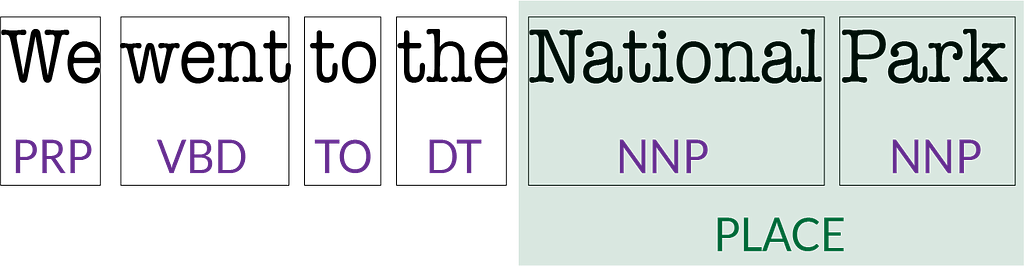

Before an algorithm can recognize entities in a text, it should be able to classify words in verbs, nouns, adjectives. Together they are referred to as parts of speech.

The task of labeling them is called part-of-speech tagging, or POS-tagging. The method label a word based on its context and definition.

Take as an example the sentences: “The new technologies impact all the world” and “In order to reduce global warming impact, we should do something now”. “Impact” has two different meanings in each sentence.

There are several supervised learning algorithms that can be picked for this assignment:

Lexical Based Methods. They assign the most frequently POS co-occurring with a word in the training set.

Probabilistic Methods. They consider the probability of the occurrence of specific tag sequence and assign it based on that.

Rule-Based Methods. They create rules to represent the structure of the sequence of words appearing in the training set. The POS is assigned based on these rules.

Deep Learning Methods. In this case, recurrent neural networks are trained and used for assigning the tags.

Training a NER algorithm demands suitable and annotated data. This implies that you need different types of data that match the type of data you want to analyze.

The data should also be provided with annotations. This means that the named entities should be identified and classified for the training set in a reliable way.

Also, we should pick an algorithm. Train it. Test it. Adjust the model….

Fortunately, they are several tools in Python that make our job easier. Let’s review two of them.

1 Natural Language Tool Kit (NLTK): NLTK is the most used platform when working with human language data in Python.

It provides more than 50 corpora and lexical resources. It also has libraries to classify, tokenize, and tag texts, among other functions.

For the next part, we will get a bit more technical. Let’s start!

In the code, we imported the module ntlk but also the methods word_tokenize and pos_tag.

The first will help us tokenize the sentences. This means splitting the sentences into tokens or words.

You may wonder why we don’t use the Python method .split(). NLTK split a sentence in words and punctuation. So it is more robust.

The second method will “tag” our tokens into the different parts of speech.

First, we are going to make use of two other Python module: requests and BeautifulSoup. We’ll use them to scrape Wikipedia website about NLTK. And retrieve its text.

Now, our text is in the variable wiki_nltk.

It’s time to see our main methods in action. We’ll create a method that takes the text as an input. It will use word_tokenize to split the text into tokens. Then, it will tag each token with its part of speech using pos_tag.

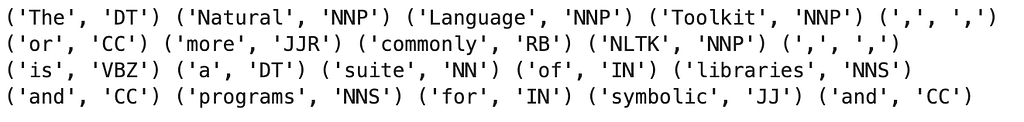

The method will return a list of tuples. What each tuple will consist of? Well, a word along with its tag; the part of the speech that it corresponds to.

After that, we apply the method to our text wiki_nltk. For convenience, we will print only the first 20 tuples; 5 per line.

The tags are quite cryptic, right? Let’s decode some of them.

DT indicates that the word is a determiner. NN a Noun, singular. NNP a proper noun, singular. CC a coordinating conjunction. JJR an adjective, comparative. RB an adverb. IN a preposition.

How is that pos_tag is able to return all of these tags?

It uses Probabilistic Methods. Particularly, Conditional Random Fields (CRF) and Hidden Markov Models.

First, the model extracts a set of features of each word called State Features. It bases the decision on characteristics like capitalization of the first letter, presence of numbers or hyphen, suffixes, prefixes, among others.

The model considers also the label of the previous word in a function called Transition Feature. It will determine the weights of different features functions to maximize the likelihood of the label.

The next step is to perform entity detection. This task will be carried out using a technique called chunking.

Tokenization extracts only “tokens” or words. On the other hand, chunking extract phrases that may have an actual meaning in the text.

Chunking requires that our text is first tokenized and POS tagged. It uses these tags as inputs. It outputs “chunks” that can indicate entities.

NLTK has several functions that facilitate the process of chunking our text. The mechanism is based on the use of regular expressions to generate the chunks.

We can first apply noun pronoun chunks or _NP-chunk_s. We’ll look for chunks matching individual noun phrases. For this, we will customize the regular expressions used in the mechanism.

We first need to define rules. They will indicate how sentences should be chunked.

Our rule states that our NP chunk should consist of an optional determiner (DT) followed by any number of adjectives (JJ) and then one or more pronoun noun (NNP).

Now, we create a chunk parser using RegexpParser and this rule. We’ll apply it to our POS-tagged words using chunkParser.parse.

The result is a tree. In this case, we printed only the chunks. We can also display it graphically.

NLTK also provides a pre-trained classifier using the function nltk.ne_chunk(). It allows us to recognize named entities in a text. It also works on top of POS-tagged text.

As we can see, the results are the same using both methods.

However, the results are not completely satisfying. Another disadvantage of NLTK is that POS tagging supports English and Russian languages.

2 SpaCy model : An open-source library in Python. It provides an efficient statistical system for NER by labeling groups of contiguous tokens.

It is able to recognize a wide variety of named or numerical entities. Among them, we can find company-names, locations, product-names, and organizations.

A huge advantage of Spacy is having pre-trained models in several languages: English, German, French, Spanish, Portuguese, Italian, Dutch, and Greek.

These models support tagging, parsing and entity recognition. They have been designed and implemented from scratch specifically for spaCy.

They can be imported as Python libraries.

And loaded easily using spacy.load(). In our code, we save it in the variable nlp .

SpaCy provides a Tokenizer, a POS-tagger and a Named Entity Recognizer. So it’s very easy to use. We just called our model in our text nlp(text). This will tokenize it, tagged it and recognize the entities.

The attribute .sents will retrieve the tokens. .tag_ the tag for each token. .ents the recognized entities. .label_ the label for each entity. .text just the text for any attribute.

We define a method for this task as follows.

Now, we apply the defined method to our original Wikipedia text.

Spacy recognizes not only names but also numbers. Very cool, right?

One question that probably raises is how SpaCy works.

Its architecture is very rich. This results in a very efficient algorithm. Explaining every component of SpaCy model will require another whole post. Even tokenization is done in a very novel way.

According to Explosion AI, Spacy Named Entity Recognition system features a sophisticated word embedding strategy using subword features, a deep convolutional neural network with residual connections, and a novel transition-based approach to named entity parsing.

Let’s explain these basic concepts step by step.

Word embedding strategy using subword features. Wow! Very long name and a lot of difficult concepts.

What does this mean? Instead of working with words, we should represent them using multi-dimensional numerical vectors.

Each dimension captures the different characteristics of the words. This is also referred to as Word embeddings.

The advantage is that working with numbers is easier than working with words. We can make calculations, apply functions, among other things.

The huge limitation is that these models normally ignore the morphological structure of the words. In order to correct this, the subword feature is introduced to include the knowledge about morphological structures of the words.

Convolutional neural network with residual connections . Convolution networks are mainly used in processing images. The convolutional layer multiplies a kernel or filter (a matrix with weights) by a window or portion of the input matrix.

The structure of the traditional neural networks is that each layer feeds the next layer.

A neural network with residual blocks splits a big network into small chunks. This chunks of the network are connected through skip functions or shortcut connections.

The efficiency of a residual network is given by the fact that the activation function has to be applied fewer times.

Transition-based approach . This strategy uses sequential steps to add one label or change the state until it reaches the most likely tag.

Lastly, Spacy provides a function to display a beautiful visualization of the Named Entity annotated sentences: displacy.

Let’s use it!

… Wrapping up!

Named Entities Recognition is an on-going developing tool. A lot has been done regarding this topic. However, there is still room for improvement.

Natural Language Processing Toolkit — It is a very powerful tool. Widely used. It provides many algorithms to choose from for the same task. However, it only supports 2 languages. And it requires more tunning. It does not support word vectors.

SpaCy — It is a very advanced tool. It supports 7 languages as well as multilanguage. It is more efficient. It’s object-oriented. However, the algorithms behind are complex. Only keeps the best algorithm for a task. It has support for word vectors.

Anyhow, Just go ahead and try an approach! You will have fun!

Top comments (1)

A very informative post and use-case! Over time there have been significant advancements in NLP. Most of the named entity extraction tasks can be performed using tools that are specifically designed for data annotation. For my projects, I use NLP Lab which is a free to use no-code platform that helps in automatic annotation of entities in your text document. Over the years, annotation in general has advanced through certain levels. Annotation tools like NLP Lab come with support for various data types as well, ranging from annotating raw unstructured texts, to pdf files and images.