In 2016, Twitter launched a skill for Alexa that let people ask Alexa for their retweets, mentions, and more. Back then, Alexa did not support display components. Since then, many Alexa devices have gained display capabilities that complement the voice-first experience. People can now view visual components such as images and text on their devices alongside Alexa’s voice responses.

This tutorial shows how to build an Alexa skill for Twitter that allows you to view Trends and Tweets on an Alexa device with display capabilities, such as the Echo Show. I will use the Alexa Presentation Language (APL) to build the display components. This sample is written in Java and uses the Alexa Skills Kit (ASK) SDK for Java. To obtain Tweets and Trends, I will use the Twitter4J library. The full working code with setup instructions can be found here.

Note: This tutorial assumes a basic understanding of building an Alexa Skill and APL. For a more introductory sample, refer to this training course from Amazon.

Setting up a Twitter App

In order to use the Twitter APIs in the Alexa skill, I needed the following:

- A Twitter developer account. Click here to apply

- A Twitter app

- My Twitter API keys. Please review the documentation about obtaining your keys here.

Once I had the API keys, I could use them to create a Twitter client with Twitter4J, which lets me retrieve Tweets and Trends from Twitter in my Alexa skill.

Building the Language Model for your Alexa Skill

The complete English language model JSON for this skill can be found here.

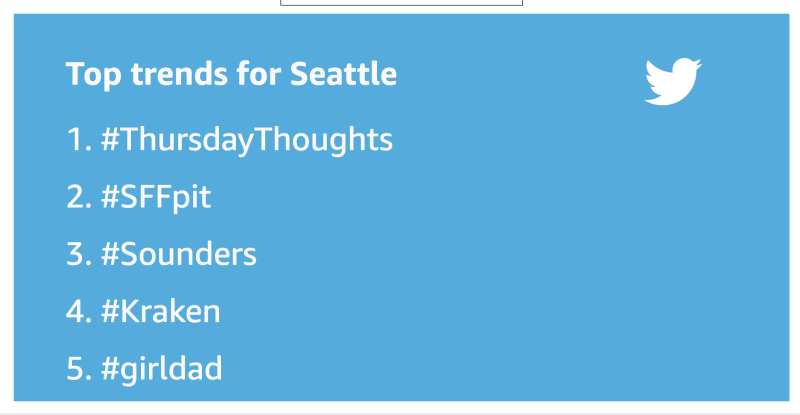

To support “what’s trending in a city”, I added a TrendIntent which has a slot of type City. A slot is the variable piece of information in an Intent. So, when people say something like “What’s trending in Seattle”, it will get routed to this TrendIntent. A complete list of slot types can be found here.

To support “give me Tweets about a topic”, I added TweetIntent which has a custom slot type of SearchTerm. So, when a user says something like “Give me Tweets about snow”, it will get routed to this TweetIntent.

Instructions on building this language model can be found here.

Getting Tweets and Trends

In this sample, I used the Twitter4J library to connect to the Twitter API and get Tweets and Trends. I created a TwitterService class that contains one method to get trends by location and another that gets a List of Tweets for a search term. The Twitter client is set up as follows, using the Twitter API keys:

    public TwitterService() {
        twitter = new TwitterFactory(new ConfigurationBuilder()
                .setOAuthConsumerKey(API_KEY)
                .setOAuthConsumerSecret(API_SECRET)
                .setOAuthAccessToken(ACCESS_TOKEN)
                .setOAuthAccessTokenSecret(ACCESS_TOKEN_SECRET)
                // Request the full, untruncated Tweet text.
                .setTweetModeExtended(true)
                .build())
                .getInstance();
    }
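The constructor above reads its keys from constants. One possible way to supply them without hardcoding is to read them from environment variables and fail early when one is missing. This is only a sketch: the variable names and the `TwitterCredentials` helper are hypothetical and not part of the sample.

```java
import java.util.Map;

final class TwitterCredentials {
    // Hypothetical environment variable names; use whatever your deployment defines.
    static final String[] REQUIRED = {
        "TWITTER_API_KEY", "TWITTER_API_SECRET",
        "TWITTER_ACCESS_TOKEN", "TWITTER_ACCESS_TOKEN_SECRET"
    };

    /** Returns the trimmed value for name, or throws if it is missing or blank. */
    static String require(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalStateException("Missing required setting: " + name);
        }
        return value.trim();
    }

    public static void main(String[] args) {
        Map<String, String> env = System.getenv();
        for (String name : REQUIRED) {
            try {
                require(env, name);
                System.out.println(name + " is set");
            } catch (IllegalStateException e) {
                System.out.println(e.getMessage());
            }
        }
    }
}
```

Passing the environment in as a `Map` keeps the check easy to exercise in a unit test without touching the real process environment.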

The getTrends() method in the TwitterService class takes a location. We can then look up the Where On Earth Identifier (WOEID) for that location using a local mapping that I maintain in the SkillData file. Using this WOEID, we can get a list of trends as shown below. Note: I am only using a small set of WOEID mappings for this sample. A more comprehensive list of available WOEIDs can be searched here.

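    public Trends getTrends(String location) {
        // Look up the WOEID first: unboxing a missing entry straight into an
        // int would throw a NullPointerException for an unmapped location.
        Integer woeid = SkillData.LOCATION_MAP.get(location);
        if (woeid == null) {
            return null;
        }
        try {
            return twitter.getPlaceTrends(woeid);
        } catch (TwitterException e) {
            e.printStackTrace();
            return null;
        }
    }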
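The local location-to-WOEID mapping described above could look roughly like the following. This is a minimal sketch, not the sample's actual SkillData file: the `WoeidLookup` class, the lowercase normalization, and the three entries are assumptions for illustration, and the IDs should be verified against a WOEID lookup before use.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;
import java.util.Optional;

final class WoeidLookup {
    // Illustrative subset only; verify each ID before relying on it.
    private static final Map<String, Integer> LOCATION_MAP = new HashMap<>();
    static {
        LOCATION_MAP.put("london", 44418);
        LOCATION_MAP.put("new york", 2459115);
        LOCATION_MAP.put("seattle", 2490383);
    }

    /** Returns the WOEID for a spoken city name, or empty if it is not mapped. */
    static Optional<Integer> findWoeid(String location) {
        if (location == null) {
            return Optional.empty();
        }
        // Normalize so "Seattle", " seattle " and "SEATTLE" hit the same key.
        String key = location.trim().toLowerCase(Locale.ROOT);
        return Optional.ofNullable(LOCATION_MAP.get(key));
    }

    public static void main(String[] args) {
        System.out.println(findWoeid("Seattle").isPresent());
        System.out.println(findWoeid("Atlantis").isPresent());
    }
}
```

Returning an `Optional` makes the "city not supported" case explicit, so the handler can answer with a friendly message instead of failing.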

The getTweetsBySearchTerm() method takes a search string. We build a search query from this term and obtain a list of Tweets as shown below. More details on building a search query can be found here.

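    public List<Status> getTweetsBySearchTerm(String searchTerm) {
        // Exclude retweets and Tweets with images, and ask for popular results.
        Query query = new Query(String.format("-filter:retweets -filter:images %s", searchTerm));
        query.count(100);
        query.resultType(Query.POPULAR);
        try {
            return twitter.search(query).getTweets();
        } catch (TwitterException e) {
            e.printStackTrace();
            // Return an empty list (java.util.Collections) so callers never
            // dereference a null result when the search fails.
            return Collections.emptyList();
        }
    }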
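The query string itself is plain text, so it can be built and checked separately from the Twitter client. The sketch below uses the same two filters as the method above. The `SearchQueries` helper and its blank-input check are hypothetical additions, not part of the sample.

```java
final class SearchQueries {
    // Same operators as in the sample: drop retweets and Tweets with images.
    static final String FILTERS = "-filter:retweets -filter:images";

    /** Builds the search string for a spoken search term. */
    static String build(String searchTerm) {
        if (searchTerm == null || searchTerm.trim().isEmpty()) {
            throw new IllegalArgumentException("searchTerm must not be blank");
        }
        // Collapse runs of whitespace so the query contains single spaces only.
        String term = searchTerm.trim().replaceAll("\\s+", " ");
        return String.format("%s %s", FILTERS, term);
    }

    public static void main(String[] args) {
        System.out.println(build("snow"));
    }
}
```

Rejecting a blank term early avoids sending a query made up of filters alone.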

Building the Display Components for your Alexa Skill

To return a response with display components, I first needed to check whether the device that sent the request supports APL. The function below shows how that can be done:

    public static boolean supportsAPL(HandlerInput input) {
        SupportedInterfaces supportedInterfaces = input.getRequestEnvelope()
                .getContext()
                .getSystem()
                .getDevice()
                .getSupportedInterfaces();
        AlexaPresentationAplInterface alexaPresentationAplInterface = supportedInterfaces.getAlexaPresentationAPL();
        return alexaPresentationAplInterface != null;
    }

This skill supports three screens, optimized for Echo Show 5:

- The welcome screen

- The screen that provides the Trends as a list

- The screen that provides the Tweets

Currently, this sample supports three Tweets per search term. I use a Pager with the SpeakItem command, so Alexa reads the Tweet on each page, switches to the next page, and then reads the next Tweet. Because I am using Java, I build a map for the datasource that is sent in my response along with my APL document. A reference JSON equivalent for this datasource can be found here. The datasource contains the Tweet text, the user's name, their Twitter handle, and their profile image URL.
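As a rough illustration of the datasource described above, the following sketch builds the nested map directly in Java. The key names (`tweetData`, `tweets`, `text`, `name`, `handle`, `imageUrl`) and the `TweetView` holder are assumptions made for this example. The real keys are whatever the APL document binds to.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class TweetDataSource {
    /** Hypothetical holder for the four fields shown on each page. */
    static final class TweetView {
        final String text;
        final String name;
        final String handle;
        final String imageUrl;

        TweetView(String text, String name, String handle, String imageUrl) {
            this.text = text;
            this.name = name;
            this.handle = handle;
            this.imageUrl = imageUrl;
        }
    }

    /** Builds a nested map that serializes to the datasource JSON. */
    static Map<String, Object> build(List<TweetView> tweets) {
        List<Map<String, Object>> items = new ArrayList<>();
        for (TweetView t : tweets) {
            Map<String, Object> item = new LinkedHashMap<>();
            item.put("text", t.text);
            item.put("name", t.name);
            item.put("handle", "@" + t.handle);
            item.put("imageUrl", t.imageUrl);
            items.add(item);
        }
        Map<String, Object> payload = new LinkedHashMap<>();
        payload.put("tweets", items);
        // Assumed top-level key; it must match the name used in the APL document.
        Map<String, Object> dataSource = new LinkedHashMap<>();
        dataSource.put("tweetData", payload);
        return dataSource;
    }

    public static void main(String[] args) {
        List<TweetView> tweets = new ArrayList<>();
        tweets.add(new TweetView("Let it snow", "Example User", "example",
                "https://example.com/avatar.png"));
        System.out.println(build(tweets));
    }
}
```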

The TweetHandler handles the request for providing Tweets for a search term.

    public Optional<Response> handle(HandlerInput handlerInput, IntentRequest intentRequest) {
        final RequestHelper requestHelper = RequestHelper.forHandlerInput(handlerInput);
        final String searchTerm = requestHelper.getSlotValue("SearchTerm").get();
        final List<Status> statuses = SkillData.getFilteredStatuses(twitterService.getTweetsBySearchTerm(searchTerm));

        // The Tweets screen shows three Tweets, so bail out early if there are fewer.
        if (statuses.size() < 3) {
            return getNotEnoughTweetsResponse(handlerInput, searchTerm);
        }

        final String tweetsAsSpeechText = SkillData.getTweetsAsSpeechText(statuses);
        final String speechText = String.format("Here are some tweets about %s.", searchTerm);

        if (SkillData.supportsAPL(handlerInput)) {
            // Parse the APL document and the datasource JSON into maps.
            String content = SkillData.getAplDocforTweets();
            Map<String, Object> document = new Gson().fromJson(content,
                    new TypeToken<HashMap<String, Object>>() {}.getType());
            Map<String, Object> dataSource = new Gson().fromJson(SkillData.getDataSourceForTweets(statuses),
                    new TypeToken<HashMap<String, Object>>() {}.getType());

            // Read a Tweet, move to the next page, and repeat for all three Tweets.
            List<Command> commandList = new ArrayList<>();
            commandList.add(SpeakItemCommand.builder().withComponentId("tweet1").withDelay(500).build());
            commandList.add(SetPageCommand.builder().withComponentId("pagerId").withDelay(100).withPosition(Position.RELATIVE).build());
            commandList.add(SpeakItemCommand.builder().withComponentId("tweet2").withDelay(500).build());
            commandList.add(SetPageCommand.builder().withComponentId("pagerId").withDelay(100).withPosition(Position.RELATIVE).build());
            commandList.add(SpeakItemCommand.builder().withComponentId("tweet3").withDelay(500).build());

            SequentialCommand command = SequentialCommand.builder()
                    .withCommands(commandList)
                    .withDelay(300)
                    .build();

            // Both directives share the same token so the commands target this document.
            return handlerInput.getResponseBuilder()
                    .withSpeech(speechText)
                    .addDirective(RenderDocumentDirective.builder()
                            .withDocument(document)
                            .withDatasources(dataSource)
                            .withToken("pager")
                            .build())
                    .addDirective(ExecuteCommandsDirective.builder()
                            .addCommandsItem(command)
                            .withToken("pager")
                            .build())
                    .build();
        }

        // Voice-only devices: read the intro followed by the Tweets themselves.
        return handlerInput.getResponseBuilder()
                .withSpeech(String.format("%s %s", speechText, tweetsAsSpeechText))
                .build();
    }
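The alternating "speak, then turn the page" pattern in the handler could also be generated in a loop, so that the number of Tweets is not hardcoded. The sketch below produces the command list as plain maps that mirror the APL command JSON (`type`, `componentId`, `delay`, `position`, `value`) rather than using the SDK builders. The `PagerCommands` helper and the relative `value` of 1 (advance one page) are assumptions for illustration.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class PagerCommands {
    /**
     * Builds SpeakItem / SetPage commands for pageCount pages whose speakable
     * components are named itemIdPrefix + 1, itemIdPrefix + 2, and so on.
     */
    static List<Map<String, Object>> speakAndAdvance(String pagerId, String itemIdPrefix, int pageCount) {
        List<Map<String, Object>> commands = new ArrayList<>();
        for (int i = 1; i <= pageCount; i++) {
            Map<String, Object> speak = new LinkedHashMap<>();
            speak.put("type", "SpeakItem");
            speak.put("componentId", itemIdPrefix + i);
            speak.put("delay", 500);
            commands.add(speak);

            // No page turn is needed after the last Tweet.
            if (i < pageCount) {
                Map<String, Object> next = new LinkedHashMap<>();
                next.put("type", "SetPage");
                next.put("componentId", pagerId);
                next.put("position", "relative");
                next.put("value", 1); // assumed: move forward by one page
                next.put("delay", 100);
                commands.add(next);
            }
        }
        return commands;
    }

    public static void main(String[] args) {
        for (Map<String, Object> command : speakAndAdvance("pagerId", "tweet", 3)) {
            System.out.println(command);
        }
    }
}
```

For three pages this yields five commands in the same order as the handler above: speak, set page, speak, set page, speak.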

Design Considerations

Because Alexa reads aloud the Tweets shown on the screen of devices such as the Echo Show, one challenge was handling URLs and emojis in a Tweet. In this sample, the screen renders each Tweet as-is, but emojis and similar content are stripped from the speech text that Alexa reads. It is also worth preserving the formatting of Tweets so that they are displayed consistently across platforms. You can read about the Twitter Display Requirements here.
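One possible way to prepare a Tweet for speech is sketched below. It is a rough approximation and not the sample's actual cleanup code: the `SpeechText` helper is hypothetical, it assumes URLs should be dropped along with emojis, and it treats emojis as anything in the Unicode "other symbol" category plus skin-tone modifiers, variation selectors, and zero-width joiners. That also removes symbols such as © or ™, which seems acceptable for spoken output.

```java
import java.util.regex.Pattern;

final class SpeechText {
    private static final Pattern URL = Pattern.compile("https?://\\S+");
    // Approximation: "other symbol" code points, skin-tone modifiers,
    // the emoji variation selector and the zero-width joiner.
    private static final Pattern EMOJI_LIKE =
            Pattern.compile("[\\p{So}\\x{1F3FB}-\\x{1F3FF}\\x{FE0F}\\x{200D}]");
    private static final Pattern WHITESPACE = Pattern.compile("\\s+");

    /** Returns the Tweet text with URLs and emoji-like symbols removed. */
    static String forSpeech(String tweetText) {
        if (tweetText == null) {
            return "";
        }
        String result = URL.matcher(tweetText).replaceAll("");
        result = EMOJI_LIKE.matcher(result).replaceAll("");
        // Removing tokens leaves gaps, so collapse whitespace afterwards.
        return WHITESPACE.matcher(result).replaceAll(" ").trim();
    }

    public static void main(String[] args) {
        // \uD83D\uDE00 is the grinning-face emoji written as a surrogate pair.
        System.out.println(forSpeech("Snow day! \uD83D\uDE00 https://t.co/abc123 stay warm"));
    }
}
```

The on-screen text stays untouched; only the string handed to Alexa for speech goes through this cleanup.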

Using APL, we are able to add visual components to build out the welcome, trends and Tweets screens to provide a rich, enhanced voice-first experience for Alexa users.

Instructions on deploying this code can be found here. Try it out and reach out to me on Twitter @suhemparack with feedback or questions.
