Stef Olafsdottir, Avo’s CEO, speaks about developer productivity at DevRelCon Earth 2020.

For those who don’t know, DevRelCon is an event series about developer relations and developer experience, run by developers for developers. Normally hosted in London, San Francisco and Tokyo, it became a global affair in 2020, with all events held online because of Covid-19.

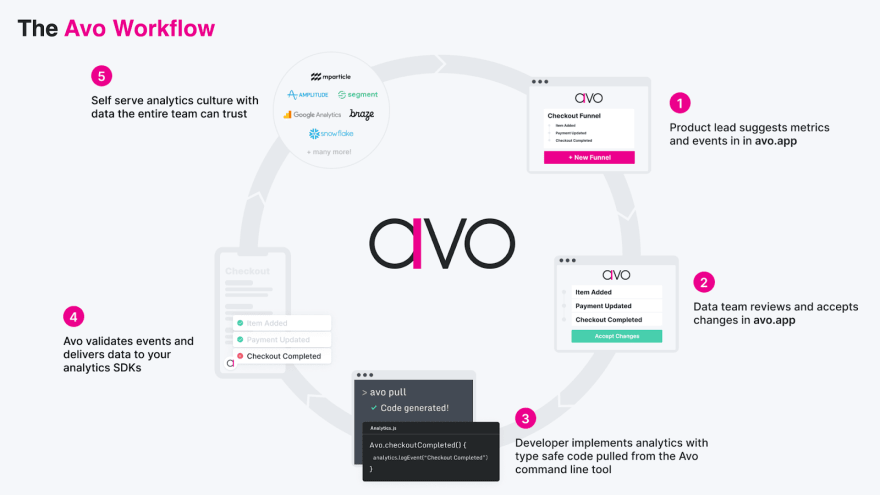

We at Avo are on a mission to make sure product teams aren’t constantly forced to choose between product delivery speed and data quality. We provide an easy-to-use interface to define and maintain your tracking plan (as in your taxonomy, data plan, event schema). You could say we’re in the business of efficiency, not just for developers but for product managers, data scientists, and everyone else working with developers on shipping products.

“Instrumentation used to take 1-4 days for every feature release and now takes 30-120 minutes.”

– Maura Church, Director of Data Science, Patreon

It sure is heartwarming and wonderful to hear feedback like this, and we could take their word for it, or we could do what’s in our DNA as data scientists and engineers: measure it.

Keep reading to learn about our journey from a gut feeling, to qualitative customer testimonials, to measuring developer productivity through our own product measurements.

Measuring Developer Productivity Through Avo's Own Product Measurements

Stef Olafsdottir, CEO and Co-Founder of Avo, is a mathematician and philosopher, turned genetics researcher, turned data scientist, turned founder. She was the 1st data person and Head of Data Science at QuizUp, a mobile game with 100m users, where she built and led the data science division and culture. This included pioneering company-wide ways to prioritize product innovation. At QuizUp they built a culture where people had the tools, data literacy, and data reliability to ask and answer the right questions.

After QuizUp, she and a couple of friends started a company. Only 5 months in, they shipped a product update based on incorrect data. It was a frustrating misstep that helped her realize the importance of the tools and infrastructure they had built at QuizUp to keep up data reliability. Furthermore, they couldn’t afford to take the time to build the tools internally again. They would have to choose between data quality and velocity - for every single product release.

Before Avo, teams have been constantly forced to choose between product delivery speed and reliable insights.

After a conversation with several of their colleagues at companies like Twitch and Spotify, they learned that many had built the same internal tools to address this problem of inconsistent event analytics.

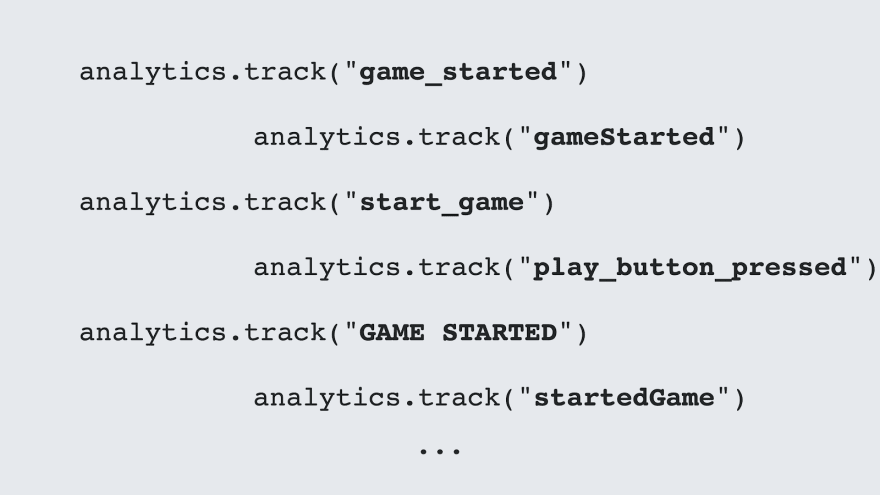

Look familiar? Yes, we can’t stand it either.

So they decided to build Avo to solve this problem once and for all. Using Avo to ensure the schema is always correct means the graphs displayed in tools like Amplitude and Mixpanel are accurate. Product managers and data scientists can trust the data, and product teams can ship analytics code quickly and without bugs. This increased efficiency is what allows product teams using Avo to ship quickly without sacrificing data quality.

Qualitative Learning: Why Our Customers Like Using Avo

Looking at this qualitative feedback from our users reveals a clear theme. Everything is just a lot quicker. We’re not talking about shaving off a few minutes. It’s an order of magnitude in company cost. Not only does implementing event analytics take a lot less time, the quality is also vastly improved. Even companies like Rappi, who highly value analytics and already have great insights in place, are spotting missing properties and other data issues that Avo is able to highlight, making it a lot easier to scope out what needs to happen next.

Two themes are clear:

- Operational efficiency

- Increased data quality and reliability

The question is then: how do we take this incredible feedback and tell this story of efficiency not just in words, but in cold hard data? In other words, how do we measure operational efficiency reliably and quantitatively?

How does Avo measure “Operational Efficiency” quantitatively?

We looked at one of our most loved features: a branched workflow for your tracking plan. It’s like GitHub’s branched workflow for code. As we care about operational efficiency, the time to completion matters. That’s why we started measuring the turnaround time of completing the implementation of a tracking plan change, defined as branches being opened and merged within one day.

“Branch Opened” -> “Branch Merged”
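As a rough illustration of the metric above, here is a minimal Python sketch that counts branches merged within one day of being opened. The `Branch` record, its field names, and the sample timestamps are hypothetical and are not Avo's actual event schema. It also assumes "within one day" means within 24 hours of the branch being opened, which the definition above does not spell out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Branch:
    """Hypothetical record pairing a "Branch Opened" and "Branch Merged" event."""
    opened_at: datetime
    merged_at: Optional[datetime]  # None while the branch is still open


def merged_within(branches, window=timedelta(days=1)):
    """Count branches merged no later than `window` after being opened."""
    return sum(
        1
        for b in branches
        if b.merged_at is not None and b.merged_at - b.opened_at <= window
    )


# Made-up sample data, for illustration only.
branches = [
    Branch(datetime(2020, 6, 1, 9), datetime(2020, 6, 1, 15)),  # merged after 6 hours
    Branch(datetime(2020, 6, 1, 9), datetime(2020, 6, 3, 9)),   # merged after 2 days
    Branch(datetime(2020, 6, 2, 9), None),                      # still open
]

fast_merges = merged_within(branches)
print(fast_merges)
```

Only the first branch counts here: the second took longer than a day and the third was never merged.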

After conversations with our customers we soon realized that one of our opportunities to increase the turnaround speed of a branch was making collaboration even smoother. They were desire-pathing the process by creating internal Slack channels and assigning collaborators there (this even included our own team!).

We shipped Branch Collaborators and updated our notifications based on what we heard our customers were using Slack for. The collaborative work ensures data quality and reliability, as any relevant stakeholder (a data scientist, a product manager, a developer) can be brought into the right branch with a single comment for a peer review.

To measure the success of the feature release, we looked at the turnaround time of collaborative branches, where at least one comment was made.

“Branch Opened” -> “Branch Merged” with > 0 comments

We already saw a 70% increase in this metric in the first week after release. And that’s how we measure developer productivity of our customers.
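To make the comparison concrete, the sketch below restricts the count to collaborative branches (at least one comment) and computes the relative change between two periods. Everything in it is an assumption for illustration: the tuple layout, the function names, the 24-hour reading of "within one day", and the sample rows, which are made up and do not reproduce Avo's numbers.

```python
from datetime import datetime, timedelta


def fast_collaborative_merges(rows, window=timedelta(days=1)):
    """Count branches with > 0 comments that were merged within `window`.

    Each row is a hypothetical (opened_at, merged_at, comment_count) tuple;
    merged_at is None for branches that are still open.
    """
    return sum(
        1
        for opened_at, merged_at, comments in rows
        if comments > 0
        and merged_at is not None
        and merged_at - opened_at <= window
    )


def relative_change(before, after):
    """Relative change from `before` to `after`, e.g. 0.5 for a 50% increase."""
    return (after - before) / before


day = datetime(2020, 6, 1, 9)

# Made-up sample data, for illustration only.
week_before_release = [
    (day, day + timedelta(hours=5), 2),
    (day, day + timedelta(hours=20), 1),
    (day, day + timedelta(hours=3), 0),  # excluded: no comments
    (day, day + timedelta(days=3), 4),   # excluded: took longer than a day
]
week_after_release = [
    (day, day + timedelta(hours=2), 1),
    (day, day + timedelta(hours=8), 3),
    (day, day + timedelta(hours=23), 2),
    (day, None, 1),                      # excluded: still open
]

before = fast_collaborative_merges(week_before_release)
after = fast_collaborative_merges(week_after_release)
change = relative_change(before, after)
print(f"{before} -> {after}: {change:.0%} change")
```

With this sample data the count goes from 2 to 3, a 50% increase. Whether to track a raw count, as here, or a share of all opened branches is a design choice the text leaves open.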

Watch Stef's talk in full

To Ship Tracking Faster Than Ever

Our mission is to enable product teams to ship quickly without sacrificing data quality. It’s critical that we can measure developer productivity through our own product measurements, rather than relying only on our guts or a nice comment from a customer, and that we let that qualitative feedback point us to an impact we can measure in data.
