Large language models (LLMs) are gaining popularity in both academia and industry, in fields ranging from education to healthcare, owing to their remarkable performance in a wide variety of applications.

However, many LLM-based features are new and carry a lot of unknowns, so they require a careful release process to preserve privacy and social responsibility.

Additionally, the rapid progress of LLMs raises concerns about the potential emergence of superintelligent systems without adequate safeguards.

So, whether you’re a seasoned AI researcher or an enthusiast keen on understanding the intricacies of language model evaluation, this guide has got you covered!

The Need for LLM Evaluation

Evaluating large language models (LLMs) is crucial for ensuring their safe and responsible development and deployment, and there are several reasons for this.

First, it helps to assess the performance of LLMs on various tasks and to identify their strengths and weaknesses. This information can be used to guide the development of LLMs and enhance their capabilities.

Second, evaluation can help mitigate potential risks associated with using LLMs, such as biases, ethical concerns, and safety issues.

Finally, evaluation can provide insights into the potential benefits of LLMs, such as improved work efficiency and educational assistance.

Need help with testing & evaluation?

The Deepchecks platform, aimed at teams building production LLM applications, seeks to address this need. It is an open-source package that helps you evaluate, monitor, and safeguard your LLM applications throughout their lifecycle.

More broadly, Deepchecks is a tool for testing and validating machine learning models and data. It offers a wide range of checks for issues such as model performance, data integrity, and distribution mismatches. It simplifies analysing everything from content and style to potential red flags.

Key features of Deepchecks LLM Evaluation

The main features of the Deepchecks LLM Evaluation solution are:

✅ Dual Focus: Our solution goes beyond evaluating the accuracy of LLM models. It also ensures model safety by assessing potential biases, toxicity, and PII leakage. This comprehensive evaluation approach provides a more holistic understanding of the model's performance.

📝 Flexible Testing: We understand that responses to a single input can vary. Our solution is designed to adapt to multiple valid responses, allowing for more nuanced evaluation. This flexibility enhances the accuracy of the evaluation and ensures a more robust analysis of the model's capabilities.

👥 Diverse User Base: Our LLM Evaluation solution empowers a wide range of users, including data curators, product managers, and business analysts. This inclusivity allows teams from different backgrounds and expertise to leverage the solution and gain valuable insights into LLM models' performance.

🚀 Phased Approach: We provide a phased approach to LLM evaluation, covering key stages such as Experimentation, Staging, and Production. This methodology ensures that models are thoroughly tested and evaluated at every stage, leading to enhanced performance, reliability, and confidence in the model's capabilities.

With these key features, our LLM Evaluation solution offers a comprehensive and flexible approach to assessing the accuracy, safety, and performance of LLMs. By leveraging our solution, teams can make informed decisions, mitigate risks, and maximise the potential of these models in various applications.
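To make the "Flexible Testing" idea more concrete, here is a minimal sketch of one common way to score a response when several answers are acceptable: compare it with each reference and keep the best match. This is not the Deepchecks implementation; the token-overlap F1 metric and the function names `token_f1` and `best_match_score` are assumptions chosen purely for illustration.

```python
from collections import Counter


def token_f1(prediction, reference):
    """Token-overlap F1 between two strings (case-insensitive, whitespace tokens)."""
    pred = prediction.lower().split()
    ref = reference.lower().split()
    if not pred or not ref:
        # Two empty strings match perfectly; one empty string matches nothing.
        return float(pred == ref)
    # Multiset intersection counts each shared token at most as often as it
    # appears in both strings.
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def best_match_score(prediction, references):
    """Score a prediction against several valid references and keep the best."""
    return max((token_f1(prediction, ref) for ref in references), default=0.0)


# Hypothetical example: two differently worded but equally valid answers.
valid_answers = ["Paris", "The capital of France is Paris"]
print(best_match_score("paris", valid_answers))
```

Taking the maximum means a response is not penalised for matching one valid phrasing rather than another. A production system would more likely rely on semantic similarity than on raw token overlap.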

Thorough Testing with Deepchecks

Without further delay, let's jump into the practical side of LLM evaluation.

To begin, head to Deepchecks and create a login.

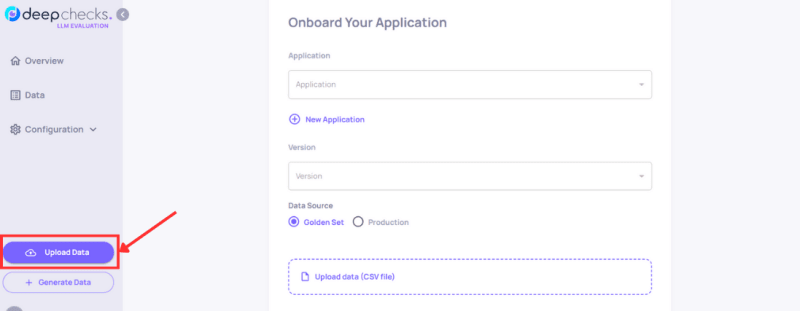

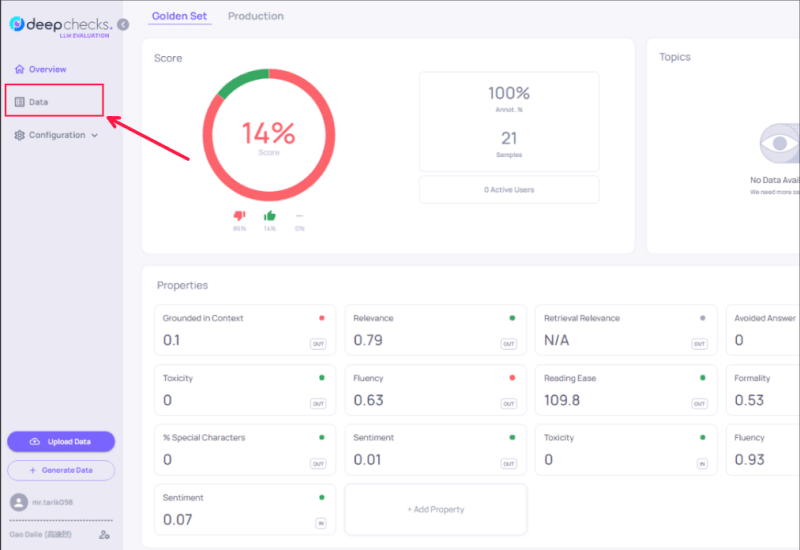

Next, upload your data.

Application set-up instructions

Naming Your Application: Choose a name for your application.

Version Specification: Specify the version number of the application you are creating.

Data Source Selection: Decide on your data source. You have two options:

Golden Set: a set of high-quality data.

Production: data collected from the live application.

Upload your data: Prepare your data as a CSV file. Ensure the header row includes ‘input’ and ‘output’ as column titles.

Then submit the file.
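If your interactions live in code rather than in a spreadsheet, the upload file can be generated with a short script. The sketch below uses only Python's standard `csv` module; the sample rows, the file name `golden_set.csv`, and the helper names `write_upload_csv` and `check_upload_csv` are hypothetical and are not part of Deepchecks.

```python
import csv
import os
import tempfile

# Hypothetical sample interactions; replace them with your application's data.
samples = [
    {"input": "What is the capital of France?",
     "output": "The capital of France is Paris."},
    {"input": "Summarise: The meeting moved to Friday.",
     "output": "The meeting is now on Friday."},
]


def write_upload_csv(rows, path):
    """Write rows to a CSV whose header row contains 'input' and 'output'."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=["input", "output"])
        writer.writeheader()
        writer.writerows(rows)


def check_upload_csv(path):
    """Return the number of data rows, raising if a required column is missing."""
    with open(path, newline="", encoding="utf-8") as f:
        reader = csv.DictReader(f)
        missing = {"input", "output"} - set(reader.fieldnames or [])
        if missing:
            raise ValueError(f"missing required columns: {sorted(missing)}")
        return sum(1 for _ in reader)


# Written to the system temp directory here; use any path you like.
path = os.path.join(tempfile.gettempdir(), "golden_set.csv")
write_upload_csv(samples, path)
print(path, check_upload_csv(path))
```

Using `csv.DictWriter` rather than manual string joining ensures that commas, quotes, and line breaks inside a prompt or response are escaped correctly.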

Next, let’s test and evaluate our data.

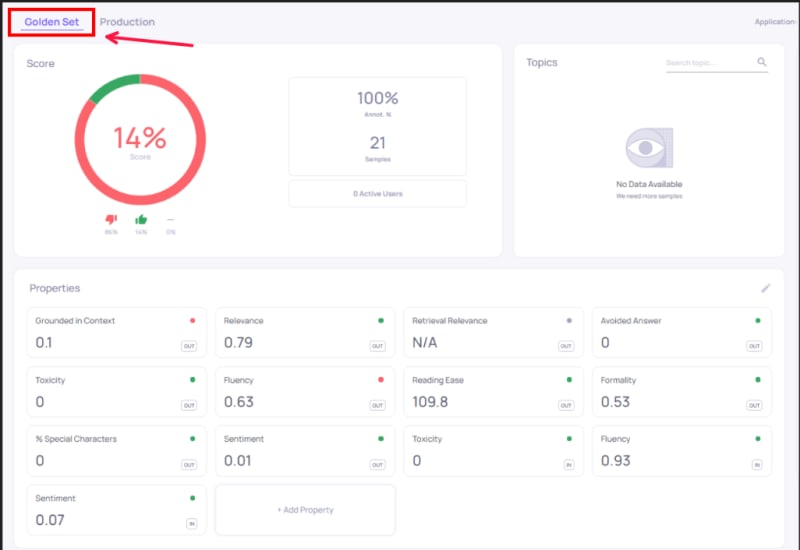

We provide quality scoring for pre-production versions and experimental designs, allowing you to select a specific set of samples to evaluate the quality before any updates.

The golden set is the phase where you evaluate your application’s behaviour against high-quality data that represents the standard of quality and expected performance.

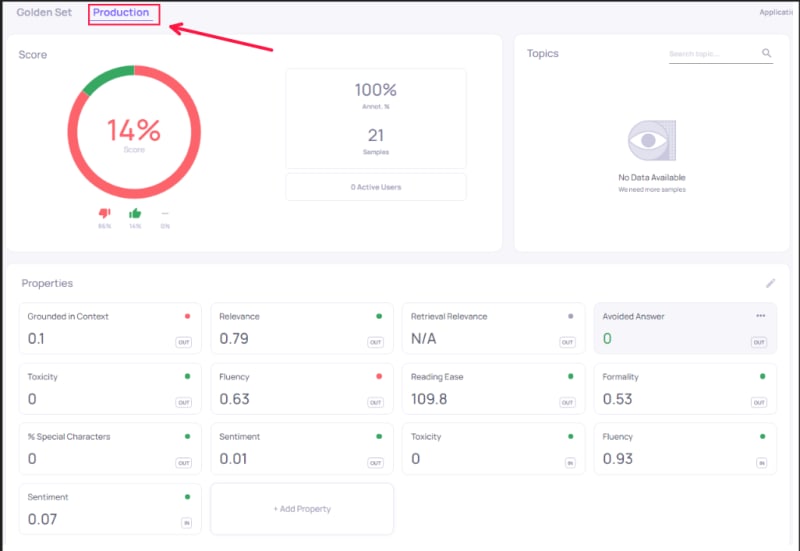

Production is the phase where you observe your application’s performance with real data, assessing its quality and latency across all lifecycle stages.

We give users comprehensive insights, including qualitative properties such as context relevance and toxicity, as well as technical data properties such as text length. Users can also add any properties of their own.
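To illustrate what a technical property such as text length amounts to, here is a minimal sketch that computes two simple properties for each ‘output’ value in a CSV. It does not use the Deepchecks API; the `PROPERTIES` registry, the `word_count` property, and the sample data are assumptions made for illustration only.

```python
import csv
import io

# Hypothetical CSV content using the 'input' and 'output' columns.
CSV_TEXT = """input,output
What is 2 + 2?,2 + 2 equals 4.
Name a primary colour.,Red is a primary colour.
"""


def text_length(text):
    """Number of characters in the text."""
    return len(text)


def word_count(text):
    """Number of whitespace-separated tokens in the text."""
    return len(text.split())


# Illustrative registry: property name -> function applied to the output text.
# Adding a custom property means adding another entry here.
PROPERTIES = {"text_length": text_length, "word_count": word_count}


def compute_properties(csv_text):
    """Return one dict of property values per data row of the CSV."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        {name: fn(row["output"]) for name, fn in PROPERTIES.items()}
        for row in reader
    ]


for props in compute_properties(CSV_TEXT):
    print(props)
```

Qualitative properties such as toxicity or context relevance typically require a model rather than a one-line function, but they fit the same pattern: a named function that maps a piece of text to a score.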

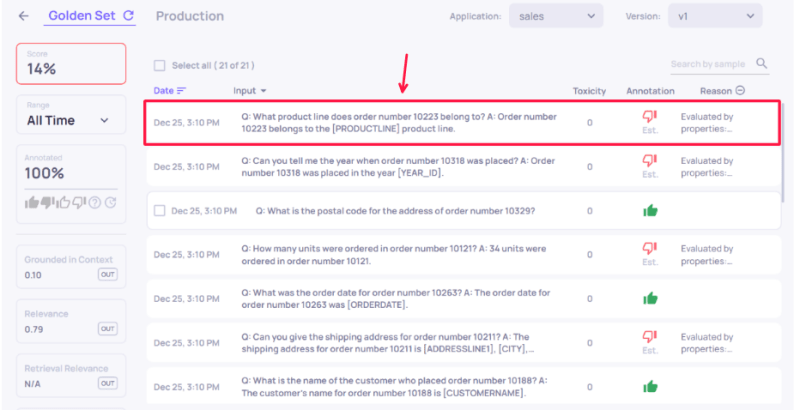

Next, select the ‘Data’ option to determine whether the data is eligible for annotation.

Click 'Evaluated by properties' to test the annotation.

You can examine the annotation, view each step in the application process, and decide on the right annotation.

The key idea is to offer non-technical users automated annotations, providing them with a no-code solution to evaluate the quality.

Conclusion

Deepchecks offers a comprehensive LLM evaluation tool that facilitates the continuous validation of large language models through measurable metrics and AI-enhanced annotations, ensuring high-quality, risk-mitigated applications.

We are here to assist you if you want to test and validate your machine-learning models.

Request a demo of our app here, or email us at info@deepchecks.com if you have any questions.

We are excited to see what you and your team test and validate with Deepchecks next!
