Last year, the President signed an Executive Order to improve our Nation's cybersecurity, and part of that Executive Order was a direction to use Zero Trust Architectures to access cloud assets. I have talked to a few people over the last couple of months about the impacts of the Executive Order, and one of the recurring themes is that there isn't a clear understanding of what Zero Trust is. So let's hit some of the high-level points here so we can all be on the same page. And if I've missed something in this list, please let me know.

Origin and Concepts

The term originated in a whitepaper called No More Chewy Centers: Introducing The Zero Trust Model of Information Security, which Forrester released in 2010. The paper lays out that our traditional networking model is like an M&M: hard and crunchy on the outside and soft and chewy on the inside. In other words, it is hard to get into the network, but once you are in, it is pretty easy to move around. The author points out numerous cases where this has been a problem. One of those stories is about Philip Cummings, who used to work at the help desk at TeleData Communications, Inc. (TCI). TCI provided software to credit bureaus like Equifax, TransUnion, and Experian. Through his job duties, Philip had access to the passwords and API keys for all three of the major credit bureaus. While he was working at TCI, a Nigerian crime organization told Philip that they would give him $60 for each credit report he sent to them. Philip left TCI in 2000, but before he left, he saved a copy of the passwords and API keys for the credit bureaus so he could keep sending credit reports to the crime organization. It is estimated that he sent over 30,000 credit reports over the next two years, until the theft was noticed in 2002.

The Philip Cummings story shows us it is hard to trust people inside our networks, but people have an identity. When we move to the network level, the packets sent across the network have no definitive identity. We can tell where they are coming from, where they want to go, and what the request is. There is no identity. Trusting that packets are legitimate is something we all do, but in computing terms, what does that mean? Because there is no concept of identity, how can we trust packets? We can't. We shouldn't.

Zero trust means that we should never have the soft chewy center. All networks should be treated as untrusted. This will ensure security is baked into the network. In order to implement this, Forrester lays out these concepts:

- Ensure that all resources are accessed securely regardless of location - treat all traffic as if it were coming from the internet, even internally.

- Adopt a least privilege strategy and strictly enforce access control - use something like role-based access control to control what people have access to.

- Inspect and log all traffic - most companies log traffic data, but very few inspect it.
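To make those three concepts a little more concrete, here is a minimal sketch in Python. It is not from the Forrester paper; the role names, resources, and the `is_allowed` helper are all made up for illustration. The idea is simply that access is denied unless a role explicitly grants it, that being "internal" earns no extra trust, and that every decision is logged so it can be inspected later.

```python
import logging
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("access")

# Hypothetical role-to-permission map. Anything not listed is denied.
ROLE_PERMISSIONS = {
    "help_desk": {("tickets", "read"), ("tickets", "write")},
    "credit_analyst": {("credit_reports", "read")},
}


@dataclass(frozen=True)
class Request:
    user: str
    role: str
    resource: str
    action: str
    source: str  # "internal" or "internet"; logged, but never used to grant access


def is_allowed(req: Request) -> bool:
    """Deny by default, and log every decision so it can be inspected later."""
    allowed = (req.resource, req.action) in ROLE_PERMISSIONS.get(req.role, set())
    log.info(
        "user=%s role=%s resource=%s action=%s source=%s allowed=%s",
        req.user, req.role, req.resource, req.action, req.source, allowed,
    )
    return allowed


# An internal help desk user gets no special treatment for being "inside".
inside = Request("philip", "help_desk", "credit_reports", "read", "internal")
outside = Request("alice", "credit_analyst", "credit_reports", "read", "internet")
print(is_allowed(inside), is_allowed(outside))
```

Note that `source` never appears in the decision itself. That is the point of the first concept: the network location is recorded, but it is not a reason to trust the request.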

Forrester makes the point that monitoring the security of hundreds to thousands of applications is difficult and can be done in as many different ways as there are applications. Fortunately, access to these applications is all done the same way - through the network. Implementing security at the network level is easier and more effective.

Lastly, the paper lays out that cloud traffic isn't going away and we need to have a better way to verify and monitor that traffic. Implementing Zero Trust never ends. Much like Agile, Zero Trust is a way of thinking, not a prescriptive method for implementing security. "Trust, but verify" can no longer be our standard; instead it should be "Verify and never trust."

Themes throughout the paper highlight the benefits of using Zero Trust:

- More secure against insider threats

- More secure against external threats when using a "defense-in-depth" strategy

- Ultimately cheaper to maintain than the traditional "defense-in-depth" options

Standards - NIST 800-207

Since the whitepaper by Forrester in 2010, the evolution of Zero Trust hasn't stopped. In 2020, the National Institute of Standards and Technology (NIST) released Special Publication 800-207 - Zero Trust Architecture. As with any government publication, it is a dry read and is full of acronyms, so here is my summary of the document.

The seven tenets of a zero trust architecture:

- All data sources and computing services are considered resources. Big or small, simple or complex, all systems on a network are considered resources. This should also include Software as a Service (SaaS).

- All communication is secured regardless of network location. There is no trust, there is only untrust. All devices, regardless of network placement, should be treated as untrusted devices.

- Access to individual enterprise resources is granted on a per-session basis. Trust in the requester is evaluated before access is granted and may include time-based rules. Granting access to one resource does not automatically grant access to another.

- Access to resources is determined by dynamic policy - including observable state of client activity, application/service, and the requesting asset - and may include other behavioral and environmental attributes. Trust should be contextual, taking into account the patterns of the user, such as time of day, the device being used, and the operating system version.

- The enterprise monitors and measures the security posture of all owned and associated assets. Enterprises should be able to answer the questions: what is on the network, who is on the network, what is happening on the network, and how is data protected on the network. Associated assets are any other assets allowed to connect to enterprise resources; this likely includes personally owned devices.

- All resource authentication and authorization are dynamic and strictly enforced before access is allowed. Zero Trust Architectures have a continuous cycle of obtaining access, scanning and assessing threats, adapting, and reevaluating trust.

- The enterprise collects as much information as possible about the current state of assets, network infrastructure and communications and uses it to improve its security posture. Enterprises need to continually collect data about security postures, network traffic, and access requests and use the data to adjust policies.

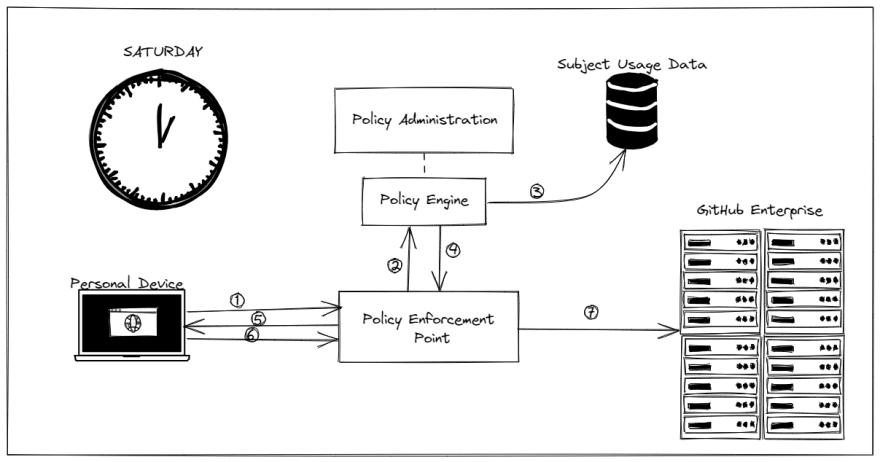

There are a bunch of different models for a zero trust architecture. Most of them look similar to this picture:

While there are a lot of acronyms there, here is the core concept: any person or service account (the subject) uses a system to access enterprise resources. A policy enforcement point sits somewhere in the middle to intercept the request and send it to the policy engine, which decides whether access will be granted. The policy engine weighs enterprise policy together with inputs from various sources, such as threat intelligence and activity logs, and the policy administrator carries out its decision by telling the policy enforcement point to open or close the connection. There are different ways for the policy engine to make a decision. One of the more popular methods is a scoring system in which the contextual data is weighted and, if the cumulative score of the request reaches a threshold, access is granted. If the cumulative score does not reach the threshold, the subject is presented with a challenge of some kind that lets them raise their score.
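The scoring approach can be sketched in a few lines of Python. Treat this as an illustration only: the signal names, the weights, and the threshold of 80 are assumptions I picked for the example, not values from NIST 800-207 or any product.

```python
from typing import Mapping

# Hypothetical weights for each contextual signal (assumed values).
WEIGHTS = {
    "valid_identity": 40,
    "known_ip": 20,
    "expected_geolocation": 20,
    "usual_hours": 20,
}
THRESHOLD = 80  # assumed cut-off; a real deployment would tune this


def score(signals: Mapping[str, bool]) -> int:
    """Add up the weights of every signal that checked out."""
    return sum(weight for name, weight in WEIGHTS.items() if signals.get(name, False))


def decide(signals: Mapping[str, bool]) -> str:
    """Grant access when the score reaches the threshold, otherwise challenge."""
    return "grant" if score(signals) >= THRESHOLD else "challenge"


print(decide({"valid_identity": True, "known_ip": True, "expected_geolocation": True}))
print(decide({"valid_identity": True, "known_ip": True}))
```

A missing signal counts as a failed signal here, so the engine leans toward a challenge whenever it knows less about the request.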

I think things are always easier with an example, so let's see what it looks like if you are trying to connect to a GitHub Enterprise instance.

As you make the request to the GitHub Enterprise instance, your traffic is intercepted by the policy enforcement point. Here is some of the data that comes along with your request:

- IP Address

- Geolocation

- Time

- Date

- An identity

The policy enforcement point sends this traffic to the policy engine, which sees that the traffic is coming from a place within the United States, from an IP address that has accessed the system before, and from an identity that is valid. The time might be a little off though. Maybe this request is being made at 1 AM on a weekend - hours when you don't normally work. Those last two data points (time and date) would lower the score, and this request might not meet the configured threshold. Instead of being allowed through to the GitHub instance, you are redirected to a challenge page where you can prove you are who you say you are. Maybe you will need to re-enter your RSA PIN and token, or maybe you will have to respond with a code sent via text message. Once the challenge is complete, the policy engine will raise your score and allow you through to GitHub Enterprise. This flow would happen whether you are physically within the enterprise network, working from home, or travelling anywhere in the world.
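Here is a rough sketch of that flow in Python, going from the raw request data to a decision. Everything specific in it is an assumption for illustration: the `AccessRequest` fields, the known IP, the point values, the threshold of 90, and the bonus for passing a challenge. I also assumed an unknown identity is denied outright rather than challenged.

```python
from dataclasses import dataclass
from datetime import datetime

# Hypothetical reference data the policy engine would look up.
VALID_IDENTITIES = {"jdoe"}
KNOWN_IPS = {"203.0.113.10"}
ALLOWED_COUNTRIES = {"US"}
THRESHOLD = 90        # assumed
CHALLENGE_BONUS = 40  # assumed score boost for passing a challenge


@dataclass
class AccessRequest:
    identity: str
    ip: str
    country: str
    timestamp: datetime


def score_request(req: AccessRequest) -> int:
    """Turn the raw request data into a cumulative score."""
    score = 40  # the caller has already confirmed the identity is valid
    if req.ip in KNOWN_IPS:
        score += 20
    if req.country in ALLOWED_COUNTRIES:
        score += 20
    if req.timestamp.weekday() < 5:      # Monday through Friday
        score += 10
    if 8 <= req.timestamp.hour < 18:     # assumed normal working hours
        score += 10
    return score


def enforce(req: AccessRequest, challenge_passed: bool = False) -> str:
    """Return 'deny', 'challenge', or 'allow' for a request."""
    if req.identity not in VALID_IDENTITIES:
        return "deny"
    score = score_request(req)
    if challenge_passed:
        score += CHALLENGE_BONUS
    return "allow" if score >= THRESHOLD else "challenge"


# 1 AM on a Saturday from a known IP in the US.
late_night = AccessRequest("jdoe", "203.0.113.10", "US", datetime(2022, 3, 5, 1, 0))
print(enforce(late_night))                         # first attempt
print(enforce(late_night, challenge_passed=True))  # after the challenge
```

Nothing in `enforce` asks whether the request came from inside the enterprise network, which matches the point that the flow is the same from the office, from home, or on the road.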

How to Deploy Zero Trust Architectures

The short answer - it will be a journey. NIST 800-207 does provide some guidance on how to migrate to a Zero Trust Architecture depending on your current network configuration. One of the big pieces to consider is the policy engine and the administration of the scoring. It is likely that with the initial implementations there will be some issues where traffic is denied when it should have been allowed. We will have to make sure we set expectations as we work through this process.

Based on the NIST documentation, there might be some good first steps for us to start implementing Zero Trust.

- Identify Actors in the Enterprise - these are humans as well as service accounts

- Identify Assets Owned by the Enterprise - includes physical, virtual and data assets (like user accounts)

- Identify Key Processes and Evaluate Risks Associated with Executing Process - find a system that is relatively low risk to the business; cloud-based resources are a typical first step

- Formulating Policies for the Zero Trust Architecture Candidate - find all supporting systems for your candidate and start setting the initial thresholds for score

- Identify Candidate Solutions - we need to pick a solution that matches our existing asset policies and tooling

- Initial Deployment and Monitoring - the initial deployment might limit traffic to only coming from specific locations and might be in report-only mode to allow us to gauge how well our scoring system works

- Expanding the Zero Trust Architecture - with the confidence built in the previous step, expand the criteria for accessing resources and add more resources.
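The report-only mode mentioned in the initial deployment step could look something like the sketch below. The function names, the counter keys, and the threshold of 80 are hypothetical. In report-only mode every request is let through, but we record what the policy would have done so we can see how often legitimate traffic would have been blocked before we turn enforcement on.

```python
import logging
from collections import Counter

log = logging.getLogger("zt.report_only")


def enforce(score: int, threshold: int, report_only: bool, stats: Counter) -> bool:
    """Return True when the request is let through."""
    would_allow = score >= threshold
    stats["would_allow" if would_allow else "would_block"] += 1
    if report_only:
        if not would_allow:
            # Nothing is blocked yet; we only record what the policy would have done.
            log.warning("report-only: score %d is below threshold %d", score, threshold)
        return True
    return would_allow


def would_block_rate(stats: Counter) -> float:
    """Share of requests the policy would have blocked or challenged."""
    total = stats["would_allow"] + stats["would_block"]
    return stats["would_block"] / total if total else 0.0


stats = Counter()
for observed_score in (95, 60, 85, 70):  # made-up scores for illustration
    enforce(observed_score, threshold=80, report_only=True, stats=stats)
print(would_block_rate(stats))
```

If that rate is high for traffic we know is legitimate, the weights or the threshold need another look before moving on to the expansion step.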

Conclusion

Unfortunately, this isn't something we can do with the snap of a finger. This will take time to implement and gain confidence in. One of the hardest things to prove out here will be return on investment. Will we ever be the victim of an insider threat? What will the cost of a breach be? Is it worth spending money to try to prevent something that we don't know will happen?

I think it is.

We might take a different perspective on return on investment though. If we look at this like insurance, we are paying in smaller increments now to cover the possibility of a breach later. The determination of return on investment will depend on the typical actuarial items: the likelihood of the event and the potential cost of the event.
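One common way to put numbers on that is annualized loss expectancy: the cost of a single event multiplied by how many times per year you expect it to happen. The sketch below uses that formula; every dollar figure and probability in it is made up purely to show the arithmetic and is not an estimate for any real organization.

```python
def annualized_loss_expectancy(single_loss: float, annual_rate: float) -> float:
    """Expected yearly loss = cost of one event x expected events per year."""
    return single_loss * annual_rate


def net_annual_benefit(
    single_loss: float, rate_before: float, rate_after: float, annual_cost: float
) -> float:
    """Loss avoided per year by the control, minus what the control costs per year."""
    avoided = annualized_loss_expectancy(single_loss, rate_before) - \
        annualized_loss_expectancy(single_loss, rate_after)
    return avoided - annual_cost


# Hypothetical inputs: a $2M breach, 10% yearly likelihood today,
# 2% with Zero Trust in place, and $100K per year to run it.
print(net_annual_benefit(2_000_000, 0.10, 0.02, 100_000))
```

A positive result means the expected loss avoided is larger than the yearly spend. The hard part, of course, is getting likelihood and cost estimates that anyone believes.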

References:

- Zeroing in on Zero Trust Podcast - https://open.spotify.com/episode/28o5axMB5tjSUzms03bmyk?go=1&sp_cid=90f1110ab844846f3afe849e3c5e5621&t=3&utm_source=embed_player_p&utm_medium=desktop&nd=1

- Zero Trust Security Explained: Principles of the Zero Trust Model - https://www.crowdstrike.com/cybersecurity-101/zero-trust-security/

- NIST 800-207 - https://csrc.nist.gov/publications/detail/sp/800-207/final

- No More Chewy Centers: Introducing The Zero Trust Model Of Information Security - https://media.paloaltonetworks.com/documents/Forrester-No-More-Chewy-Centers.pdf
