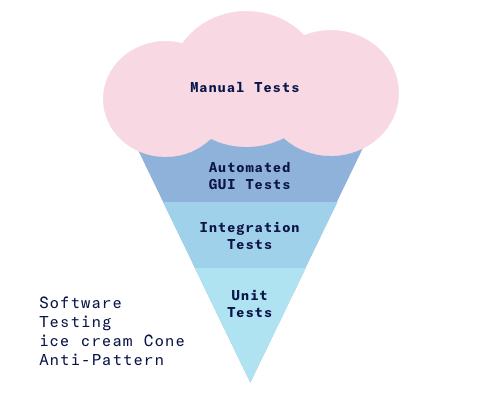

Test pyramid

Since the release of Puppeteer, end-to-end testing has become a fast and reliable way to test features. Most things that you can do manually in the browser can be done with Puppeteer. What is more, headless Chrome reduces the performance overhead, and native access to the DevTools Protocol makes Puppeteer awesome. Imagine: each time we develop front-end code we only check the final view in the browser, and without TDD we end up facing the Test Pyramid Ice Cream anti-pattern.

But we like ice cream, so why would we need a trade-off? I will show you how to upgrade your sources with tests, no matter what engine you use, with confidence that your application works as expected, because Puppeteer will check the features instead of you.

Setup

I have put together complete step-by-step instructions in a README.md, based on a simple project that I forked and upgraded with feature-rich tests to show off. If you have a project of your own, please:

1) Install dependencies in your root

npm i puppeteer mocha puppeteer-to-istanbul nyc -D

2) Expose your instance on an endpoint (my lightweight solution for *.html files is http-server)

3) Create a test directory and fill {yourFeature}_test.js with the following template (note the before and after hooks), then extend it with your project-specific selectors and behaviors:

    const puppeteer = require('puppeteer');
    const pti = require('puppeteer-to-istanbul');
    const assert = require('assert');

    /**
     * ./test/script_test.js
     * @name Feature testing
     * @desc Create Chrome instance and interact with page.
     */

    let browser;
    let page;

    describe('Feature one...', () => {
      before(async () => {
        // Create browser instance
        browser = await puppeteer.launch();
        page = await browser.newPage();
        await page.setViewport({ width: 1280, height: 800 });
        // Enable both JavaScript and CSS coverage
        await Promise.all([
          page.coverage.startJSCoverage(),
          page.coverage.startCSSCoverage()
        ]);
        // Endpoint to emulate feature-isolated environment
        await page.goto('http://localhost:8080', { waitUntil: 'networkidle2' });
      });

      // First test suite
      describe('Visual regress', () => {
        it('title contain `Some Title`', async () => {
          // Setup
          const expected = 'Some Title';
          // Execute
          const title = await page.title();
          // Verify
          assert.strictEqual(title, expected);
        }).timeout(50000);
      });

      // Second test suite
      describe('E2E testing', () => {
        it('Some button clickable', async () => {
          // Setup
          const expected = true;
          const expectedCssLocator = '#someIdSelector';
          let actual;
          // Execute
          // waitForSelector rejects on timeout, so a missing element fails the test here
          const element = await page.waitForSelector(expectedCssLocator);
          if (element != null) {
            await page.click(expectedCssLocator);
            actual = true;
          } else {
            actual = false;
          }
          // Verify
          assert.strictEqual(actual, expected);
        }).timeout(50000);
      });

      // Save coverage and close browser context
      after(async () => {
        // Disable both JavaScript and CSS coverage
        const jsCoverage = await page.coverage.stopJSCoverage();
        await page.coverage.stopCSSCoverage();
        let totalBytes = 0;
        let usedBytes = 0;
        for (const entry of jsCoverage) {
          totalBytes += entry.text.length;
          console.log(`js fileName covered: ${entry.url}`);
          for (const range of entry.ranges) {
            usedBytes += range.end - range.start - 1;
          }
        }
        // Log original byte-based coverage
        console.log(`Bytes used: ${usedBytes / totalBytes * 100}%`);
        // Write coverage in Istanbul format for nyc
        pti.write(jsCoverage);
        // Close browser instance
        await browser.close();
      });
    });
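The byte-based calculation in the after hook can be tried out without launching a browser. The following is only a minimal sketch: `summarizeCoverage` is a hypothetical helper name, not part of Puppeteer, and the sample entry is hand-written data that merely imitates the shape returned by `page.coverage.stopJSCoverage()` (`url`, `text`, `ranges`). It uses the same per-range formula as the hook and adds a guard for the case where no scripts were collected.

```javascript
// Hypothetical helper (not part of Puppeteer): summarize byte-based coverage
// from entries shaped like the result of page.coverage.stopJSCoverage().
function summarizeCoverage(entries) {
  let totalBytes = 0;
  let usedBytes = 0;
  for (const entry of entries) {
    totalBytes += entry.text.length;
    for (const range of entry.ranges) {
      // Same per-range formula as the after hook in the template
      usedBytes += range.end - range.start - 1;
    }
  }
  // Guard against division by zero when no scripts were collected
  const percent = totalBytes === 0 ? 0 : (usedBytes / totalBytes) * 100;
  return { totalBytes, usedBytes, percent };
}

// Usage with hand-written sample data instead of a real browser session
const sample = [
  {
    url: 'http://localhost:8080/script.js', // assumed URL, for illustration only
    text: 'abcdefgh',
    ranges: [{ start: 0, end: 3 }, { start: 4, end: 7 }]
  }
];
console.log(summarizeCoverage(sample));
```

Keeping the arithmetic in a plain function like this makes it possible to unit test the reporting logic separately from the browser session, if you choose to extract it.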

Execute

- Run the tests described above against the scripts on your endpoint with the `mocha` command.
- Get the coverage collected during the test run with `nyc report`.
- I suggest you add the following scripts to your `package.json`, which makes it super easy to run tasks like `npm test` or `npm run coverage`:

    "scripts": {
      "pretest": "rm -rf coverage && rm -rf .nyc_output",
      "test": "mocha --timeout 5000 \"test/**/*_test.js\"",
      "server": "http-server ./public",
      "coverage": "nyc report --reporter=html"
    },

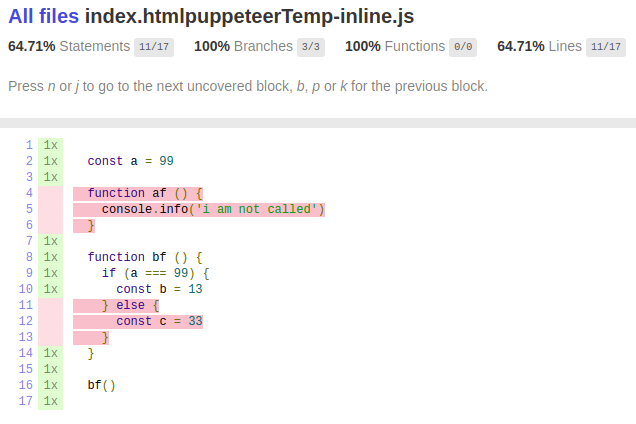

Coverage

In my project, coverage is about 62%.

We can report it as HTML and take a closer look.

You can see that Branches and Functions are both reported as 100% covered. While testing the Puppeteer coverage feature (which works like the Coverage tool in DevTools), I filed this bug:

[Bug] Incorrect branches hits coverage statistic #22

Unit vs E2E testing wars

For anyone with enough passion to research this without getting bored, here is what I think: we should pay more attention to testing frameworks like Mocha, which can be used to write tests at every level from unit to acceptance, than to the distinction between unit and end-to-end tests themselves. In my view, it does not matter which kind of test you write as long as your codebase is covered. Times have changed. Now that coverage is available, other tools such as the traceability matrix look ugly as a measure of quality, because stakeholders still have to take the tester's word for it. But do pay attention to the fourth of Google's best practices for code coverage: use coverage information pragmatically.

Contribute

I strongly recommend taking some time to review my github-working-draft project before you get stuck.

I would appreciate any collaboration and feedback. Feel free to reach out to me with any questions.

![Cover image for [Puppeteer][Mocha] Upgrade your implementation code with coverage.](https://media2.dev.to/dynamic/image/width=1000,height=420,fit=cover,gravity=auto,format=auto/https%3A%2F%2Fthepracticaldev.s3.amazonaws.com%2Fi%2Fbgr2lxqgiv2ohmvk564d.png)

Top comments (1)

What if we collect code coverage via gulp? I get an error when doing so. When I run the unit and e2e tests via gulp, all test cases pass, but one throws an error when it tries to collect code coverage, and the server sends a 500 status code instead of 200.

Error message: *Error: Evaluation failed: ReferenceError: cov_2os11p00jv is not defined at pptr://puppeteer_evaluation_script:1:16*

Any suggestions are appreciated.