Introduction

As the world settles into the era of microservices, a fundamental challenge that haunts every architect is choosing the right technology stack for the system. The sheer number of alternatives, and of ways to combine them, is enough to leave anyone struggling.

Today’s challenge is not limited to identifying one good technology or tool for the task. We are not choosing between a good tool and a bad one. The real task is to pick an appropriate subset from a vast range of excellent tools and technologies: a subset whose members complement each other and work well in unison.

The enterprise architect must shortlist a collection of components that form tested and trusted architecture patterns. The chosen tools must be popular enough that trained developers are easy to find. At the same time, they should be free from legacy baggage, so that they do not carry forward the problems of the past.

Everyone wants to do more using fewer technologies and tools. Everyone wants to follow tested patterns where the tools are known to go well together. At the same time, everyone wants to stay on the cutting edge! This is more difficult than it sounds.

The Redis Stack

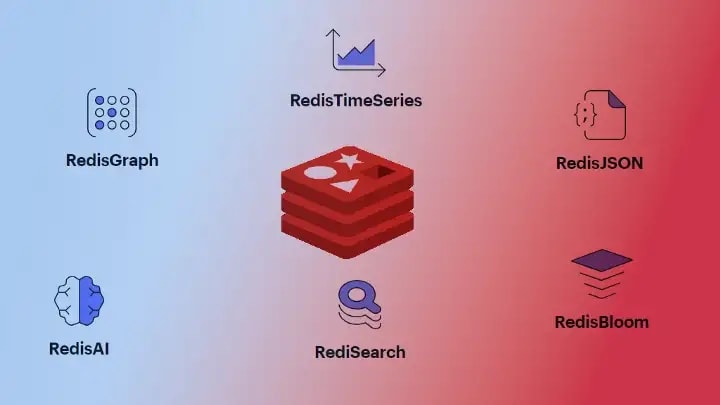

Redis simplifies this problem to a great extent. Redis is now a standard caching component in most enterprises, and the cache is complemented by a multitude of other capabilities: Redis Streams, Redis Pub/Sub, RediSearch, RedisAI, RedisJSON, RedisGraph, RedisTimeSeries, and many more.

To make the architect’s life simpler, Redis came up with a complete stack of tools that covers the major components of a microservice architecture. Because these tools are designed to work together, the risk of incompatibility is low. The risk of skill gaps is low too, since using Redis everywhere means a single, simpler learning curve.

The Redis Stack (RedisJSON, RediSearch, RedisTimeSeries, RedisGraph, and RedisBloom) significantly reduces the technology chaos. Along with these, the Pub/Sub and Stream data structures enable elegant asynchronous communication among your microservices.

This blog will give you a glimpse of each of the above, one by one. Before that, let us get the setup in place.

Installation

Don’t install! Gone are the days when we developed and tested our code against databases installed on our laptops. Welcome to the cloud. With a Redis Cloud account, a free database instance is ready in a few clicks. Why clutter your laptop?

Creating the Redis Stack

Visit Redis Cloud. If you don’t already have an account, create one; signing up with Google or GitHub saves you from remembering yet another password.

- Once you log in, click on Create subscription. Then accept all the defaults to create a new free subscription. You can choose the cloud and region of your preference. I prefer to use AWS, hosted in the us-east-1 region.

- Once you have the subscription, click “New database” to create a new database instance. Make sure you choose Redis Stack as the database type. Then accept all the defaults and click “Activate database” to activate the database instance.

- Give it a few minutes to set up, and the database is ready for you to start learning. Note the database URL (public endpoint) and the database password, for example in a notepad. Then log out of the web console.

- Download and install RedisInsight. Connect it to the database instance created above, using the public endpoint and password you noted.

Redis JSON

Data is the core of any enterprise and data is JSON (most of the time). Redis JSON is the database module of the Redis Stack that lets us work with JSON data. We can write, persist and read JSON documents.

RedisJSON provides full support for the JSON standard, including its intricacies. We can select and update elements inside documents using JSONPath syntax (see the JSONPath syntax reference).

Internally, documents are stored as binary data in a tree structure. That allows very fast access to sub-elements, something we take for granted when working with Redis. Above all, we get typed atomic operations for all JSON value types.

Let us check out how this works. Open the workbench in the RedisInsight desktop tool that we configured above.

Example

Try out the following commands in the Workbench:

> JSON.SET example $ '{"key1": "hello", "key2": "world"}'

"OK"

It responds with a simple “OK”, which means that the command is accepted and processed. The command has several components.

As one might guess, JSON is the module and SET is the command in that module. Next, “example” is the key under which we store the value. The “$” has a special significance that we will discuss below. Finally, we provide a valid JSON document, which is saved into the database.

It is equally easy to get back this value:

> JSON.GET example $

"[{\"key1\":\"hello\",\"key2\":\"world\"}]"

This invokes the GET command of the JSON module to read the value stored under “example”.

That funny “$” is still hanging around, so let us look at it now. It is the JSONPath into the value, and “$” alone means the root, so we get the entire JSON value. We can also ask for just a part of it:

> JSON.GET example $.key1

"[\"hello\"]"

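This time, we ask for just a part of the JSON value, using the path “$.key1”. Notice that, here and above, the result is wrapped in an array: a JSONPath expression can match several elements, so the reply lists every match. We can use the same path syntax to update a value. For example: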

> JSON.SET example $.key2 '"redis"'

"OK"

> JSON.GET example $

"[{\"key1\":\"hello\",\"key2\":\"redis\"}]"

This time, we used “$.key2” as the JSON Path when we set the value. So it does not overwrite the entire value, just updates that part of the JSON.
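To make the path semantics concrete, here is a toy in-memory model of the two commands. It is not how RedisJSON works internally (RedisJSON keeps a binary tree); `store`, `json_set`, and `json_get` are hypothetical names, and only the dotted subset of JSONPath used above is supported.

```python
import json

# Hypothetical in-memory stand-in for a JSON store. It supports only the
# dotted subset of JSONPath used above: "$", "$.key", "$.a.b", ...
store: dict = {}


def _parts(path: str) -> list:
    """Split "$.key2" into ["key2"]; the root path "$" gives []."""
    if path == "$":
        return []
    if not path.startswith("$."):
        raise ValueError(f"unsupported path: {path}")
    return path[2:].split(".")


def json_set(key: str, path: str, value_json: str) -> str:
    """Write value_json at path; "$" replaces the whole document."""
    value = json.loads(value_json)
    parts = _parts(path)
    if not parts:
        store[key] = value
        return "OK"
    node = store[key]
    for part in parts[:-1]:
        node = node[part]  # walk down to the parent of the target element
    node[parts[-1]] = value  # overwrite only the addressed element
    return "OK"


def json_get(key: str, path: str) -> str:
    """Read the element at path, wrapped in an array like a JSONPath reply."""
    node = store[key]
    for part in _parts(path):
        node = node[part]
    return json.dumps([node], separators=(",", ":"))
```

Writing the document with `json_set("example", "$", ...)`, updating it with `json_set("example", "$.key2", '"redis"')`, and reading it back with `json_get("example", "$")` yields `[{"key1":"hello","key2":"redis"}]`, the same shape as the Workbench reply above: only `key2` changed.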

This was just a glimpse. We can persist and manage complex JSON documents at very high speed. And of course, Redis has a rich set of clients and SDKs for most programming languages. Check out the documentation for the list of commands and the popular SDKs.

Redis Search

Search is a growing necessity for every application. As data accumulates in high volume and at high velocity, our data pipeline must be able to extract the right information and insights from it. Search is one of the primary tools for that.

Speed is built into the core of RediSearch. The secret lies in two data structures: an inverted index, which maps each term to the documents that contain it, and a trie (prefix tree) of the indexed terms, which enables very fast prefix and fuzzy matching.
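The following sketch shows the two ideas in miniature. It is a toy model, not RediSearch's code: `TinySearch` is a hypothetical class, tokenization is a plain lowercase split, and multi-term queries are treated as an AND of terms.

```python
from collections import defaultdict


class TrieNode:
    def __init__(self) -> None:
        self.children: dict = {}
        self.is_term = False  # True if a complete indexed term ends here


class TinySearch:
    """Hypothetical toy: inverted index for lookups, trie for prefixes."""

    def __init__(self) -> None:
        self.index = defaultdict(set)  # term -> set of document ids
        self.root = TrieNode()

    def add(self, doc_id: str, text: str) -> None:
        for term in text.lower().split():
            self.index[term].add(doc_id)  # update the posting list
            node = self.root
            for ch in term:  # insert the term into the trie
                node = node.children.setdefault(ch, TrieNode())
            node.is_term = True

    def search(self, query: str) -> set:
        """Ids of documents containing every query term."""
        postings = [self.index.get(t, set()) for t in query.lower().split()]
        return set.intersection(*postings) if postings else set()

    def complete(self, prefix: str) -> list:
        """All indexed terms starting with prefix, sorted (auto-complete)."""
        node = self.root
        for ch in prefix.lower():
            if ch not in node.children:
                return []
            node = node.children[ch]
        results = []
        stack = [(node, prefix.lower())]
        while stack:  # depth-first walk below the prefix node
            current, word = stack.pop()
            if current.is_term:
                results.append(word)
            for ch, child in current.children.items():
                stack.append((child, word + ch))
        return sorted(results)
```

A query never scans the documents: it intersects precomputed posting lists, and a prefix lookup walks only as deep as the prefix before collecting the terms below it.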

Example

Let us see RediSearch in action using a few commands:

> FT.CREATE myIdx ON HASH PREFIX 1 doc: SCHEMA title TEXT WEIGHT 5.0 body TEXT url TEXT

"OK"

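This creates an index named myIdx over every hash whose key starts with the prefix doc:, with the title field weighted more heavily than the others. Any matching hash we write from now on is indexed automatically, so we can simply add a document using HSET.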

> HSET doc:1 title "hello world" body "Learning Redis with Vikas" url "http://solegaonkar.com"

(integer) 3

Because the hash is indexed as it is saved, we can search for it right away with FT.SEARCH:

> FT.SEARCH myIdx "hello world" LIMIT 0 10

1) "1"

2) "doc:1"

3) 1) "title"

2) "hello world"

3) "body"

4) "Learning Redis with Vikas"

5) "url"

6) "http://solegaonkar.com"

We can also enable auto-suggest and fuzzy search, which give us good search results at very high speed. Check out this blog for a deep dive into Redis Search.
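Fuzzy search in RediSearch is based on Levenshtein distance (in the query syntax, wrapping a term in `%` allows a distance of 1). The brute-force sketch below only illustrates what "distance 1" means; `fuzzy_terms` is a hypothetical helper that scans a whole vocabulary, whereas a real engine prunes the search using its trie.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance: the fewest insertions, deletions, or substitutions."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


def fuzzy_terms(query: str, vocabulary: list, max_distance: int = 1) -> list:
    """Vocabulary terms within max_distance edits of the query term."""
    return [t for t in vocabulary if levenshtein(query, t) <= max_distance]
```

With the vocabulary `["hello", "world", "help", "redis"]`, the misspelled query `"helo"` matches both `"hello"` (one insertion) and `"help"` (one substitution).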

Redis TimeSeries

RedisTimeSeries is a Redis module that adds a time series data structure to Redis. It supports high-volume inserts along with low-latency reads. Time is naturally the main query parameter for time series data, and on top of it we can run several types of aggregate queries. Let us check this out in action.

> TS.CREATE sensor RETENTION 86400000

"OK"

This creates a time series with a retention period of one day: RETENTION is given in milliseconds, and 86400000 ms is 24 hours. Time series data is usually not needed beyond some retention time, so specifying it here keeps memory usage in check. The parameter is optional, though. If we skip it, samples are never expired by age and are retained as long as the underlying infrastructure allows.
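A minimal sketch of what retention means, assuming (as the RedisTimeSeries documentation describes it) that a sample's age is measured against the newest timestamp in the series rather than the wall clock. `RetainedSeries` is a hypothetical class; it also assumes timestamps arrive in increasing order and keeps samples exactly at the cutoff, which may differ from Redis's boundary handling.

```python
DAY_MS = 24 * 60 * 60 * 1000  # 86_400_000, the RETENTION value used above


class RetainedSeries:
    """Hypothetical toy series; assumes strictly increasing timestamps."""

    def __init__(self, retention_ms: int = 0) -> None:
        self.retention_ms = retention_ms  # 0 = never expire samples by age
        self.samples: list = []  # (timestamp_ms, value) pairs, oldest first

    def add(self, ts: int, value: float) -> int:
        if self.samples and ts <= self.samples[-1][0]:
            raise ValueError("timestamps must increase in this sketch")
        self.samples.append((ts, value))
        if self.retention_ms > 0:
            cutoff = ts - self.retention_ms  # age relative to the newest sample
            self.samples = [(t, v) for t, v in self.samples if t >= cutoff]
        return ts  # echo the timestamp, as TS.ADD does
```

Adding samples at 0 ms, 1000 ms, and one day plus 500 ms drops the first sample (more than a day older than the newest one) and keeps the other two; with the default `retention_ms=0`, nothing is ever trimmed.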

Next, we can add some data to build a time series.

> TS.ADD sensor * 1

(integer) 1675082148448

> TS.ADD sensor * 2

(integer) 1675082155207

> TS.ADD sensor * 3

(integer) 1675082162470

> TS.ADD sensor * 4

(integer) 1675082167147

> TS.ADD sensor * 5

(integer) 1675082172130

> TS.ADD sensor * 3

(integer) 1675082177637

> TS.ADD sensor * 4

(integer) 1675082182834

> TS.ADD sensor * 5

(integer) 1675082187730

Because we passed * as the timestamp, Redis stamps each sample with the current server time in milliseconds and returns that timestamp. Now we can query the data by timestamp. Here is an example:

> TS.RANGE sensor - + FILTER_BY_TS 1675082172130 1675082182834

1) 1) "1675082172130"

2) "5"

2) 1) "1675082182834"

2) "4"

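The FILTER_BY_TS option returns only the samples whose timestamps exactly match the listed values. That is why the sample at 1675082177637 (value 3), which lies between them, is missing. To get every sample between two timestamps, pass them as the range bounds instead of - and +, as in TS.RANGE sensor 1675082172130 1675082182834. There are several other ways to query the data, such as by labels or by value filters. We can also aggregate or downsample the data so that we process just what we require.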
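The sketch below replays the eight samples above in plain Python. It contrasts an exact-timestamp filter with a true range, and computes per-bucket averages in the spirit of `TS.RANGE sensor - + AGGREGATION avg 10000`. The helpers `ts_range` and `avg_buckets` are hypothetical, and buckets here are aligned to multiples of the bucket size.

```python
# The eight samples added above: (timestamp in ms, value).
SAMPLES = [
    (1675082148448, 1), (1675082155207, 2), (1675082162470, 3),
    (1675082167147, 4), (1675082172130, 5), (1675082177637, 3),
    (1675082182834, 4), (1675082187730, 5),
]


def ts_range(samples, start, end, filter_by_ts=None):
    """Samples with start <= ts <= end, optionally only at the listed timestamps."""
    wanted = set(filter_by_ts) if filter_by_ts is not None else None
    return [
        (t, v) for t, v in samples
        if start <= t <= end and (wanted is None or t in wanted)
    ]


def avg_buckets(samples, bucket_ms):
    """Average value per fixed-size time bucket, keyed by the bucket start."""
    groups: dict = {}
    for t, v in samples:
        bucket = t - t % bucket_ms  # align to the start of the bucket
        groups.setdefault(bucket, []).append(v)
    return {b: sum(vs) / len(vs) for b, vs in sorted(groups.items())}
```

With `float("-inf")` and `float("inf")` standing in for `-` and `+`, the filtered call returns only the two exact matches (values 5 and 4), while `ts_range(SAMPLES, 1675082172130, 1675082182834)` also returns the value 3 in between.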

Check out the detailed list of commands and the available client SDKs on their website.

Redis Graph

The world is gradually moving from relational to document databases, and from document to graph databases, and Redis Stack is ready for you to make this jump. Relational and document databases require us to map real-world data into a form that suits the machine. In the process, we end up discarding information that we feel is not needed at the time. Invariably, as Murphy would have it, that information is needed later on, and that leads to painfully slow queries and slow applications.

A graph is how our mind stores information: as entities and the relationships that connect them. Technically, that means nodes with properties, and relationships that join these nodes. There are several popular graph databases, such as Amazon Neptune and Neo4j. The advantage of using Redis is that we stay within one ecosystem and simplify the development life cycle.

Under the hood, RedisGraph uses GraphBLAS to represent the graph as sparse adjacency matrices. It uses an adaptation of the Property Graph Model, where nodes (vertices) and relationships (edges) have attributes. Nodes can have multiple labels, and each relationship has a relationship type. The database is queried with an extension of openCypher.
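Here is a toy illustration of the sparse-matrix idea, using the MotoGP data from the example that follows. It is not RedisGraph's API or storage format (GraphBLAS uses compressed sparse matrices); each row of the hypothetical `rides` matrix is stored as the set of columns holding a 1, and one traversal step is a boolean vector-matrix product.

```python
# Node id -> (label, name). Only non-zero matrix entries are stored:
# rides[src] is the set of columns dst where rides[src][dst] == 1.
nodes = {
    0: ("Rider", "Valentino Rossi"), 1: ("Team", "Yamaha"),
    2: ("Rider", "Dani Pedrosa"), 3: ("Team", "Honda"),
    4: ("Rider", "Andrea Dovizioso"), 5: ("Team", "Ducati"),
}
rides = {0: {1}, 2: {3}, 4: {5}}


def transpose(matrix: dict) -> dict:
    """Flip every edge so we can walk relationships backwards."""
    result: dict = {}
    for src, dsts in matrix.items():
        for dst in dsts:
            result.setdefault(dst, set()).add(src)
    return result


def hop(frontier: set, matrix: dict) -> set:
    """One traversal step: boolean product of the frontier vector and matrix."""
    out: set = set()
    for node in frontier:
        out |= matrix.get(node, set())
    return out


# MATCH (r:Rider)-[:rides]->(t:Team) WHERE t.name = 'Yamaha' RETURN r.name
yamaha = {i for i, (label, name) in nodes.items() if label == "Team" and name == "Yamaha"}
riders = hop(yamaha, transpose(rides))
print(sorted(nodes[i][1] for i in riders))  # ['Valentino Rossi']
```

Because only the non-zero entries are stored, memory grows with the number of relationships rather than with the square of the number of nodes.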

Let us check out how this works, using small snippets of code:

> GRAPH.QUERY MotoGP "CREATE (:Rider {name:'Valentino Rossi'})-[:rides]->(:Team {name:'Yamaha'}), (:Rider {name:'Dani Pedrosa'})-[:rides]->(:Team {name:'Honda'}), (:Rider {name:'Andrea Dovizioso'})-[:rides]->(:Team {name:'Ducati'})"

1) 1) "Labels added: 2"

2) "Nodes created: 6"

3) "Properties set: 6"

4) "Relationships created: 3"

5) "Cached execution: 0"

6) "Query internal execution time: 13.873922 milliseconds"

As we can guess, the command uses the GRAPH module and the QUERY command inside it. It creates a new key, MotoGP. The query string passed in is an openCypher CREATE clause, which creates the nodes and the relationships between them.

Now that we have some data in the database, we can query it using another OpenCypher command.

> GRAPH.QUERY MotoGP "MATCH (r:Rider)-[:rides]->(t:Team) WHERE t.name = 'Yamaha' RETURN r.name, t.name"

1) 1) "r.name"

2) "t.name"

2) 1) 1) "Valentino Rossi"

2) "Yamaha"

3) 1) "Cached execution: 0"

2) "Query internal execution time: 10.843586 milliseconds"

If you are interested, you can explore openCypher in detail on its reference page, and learn more about RedisGraph and its applications on the website.

Redis Bloom

Life is never just “Yes or No”. A lot of our data comes in the form of statistical probabilities. RedisBloom helps us work with such data: it provides a set of probabilistic data structures that store data compactly and help us process it efficiently.

A Bloom filter is a probabilistic data structure that improves the speed and space utilization of our applications. We can think of it as a set that is probabilistic under the hood. We can add items to the filter and check whether an item exists. The answer is probabilistic, meaning it may occasionally be wrong, although the probability of an error can be kept very low. We can get false positives but never false negatives.

One might wonder why we would want such an obscure data structure. As a simple example, we can place a Bloom filter in front of a cache. Before we query the cache for a key, we pass the key through the filter. If the filter says the key is definitely absent, we know it is a cache miss without touching the cache, which reduces the load on the main cache.

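Internally, a Bloom filter is a bit array. Each added item is passed through several hash functions, and each hash value, reduced modulo the array size, selects a bit to set. Different items can map to the same bits, much as different numbers share a remainder under a modulus, which is why false positives are possible. RedisBloom sizes the bit array and the number of hash functions from the desired error rate and expected capacity (see BF.RESERVE).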
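A minimal Bloom filter in pure Python shows the mechanism. This is not RedisBloom's implementation: the `BloomFilter` class is hypothetical, and deriving k positions from salted SHA-256 digests is just one simple choice. The sizing uses the standard formulas m = -n ln(p) / (ln 2)^2 bits and k = (m / n) ln 2 hash functions, for n expected items and false-positive rate p.

```python
import hashlib
import math


class BloomFilter:
    """Hypothetical minimal Bloom filter (not RedisBloom's implementation)."""

    def __init__(self, capacity: int, error_rate: float) -> None:
        # m = -n * ln(p) / (ln 2)^2 bits, k = (m / n) * ln 2 hash functions
        self.m = math.ceil(-capacity * math.log(error_rate) / math.log(2) ** 2)
        self.k = max(1, round(self.m / capacity * math.log(2)))
        self.bits = bytearray((self.m + 7) // 8)

    def _positions(self, item: str):
        # Salt the hash with its index to get k different bit positions.
        for i in range(self.k):
            digest = hashlib.sha256(f"{i}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.m  # the modulus step

    def add(self, item: str) -> int:
        """Set the item's bits; return 1 if any bit was newly set, else 0."""
        newly_set = 0
        for pos in self._positions(item):
            byte, mask = pos // 8, 1 << (pos % 8)
            if not self.bits[byte] & mask:
                self.bits[byte] |= mask
                newly_set = 1
        return newly_set

    def exists(self, item: str) -> int:
        """1 = possibly present (may be a false positive), 0 = definitely absent."""
        return int(all(self.bits[p // 8] & (1 << (p % 8)) for p in self._positions(item)))
```

For 1,000 expected items at a 1% error rate, this gives m = 9,586 bits (about 1.2 KB) and k = 7 hash functions. A cache front-end would call `exists(key)` first and skip the cache lookup whenever it returns 0.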

Let’s check it out in code.

> BF.ADD bloom 'Hello World'

(integer) 1

> BF.ADD bloom 'Hello Redis'

(integer) 1

> BF.EXISTS bloom "Hello"

(integer) 0

> BF.EXISTS bloom "Hello World"

(integer) 1

As we can see, we can add values to the Bloom filter and then check whether they exist. A reply of 1 means the value is possibly present; it may be a false positive, although the probability is low. A reply of 0 means the value is definitely absent.

Other Datatypes and Modules

The above five together are called the Redis Stack. However, there are a few more useful modules and data types that can enhance our microservices. These are:

- Pub-Sub & Streams

- Redis AI

- Redis Gears

Let us look at them one by one.

Pub-Sub & Streams

Before we dive into these data types, we should understand asynchronous processing in general. Asynchronous processing is a core component of microservice architecture. A mature microservice architecture should be based on asynchronous communication between services. That greatly reduces the risk of cascading failures and ensures healthy decoupling between services.

Synchronous vs. Asynchronous

Synchronous communication is the traditional approach to communication between components. The calling component invokes the target component through direct function calls or SOAP/REST API calls. This is easy to implement; however, it has some problems. The tight coupling between components makes the application harder to evolve, which shortens its useful life. The synchronous caller is held back until the response is available, which degrades performance. And if the target component is unavailable for any reason, the caller cannot do its work, which causes cascading failures.

Asynchronous communication helps us avoid such problems, by decoupling the services and their communication. Of course, asynchronous communication does not guarantee the performance, stability, or extensibility of the application. On the contrary, asynchronous communication can be a disaster if it is not implemented correctly. However, if we do implement it correctly, asynchronous communication could be the key to the application's performance.

There are several patterns for asynchronous communication, and event-driven communication is the most popular among them. There are two principal types of event-driven communication: Pub/Sub and Streaming. It is important to understand the significance of each so that we can use them correctly. Neither is better or worse than the other; they are just different, catering to different requirements.

What is Pub/Sub?

In Pub/Sub, the event source simply “publishes” the event to the world around it. Such an event is transient: it is delivered to whichever “subscribers” are listening at that moment, and then it is gone. We can have multiple subscribers waiting for events from any publisher. Such communication is useful when the time of the event itself is as important as the data it carries.

Note that the subscriber does not care who published the event, and the publisher does not care who subscribes to it. Both work independently, leading to perfect decoupling. However, if there is no active subscriber when an event is published, that event is lost and cannot be retrieved.
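The fire-and-forget behavior can be modelled in a few lines of in-process Python. This is not a Redis client; `Broker` is a hypothetical class. As with PUBLISH, `publish` reports how many subscribers received the message, and nothing is stored for subscribers that join later.

```python
from collections import defaultdict
from typing import Callable


class Broker:
    """Hypothetical in-process model of Pub/Sub delivery semantics."""

    def __init__(self) -> None:
        self.subscribers = defaultdict(list)  # channel -> list of callbacks

    def subscribe(self, channel: str, callback: Callable[[str], None]) -> None:
        self.subscribers[channel].append(callback)

    def publish(self, channel: str, message: str) -> int:
        """Deliver to current subscribers and return how many received it."""
        receivers = self.subscribers.get(channel, [])
        for callback in receivers:
            callback(message)
        return len(receivers)  # nothing is kept: 0 means the message is lost
```

Publishing before anyone subscribes returns 0 and the message is gone for good; a subscriber that joins afterwards sees only the messages published from then on.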

The Redis syntax is very simple. We subscribe to a channel using the SUBSCRIBE command and publish to that channel using PUBLISH. However, we cannot test this in the RedisInsight Workbench; we need to connect with the CLI. Open two command-line clients (for example, two redis-cli sessions). On one, type

> SUBSCRIBE channelName

This will wait for any inputs from that channel. On the other terminal, type

> PUBLISH channelName "message"

As soon as we do this, the message appears in the subscriber terminal. This is asynchronous communication in action. A range of SDKs for different languages lets us use this concept in our applications. Check out the details in the Redis documentation.

What is Streaming?

Streaming, on the other hand, is more durable. The “event source” continuously appends events to an “event stream”. Another service that is interested in these events can “listen” to this stream and “consume” its events. The events are “persisted” in the stream until it is trimmed according to the configured limits. This makes for a sturdy system where listeners can consume events at their own pace.

Is streaming better than Pub/Sub? Not necessarily! Streaming is certainly sturdier. However, it carries an overhead that may not be necessary, and Pub/Sub is lightweight and sometimes a better fit for the requirement. Each pattern has its own value, and it is the architect's job to identify and deploy the appropriate pattern for the use case at hand.

Let us check out some code:

> XADD stream1 * test "Message 1"

"1675102596894-0"

> XADD stream1 * test "Message 2"

"1675102797271-0"

> XADD stream1 * test "Message 3"

"1675102828991-0"

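Here, we have published a few messages to the stream. Each XADD returns the ID of the new entry; with *, Redis generates the ID from the current time in milliseconds plus a sequence number. Next, we can read these messages back: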

> XRANGE stream1 "1675102596894-0" + COUNT 3

1) 1) "1675102596894-0"

2) 1) "test"

2) "Message 1"

2) 1) "1675102797271-0"

2) 1) "test"

2) "Message 2"

3) 1) "1675102828991-0"

2) 1) "test"

2) "Message 3"

Notice that, unlike with Pub/Sub, we were able to read the messages from the stream after they had been written.
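In contrast to the Pub/Sub sketch, a stream keeps its entries, so each reader can remember the last ID it processed and catch up at its own pace. (In Redis, XREAD with a last-seen ID returns the entries after it.) `Stream` and `Consumer` below are hypothetical in-memory classes, not a Redis client, and they use plain increasing integers as IDs instead of Redis's `<milliseconds>-<sequence>` IDs.

```python
class Stream:
    """Hypothetical append-only log; entry IDs are increasing integers."""

    def __init__(self) -> None:
        self.entries: list = []  # (entry_id, fields) pairs, oldest first

    def xadd(self, fields: dict) -> int:
        entry_id = len(self.entries) + 1  # simplified ID scheme, not Redis's
        self.entries.append((entry_id, fields))
        return entry_id

    def read_after(self, last_id: int, count: int) -> list:
        """Up to count entries with an ID greater than last_id, oldest first."""
        newer = [e for e in self.entries if e[0] > last_id]
        return newer[:count]


class Consumer:
    """Reads at its own pace by remembering the last ID it processed."""

    def __init__(self, stream: Stream) -> None:
        self.stream = stream
        self.last_id = 0  # 0 = start from the beginning of the stream

    def poll(self, count: int = 10) -> list:
        batch = self.stream.read_after(self.last_id, count)
        if batch:
            self.last_id = batch[-1][0]  # advance past what we just read
        return [fields for _, fields in batch]
```

After three `xadd` calls, a consumer polling two at a time gets "Message 1" and "Message 2", then "Message 3". A consumer created later still sees every entry, which is exactly what Pub/Sub cannot offer.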

Redis Gears

RedisGears is the Redis engine for data processing. It supports both batch and event-driven processing. We use RedisGears by submitting function code to the Redis instance, where it is executed remotely within Redis. As of Feb 2023, it supports Java and Python code.

This means we save the latency and cost of moving data back and forth between the compute layer and the database.

Redis Gears requires an enterprise version, so we cannot have a detailed demo here.

Redis AI

Nothing is complete without AI!

RedisAI is a module for executing deep learning and machine learning models and managing their data. It is the “workhorse” for serving the model: it provides out-of-the-box support for popular frameworks such as TensorFlow, PyTorch, and ONNX Runtime, and it runs predictions close to the data, with the performance we expect from Redis. RedisAI simplifies both the deployment and the serving of models.
