Problem

Think about this scenario, given a list of strings with number of N, where N is large than 1 million.

Let's implement a data structure to check whether a target string is in given strings.

If N is not so huge, a HashDict could be used to build a dictionary. When N is huge, memory is the key point for this challenge.

Different data structures will give you different facility and benefits.

To properly use the power and accessibility of the data structures you need to

know the trade-offs of using one.

In this case, we need a data structure which optimized with space. This is where bloom filter can be used.

The Concept

Bloom Filter was proposed by Burton Howard Bloom in 1970. The related paper is: Space/time Trade-offs in Hash Coding with Allowable Errors.

It's an efficiency in time and space with a very clever design. The cost for space efficiency is accuracy, we called it as a probabilistic data structure:

- When the returned result is false, it's 100% correct.

- When the returned result is true, it may be wrong.

Why? Let's come up with the details of bloom filter.

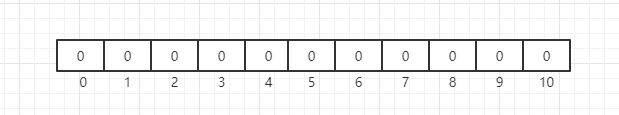

The bottom data structure of the bloom filter is a bit array(like BitMap). Initially, when the represented set is empty, all bits are 0:

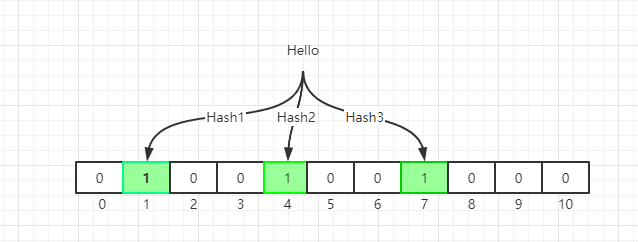

When we initialize the bit array, we use multiple hash functions to compute multiple indexes according the the strings, and set the bit at index positions to 1.

Why we need multiple hash functions? This is the core idea of bloom filter. Think about HashMap, usually we use one hash function to calculate a hash value, but we also store all the keys in HashMap.

Different keys with same hash function may come out with the same hash value, this is called collision.

In bloom filter, we use multiple hash functions to reduce the possibility of collision.

Even the possibility of collision could be very small, it may happen.

For query API, we do similar hash calculations, but when we find one hash bit is not set to 1, we can safely return false. For naive Bloom Filter, the keys added into table can not be deleted. If we want to delete key, a variant named Counting Bloom Filter(CBF) could be used.

We can have some trivial tunning about the data structures, you can modify the false positive rate of your filter. From above description, a larger filter will have less false positives, and more hash functions will introduce more cost in computation. Given a Bloom filter with m bits and k hashing functions with n insertion.

Your false positive rate will be approximately $$ (1-e^{-kn/m})^k $$.

Implementation of C

#include <stdlib.h>

#include <limits.h>

#include <stdarg.h>

typedef unsigned int (*hashfunc_t)(const char *);

typedef struct

{

size_t asize;

unsigned char *a;

size_t nfuncs;

hashfunc_t *funcs;

} BloomFilter;

#define SETBIT(a, n) (a[n / CHAR_BIT] |= (1 << (n % CHAR_BIT)))

#define GETBIT(a, n) (a[n / CHAR_BIT] & (1 << (n % CHAR_BIT)))

BloomFilter *bloom_create(size_t size, size_t nfuncs, ...)

{

BloomFilter *bloom;

va_list l;

int n;

bloom = malloc(sizeof(BloomFilter));

bloom->a = calloc((size + CHAR_BIT - 1) / CHAR_BIT, sizeof(char));

bloom->funcs = (hashfunc_t *)malloc(nfuncs * sizeof(hashfunc_t));

va_start(l, nfuncs);

for (n = 0; n < nfuncs; ++n)

bloom->funcs[n] = va_arg(l, hashfunc_t);

va_end(l);

bloom->nfuncs = nfuncs;

bloom->asize = size;

return bloom;

}

int bloom_add(BloomFilter *bloom, const char *s)

{

size_t n;

for (n = 0; n < bloom->nfuncs; ++n)

SETBIT(bloom->a, bloom->funcs[n](s) % bloom->asize);

return 0;

}

int bloom_check(BloomFilter *bloom, const char *s)

{

size_t n;

for (n = 0; n < bloom->nfuncs; ++n)

{

if (!(GETBIT(bloom->a, bloom->funcs[n](s) % bloom->asize)))

return 0;

}

return 1;

}

unsigned int sax_hash(const char *key)

{

unsigned int h = 0;

while (*key)

h ^= (h << 5) + (h >> 2) + (unsigned char)*key++;

return h;

}

unsigned int sdbm_hash(const char *key)

{

unsigned int h = 0;

while (*key)

h = (unsigned char)*key++ + (h << 6) + (h << 16) - h;

return h;

}

int main() {

BloomFiler* bloom = bloom_create(2500000, 2, sax_hash, sdbm_hash);

...

}

Implementation of Python

Please refer to Pure Python Bloom Filter module. The usage is straight forward:

from bloom_filter import BloomFilter

bloom = BloomFilter(max_elements=10000, error_rate=0.1)

assert "test-key" in bloom is False

bloom.add("test-key")

assert "test-key" in bloom is True

Real cases

- Chromium with a Counting Bloom Filter

- python-bloomfilter is a Scalable Bloom Filter

- LevelDB use double-hashing instead of multiple hash functions

b.com/google/leveldb/blob/master/util/bloom.cc)

Latest comments (0)