ArgoCD helps to deliver applications to Kubernetes using the GitOps approach, where a Git repository is the source of truth: all manifests, configs, and other data are stored in the repository.

It can be used with plain Kubernetes manifests, Kustomize, Ksonnet, Jsonnet, and Helm charts, which is what we use in our project.

ArgoCD runs its controller in the cluster, watches a repository for changes, compares the repository with the resources deployed in the cluster, and synchronizes their states.
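To make the compare-and-sync idea concrete, here is a minimal Python sketch of one reconciliation pass. It is a hypothetical model, not ArgoCD's implementation: it assumes both the state rendered from Git and the live cluster state are plain dicts keyed by (kind, namespace, name), whereas ArgoCD diffs full Kubernetes objects.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Key = Tuple[str, str, str]  # hypothetical key: (kind, namespace, name)


@dataclass
class SyncPlan:
    create: List[Key] = field(default_factory=list)
    update: List[Key] = field(default_factory=list)
    prune: List[Key] = field(default_factory=list)

    @property
    def status(self) -> str:
        # Any pending change means the live state has drifted from Git.
        return "OutOfSync" if (self.create or self.update or self.prune) else "Synced"


def reconcile(desired: Dict[Key, dict], live: Dict[Key, dict]) -> SyncPlan:
    """Compare resources rendered from Git (desired) with the cluster (live)."""
    return SyncPlan(
        create=sorted(k for k in desired if k not in live),
        update=sorted(k for k in desired if k in live and desired[k] != live[k]),
        prune=sorted(k for k in live if k not in desired),
    )


if __name__ == "__main__":
    git = {("Deployment", "default", "guestbook-ui"): {"replicas": 1},
           ("Service", "default", "guestbook-ui"): {"port": 80}}
    cluster = {("Deployment", "default", "guestbook-ui"): {"replicas": 3}}
    plan = reconcile(git, cluster)
    print(plan.status, plan.create, plan.update)
```

Note that in ArgoCD itself deleting extra resources (pruning) is opt-in; the sketch only reports them.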

Some other really useful features: SSO with SAML, so we can integrate it with our Okta; deployments to multiple clusters; Kubernetes RBAC support; a great WebUI and CLI; webhook integrations with GitHub, GitLab, and others; Prometheus metrics out of the box; and awesome documentation.

I’m planning to use ArgoCD to replace our current deployment process built on Jenkins and Helm, described in the Helm: step-by-step chart creation and deployment from Jenkins post (in Russian).

Contents

- Components

- ArgoCD CLI installation

- Running ArgoCD in Kubernetes

- LoadBalancer, SSL, and DNS

- ArgoCD SSL: ERR_TOO_MANY_REDIRECTS

- ArgoCD: deploy from a GitHub repository

- ArgoCD: ComparisonError failed to load initial state of resource

Components

ArgoCD consists of three main components: the API server, the Repository Server, and the Application Controller.

- API server (pod: argocd-server): controls the whole ArgoCD instance and all its operations, handles authentication, and manages access to secrets, which are stored as Kubernetes Secrets

- Repository Server (pod: argocd-repo-server): stores and synchronizes data from the configured Git repositories and generates Kubernetes manifests

- Application Controller (pod: argocd-application-controller): monitors applications in the Kubernetes cluster, brings them to the state described in the repository, and runs the PreSync, Sync, and PostSync hooks

ArgoCD CLI installation

On macOS:

$ brew install argocd

On Linux, from the GitHub repository:

$ VERSION=$(curl --silent "https://api.github.com/repos/argoproj/argo-cd/releases/latest" | grep '"tag_name"' | sed -E 's/.*"([^"]+)".*/\1/')

$ sudo curl -sSL -o /usr/local/bin/argocd https://github.com/argoproj/argo-cd/releases/download/$VERSION/argocd-linux-amd64

$ sudo chmod +x /usr/local/bin/argocd
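The VERSION pipeline above only pulls the tag_name field out of the GitHub releases API response. If you prefer not to parse JSON with grep and sed, a Python sketch doing the same with the standard library could look like this; the asset name argocd-linux-amd64 comes from the command above, while the function names are illustrative.

```python
import json


def latest_tag(release_json: str) -> str:
    """Extract the release tag (for example "v1.7.9") from the GitHub API response."""
    return json.loads(release_json)["tag_name"]


def download_url(tag: str, asset: str = "argocd-linux-amd64") -> str:
    """Build the release asset URL used by the curl command above."""
    return f"https://github.com/argoproj/argo-cd/releases/download/{tag}/{asset}"
```

For example, a script could call download_url(latest_tag(sys.stdin.read())) on the output of curl --silent https://api.github.com/repos/argoproj/argo-cd/releases/latest.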

Check it:

$ argocd version

argocd: v1.7.9+f6dc8c3

BuildDate: 2020-11-17T23:18:20Z

GitCommit: f6dc8c389a00d08254f66af78d0cae1fdecf7484

GitTreeState: clean

GoVersion: go1.14.12

Compiler: gc

Platform: linux/amd64

Running ArgoCD in Kubernetes

Now let’s spin up an ArgoCD instance. We can use the manifest from the documentation, which creates all the necessary resources: CRDs, ServiceAccounts, RBAC roles and bindings, ConfigMaps, Secrets, Services, and Deployments.

I’m pretty sure there is an existing Helm chart for ArgoCD, but this time let’s use the manifest as described in the Getting Started guide.

The documentation suggests using the argocd namespace, which is simpler, but we are not looking for simplicity, so let’s create our own namespace:

$ kubectl create namespace dev-1-devops-argocd-ns

namespace/dev-1-devops-argocd-ns created

Deploy resources:

$ kubectl apply -n dev-1-devops-argocd-ns -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml
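To confirm that the three components described above are up, you can look at the app.kubernetes.io/name label that install.yaml puts on their pods. A minimal Python sketch, assuming you feed it the JSON printed by kubectl -n dev-1-devops-argocd-ns get pods -o json; the function name and the Running-phase check are illustrative choices.

```python
import json
from typing import List

# Label values install.yaml uses for the three main ArgoCD components.
COMPONENTS = ("argocd-server", "argocd-repo-server", "argocd-application-controller")


def missing_components(pods_json: str) -> List[str]:
    """Return the components that have no pod in the Running phase."""
    running = set()
    for pod in json.loads(pods_json).get("items", []):
        labels = pod.get("metadata", {}).get("labels", {})
        if pod.get("status", {}).get("phase") == "Running":
            running.add(labels.get("app.kubernetes.io/name"))
    return [name for name in COMPONENTS if name not in running]
```

Other pods from the manifest, such as Redis and Dex, are simply ignored by this check.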

Edit the argocd-server Service: change its type to LoadBalancer to make the WebUI accessible from the Internet:

$ kubectl -n dev-1-devops-argocd-ns patch svc argocd-server -p '{"spec": {"type": "LoadBalancer"}}'

service/argocd-server patched
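kubectl patch uses a strategic merge patch by default; for a scalar field like spec.type it behaves like a plain JSON merge patch (RFC 7386), which replaces only the keys present in the patch and leaves everything else, such as the ports, untouched. A minimal Python sketch of the RFC 7386 merge rules, applied to a trimmed-down, hypothetical version of the argocd-server Service:

```python
import copy
import json
from typing import Any


def merge_patch(target: Any, patch: Any) -> Any:
    """Apply an RFC 7386 JSON merge patch and return a new object."""
    if not isinstance(patch, dict):
        return copy.deepcopy(patch)  # non-objects replace the target wholesale
    result = copy.deepcopy(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            result.pop(key, None)  # null deletes the key
        else:
            result[key] = merge_patch(result.get(key), value)
    return result


service = {
    "metadata": {"name": "argocd-server"},
    "spec": {"type": "ClusterIP", "ports": [{"name": "http", "port": 80}]},
}
# Same payload as the -p argument of the kubectl command above.
patch = json.loads('{"spec": {"type": "LoadBalancer"}}')
patched = merge_patch(service, patch)
print(patched["spec"]["type"], patched["spec"]["ports"])
```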

Find its URL:

$ kubectl -n dev-1-devops-argocd-ns get svc argocd-server

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

argocd-server LoadBalancer 172.20.142.44 ada***585.us-east-2.elb.amazonaws.com 80:32397/TCP,443:31693/TCP 4m21s

The initial password for the admin user is generated automatically and set to the name of the argocd-server pod. Get it with the following command:

$ kubectl -n dev-1-devops-argocd-ns get pods -l app.kubernetes.io/name=argocd-server -o name | cut -d'/' -f 2

argocd-server-794857c8fb-xqgmv

Log in with the CLI, ignoring the certificate warning for now:

$ argocd login ada***585.us-east-2.elb.amazonaws.com

WARNING: server certificate had error: x509: certificate is valid for localhost, argocd-server, argocd-server.dev-1-devops-argocd-ns, argocd-server.dev-1-devops-argocd-ns.svc, argocd-server.dev-1-devops-argocd-ns.svc.cluster.local, not ada***585.us-east-2.elb.amazonaws.com. Proceed insecurely (y/n)? y

Username: admin

Password:

'admin' logged in successfully

Context 'ada***585.us-east-2.elb.amazonaws.com' updated

Change the password:

$ argocd account update-password

*** Enter current password:

*** Enter new password:

*** Confirm new password:

Password updated

Open the WebUI, ignore the SSL warning again (we will fix it in a moment), and log in:

LoadBalancer, SSL, and DNS

Cool, all the services are running. Now let’s configure a DNS name and an SSL certificate.

AWS ALB and Classic ELB in HTTP mode did not support gRPC (see AWS Application Load Balancers (ALBs) And Classic ELB (HTTP Mode)), so we cannot use the ALB Ingress Controller here.

Let’s keep the Service with the LoadBalancer type as we set it above: on AWS, it creates a Classic Load Balancer.

Issue an SSL certificate with AWS Certificate Manager in the same region where the cluster and the load balancer are created, and save its ARN.
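The certificate's region is encoded in its ARN (the fourth colon-separated field), and a Classic ELB hostname ends with <region>.elb.amazonaws.com, as in the kubectl get svc output above. A small Python sketch that compares the two before deploying; it only parses strings, does not call AWS, assumes the standard aws partition hostnames, and its function names are hypothetical.

```python
def arn_region(arn: str) -> str:
    """ARNs look like arn:partition:service:region:account:resource."""
    parts = arn.split(":", 5)
    if len(parts) < 6 or parts[0] != "arn":
        raise ValueError(f"not an ARN: {arn!r}")
    return parts[3]


def elb_region(hostname: str) -> str:
    """Classic ELB hostnames end with <region>.elb.amazonaws.com."""
    labels = hostname.split(".")
    if len(labels) < 5 or labels[-3:] != ["elb", "amazonaws", "com"]:
        raise ValueError(f"unexpected ELB hostname: {hostname!r}")
    return labels[-4]


def same_region(cert_arn: str, elb_hostname: str) -> bool:
    """True if the ACM certificate can be attached to this ELB region-wise."""
    return arn_region(cert_arn) == elb_region(elb_hostname)
```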

Download the manifest file:

$ wget https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

Find the argocd-server Service:

---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: server
    app.kubernetes.io/name: argocd-server
    app.kubernetes.io/part-of: argocd
  name: argocd-server
spec:
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
  - name: https
    port: 443
    protocol: TCP
    targetPort: 8080
  selector:
    app.kubernetes.io/name: argocd-server

Add the service.beta.kubernetes.io/aws-load-balancer-ssl-cert annotation with the certificate ARN, set spec.type to LoadBalancer, and restrict access with loadBalancerSourceRanges:

---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: server
    app.kubernetes.io/name: argocd-server
    app.kubernetes.io/part-of: argocd
  name: argocd-server
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-ssl-cert: "arn:aws:acm:us-east-1:534***385:certificate/ddaf55b0-***-53d57c5ca706"
spec:
  type: LoadBalancer
  loadBalancerSourceRanges:
  - "31.***.***.117/32"
  - "194.***.***.24/29"
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
  - name: https
    port: 443
    protocol: TCP
    targetPort: 8080
  selector:
    app.kubernetes.io/name: argocd-server
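The same three changes can also be applied programmatically, which is handy if you re-download install.yaml on upgrades. A minimal Python sketch, assuming the Service document has already been parsed into a dict (YAML parsing needs a third-party library and is outside this sketch); the function name is hypothetical. It validates the source ranges with the standard ipaddress module, so a typo in a CIDR fails before kubectl apply.

```python
import copy
import ipaddress
from typing import Iterable

SSL_CERT_ANNOTATION = "service.beta.kubernetes.io/aws-load-balancer-ssl-cert"


def expose_with_ssl(service: dict, cert_arn: str, source_ranges: Iterable[str]) -> dict:
    """Return a copy of the Service with the SSL annotation, LoadBalancer type and source ranges."""
    ranges = []
    for cidr in source_ranges:
        # strict=True rejects host bits outside the prefix, e.g. "10.0.0.1/24".
        ranges.append(str(ipaddress.ip_network(cidr, strict=True)))
    svc = copy.deepcopy(service)
    svc.setdefault("metadata", {}).setdefault("annotations", {})[SSL_CERT_ANNOTATION] = cert_arn
    spec = svc.setdefault("spec", {})
    spec["type"] = "LoadBalancer"
    spec["loadBalancerSourceRanges"] = ranges
    return svc
```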

Deploy it:

$ kubectl -n dev-1-devops-argocd-ns apply -f install.yaml

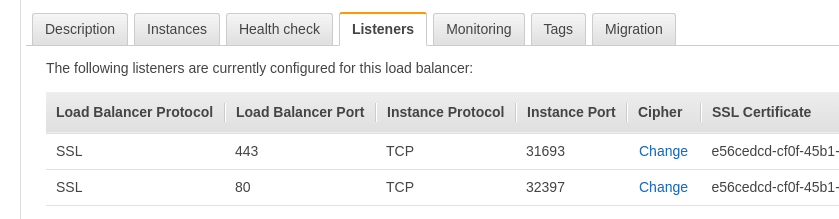

Check the ELB’s SSL:

Create a DNS record:

Open the URL and get the ERR_TOO_MANY_REDIRECTS error:

ArgoCD SSL: ERR_TOO_MANY_REDIRECTS

Google it and find this issue: https://github.com/argoproj/argo-cd/issues/2953.

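The ELB terminates SSL and forwards plain HTTP to argocd-server, which by default redirects HTTP requests to HTTPS, so the browser ends up in a redirect loop. Go back to install.yaml and add the --insecure flag to the argocd-server Deployment, so the server stops redirecting and leaves SSL to the ELB: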

---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/component: server
    app.kubernetes.io/name: argocd-server
    app.kubernetes.io/part-of: argocd
  name: argocd-server
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: argocd-server
  template:
    metadata:
      labels:
        app.kubernetes.io/name: argocd-server
    spec:
      containers:
      - command:
        - argocd-server
        - --staticassets
        - /shared/app
        - --insecure
        ...
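The flag can be added in code as well. A minimal Python sketch, assuming the Deployment document is already parsed into a dict and that its container is named argocd-server, as in install.yaml; the helper name is hypothetical. It is idempotent, so running it on an already patched manifest changes nothing.

```python
import copy


def with_insecure_flag(deployment: dict, container: str = "argocd-server") -> dict:
    """Return a copy of the Deployment whose argocd-server command includes --insecure."""
    result = copy.deepcopy(deployment)
    for c in result["spec"]["template"]["spec"]["containers"]:
        command = c.get("command", [])
        # Only touch the named container, and never add the flag twice.
        if c.get("name") == container and "--insecure" not in command:
            c["command"] = command + ["--insecure"]
    return result
```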

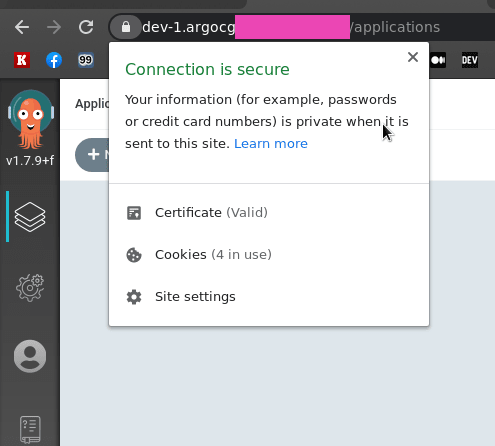

Deploy it again and check:

Okay, we are done here.

ArgoCD: deploy from a GitHub repository

Let’s proceed with the example from the Getting Started guide; Helm deployments will be covered in the next part.

Click New App, specify a name, and set Project to default:

For the Git source, set the https://github.com/argoproj/argocd-example-apps.git URL and the path inside the repository, guestbook:

For the Destination, set https://kubernetes.default.svc and the default namespace, then click Create:
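The same application can also be described declaratively as an Application custom resource, which is what the UI creates through the API server. A Python sketch that builds it and prints JSON you could pipe to kubectl -n dev-1-devops-argocd-ns apply -f -; the fields follow the argoproj.io/v1alpha1 Application schema, while targetRevision set to HEAD is an assumption here.

```python
import json


def guestbook_application(argocd_namespace: str) -> dict:
    """Build an Application resource equivalent to the UI settings above."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        # Application resources live in the namespace where ArgoCD runs.
        "metadata": {"name": "guestbook", "namespace": argocd_namespace},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": "https://github.com/argoproj/argocd-example-apps.git",
                "path": "guestbook",
                "targetRevision": "HEAD",
            },
            "destination": {
                "server": "https://kubernetes.default.svc",
                "namespace": "default",
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(guestbook_application("dev-1-devops-argocd-ns"), indent=2))
```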

It looks like it was created, but why is its Sync Status Unknown?

Something went wrong:

$ argocd app get guestbook

Name: guestbook

Project: default

Server: https://kubernetes.default.svc

Namespace: default

…

CONDITION MESSAGE LAST TRANSITION

ComparisonError failed to load initial state of resource PersistentVolumeClaim: persistentvolumeclaims is forbidden: User "system:serviceaccount:dev-1-devops-argocd-ns:argocd-application-controller" cannot list resource "persistentvolumeclaims" in API group "" at the cluster scope 2020-11-19 15:35:51 +0200 EET

ComparisonError failed to load initial state of resource PersistentVolumeClaim: persistentvolumeclaims is forbidden: User "system:serviceaccount:dev-1-devops-argocd-ns:argocd-application-controller" cannot list resource "persistentvolumeclaims" in API group "" at the cluster scope 2020-11-19 15:35:51 +0200 EET

Try to sync from the CLI; that doesn't work either:

$ argocd app sync guestbook

Name: guestbook

Project: default

Server: https://kubernetes.default.svc

Namespace: default

…

Sync Status: Unknown

Health Status: Healthy

Operation: Sync

Sync Revision:

Phase: Error

Start: 2020-11-19 15:37:01 +0200 EET

Finished: 2020-11-19 15:37:01 +0200 EET

Duration: 0s

Message: ComparisonError: failed to load initial state of resource Pod: pods is forbidden: User "system:serviceaccount:dev-1-devops-argocd-ns:argocd-application-controller" cannot list resource "pods" in API group "" at the cluster scope;ComparisonError: failed to load initial state of resource Pod: pods is forbidden: User "system:serviceaccount:dev-1-devops-argocd-ns:argocd-application-controller" cannot list resource "pods" in API group "" at the cluster scope

FATA[0001] Operation has completed with phase: Error

ArgoCD: ComparisonError failed to load initial state of resource

Let’s take a closer look at the error itself: User "system:serviceaccount:dev-1-devops-argocd-ns:argocd-application-controller" cannot list resource "pods" in API group "" at the cluster scope.
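Such messages always have the same shape: the user, the verb, the resource, and the API group, where a ServiceAccount user is named system:serviceaccount:<namespace>:<name>. A small, hypothetical Python triage helper that splits them out; the regular expression covers only the part of the message quoted above.

```python
import re
from typing import Dict, Optional

# Shape of Kubernetes RBAC "forbidden" messages, like the one quoted above.
FORBIDDEN_RE = re.compile(
    r'User "(?P<user>[^"]+)" cannot (?P<verb>\S+) resource "(?P<resource>[^"]*)"'
    r' in API group "(?P<group>[^"]*)"'
)
SA_PREFIX = "system:serviceaccount:"


def explain_forbidden(message: str) -> Optional[Dict[str, str]]:
    """Pull the user, verb, resource and API group out of a forbidden error.

    For ServiceAccount users the namespace and name are split out as well,
    because RBAC bindings have to match both of them.
    """
    match = FORBIDDEN_RE.search(message)
    if match is None:
        return None
    info = match.groupdict()
    if info["user"].startswith(SA_PREFIX):
        namespace, _, name = info["user"][len(SA_PREFIX):].partition(":")
        info["sa_namespace"], info["sa_name"] = namespace, name
    return info
```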

Check the argocd-application-controller ServiceAccount (the Kubernetes: ServiceAccounts, JWT-tokens, authentication, and RBAC authorization post is really helpful here):

$ kubectl -n dev-1-devops-argocd-ns get serviceaccount argocd-application-controller

NAME SECRETS AGE

argocd-application-controller 1 36m

Yup, the ServiceAccount exists, so the system:serviceaccount:dev-1-devops-argocd-ns:argocd-application-controller user is there.

And there is an argocd-application-controller ClusterRoleBinding:

$ kubectl -n dev-1-devops-argocd-ns get clusterrolebinding argocd-application-controller

NAME AGE

argocd-application-controller 24h

But it can’t perform the list pods action:

$ kubectl auth can-i list pods -n dev-1-devops-argocd-ns --as system:serviceaccount:dev-1-devops-argocd-ns:argocd-application-controller

no

Even though its ClusterRole grants all permissions:

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: application-controller
    app.kubernetes.io/name: argocd-application-controller
    app.kubernetes.io/part-of: argocd
  name: argocd-application-controller
rules:
- apiGroups:
  - '*'
  resources:
  - '*'
  verbs:
  - '*'
- nonResourceURLs:
  - '*'
  verbs:
  - '*'

Check the ClusterRoleBinding again, but this time with the -o yaml output:

$ kubectl get clusterrolebinding argocd-application-controller -o yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
…
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: argocd-application-controller
subjects:
- kind: ServiceAccount
  name: argocd-application-controller
  namespace: argocd

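Aha, here it is: namespace: argocd. The binding grants the ClusterRole to the argocd-application-controller ServiceAccount in the argocd namespace, while ours lives in dev-1-devops-argocd-ns, so it gets no permissions at all.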
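The rule behind this: a binding subject of kind ServiceAccount matches only the ServiceAccount with that exact name in that exact namespace. A simplified Python model of that matching; it is a sketch for illustration that ignores User and Group subjects and roleRef resolution, so it is not a full RBAC evaluator.

```python
from typing import List

SA_PREFIX = "system:serviceaccount:"


def bound_service_accounts(binding: dict) -> List[str]:
    """Return the usernames that the binding's ServiceAccount subjects cover."""
    users = []
    for subject in binding.get("subjects", []):
        if subject.get("kind") == "ServiceAccount":
            users.append(f"{SA_PREFIX}{subject['namespace']}:{subject['name']}")
    return users


def binding_applies(binding: dict, username: str) -> bool:
    """Both the namespace and the name must match for the binding to apply."""
    return username in bound_service_accounts(binding)
```

This mirrors what kubectl auth can-i reports for the controller's ServiceAccount: no while the subject points at argocd, yes once it points at dev-1-devops-argocd-ns.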

Find the two ClusterRoleBindings in install.yaml, argocd-application-controller and argocd-server, and update the namespace of their subjects:

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/component: application-controller
    app.kubernetes.io/name: argocd-application-controller
    app.kubernetes.io/part-of: argocd
  name: argocd-application-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: argocd-application-controller
subjects:
- kind: ServiceAccount
  name: argocd-application-controller
  namespace: dev-1-devops-argocd-ns
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/component: server
    app.kubernetes.io/name: argocd-server
    app.kubernetes.io/part-of: argocd
  name: argocd-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: argocd-server
subjects:
- kind: ServiceAccount
  name: argocd-server
  namespace: dev-1-devops-argocd-ns
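With a custom namespace, this edit is needed for every ClusterRoleBinding whose ServiceAccount subject points at the argocd namespace. A sketch that rewrites them in one pass, assuming the manifest documents have already been parsed into a list of dicts (for example with a YAML library's multi-document loader, which is outside the standard library); the function name is hypothetical.

```python
import copy
from typing import List


def retarget_bindings(docs: List[dict], old_ns: str, new_ns: str) -> List[dict]:
    """Point ServiceAccount subjects of ClusterRoleBindings from old_ns to new_ns."""
    result = copy.deepcopy(docs)
    for doc in result:
        if doc.get("kind") != "ClusterRoleBinding":
            continue
        for subject in doc.get("subjects", []):
            if subject.get("kind") == "ServiceAccount" and subject.get("namespace") == old_ns:
                subject["namespace"] = new_ns
    return result
```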

Well, that’s why I mentioned the default argocd namespace and that it’s simpler to use it: with a custom namespace, you have to update those bindings yourself.

So, fix them, deploy again, and check:

$ kubectl auth can-i list pods -n dev-1-devops-argocd-ns --as system:serviceaccount:dev-1-devops-argocd-ns:argocd-application-controller

yes

Try to execute the sync one more time:

$ argocd app sync guestbook

TIMESTAMP GROUP KIND NAMESPACE NAME STATUS HEALTH HOOK MESSAGE

2020-11-19T15:47:08+02:00 Service default guestbook-ui Running Synced service/guestbook-ui unchanged

2020-11-19T15:47:08+02:00 apps Deployment default guestbook-ui Running Synced deployment.apps/guestbook-ui unchanged

Name: guestbook

Project: default

Server: https://kubernetes.default.svc

…

Sync Status: Synced to HEAD (6bed858)

Health Status: Healthy

Operation: Sync

Sync Revision: 6bed858de32a0e876ec49dad1a2e3c5840d3fb07

Phase: Succeeded

Start: 2020-11-19 15:47:06 +0200 EET

Finished: 2020-11-19 15:47:08 +0200 EET

Duration: 2s

Message: successfully synced (all tasks run)

GROUP KIND NAMESPACE NAME STATUS HEALTH HOOK MESSAGE

Service default guestbook-ui Synced Healthy service/guestbook-ui unchanged

apps Deployment default guestbook-ui Synced Healthy deployment.apps/guestbook-ui unchanged

And it’s working:

A pod’s logs:

The next step will be to deploy a Helm chart and figure out how to work with Helm Secrets.

Originally published at RTFM: Linux, DevOps and system administration.
