[Ruby on Rails is a great web application framework for startups that want to see their ideas evolve into products quickly. It’s for this reason that most products at Freshworks are built using Ruby on Rails. Moving from startup to scale-up means having to constantly evolve your applications so they can keep pace with your customers’ growth. In this new Freshworks Engineering series, Rails@Scale, we will talk about some of the techniques and patterns that we employ in our products to tune their performance and help them scale up.]

Freshservice is a cloud-based IT help desk and service management solution that enables organizations to simplify IT operations. Freshservice provides ITIL-ready components that help administrators manage Assets, Incidents, Problems, Change, and Releases. The Asset Management component helps organizations exercise control over their IT assets.

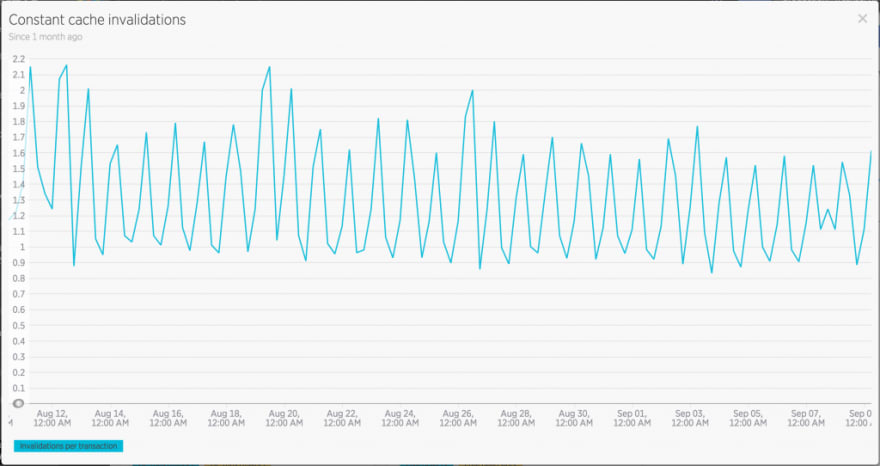

Freshservice is a SaaS product powered by Ruby on Rails. It is backed by a MySQL database for persistent storage and uses Memcached and Redis extensively for caching and config storage. Multi-tenancy is at the core of a SaaS product, and tenant isolation is an important factor when dealing with data. To ensure that isolation, the keys representing data in the cache stores are always suffixed with each tenant’s unique ID.

We benchmarked the idea of changing the way we build cache keys for getting and setting data on both Memcached and Redis. We noticed that for every cached object that belonged to some entity (or entities) like a tenant or a user, we were creating hash objects to build a unique key representing that entity in the cache store.

This is a fairly common practice of building a string in Ruby (the cache key in our case):

The above snippet creates a new hash object unnecessarily. Since the end goal is to have an interpolated string, we can skip the hash creation part entirely. With the alternate approach, we could just interpolate the required constant with the dynamic value. This value could be anything that responds to to_s.
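As a rough illustration of the two approaches, here is a minimal sketch. The constant names and the key format are hypothetical and chosen only for this example; they are not Freshservice's actual keys.

```ruby
# Hypothetical key template and prefix, used only for illustration.
ACCOUNT_SETTINGS_TEMPLATE = 'ACCOUNT_SETTINGS:%{account_id}'.freeze
ACCOUNT_SETTINGS_PREFIX   = 'ACCOUNT_SETTINGS'.freeze

account_id = 42

# Hash-based formatting: a new Hash is allocated on every call
# just to fill in a single placeholder.
key_via_hash = ACCOUNT_SETTINGS_TEMPLATE % { account_id: account_id }

# Plain interpolation: no intermediate Hash; to_s is called on the
# interpolated value implicitly.
key_via_interpolation = "#{ACCOUNT_SETTINGS_PREFIX}:#{account_id}"

puts key_via_hash
puts key_via_interpolation
```

Both expressions produce the same string, which is why switching from one to the other does not invalidate keys that are already in the cache.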

We introduced a #key method on the module that takes a symbol and a value as params and returns an interpolated string. Both approaches generate the same key, so existing cached objects weren’t affected by this change.
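A helper of this shape might look like the sketch below. The module name MemcacheKeys comes from this article, but the method body, the `:` separator, and the example symbol are assumptions made for illustration.

```ruby
module MemcacheKeys
  # Hypothetical sketch of the helper. name is a symbol identifying the
  # cached entity; value is anything that responds to to_s, such as a
  # tenant ID. The ':' separator is an assumption.
  def self.key(name, value)
    "#{name}:#{value}"
  end
end

puts MemcacheKeys.key(:ACCOUNT_SETTINGS, 42)
```

Because the symbol is interpolated directly, callers no longer need a Hash or a format template to build the key.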

We ran a benchmarking exercise to measure the performance impact of this change. The benchmarking results were as follows:

Benchmarks we ran

Note: The above benchmarks were run on our current production versions of Ruby/Rails (2.3.7 / 4.2.11.1)
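A comparison along these lines can be reproduced with Ruby's standard library. The sketch below is not the original benchmark: the template, iteration count, and helper name are assumptions, and absolute numbers will vary by Ruby version and machine.

```ruby
require 'benchmark'

# Hypothetical template and iteration count, for illustration only.
TEMPLATE   = 'ACCOUNT_SETTINGS:%{account_id}'.freeze
ITERATIONS = 10_000

# Returns the number of objects allocated while the block runs,
# based on the counter exposed by GC.stat.
def allocations
  before = GC.stat(:total_allocated_objects)
  yield
  GC.stat(:total_allocated_objects) - before
end

hash_allocs   = allocations { ITERATIONS.times { |id| TEMPLATE % { account_id: id } } }
interp_allocs = allocations { ITERATIONS.times { |id| "ACCOUNT_SETTINGS:#{id}" } }

puts "hash-based:    #{hash_allocs} objects allocated"
puts "interpolation: #{interp_allocs} objects allocated"

# Wall-clock comparison of the same two approaches.
Benchmark.bm(14) do |x|
  x.report('hash-based')    { ITERATIONS.times { |id| TEMPLATE % { account_id: id } } }
  x.report('interpolation') { ITERATIONS.times { |id| "ACCOUNT_SETTINGS:#{id}" } }
end
```

Counting allocations alongside timing is useful here because the cost of the extra Hash shows up mostly as additional GC pressure rather than as raw per-call latency.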

Currently, we support up to two dynamic values via two different methods (MemcacheKeys#key and MemcacheKeys#multi_key). Making a single method accept a variable number of values would create an array on each invocation via Ruby's splat operator, which would defeat the whole purpose.
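To illustrate the trade-off described above, the sketch below contrasts a fixed-arity method with a variadic one. The module name KeySketch, the method bodies, and the `:` separator are assumptions; only the idea of a separate two-value method comes from the article.

```ruby
module KeySketch
  module_function

  # Fixed arity: the two values are interpolated directly, so no
  # Array is built to hold the arguments.
  def multi_key(name, first, second)
    "#{name}:#{first}:#{second}"
  end

  # Variadic alternative, shown only for contrast: *values collects
  # the arguments into a new Array on every call.
  def splat_key(name, *values)
    "#{name}:#{values.join(':')}"
  end
end

puts KeySketch.multi_key(:TICKET, 42, 7)
puts KeySketch.splat_key(:TICKET, 42, 7)
```

Both calls return the same string; the difference is only in what gets allocated along the way, which is the cost the fixed-arity methods are meant to avoid.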

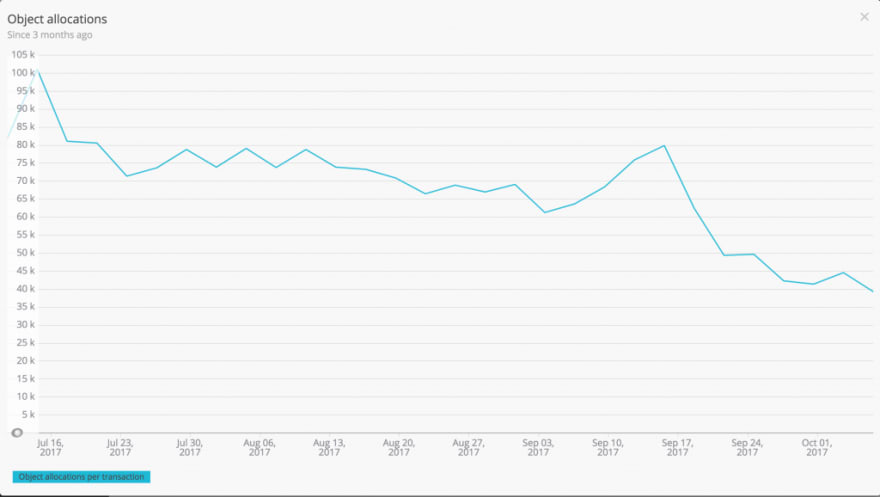

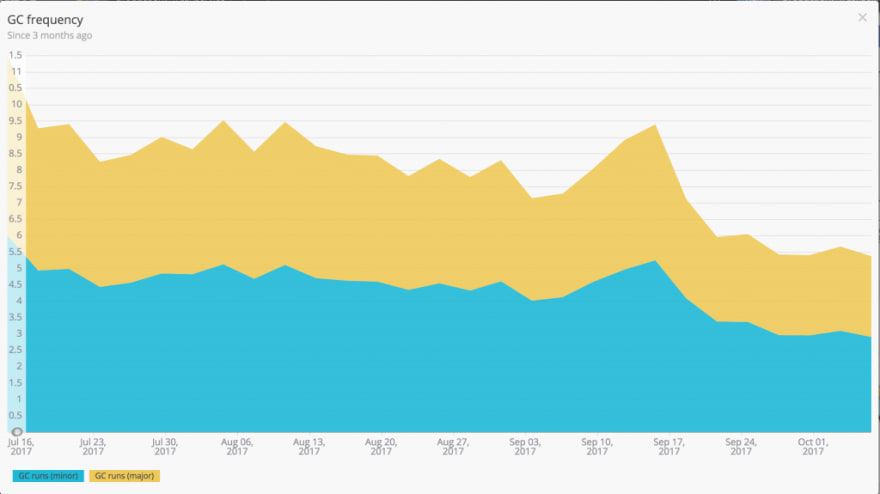

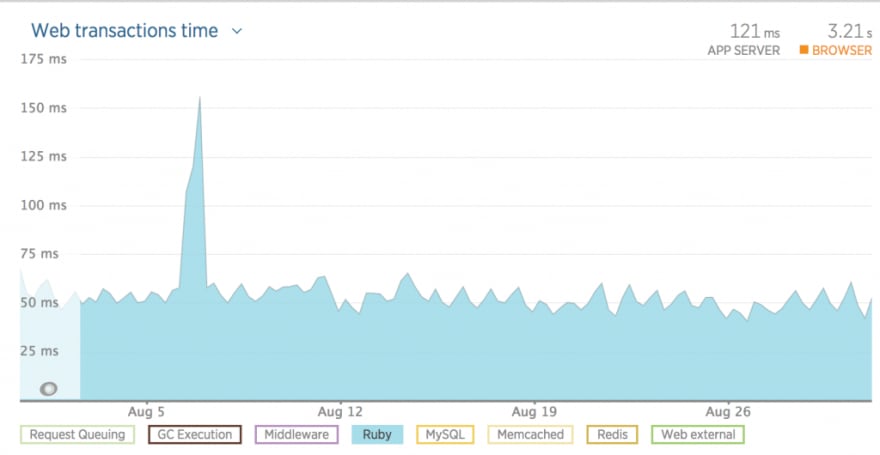

After shipping the key generation changes over the span of a month and a half, we have seen significant reductions in object allocations and GC frequency.

The above approach also reduces the class constant footprint. We had previously included the module in numerous classes and modules with an include MemcacheKeys just to access a constant for building the required key. Even though constants are included by reference and not duplicated, each including class still has to maintain a reference to them. You can verify this with the Module#constants method. After the changes, key constants no longer need to be referenced in multiple places.
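The effect on the constant list can be seen with a small example. The classes Ticket and Asset and the constant below are hypothetical stand-ins.

```ruby
module MemcacheKeys
  # Hypothetical key constant, for illustration only.
  ACCOUNT_SETTINGS = 'ACCOUNT_SETTINGS'.freeze
end

# A class that includes the module only to reach its constants.
class Ticket
  include MemcacheKeys
end

# A class that does not include the module.
class Asset
end

# Module#constants lists constants reachable through included modules
# by default; passing false restricts it to constants defined directly.
p Ticket.constants
p Ticket.constants(false)
p Asset.constants
```

Ticket reports the included constant even though it defines none of its own, while Asset does not.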
