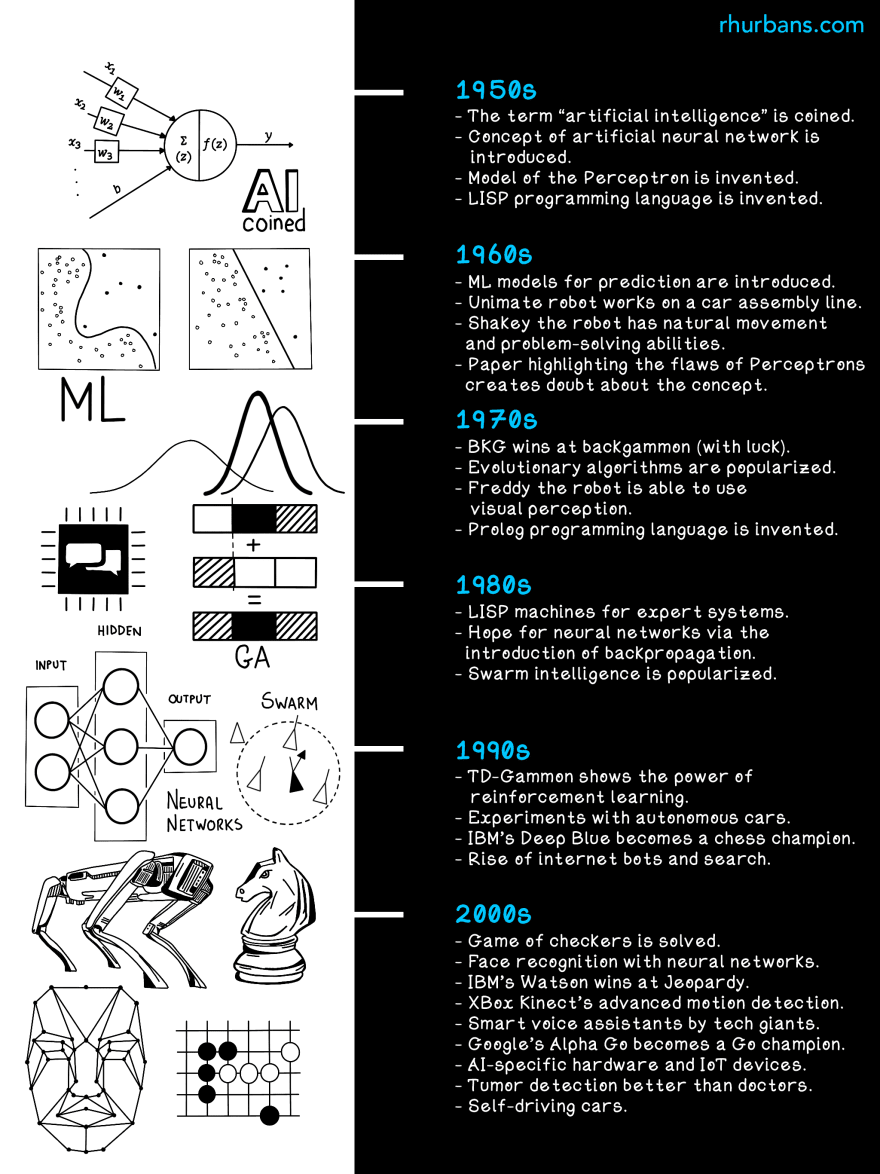

History is filled with myths of "mechanical men" and "autonomous thinking" machines. Looking back, we’re standing on the shoulders of giants in everything we do. Here's a brief look back at some AI history.

1950s: The term "Artificial Intelligence" is coined. The concept of artificial neural networks is introduced. The model of the Perceptron is invented. The LISP programming language is invented. Alan Turing proposes the Turing Test.

1960s: ML models for predictions are popularised. The Unimate robot works on a car assembly line. A paper highlighting flaws in the Perceptron creates doubts about the concept.

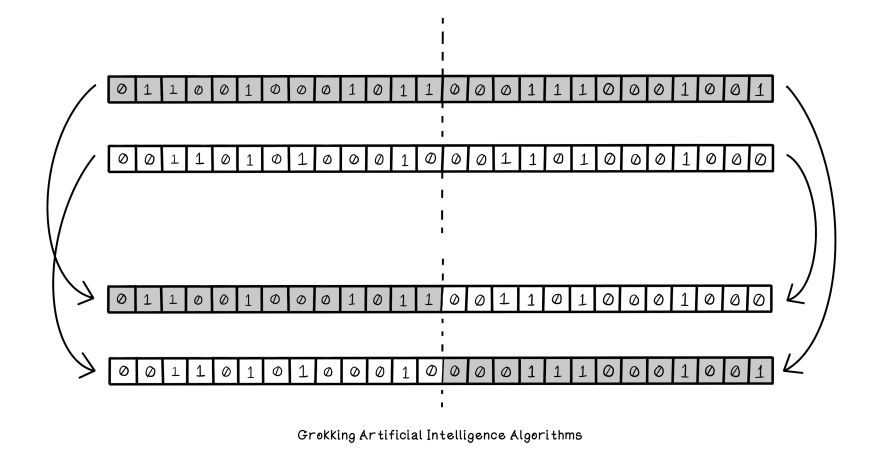

1970s: BKG wins at backgammon - with a bit of luck. Evolutionary algorithms are popularised. Freddy the robot is able to use visual perception. The Prolog programming language is invented.

1980s: LISP machines are used for expert systems. New hope arrives for neural networks through the introduction of back-propagation. Swarm intelligence is popularised.

1990s: TD-Gammon shows the potential power of reinforcement learning. Experiments with autonomous cars begin. IBM's Deep Blue beats world chess champion Garry Kasparov. The rise of internet search and internet bots begins.

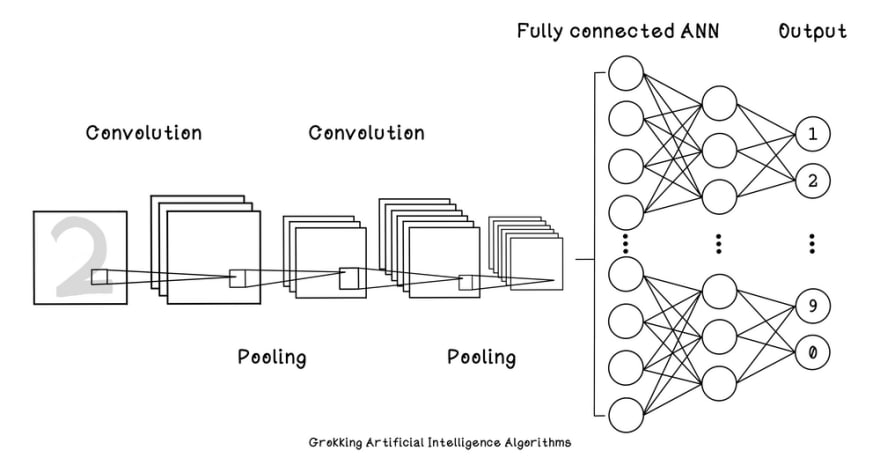

2000s: The game of checkers is solved. Facial recognition using neural networks shows promise. The adoption of the internet booms, opening the mass collection of data from the world.

2010s: IBM's Watson wins at Jeopardy! in 2011. Voice assistants become widely adopted, creating a facade of "general intelligence". Work on autonomous cars is booming. Purpose-built AI hardware becomes widely adopted.

2010s: DeepMind's AlphaGo is the first program to defeat a professional Go player and a world champion, and is arguably the strongest Go player in history. AlphaGo grows steadily stronger at learning and decision-making through reinforcement learning.

2020s: OpenAI GPT-3 (Generative Pre-trained Transformer 3) is a language model that uses deep learning to produce human-like text. It can generate convincing output that fits the context it is given, such as poems, memes, or even websites.

2020s: If you could unravel a protein, you would see that it’s like a string of beads made of a sequence of different chemicals known as amino acids. DeepMind AlphaFold predicts 3D models of protein structures. It has the potential to improve our understanding of the pathology of many diseases.

2020s: OpenAI CLIP (Contrastive Language–Image Pre-training) learns visual concepts from natural language supervision. CLIP can be applied to any visual classification benchmark by simply providing the names of the visual categories to be recognised.

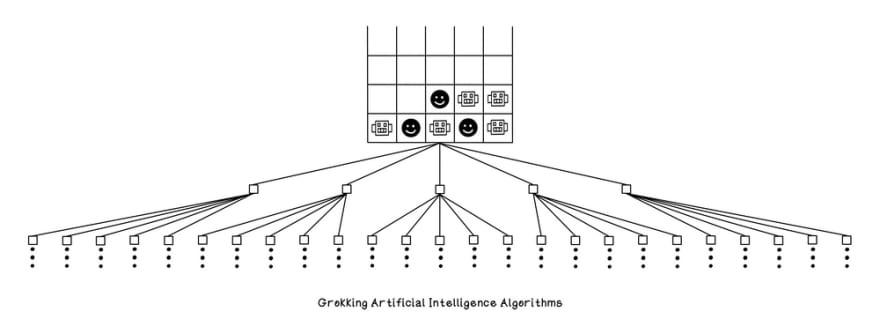

If you enjoyed this thread, check out my book: Grokking Artificial Intelligence Algorithms with Manning, consider following me for more, or join my mailing list for infrequent knowledge drops in your inbox: rhurbans.com/subscribe.
