When you have a laptop with so-called "switchable graphics" (like I do in my Lenovo IdeaPad G50-80), you effectively have two GPUs. In my case, these are the Intel GPU integrated into the i7-5500U and an AMD Radeon R5 M330. While programming in DirectX 11, you can enumerate these two adapters and choose either of them when creating an ID3D11Device object. For quite some time I was wondering how the various "switchable graphics" settings affect my app. Today I finally figured it out. Long story short: they just change the order of these adapters as visible to my program, so that the appropriate one is visible as adapter 0. Here is the full story:

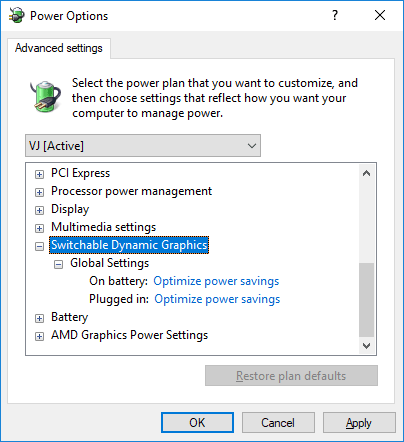

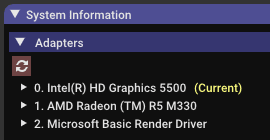

It looks like the base setting is the one found in Windows Settings > Power options > edit your power plan > Switchable Dynamic Graphics. (Not to be confused with "AMD Graphics Power Settings"!) When you set it to "Optimize power savings" or "Optimize performance", the application sees the Intel GPU as the first adapter.

When you choose "Maximize performance", the application sees the AMD GPU as the first adapter.
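If you want to check this ordering on your own machine, a minimal sketch like the one below lists the adapters in the order DXGI reports them. It assumes you build it on Windows with the Windows SDK; `VendorName` is a hypothetical helper of mine (not part of DXGI) that only translates the well-known PCI vendor IDs into readable names. The DXGI part is wrapped in `#ifdef _WIN32` so that the helper alone still compiles elsewhere.

```cpp
#include <cstdio>
#include <string>

// Hypothetical helper: maps a PCI vendor ID (as found in
// DXGI_ADAPTER_DESC1::VendorId) to a readable name.
inline std::string VendorName(unsigned vendorId)
{
    switch (vendorId)
    {
    case 0x8086: return "Intel";
    case 0x1002: return "AMD";
    case 0x10DE: return "NVIDIA";
    default:     return "Other";
    }
}

#ifdef _WIN32
#include <dxgi.h>
#include <wrl/client.h>
#pragma comment(lib, "dxgi.lib") // MSVC-specific; link dxgi.lib manually otherwise

int main()
{
    using Microsoft::WRL::ComPtr;

    ComPtr<IDXGIFactory1> factory;
    if (FAILED(CreateDXGIFactory1(IID_PPV_ARGS(&factory))))
        return 1;

    // EnumAdapters1 fails with DXGI_ERROR_NOT_FOUND once the index
    // runs past the last adapter, which ends the loop.
    ComPtr<IDXGIAdapter1> adapter;
    for (UINT i = 0; SUCCEEDED(factory->EnumAdapters1(i, &adapter)); ++i)
    {
        DXGI_ADAPTER_DESC1 desc = {};
        if (SUCCEEDED(adapter->GetDesc1(&desc)))
        {
            // desc.Description is a wide string, hence %ls.
            std::printf("Adapter %u: %ls (%s)\n",
                        i, desc.Description, VendorName(desc.VendorId).c_str());
        }
    }
    return 0;
}
#endif
```

Running it once under each power plan setting should be enough to see which GPU ends up as adapter 0.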

I also found that Radeon Settings (the app that comes with the AMD graphics driver) overrides this system setting. If you go to System > Switchable Graphics and configure your specific executable, then again: choosing "Power Saving" makes your app see the Intel GPU as the first adapter, while choosing "High Performance" puts the AMD GPU first.

It's as simple as that. Basically, if you always use the first adapter you find, you follow the system's recommended settings. You are still free to use the other adapter when creating your D3D11 device. I checked this: it works, and the device really runs on the adapter you chose.
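Here is a rough sketch of what picking an adapter explicitly could look like. The names `PickAdapterIndex` and `kVendorAmd` are my own hypothetical helpers, and preferring AMD is just an example policy; the fallback to index 0 means "follow whatever the system recommends". One detail worth knowing: when you pass a non-null adapter to `D3D11CreateDevice`, the driver type has to be `D3D_DRIVER_TYPE_UNKNOWN`.

```cpp
#include <cstddef>
#include <cstdio>
#include <vector>

// PCI vendor ID of AMD (hypothetical constant name).
const unsigned kVendorAmd = 0x1002;

// Hypothetical policy: return the index of the first adapter from the
// preferred vendor, or 0 (the system-recommended adapter) if none matches.
inline std::size_t PickAdapterIndex(const std::vector<unsigned>& vendorIds,
                                    unsigned preferredVendor)
{
    for (std::size_t i = 0; i < vendorIds.size(); ++i)
        if (vendorIds[i] == preferredVendor)
            return i;
    return 0;
}

#ifdef _WIN32
#include <d3d11.h>
#include <dxgi.h>
#include <wrl/client.h>
#pragma comment(lib, "d3d11.lib") // MSVC-specific; link manually otherwise
#pragma comment(lib, "dxgi.lib")

int main()
{
    using Microsoft::WRL::ComPtr;

    ComPtr<IDXGIFactory1> factory;
    if (FAILED(CreateDXGIFactory1(IID_PPV_ARGS(&factory))))
        return 1;

    // Collect adapters in the order the system exposes them.
    std::vector<ComPtr<IDXGIAdapter1>> adapters;
    std::vector<unsigned> vendorIds;
    ComPtr<IDXGIAdapter1> adapter;
    for (UINT i = 0; SUCCEEDED(factory->EnumAdapters1(i, &adapter)); ++i)
    {
        DXGI_ADAPTER_DESC1 desc = {};
        if (FAILED(adapter->GetDesc1(&desc)))
            continue;
        adapters.push_back(adapter);
        vendorIds.push_back(desc.VendorId);
    }
    if (adapters.empty())
        return 1;

    const std::size_t chosen = PickAdapterIndex(vendorIds, kVendorAmd);

    ComPtr<ID3D11Device> device;
    ComPtr<ID3D11DeviceContext> context;
    D3D_FEATURE_LEVEL featureLevel = D3D_FEATURE_LEVEL_11_0;
    // An explicit adapter requires D3D_DRIVER_TYPE_UNKNOWN.
    const HRESULT hr = D3D11CreateDevice(
        adapters[chosen].Get(), D3D_DRIVER_TYPE_UNKNOWN, nullptr, 0,
        nullptr, 0, D3D11_SDK_VERSION,
        &device, &featureLevel, &context);

    std::printf("Device creation on adapter %u %s\n",
                static_cast<unsigned>(chosen),
                SUCCEEDED(hr) ? "succeeded" : "failed");
    return SUCCEEDED(hr) ? 0 : 1;
}
#endif
```

Keeping the selection policy in a small function separate from the DXGI calls makes it easy to swap in a different rule, for example one driven by a command-line switch.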

This is especially important if you run into a strange bug where your app hangs on one of these GPUs.
