Skaffold (part of Google Container Tools) has been available since 2018, but it was in 2020 that, at least for me, the tool reached production-grade maturity.

I was more than fascinated by how this tool can not only ease the developer's work on a local machine, but also serve as a complete pipeline from development to production when it is used with a couple of other tools.

Easy and repeatable Kubernetes development: no matter if you are a developer, lead, platform engineer, SRE or head of Engineering, we all agree on that 🙋🏽.

We want an easy, repeatable, reproducible development workflow that gives teams more autonomy, so they can deliver more product value to the end user in a secure way.

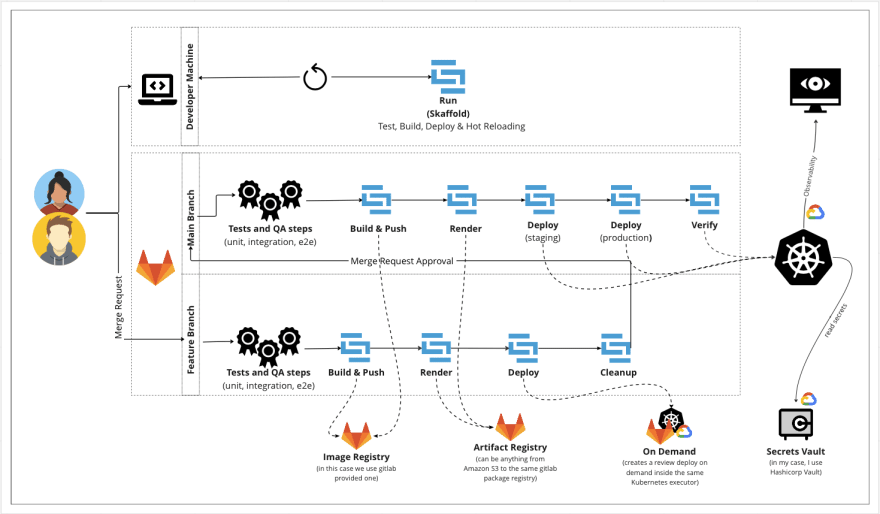

I want to show you how I use Skaffold as a building block for my microservice CI/CD pipeline, from local to production.

The Toolset

- skaffold CLI (you can use the provided Docker image or install it on your machine)
- kubectl and Kustomize (Kustomize is already part of the kubectl CLI)
- K8s cluster (local and remote): if you use docker-desktop, it already bundles a single-node cluster that you can enable in its settings and use for local development.

This article isn't intended to show how to install a k8s cluster for local development; there are various alternatives out there, like Minikube. As I said, in my case I use docker-desktop, which comes with k8s built in.

The Workflow

The main idea is to use skaffold as a building block from the local to the production environment, simplifying the tooling used by the developer and easing the integration with the existing gitlab repository service.

The more complex part is the integration and functional tests: they need the complete application and its dependencies running, so a little more work is required. However, it isn't as complex as it sounds. Since I use a Kubernetes gitlab runner to run the pipelines, we can use the same runner to deploy the application in a dedicated namespace, run our tests, and then remove the application from the runner.

Be aware that you need a cleanup step after each pipeline or stage run, to avoid leftover processes consuming capacity and space in your Kubernetes cluster and incurring unexpected operational costs.

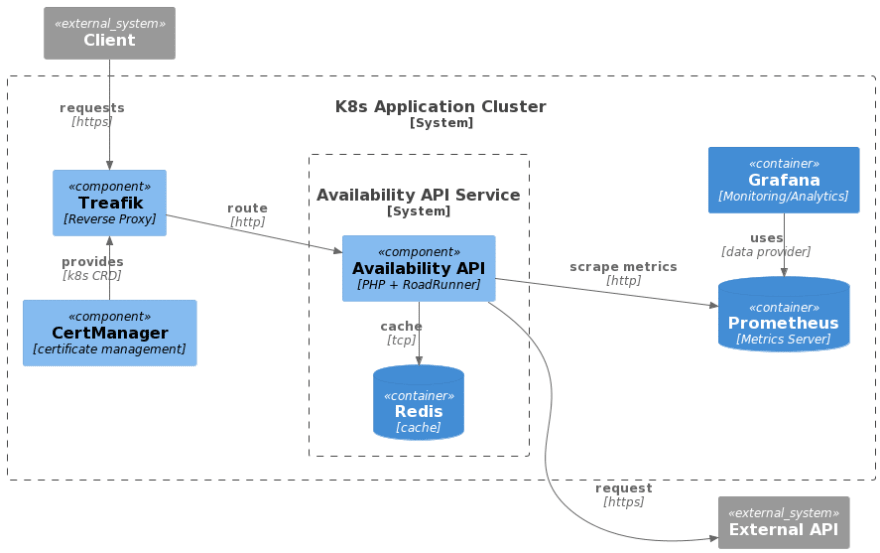
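To make the deploy, test and cleanup idea a bit more concrete, here is a minimal sketch of what such a gitlab job could look like. It is not the pipeline from this series: the job name, the `TEST_NAMESPACE` variable and the `make integration` target are hypothetical, and the sketch assumes the job is allowed to build images and to create namespaces in the runner's cluster.

```yaml
# Hypothetical job: names and the make target are illustrative assumptions.
integration-tests:
  stage: test
  image: gcr.io/k8s-skaffold/skaffold:latest   # public skaffold image
  variables:
    # One throwaway namespace per pipeline, so runs do not collide.
    TEST_NAMESPACE: "ci-$CI_PIPELINE_ID"
  script:
    - kubectl create namespace "$TEST_NAMESPACE"
    # Build and deploy the application once (no file watching).
    - skaffold run -p development --namespace "$TEST_NAMESPACE"
    # Assumed Makefile target that runs the integration tests.
    - make integration
  after_script:
    # after_script also runs when the job fails, so nothing is left behind.
    - skaffold delete -p development --namespace "$TEST_NAMESPACE"
    - kubectl delete namespace "$TEST_NAMESPACE" --ignore-not-found
```

Putting the cleanup in `after_script` rather than at the end of `script` is the important design choice here: a failing test would otherwise skip the cleanup and leave the namespace running.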

The Application

It's the simplest microservice you can imagine, but it's intended for demonstration purposes. It was built with Symfony, with RoadRunner as the application server, and it exposes metrics to a Prometheus metrics server; the monitoring stack then uses these metrics in a Grafana dashboard for monitoring and observability purposes.

Now that the context is clear, let's begin with the next steps. First of all, I need to set up the repository skeleton and directory structure so that it is as functional as possible for my intended workflow and development process.

Take into account that this is how I set up my repositories; you should adapt it to your own expectations and operational workflow.

Additionally, my main goal when I began working with this GitOps approach was to reduce the cognitive load and operational complexity for new and current colleagues, which also reduces onboarding time and the number of tools we need to do our work.

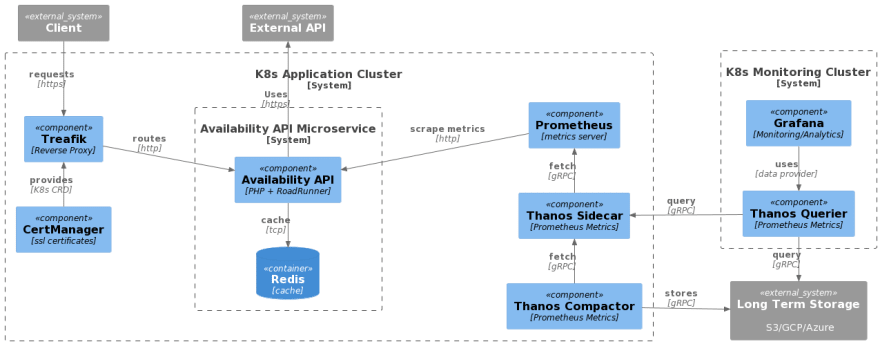

If you need a more complex scenario for scaling metrics, I normally use Thanos for that job, since it lets me scale Prometheus easily and get long-term storage in commonly known cloud object storage services, like Amazon S3 or Google Cloud Storage.

Below you can find a diagram of the same application, but implementing Thanos.

Let's continue this guide with the simplified version of the application (the one with only the Prometheus and Grafana components for monitoring).

Folder Structure and Orchestration process

Let's recap some concepts about the tooling we're implementing here, to fulfil the promise of a full workflow with skaffold from local to production.

- I deploy on a K8s cluster with kubectl and kustomize (kustomize is part of the k8s bundle).
- I use skaffold as the workflow building block through its CLI command steps (build, test, render, deploy, verify).
- I build images locally with the docker CLI (it is a prerequisite for my workflow), and in gitlab I have a couple of options alongside docker (Kaniko or Docker in Docker variations), but I'll cover that in the next steps.
- I use a Makefile as a command "collector" entrypoint, not only for local development but also for the gitlab pipeline, to group commands into single-word ones (make run, build, unit, etc).
- I use terraform declarative configuration files to set the desired state of my working cluster (in this case the local one). This desired state includes some prerequisites needed by my architecture definition, like cert-manager, traefik, prometheus and grafana, just like my machines on staging and production.

- deploy/
  - manifests/ # the place where the k8s YAML resides
    - *.k8s.yaml
    - kustomization.yaml
  - overlays/ # for every environment that you want, you should have an overlay
    - development/
      - *.k8s.patch.yaml
      - kustomization.yaml
    - production/
      - *.k8s.patch.yaml
      - kustomization.yaml
- skaffold.yaml
- infrastructure/ # terraform scripts to install cluster prerequisites: vault, cert-manager, traefik
- src/ # all the source code of your application
- Dockerfile
- Makefile
- .gitlab-ci.yaml

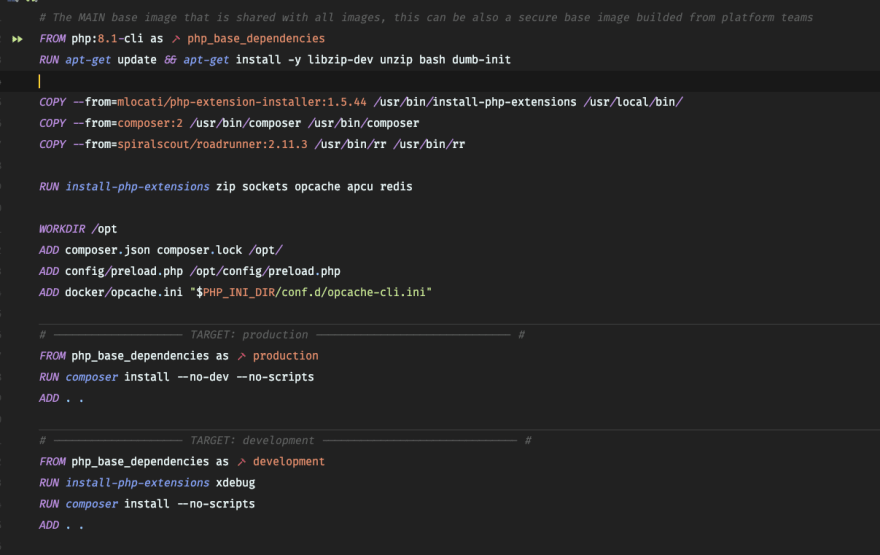

Another important component of this setup is the Dockerfile. To be able to use the same Dockerfile to build images for the development and production environments (each with its own dependencies), I built a multi-stage Dockerfile that gives me one target for development and one for production, which we can point to in the skaffold build phase.

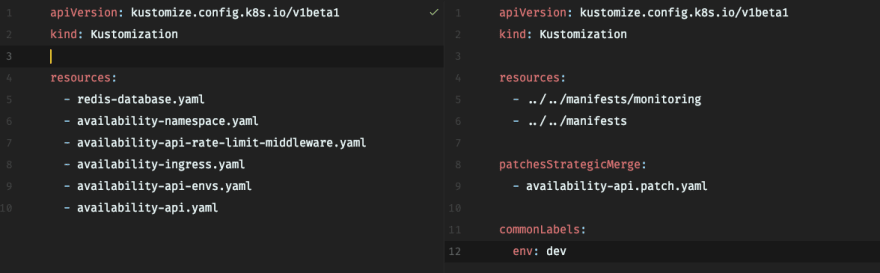

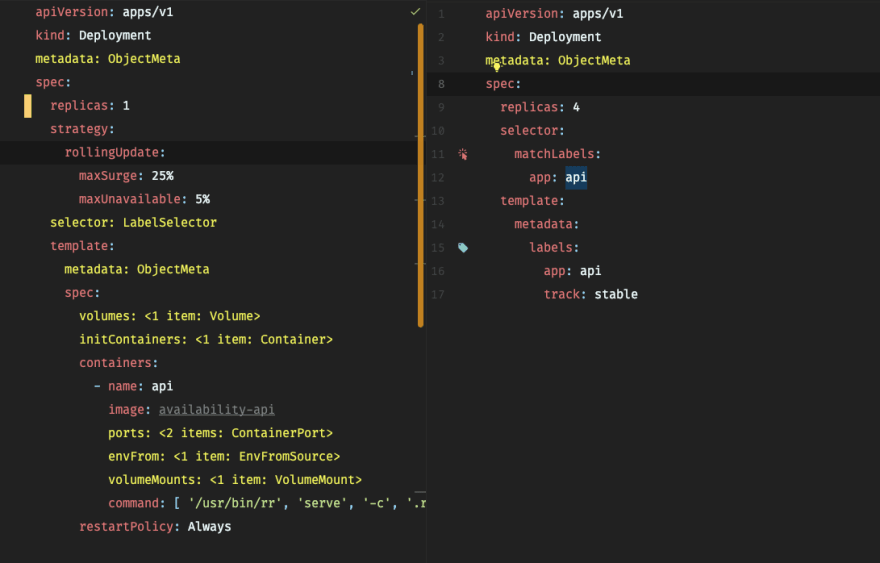
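On the skaffold side, pointing the build phase at one of those Dockerfile targets could look roughly like the sketch below. It assumes the Skaffold v2 schema (`skaffold/v4beta6` here), an image called `api`, and Dockerfile stages literally named `development` and `production`; all three are assumptions, so adjust them to your own names.

```yaml
# skaffold.yaml (build section only; image and stage names are assumptions)
apiVersion: skaffold/v4beta6
kind: Config
profiles:
  - name: development
    build:
      artifacts:
        - image: api
          context: .
          docker:
            dockerfile: Dockerfile
            target: development   # multi-stage target with dev dependencies
  - name: production
    build:
      artifacts:
        - image: api
          context: .
          docker:
            dockerfile: Dockerfile
            target: production    # slimmer target for production
```

With this layout, `skaffold build -p production` builds the production stage, while `-p development` builds the development one from the very same Dockerfile.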

Kustomize files (the kustomization.yaml ones) allow me to declare a patch or merge for part of the main k8s manifest, so I can apply the changes I need in a given environment without duplicating the entire YAML. For example, if I have a k8s manifest declaring an API with 1 replica, I can declare a patch that sets that number to 4 replicas when the environment is production.

The following image shows a main k8s manifest and its corresponding patch for production. You can patch anything you want, adding data, metadata and other labels to every manifest in the environment overlays.

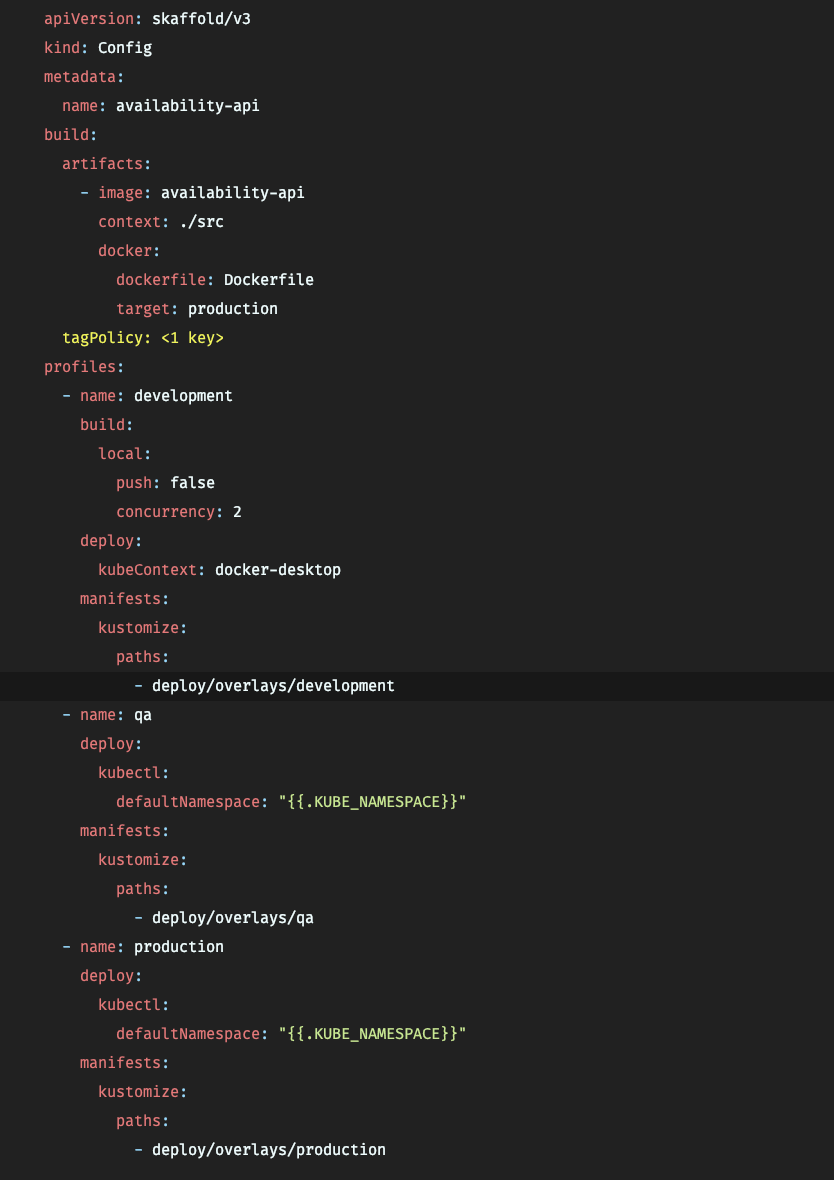
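In text form, the replica example could be sketched like this. The four documents stand for four separate files; the file names follow the folder structure above, while the Deployment name `api` and its image are assumptions made for illustration.

```yaml
# deploy/manifests/api.k8s.yaml (base manifest, shared by every environment)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: api
spec:
  replicas: 1
  selector:
    matchLabels:
      app: api
  template:
    metadata:
      labels:
        app: api
    spec:
      containers:
        - name: api
          image: api   # hypothetical image name
---
# deploy/manifests/kustomization.yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - api.k8s.yaml
---
# deploy/overlays/production/api.k8s.patch.yaml
# Only the fields that differ from the base need to be declared.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: api
spec:
  replicas: 4
---
# deploy/overlays/production/kustomization.yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../../manifests
patches:
  - path: api.k8s.patch.yaml
```

Running `kubectl kustomize deploy/overlays/production` renders the merged result, which is a quick way to check that the patch matches the base manifest (same kind and name) before deploying anything.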

Then we have our skaffold file, which is in charge of orchestrating the workflow itself.

Since skaffold allows us to use kustomize as the deployment strategy, I organise my profiles accordingly; with this, the development team has a lot of room to manoeuvre to modify and deploy changes with minimal effort.

Now I can run everything in one shot to see how this works. If everything is OK, I'll be able to access all the tools (via browser) and send requests to the API.

To run skaffold you need the following command: skaffold dev -p development. But since we use a Makefile as the command entrypoint, you can see above that make run does the same job, running skaffold in development mode.
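A minimal sketch of that profile organisation, assuming the Skaffold v2 schema (`skaffold/v4beta6` here) and a skaffold.yaml that sits at the repository root, could look like this. The build section is left out to keep the focus on the kustomize part; in older v1 schemas the kustomize paths live under `deploy` instead of `manifests`.

```yaml
# skaffold.yaml (render/deploy part only; the metadata name is an assumption)
apiVersion: skaffold/v4beta6
kind: Config
metadata:
  name: api
deploy:
  kubectl: {}   # apply the rendered manifests with kubectl
profiles:
  - name: development
    manifests:
      kustomize:
        paths:
          - deploy/overlays/development
  - name: production
    manifests:
      kustomize:
        paths:
          - deploy/overlays/production
```

Each profile only selects a different overlay directory, so adding a new environment means adding one overlay folder and one profile entry.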

I use my domain and a self-signed certificate to access all applications via an FQDN over HTTPS (using cert-manager and traefik for that). Now I can access all of them via those URLs (on the local machine, these URLs point to the loopback address 127.0.0.1 in the /etc/hosts file).

We should have at least these applications:
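One possible way to wire this up is a standard Kubernetes Ingress handled by traefik, with cert-manager issuing the certificate. This is only a sketch: the host `api.example.test`, the ClusterIssuer name `selfsigned`, the service name and the port are all hypothetical, and a traefik IngressRoute resource would be an equally valid choice.

```yaml
# Hypothetical Ingress; every name below is an illustrative assumption.
# Matching /etc/hosts entry on the local machine:
#   127.0.0.1 api.example.test
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: api
  annotations:
    # Ask cert-manager to issue the certificate from this ClusterIssuer.
    cert-manager.io/cluster-issuer: selfsigned
spec:
  ingressClassName: traefik
  tls:
    - hosts:
        - api.example.test
      secretName: api-tls   # cert-manager stores the certificate here
  rules:
    - host: api.example.test
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: api
                port:
                  number: 8080
```

Because the certificate is self-signed, the browser will show a warning until you trust the issuing certificate locally.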

- Grafana

- Traefik Dashboard

- API / Application

Let's see those applications running in this animated GIF:

😅 With this, I already have a full development cycle for my local environment. The next milestone is to make my gitlab pipelines compliant with this workflow and pave the way to the low and prod environments.

Let's stop here for now. I'll prepare the material for the next blog entry.

Next Chapter

In the next chapter of this tutorial, I'll try to implement this local workflow in a gitlab pipeline, allowing me to use the test, build, render, deploy and verify skaffold stages across my pipelines and deploy the application to a k8s cluster in GCP in a full GitOps manner.

Thanks for reading, and see you next week for more! 😃

Big kudos to the #skaffold team for the great job. If you want to know more about it, you can reach them on Slack or in their repo.

