What are DynamoDB Streams? How do they work?

A DynamoDB Stream can be described as a stream of observed changes in data, a technique known as Change Data Capture (CDC). Once the stream is enabled, every write operation on the table (put, update or delete) produces a corresponding event describing which item was changed and what was changed, and that event is saved to the stream in near real time.

Characteristics of DynamoDB Stream

- retention: events are stored for up to 24 hours

- ordered: for each item, the records in the stream appear in the same sequence as the actual modifications to that item

- near real time: records typically appear in the stream less than a second after the write operation

- deduplicated: each modification corresponds to exactly one record in the stream

- no-op writes ignored: a `PutItem` or `UpdateItem` that does not change the item does not produce a stream record

Anatomy of DynamoDB Stream

A stream consists of shards. Each shard is a group of records, where each record corresponds to a single data modification in the table the stream belongs to.

Shards are created and deleted automatically by AWS. A shard can also split into multiple new shards, again without any action on your part.

Moreover, when enabling a stream you choose what data is written to each stream record (the stream view type). The options are:

- `OLD_IMAGE`: stream records contain the item as it was before it was modified

- `NEW_IMAGE`: stream records contain the item as it is after it was modified

- `NEW_AND_OLD_IMAGES`: stream records contain both the pre-change and post-change snapshots

- `KEYS_ONLY`: stream records contain only the key attributes of the modified item
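With `NEW_AND_OLD_IMAGES`, a consumer can work out which attributes a change actually touched by comparing the two snapshots. Below is a minimal Python sketch under that assumption; `changed_attributes` is a hypothetical helper name, and it compares values in their raw DynamoDB JSON form, so for example a string set whose elements arrive in a different order would count as changed.

```python
from typing import Any, Dict, Set


def changed_attributes(record: Dict[str, Any]) -> Set[str]:
    """Return the names of attributes that differ between OldImage and NewImage.

    Hypothetical helper, assuming the NEW_AND_OLD_IMAGES view type.
    INSERT records have no OldImage and REMOVE records have no NewImage,
    so a missing image is treated as an empty item.
    """
    ddb = record.get("dynamodb", {})
    old = ddb.get("OldImage", {})
    new = ddb.get("NewImage", {})
    names = set(old) | set(new)
    # Values are compared as DynamoDB JSON, e.g. {"N": "30"} vs {"N": "31"}.
    return {name for name in names if old.get(name) != new.get(name)}


if __name__ == "__main__":
    record = {
        "eventName": "MODIFY",
        "dynamodb": {
            "OldImage": {"pk": {"S": "pk"}, "Age": {"N": "30"}},
            "NewImage": {"pk": {"S": "pk"}, "Age": {"N": "31"}, "City": {"S": "Krakow"}},
        },
    }
    print(sorted(changed_attributes(record)))  # ['Age', 'City']
```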

DynamoDB Lambda Trigger

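DynamoDB Streams work particularly well with AWS Lambda because both are event-driven. Lambda polls the stream on your behalf, invokes your function only when there are new records to process, and scales the number of concurrent invocations with the number of shards in the stream.

In the Serverless Framework, you can subscribe a Lambda function to a DynamoDB stream with the following syntax. The three `stream` entries show alternative ways of referencing the stream ARN: a literal ARN, `Fn::GetAtt` on a table defined in the same service, and `Fn::ImportValue` for an ARN exported from another stack.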

```yaml
functions:
  compute:
    handler: handler.compute
    events:
      - stream: arn:aws:dynamodb:region:XXXXXX:table/foo/stream/1970-01-01T00:00:00.000
      - stream:
          type: dynamodb
          arn:
            Fn::GetAtt: [MyDynamoDbTable, StreamArn]
      - stream:
          type: dynamodb
          arn:
            Fn::ImportValue: MyExportedDynamoDbStreamArnId
```
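The `handler: handler.compute` line points to a function named `compute` in a module named `handler`. The article does not say which runtime is used; assuming Python, a minimal handler could look like the sketch below. Lambda passes a batch of stream records in `event["Records"]`; the counting is purely illustrative, and the counts are returned only to make the sketch easy to test.

```python
# handler.py: assumes the Python runtime; "compute" matches "handler: handler.compute".
from collections import Counter
from typing import Any, Dict


def compute(event: Dict[str, Any], context: Any) -> Dict[str, int]:
    """Process one batch of DynamoDB stream records delivered by Lambda."""
    counts: Counter = Counter()
    for record in event.get("Records", []):
        event_name = record["eventName"]   # "INSERT", "MODIFY" or "REMOVE"
        keys = record["dynamodb"]["Keys"]  # primary key, still in DynamoDB JSON
        counts[event_name] += 1
        print(f"{event_name} {keys}")      # printed output ends up in CloudWatch Logs
    return dict(counts)
```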

A sample event delivered to your Lambda function looks like this (Lambda passes a batch of stream records in the `Records` array):

```json
{
  "Records": [
    {
      "eventID": "fe981bbed304aaa4e666c0ecdc2f6666",
      "eventName": "MODIFY",
      "eventVersion": "1.1",
      "eventSource": "aws:dynamodb",
      "awsRegion": "us-east-1",
      "dynamodb": {
        "ApproximateCreationDateTime": 1576516897,
        "Keys": {
          "sk": { "S": "sk" },
          "pk": { "S": "pk" }
        },
        "NewImage": {
          "sk": { "S": "sk" },
          "pk": { "S": "pk" },
          "List": {
            "L": [
              { "S": "FirstString" },
              { "S": "SecondString" }
            ]
          },
          "Map": {
            "M": {
              "Name": { "S": "Rafal" },
              "Age": { "N": "30" }
            }
          },
          "IntegerNumber": { "N": "223344" },
          "String": { "S": "Lorem Ipsum" },
          "StringSet": { "SS": ["Test1", "Test2"] }
        },
        "SequenceNumber": "125319600000000013310543218",
        "SizeBytes": 200,
        "StreamViewType": "NEW_IMAGE"
      },
      "eventSourceARN": "arn:aws:dynamodb:us-east-1:1234567890:table/my-test-table/stream/2021-12-02T00:00:00.000"
    }
  ]
}
```

A few important points to note about this event:

- the `eventName` property can be one of three values: `INSERT`, `MODIFY` or `REMOVE`

- the `dynamodb.Keys` property contains the primary key of the item that was modified

- the `dynamodb.NewImage` property contains the new values of the modified item (it is present only with the `NEW_IMAGE` and `NEW_AND_OLD_IMAGES` view types, and not for `REMOVE` events). Keep in mind that this data is in DynamoDB JSON format, not plain JSON. You can use a library like `dynamodb-streams-processor` by Jeremy Daly to convert it to plain JSON.
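The conversion itself is straightforward if you would rather not add a dependency (in Python, boto3 also provides `boto3.dynamodb.types.TypeDeserializer` for this). Below is a minimal Python sketch; `from_dynamodb_json` and `image_to_dict` are hypothetical names, binary types (`B`, `BS`) are deliberately left out, and numbers are returned as `Decimal` so that large values keep their precision.

```python
from decimal import Decimal
from typing import Any, Dict


def from_dynamodb_json(value: Dict[str, Any]) -> Any:
    """Convert one DynamoDB JSON attribute value, e.g. {"N": "30"}, to plain Python."""
    ((type_tag, raw),) = value.items()  # an attribute value has exactly one type key
    if type_tag == "S":
        return raw
    if type_tag == "N":
        return Decimal(raw)  # numbers are transmitted as strings
    if type_tag == "BOOL":
        return raw
    if type_tag == "NULL":
        return None
    if type_tag == "L":
        return [from_dynamodb_json(item) for item in raw]
    if type_tag == "M":
        return {key: from_dynamodb_json(val) for key, val in raw.items()}
    if type_tag == "SS":
        return set(raw)
    if type_tag == "NS":
        return {Decimal(n) for n in raw}
    raise ValueError(f"unsupported attribute type: {type_tag}")


def image_to_dict(image: Dict[str, Dict[str, Any]]) -> Dict[str, Any]:
    """Convert a whole NewImage/OldImage (attribute name -> typed value)."""
    return {name: from_dynamodb_json(attr) for name, attr in image.items()}
```

Applied to the `Map` attribute of the sample event, `from_dynamodb_json` yields `{"Name": "Rafal", "Age": Decimal("30")}`.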

Filtering DynamoDB Stream events

Lambda event source mappings support event filtering, including for events coming from a DynamoDB stream. Filtering is especially useful if you want to process only a subset of the events in the stream, e.g. only events that delete records or updates to a specific entity. It is also a great way to reduce the amount of data your Lambda function processes: records that do not match the filter never invoke the function, which drives both operational burden and costs down.

To create an event source mapping with filter criteria using the AWS CLI, use the following command:

```shell
aws lambda create-event-source-mapping \
    --function-name dynamodb-async-stream-processor \
    --batch-size 100 \
    --starting-position LATEST \
    --event-source-arn arn:aws:dynamodb:us-west-2:111122223333:table/MyTable/stream/2021-05-11T12:00:00.000 \
    --filter-criteria '{"Filters": [{"Pattern": "{\"eventName\": [\"REMOVE\"]}"}]}'
```

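The `--filter-criteria` argument uses the same syntax as EventBridge event patterns. For DynamoDB streams, each pattern is matched against the stream record itself, so it refers to fields such as `eventName` or `dynamodb.NewImage` (including DynamoDB type descriptors such as `"S"` or `"N"`), not directly to item attributes.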
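Because the pattern is matched against the whole stream record, it can help to try a pattern locally against sample records before deploying it. The following is a deliberately small Python sketch of EventBridge-style matching that supports only nested objects and lists of allowed exact values. It is not Lambda's actual matcher: operators such as `numeric`, `prefix` or `exists` are not implemented, and `matches` is a hypothetical helper name.

```python
import json
from typing import Any, Dict


def matches(pattern: Dict[str, Any], event: Dict[str, Any]) -> bool:
    """Return True if `event` satisfies `pattern` (exact-value subset only)."""
    for key, rule in pattern.items():
        if key not in event:
            return False  # every field named in the pattern must be present
        value = event[key]
        if isinstance(rule, dict):
            # Nested object: recurse into the matching part of the event.
            if not isinstance(value, dict) or not matches(rule, value):
                return False
        elif isinstance(rule, list):
            # List of allowed values: the field must equal one of them.
            if value not in rule:
                return False
        else:
            raise ValueError(f"pattern values must be objects or lists, got {rule!r}")
    return True


# Example pattern that only lets REMOVE events through.
remove_only = json.loads('{"eventName": ["REMOVE"]}')
```

A pattern on an item attribute has to follow the record layout, e.g. `{"dynamodb": {"NewImage": {"status": {"S": ["active"]}}}}`; a top-level attribute name such as `{"age": [25]}` never matches, because stream records have no such field.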

DynamoDB Streams Example Use Cases

DynamoDB Streams are great if you want to decouple your application's core business logic from the effects that should happen afterward. Your base code can stay minimal while you "plug in" more Lambda functions reacting to changes as your software evolves. This not only enables separation of concerns but also improves security and reduces the impact of possible bugs. Streams can also serve as an alternative to transactions if strict consistency and atomicity are not required.

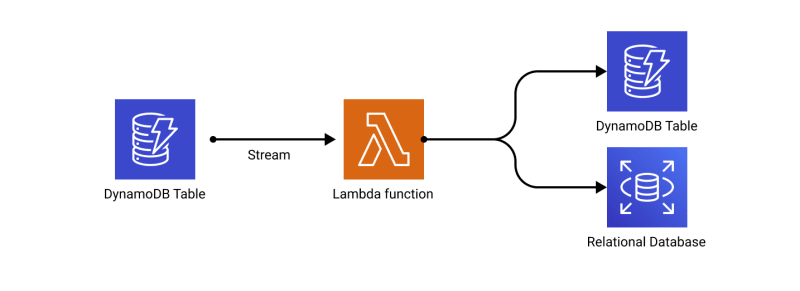

Data replication

Even though cross-region data replication can be solved with DynamoDB global tables, you may still want to replicate your data to another DynamoDB table in the same region, or push it to RDS or S3. DynamoDB Streams are perfect for that.
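A replication consumer mostly has to turn each stream record into a write against the target. Below is a minimal Python sketch, assuming the stream uses `NEW_IMAGE` or `NEW_AND_OLD_IMAGES` so that `INSERT` and `MODIFY` records carry a `NewImage`. `to_replication_actions` is a hypothetical helper that only decides what to write; issuing the actual calls (for example PutItem/DeleteItem against the target table) is left out.

```python
from typing import Any, Dict, List, Tuple

Action = Tuple[str, Dict[str, Any]]


def to_replication_actions(event: Dict[str, Any]) -> List[Action]:
    """Map a batch of stream records to ("put", item) / ("delete", key) actions."""
    actions: List[Action] = []
    for record in event.get("Records", []):
        ddb = record["dynamodb"]
        if record["eventName"] in ("INSERT", "MODIFY"):
            # Full post-change item, kept in DynamoDB JSON so it can be passed
            # unchanged as the Item of a low-level PutItem call.
            actions.append(("put", ddb["NewImage"]))
        elif record["eventName"] == "REMOVE":
            # A deleted item only needs its primary key.
            actions.append(("delete", ddb["Keys"]))
    return actions
```

Applying the actions in the order returned preserves the per-item ordering of the stream. For targets such as RDS or S3 you would convert the DynamoDB JSON to plain values first.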

Content moderation

DynamoDB Streams are also useful for writing "middlewares": you can easily decouple business logic from asynchronous validation or side effects. One example of such a case is content moderation. Once a message or image is added to a table, the DynamoDB stream passes that record to a Lambda function, which validates it with AWS AI services such as Amazon Rekognition or Amazon Comprehend.

Search

Sometimes the data must also be replicated to other destinations, like Elasticsearch, where it can be indexed to make it searchable. DynamoDB Streams allow that too.

Best practices

- Be aware of the eventual consistency of this solution. DynamoDB Stream events are near real time, not real time: there will be a small delay between the write operation and the delivery of the corresponding event.

- Be aware of the constraints: events in the stream are retained for 24 hours, and no more than two processes should read from the same stream shard at the same time (more readers can lead to throttling).

- To achieve the best separation of concerns, use one Lambda function per DynamoDB Stream. It will help you keep IAM permissions minimal and the code as simple as possible.

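- Handle failures. Wrap the whole processing logic in a `try/catch` clause, store failed events in a DLQ (Dead Letter Queue) and retry them later. For stream event sources, Lambda can also send details of batches that keep failing to an on-failure destination (such as an SQS queue or SNS topic) configured on the event source mapping.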
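Lambda can also report partial batch failures for stream sources: when the event source mapping has `ReportBatchItemFailures` among its function response types, the handler returns the sequence number of the first record that failed and Lambda retries from that record instead of reprocessing the whole batch. Below is a minimal Python sketch of that pattern; `process_record` is a hypothetical placeholder for your own logic.

```python
from typing import Any, Callable, Dict, List

Response = Dict[str, List[Dict[str, str]]]


def process_record(record: Dict[str, Any]) -> None:
    """Hypothetical placeholder for the real per-record logic."""
    if record["eventName"] not in ("INSERT", "MODIFY", "REMOVE"):
        raise ValueError(f"unexpected eventName {record['eventName']!r}")


def handle_batch(event: Dict[str, Any],
                 process: Callable[[Dict[str, Any]], None] = process_record) -> Response:
    """Process records in order; on the first failure, report it and stop."""
    for record in event.get("Records", []):
        try:
            process(record)
        except Exception as exc:  # report the bad record instead of failing blindly
            print(f"failed {record['eventID']}: {exc}")
            # Stop here: Lambda retries this record and everything after it.
            return {"batchItemFailures": [
                {"itemIdentifier": record["dynamodb"]["SequenceNumber"]}
            ]}
    return {"batchItemFailures": []}  # empty list means the whole batch succeeded


def lambda_handler(event: Dict[str, Any], context: Any) -> Response:
    """Entry point; assumes ReportBatchItemFailures is enabled on the mapping."""
    return handle_batch(event, process_record)
```

Combined with a maximum retry count and an on-failure destination, this keeps one bad record from blocking its shard indefinitely.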

Notifications and sending e-mails

Similarly to the content moderation example, once a message is saved to the DynamoDB table, a Lambda function subscribed to that table's stream can invoke Amazon Pinpoint or Amazon SES to notify recipients about it.

Frequently Asked Questions

How much do DynamoDB streams cost?

DynamoDB Streams are billed on a "Read Request Units" basis; GetRecords calls made by Lambda as part of DynamoDB triggers are not charged. To learn more, head to our DynamoDB Pricing calculator.

How can I view DynamoDB stream metrics?

DynamoDB Stream metrics can be viewed in two places:

- In the AWS DynamoDB Console, in the Metrics tab

- Using Amazon CloudWatch

What are DynamoDB Stream delivery guarantees?

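Each data modification is written to the stream exactly once. Delivery to your subscribers, however, is at least once: Lambda retries a batch when processing fails, so the same record can reach your function more than once, and your processing should be idempotent.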
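"Exactly once" describes how records are written to the stream; because Lambda retries a batch when processing fails, your function can see the same record again. A common remedy is to make processing idempotent, for example by remembering which `eventID` values have already been handled. The Python sketch below keeps that memory in an in-process set purely for illustration (`IdempotentProcessor` is a hypothetical name); a real function would need a durable store, such as a DynamoDB table written with a conditional put, because Lambda execution environments are neither shared nor long-lived.

```python
from typing import Any, Callable, Dict, Set


class IdempotentProcessor:
    """Skip stream records whose eventID has already been processed."""

    def __init__(self, process: Callable[[Dict[str, Any]], None]) -> None:
        self._process = process
        self._seen: Set[str] = set()  # illustration only: not durable across instances

    def handle(self, record: Dict[str, Any]) -> bool:
        """Process the record once; return False if it was a duplicate."""
        event_id = record["eventID"]
        if event_id in self._seen:
            return False
        self._process(record)
        # Mark as done only after success, so a failed record can be retried.
        self._seen.add(event_id)
        return True
```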

Can I filter DynamoDB Stream events coming to my Lambda function?

Yes, you can use an "event source mapping" with filter criteria to filter events coming to your Lambda function. It uses the same syntax as EventBridge event patterns.
