Training neural networks is not as hard as it used to be 40 years ago, nevertheless, it is as much as art as it is science, thus practice makes the master. Deep learning is a very powerful tool for someone who wants to apply AI to unstructured data, but unstructured data has the tendency to be unstructured, in other words, every piece of data might have a different size and shape. An example of this might be a text, a song, or images. As a programmer, you need to face this difficulty and find solutions. In this post, I'll show you two ways to handle variable-shaped input, when training neural networks.

Padding

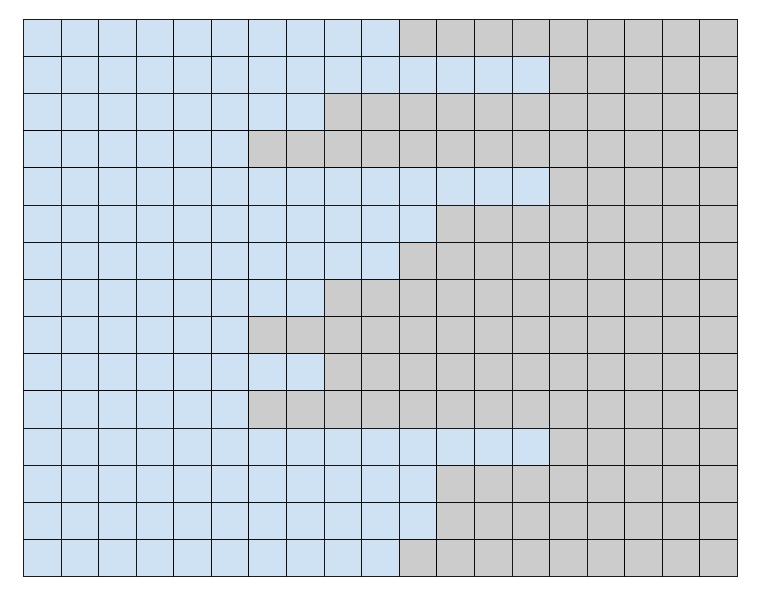

The most simple way to do it is by padding every input to the same size. It is straightforward since you only need to find the biggest tensor in a batch of data and pad every other tensor in the batch to that size. Mmm, that does not seem very efficient, you are using a lot of empty space, and you are making the training harder for the neural network.

Bucketing

A better way would be to sort the data in ascending order and create batches that minimize the padding between tensors. This way you make training faster and avoid unused data. You might still encounter an over-padded situation but it is definitely better than the naive solution. A problem with this solution is that the batches you train with will always be the same, which might cause overfitting but it shouldn't be much of an issue.

Conclusion

This is a simple tip I wanted to share for deep learning enthusiasts. Comment your favorite way to handle variable-shaped data!

Top comments (0)