Note: This article is being incomplete and will not be updated. But still contains a lot of information about Big O Notation.

It doesn't matter whether you're self-taught or you went to school to study computer science. If you're looking to be hired as a software engineer, you're going to need to understand Big O notation along with calculating time/space complexity.

There's a high probability that a question, such as "Can you tell me the time/space complexity of this algorithm?" or "What's the Big O of this function?" will appear in your next technical interview.

My intention with writing this article is to help you understand Big O notation so that when the time comes and you're in an technical interview... you'll feel confident in answering a question about time/space complexity and Big O notation.

Let's begin.

What is Big O notation?

We use Big O notation to determine how fast an algorithm's runtime is, as the number of operations/inputs for that algorithm increases.

By knowing the Big O of our algorithm, we're able to:

- Know how well our algorithm performs as the number of operations increases (in its worst-case scenario).

- Compare algorithms to determine which algorithm is faster and takes less memory.

I want to emphasize, when we use Big O notation, we're discovering how efficient an algorithm is in its worst-case runtime.

How is Big O notation written and said?

Depending on the algorithm, you'll may see Big O notation written in a variety of forms like this: O(n), O(1), O(log n), and more. "n" in this case, is the number of operations in an algorithm. The speed of an algorithm isn’t measured in seconds, but in growth of the number of operations.

But, how would you tell somebody that an algorithm has a Big O notation of O(n), O(1), O(log n)?

Give it a try (I encourage you to say it out loud):

- O(n) = Big O of n_._

- O(1) = Big O of one.

- O(log n) = Big O of log n.

Most times, you can leave out the word "Big" and just say O of ...

Why does it matter if we use Big O notation?

There's a time and place to use Big O notation.

If your goal is to create an application that doesn't require a lot of operations/inputs and more people won't be using it then you're most likely not going to have to stress out about Big O notation. But, if you're working at a company such as: Amazon, Google, Facebook, Twitter, etc... your job will require you to design algorithms that'll be used by millions.

When millions of people are using software that rely on your algorithm, you're going to want to start asking yourself questions such as:

- Is my algorithm readable?

- Is my algorithm fast?

- Can I reduce the number of operations my algorithm does while maintaining readability and speed?

- How much memory is my algorithm taking?

There's always limits to how much you can improve in one area before another area starts suffering. And what I mean by this is, if I focus purely on making my algorithm as fast as possible, there's a good chance that my algorithm won't be as easy to read or my algorithm maybe my algorithm would require more space in trade for more speed.

These are the types of things you must consider when designing your algorithms and by thinking about these things, they make you a better engineer.

Speed isn't everything

Obviously you want your code to be fast, but you also want to be able to come back to your code (or have somebody with fresh eyes) nine months later and be able to understand what your code is doing.

Consider Big O notation as something that you can add to your software engineering toolkit. You have a bunch of tools in your toolbox such as: data structures, design patterns, and sorting algorithms. You then use these tools in your toolbox to create different types of algorithms. Now you're able to use Big O notation to compare the algorithms you've created with each other to select the algorithm that'll better fit your need.

Before we start moving onto time and space complexities, I think it's important to mention that the reason why it's incredibly important to use something like Big O notation, especially when building for scalability is because everything seems fast when there's only a small amount of inputs and operations being done. It's only when you get to large amounts of inputs and operations that you can really see how efficient your algorithm really is.

Big-O Complexity Chart

Before we get into calculating and understanding time and space complexities, above is a chart courtesy of (https://www.bigocheatsheet.com) which shows us complexities of common algorithms. I recommend bookmarking this website as it helps remembering the complexities and how well they are.

How do we use this chart?

When we figure out the Big O notation of our algorithm, we're able to use this chart to identify how our algorithm performs either in time or space complexity. For example, If we figure out that our algorithm is O(n), we can look at this chart to find the line representing O(n). Notice that as the number of elements increase (goes further to the right along the x-axis), we notice that the number of operations (y axis) doesn't increase as much. The chart says that an algorithm with O(n)... is fair.

Time complexity

Big O notation can be used for describing two things: Time complexity and Space complexity. It's important to know how to find and describe both of them using Big O notation.

How to find the time complexity in Big O notation

We find the time complexity by counting the number of operations in an algorithm and then using a chart like above to determine whether that time complexity is good or bad.

Our first complexity, O(1) - Constant Time

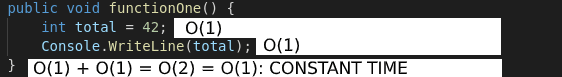

In this example, I have a function named functionOne. In it, I initialized an integer named total and set it equal to 42. Afterwards, I wrote to the console that total.

There were two operations done, each of these operations were O(1). There was no loops at all, very quickly we initialized an integer and displayed it to console. Every time we invoke this function, it will always be "O of one". Having a time complexity, O of one.

You may have noticed that when we counted the total amount of operations it was O(2) but for then I said this function was O(1) - Constant Time. There are some simplifying rules that I will get to later. For now, know that this is what constant time and a time complexity of O(1) looks like... no loops. It's important to remember that O(1) stands for Constant Time.

Here are some more examples of O(1) - Constant Time

This graph represents O(1), every time we invoke a function with a time complexity of O(1), we know that it's going to be really fast because the number of operations is the same each time. Notice that the line is a horizontal line at 1?

What if our algorithm was O(2)? Then we'd have a horizontal line at y = 2, it'd still be a horizontal line with no changes in the number of operations. So if we see any constants e.g. O(500), O(32), O(17), etc... we always simplify it to O(1) because O(1) has the same behavior as any other constant.

Our second complexity, O(n) - Linear Time

This is our second complexity, O(n) - Linear Time. A loop is considered O(n).

Do you remember what the n stands for in O(n)? # of operations

The reason a loop is O(n) is because a loop would typically go through the loop, n amount of times. You can also define a specific amount of times you want to loop through a loop... this still results in looping n amount of times. As a result, this is known as linear time. As the number of elements(inputs) increase, the number of operations increase.

O(n) Linear Time = for loops, while loops through n items.

In linear time, as the number of elements increase, the number of operations increase and time complexity is longer.

Our third complexity, O(n²) - Quadratic Time

Looking at our Big O cheatsheet, notice that the Quadratic Time complexity is in the red. It's horrible. For example, if we have 400 operations, we end up having, 160,000 operations. Now we're starting to have a better understanding of why Big O notation is important especially when dealing with scalability.

There's more to Big O Notation, but I hope this article can serve as an introduction to Big O Notation. And an article you can refer to along with your other resources.

Thank you for reading.

Top comments (0)