The main issue with large files is that we can’t load them entirely into the memory. It is easy to get out-of-memory error and crash. And obviously, all JSON parsers in all languages will run out-of-memory exception in no time. Any file larger than 100M is difficult and in most cases impossible to analyse.

JSON is a popular text-based format in the industry. Which represents a structured data-based object. It is commonly used for transmitting data in web applications (e.g., sending some data from the server to the client, so it can be displayed on a web page, or vice versa)

Also, it works well with JSV format — JSV Text Format (JSON + CSV) is a text-based format developed by the ServiceStack team and is optimized for both size and speed.

So, our goal here is to demonstrate to you how you can use WORKSHEETS Data Studio to process and analyse large JSON files straight in your browser (tested with JSON files up to 4G).

As I mentioned before, we can’t afford to load the entire file into the memory. So, how are we going to analyse then? There are a few tricks we can apply:

- Limit result set to 100, 1000, or any other reasonable amount.

- Get distinct values for one of the fields.

- Apply filter criteria and reduce element count.

- Transform each element and reduce the size you are loading.

All these actions you can do in your browser with Worksheets Data Studio and no installations are required. You can find it online: https://run.worksheet.systems or Chrome Extension

There are two ways you can process JSON files:

- Use JSON editor to open/process files

- Use JSPython. It is a more advanced way of processing files. Where you can program more logic into it. You can use the function openFileAsArray where you can choose file from the disk and parse data partially with all control you need. Function

openFileAsArraycomes with following parameters:-

take and skip — specify how many items you want to take or to skip. This will allow you to

pagebig JSON file - distinct — if the distinct field is defined, then the function will return unique values for this field

- containsText — filter results by searching text before parsing.

- filterText — a callback predicate function where you can have pre-parsed string and define filter criteria

- filter — a callback predicate function where you can define filter criteria with parsed element object

- map — a callback function where you can define/changes result items

- chunkSize — define a chunk size we are processing at a time. By default it is 64 * 1024 * 1024 (64K)

- chunkProcessor — a callback function where you can handle a parsed chunk of items. If this function is defined, then we will not return items. You will have to process a batch of items yourself. In one of the use cases, you can save a batch of items to the database.

-

take and skip — specify how many items you want to take or to skip. This will allow you to

Let's review all 4 use cases and I will demonstrate all available approaches:

Limit elements count

A good starting point is to look for a small number of elements, lets's say you want to see only 1000 elements and figure out the element’s structure and decide your future steps for analysis:

Use JSON editor in Worksheets Data Studio

Open new blank JSON tab

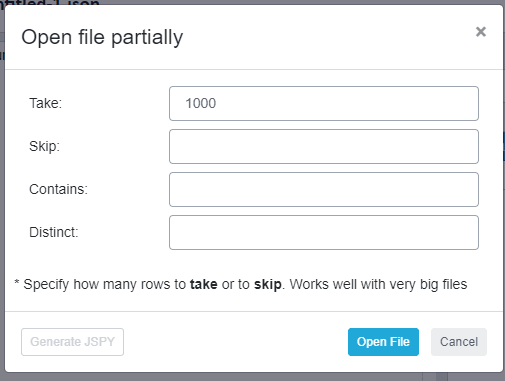

In the top right corner, you will notice the button Open File and Open file partially. Where the first button will open and load the entire JSON/JSV/CSV file into JSON Editor. And button Open file partially will open the following dialog, which will allow you to work with large files

If you press Open File button. You will be able to see the first 1000 elements from your JSON file. This is very helpful to analyze file structure and decide on further analysis actions

If you press Open File button. You will be able to see the first 1000 elements from your JSON file. This is very helpful to analyze file structure and decide on further analysis actions

Use JSPython

Alternatively, you can use JSPython editor and programmatically handle results. FunctionopenFileAsArray will open the same dialog and will return processed elements results for further data manipulations in JSPython.

Here is an example

# Welcome to JSPython (https://jspython.dev)

data = openFileAsArray({

take: 1000

#skip: 1000

}).data

# work with data array here

return data

Get Distinct values

After you’ve seen a file structure and the top 1000 elements in your JSON file. Next, you would be probably more interested to see unique values for some of the fields. Let’s say, in our example, you would be interested to see the unique Product_ID in the file

Or you can achieve the same result with JSPython code

Apply filter criteria

And now, when you’ve seen a chunk of your JSON file and analyzed unique values from this file. You should know enough to filter and get whatever is needed for your analysis.

Let's say you want to see only elements that contain the text InterestRate:CrossCurrency:FixedFloat.

Then you can specify in the dialog:

Or with JSPython you can define a predicate function with more advanced filter criteria. you can add extra filter criteria e.g. Action == ‘CANCEL’

Transform results

One other way of reducing memory footprint is to remove some of the fields for each element. Especially, if some of the fields are not important. This option is available only with JSPython code

Here is JSPython example with filter callback and transformation map

openFileAsArray({

filter: r=>

r.Product_ID == 'InterestRate:CrossCurrency:FixedFloat'

and r.Action == 'CANCEL',

map: r => return {

id: r.Dissemination_ID,

effectiveDate: dateTime(r.Effective_Date.substring(0, 10)),

notional: r.Notional_Amount_1,

notionalCcy: r.Notional_Currency_1

}

}).data

and eventually, results will look like

Video tutorial

Conclusion

WORKSHEETS Data Studio simplifies working with large JSON files or even, sometimes, is the only option to view and analyse large JSON file. However, the best way to analyze big files will be to load the entire file into an SQL database and then use SQL queries to analyze much more effectively. This is something we will show you how to do in the next tutorial.

Originally published at https://www.worksheet.systems

Latest comments (0)