The JSON format is very friendly for both people and for machines. In other words, it is very well structured for people to read as well as you can parse it quickly with any programming language.

However, all important information is better stored in relational SQL databases. In relational databases, all information is stored in tables, which consist of rows and columns. So, we will have to break down our JSON file into rows and columns.

Supporting Video tutorial

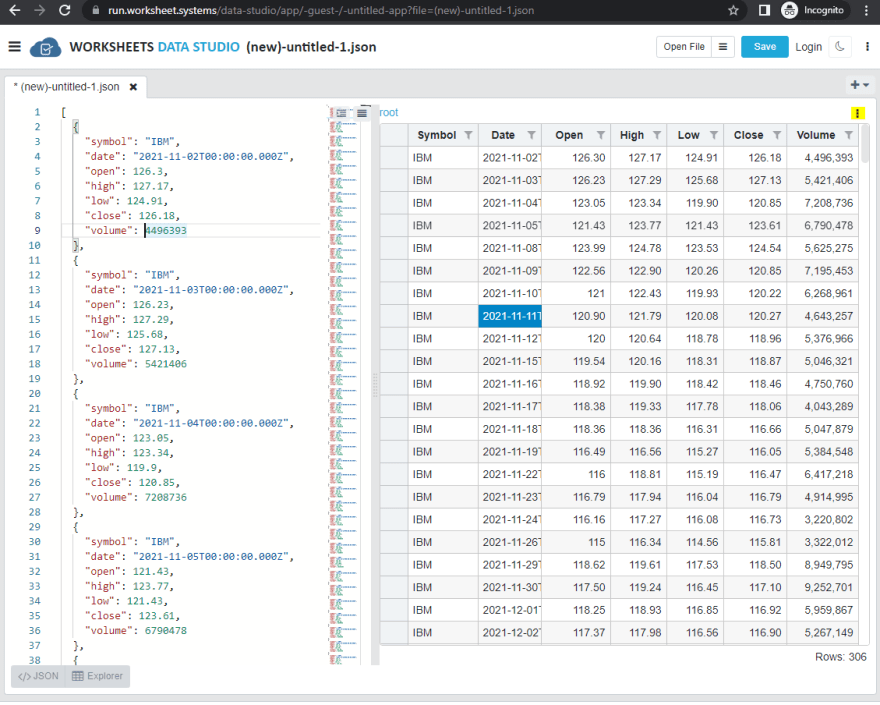

It is very easy to do with WORKSHEETS Data Studio. Just go to JSON editor and open the JSON file or paste a valid JSON data into the editor.

You will instantly see in a right-hand panel, that your JSON had been rendered into the data grid which has a table structure. Each element from an array is a row and each property is a column. Any nested objects are kept in a valid JSON format.

Please note. You have to have a valid SQL connection

So, we are ready to save this to the database. Press the Save To Database menu item. Choose:

- SQL Connection, schema, tableName.

- Make sure field mapping is correct

- Choose the primary key

and you are ready to save.

We have 3 ways to save :

Upsert / Merge - So, it will insert or update rows in your table, based on a primary key(s). this is the default way and will work well in most cases.

Append - it will append only your data. A bit more performance optimized

Bulk Insert - it delivers the best performance. But, you will have to ensure data integrity

In a bit more advanced scenarios you may want to change the structure of your

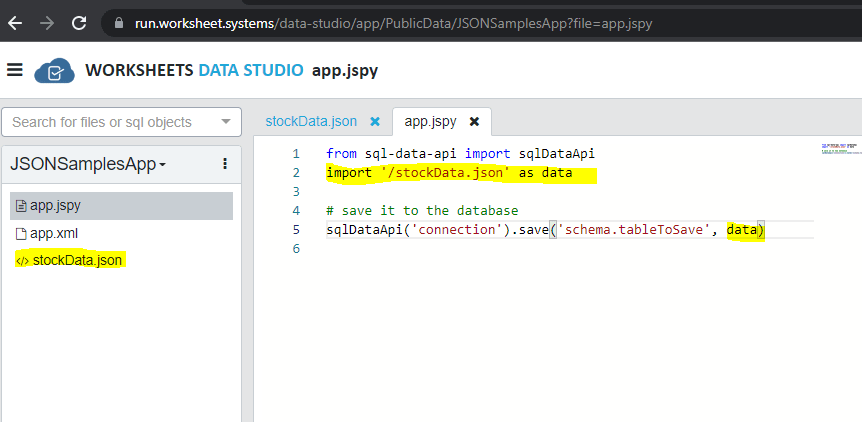

Use JSPython to save JSON Data to SQL Database

If you need more flexibility and control, you can use JSPython to import your data. You can use SQL Data Api library to save. Also, you can save JSON to Worksheets Data Studio project and then import it into a jspy file for further processing

Open JSON file and process it

You can use openFileAsArray function

openFileAsArray()

Transform JSON Data

You can save JSON data in the projects and then import it into JSPython

# transform object if needed

fileData = openFileAsArray()

return fileData.data.map(r =>

r.fileName = fileData.fileName

r.date = dateTime(r.date)

r.avg = (r.high + r.low)/2

return r

)

Save Data To SQL

# Welcome to JSPython (https://jspython.dev)

from sql-data-api import sqlDataApi

fileData = openFileAsArray()

items = fileData.data

.map(r =>

r.fileName = fileData.fileName

r.date = dateTime(r.date)

r.avg = (r.high + r.low)/2

return r

)

sqlDataApi('public-data-connect').save('publicData.table2Ex', items)

Importing large JSON file

We do not recommend storing/loading large JSON files, because your browsers will run out of memory and crash. The previous article demonstrated how you can work with large JSON files. In this article, I will show you how you can import large JSON files into smaller chunks.

Here is a JSPython:

from sql-data-api import sqlDataApi

async def saveItemsToDatabase(items, fileName):

#add fileName for each element

items = items.map(r => Object.assign({fileName}, r))

# save it to the database

res = sqlDataApi('connectionName').save('schema.tableName',items)

print(res)

return openFileAsArray({

chunkProcessor: saveItemsToDatabase

}).data

As in a previous example, we use the openFileAsArray function. Where we define a chunkProcessor function, which uses sql-data-api to save items to the database. Meanwhile, as in this example, we are transforming elements and adding the fileName field.

Before running this code, you have to have SQL Connection defined and which has a table with a conforming structure. If you are running a large file, you can always open the first 1000 (or 10000) rows and use the Save To Database functionality to create a new table with all fields. Please make sure that varchar length and datatype can accommodate all rows in a file

WORKSHEETS Data Studio

WORKSHEETS Data Studio is a low-code data management studio which makes it easy to work with different kinds of files and we have straightforward processes to load JSON data into the database

Top comments (1)

Thanks for an excellent tutorial.