How to set up CI (Continuous Integration) pipelines for dockerized PHP applications with Github Actions and Gitlab Pipelines

This article appeared first on https://www.pascallandau.com/ at CI Pipelines for dockerized PHP Apps with Github & Gitlab [Tutorial Part 7]

In the seventh part of this tutorial series on developing PHP on Docker we will set up a CI (Continuous Integration) pipeline to run code quality tools and tests on Github Actions and Gitlab Pipelines.

All code samples are publicly available in my Docker PHP Tutorial repository on Github. You can find the branch for this tutorial at part-7-ci-pipeline-docker-php-gitlab-github.

All published parts of the Docker PHP Tutorial are collected under a dedicated page at Docker PHP Tutorial. The previous part was Use git-secret to encrypt secrets in the repository and the following one is A primer on GCP Compute Instance VMs for dockerized Apps.

If you want to follow along, please subscribe to the RSS feed or via email to get automatic notifications when the next part comes out :)


Introduction

CI is short for Continuous Integration and to me mostly means running the code quality tools and tests of a codebase in an isolated environment (preferably automatically). This is particularly important when working in a team, because the CI system acts as the final gatekeeper before features or bugfixes are merged into the main branch.

I initially learned about CI systems when I dipped my toes into the open source waters. Back in the day I used Travis CI for my own projects and replaced it with Github Actions at some point. At ABOUT YOU we started out with a self-hosted Jenkins server and then moved on to Gitlab CI as a fully managed solution (though we use custom runners).

Recommended reading

This tutorial builds on top of the previous parts. I'll do my best to cross-reference the corresponding articles when necessary, but I would still recommend doing some upfront reading on:

- the general folder structure, the update of the .docker/ directory and the introduction of a .make/ directory
- the general usage of make and its evolution as well as the connection to docker compose commands
- the concepts of the docker containers and the docker compose setup

And as a nice-to-know:

- the setup of PhpUnit for the test make target as well as the qa make target
- the usage of git-secret to handle secret values

Approach

In this tutorial I'm going to explain how to make our existing docker setup work with Github Actions and Gitlab CI/CD Pipelines. As I'm a big fan of a "progressive enhancement" approach, we will ensure that all necessary steps can be performed locally through make. This has the additional benefit of keeping a single source of truth (the Makefile) which will come in handy when we set up the CI system on two different providers (Github and Gitlab).

The general process will look very similar to the one for local development:

- build the docker setup

- start the docker setup

- run the qa tools

- run the tests

You can find the final results, including the concrete yml files and links to the repositories, in the CI setup section.

On a code level, we will treat CI as an environment, configured through the env variable ENV. So far we only used ENV=local and we will extend that to also use ENV=ci. The necessary changes are explained in the sections following the concrete CI setup instructions.

Try it yourself

To get a feeling for what's going on, you can start by executing the local CI run:

- check out the branch part-7-ci-pipeline-docker-php-gitlab-github
- initialize make
- run the .local-ci.sh script

This should give you a similar output as presented in the Execution example.

git checkout part-7-ci-pipeline-docker-php-gitlab-github

# Initialize make

make make-init

# Execute the local CI run

bash .local-ci.sh

CI setup

General CI notes

Initialize make for CI

As a very first step we need to "configure" the codebase to operate for the ci environment. This is done through the make-init target as explained later in more detail in the Makefile changes section via

make make-init ENVS="ENV=ci TAG=latest EXECUTE_IN_CONTAINER=true GPG_PASSWORD=12345678"

$ make make-init ENVS="ENV=ci TAG=latest EXECUTE_IN_CONTAINER=true GPG_PASSWORD=12345678"

Created a local .make/.env file

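ENV=ci ensures that we use the configuration for the ci environment, e.g. the ci-specific docker compose files (see ENV based docker compose config).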

TAG=latest is just a simplification for now because we don't do anything with the images yet. In an upcoming tutorial we will push them to a container registry for later usage in production deployments and then set the TAG to something more meaningful (like the build number).

EXECUTE_IN_CONTAINER=true forces every make command that uses a RUN_IN_*_CONTAINER setup to run in a container. This is important, because the Gitlab runner itself runs in a docker container, which would otherwise cause any affected target to omit the $(DOCKER_COMPOSE) exec prefix.

GPG_PASSWORD=12345678 is the password for the secret gpg key as mentioned in Add a password-protected secret gpg key.
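The make-init target itself is covered in the Makefile changes section. Purely as a mental model, the following bash sketch shows what "turning an ENVS string into an env file" amounts to: every KEY=VALUE pair ends up on its own line. The function name write_make_env is hypothetical, it assumes that values contain no whitespace, and it is not the Makefile implementation used in this tutorial.

```bash
#!/usr/bin/env bash
# Hypothetical sketch, NOT the tutorial's make-init implementation:
# writes each KEY=VALUE pair of an ENVS string to its own line in a file.
# Assumption: values don't contain whitespace.
write_make_env() {
  local envs="$1" target="$2" pair
  local -a pairs
  # split on whitespace without glob expansion
  read -r -a pairs <<< "$envs"
  # validate all pairs before touching the target file
  for pair in "${pairs[@]}"; do
    if [[ "$pair" != ?*=* ]]; then
      echo "Invalid pair '$pair' (expected KEY=VALUE)" >&2
      return 1
    fi
  done
  printf '%s\n' "${pairs[@]}" > "$target"
  echo "Created $target"
}
```

For example, write_make_env "ENV=ci TAG=latest EXECUTE_IN_CONTAINER=true GPG_PASSWORD=12345678" /tmp/make.env would produce a file with four lines, one per variable.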

wait-for-service.sh

I'll explain the "container is up and running but the underlying service is not" problem for the mysql service and how we can solve it with a health check later in this article at Adding a health check for mysql. We deliberately don't let docker compose take care of the waiting, because we can make "better use of the waiting time", and instead implement it ourselves with a simple bash script located at .docker/scripts/wait-for-service.sh:

#!/bin/bash

name=$1
max=$2
interval=$3

# abort with a usage hint if no service name was given
[ -z "$1" ] && echo "Usage example: bash wait-for-service.sh mysql 5 1" && exit 1
[ -z "$2" ] && max=30
[ -z "$3" ] && interval=1

echo "Waiting for service '$name' to become healthy, checking every $interval second(s) for max. $max times"

i=0
while true; do
  i=$((i + 1))
  echo "[$i/$max] ...";
  status=$(docker inspect --format "{{json .State.Health.Status }}" "$(docker ps --filter name="$name" -q)")
  if echo "$status" | grep -q '"healthy"'; then
    echo "SUCCESS";
    break
  fi
  if [ "$i" -eq "$max" ]; then
    echo "FAIL";
    exit 1
  fi
  sleep "$interval";
done

This script waits for the docker container matching $name to become "healthy" by checking the .State.Health.Status info of the docker inspect command.

CAUTION: The script uses $(docker ps --filter name="$name" -q) to determine the id of the container, i.e. it will "match" all running containers against the $name - this would fail if there is more than one matching container! I.e. you must ensure that $name is specific enough to identify one single container uniquely.
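If you'd rather have the script fail loudly than inspect the wrong container, one option is to count the IDs returned by docker ps before calling docker inspect. A minimal sketch, using a hypothetical helper single_container_id that reads container IDs (one per line, as printed by docker ps -q) from stdin:

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads container IDs (one per line, e.g. from
# `docker ps --filter name=... -q`) on stdin and prints the ID only if
# exactly one container matched; otherwise it fails with a message.
single_container_id() {
  local -a ids=()
  local id
  # "|| [ -n "$id" ]" also keeps a last line without a trailing newline
  while IFS= read -r id || [ -n "$id" ]; do
    if [ -n "$id" ]; then
      ids+=("$id")
    fi
  done
  if [ "${#ids[@]}" -ne 1 ]; then
    echo "Expected exactly 1 matching container, found ${#ids[@]}" >&2
    return 1
  fi
  echo "${ids[0]}"
}
```

In wait-for-service.sh it could be used as id=$(docker ps --filter name="$name" -q | single_container_id) || exit 1 before the loop, so that an ambiguous $name aborts immediately instead of being retried $max times.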

The script will check up to $max times at an interval of $interval seconds. See these answers to the "How do I write a retry logic in script to keep retrying to run it up to 5 times?" question for the implementation of the retry logic. To check the health of the mysql service 5 times with 1 second between each try, it can be called via

bash wait-for-service.sh mysql 5 1

Output

$ bash wait-for-service.sh mysql 5 1

Waiting for service 'mysql' to become healthy, checking every 1 second(s) for max. 5 times

[1/5] ...

[2/5] ...

[3/5] ...

[4/5] ...

[5/5] ...

FAIL

# OR

$ bash wait-for-service.sh mysql 5 1

Waiting for service 'mysql' to become healthy, checking every 1 second(s) for max. 5 times

[1/5] ...

[2/5] ...

SUCCESS
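The retry logic itself is independent of docker. The following is a sketch of a generic variant, assuming a hypothetical retry function that takes the maximum number of attempts, the interval in seconds and the command to run. is_healthy is an equally hypothetical wrapper around docker inspect that uses the plain (non-JSON) format output, so it compares against healthy without quotes.

```bash
#!/usr/bin/env bash
# Hypothetical generic version of the retry loop in wait-for-service.sh.
# retry MAX INTERVAL COMMAND [ARGS...]
# Runs COMMAND up to MAX times, sleeping INTERVAL seconds between attempts.
retry() {
  local max="$1" interval="$2"
  shift 2
  local i
  for ((i = 1; i <= max; i++)); do
    echo "[$i/$max] ..."
    if "$@"; then
      echo "SUCCESS"
      return 0
    fi
    # no need to sleep after the last attempt
    if ((i < max)); then
      sleep "$interval"
    fi
  done
  echo "FAIL"
  return 1
}

# Hypothetical check for a single container (requires docker and a
# HEALTHCHECK on the container); succeeds once the status is "healthy".
is_healthy() {
  [ "$(docker inspect --format '{{.State.Health.Status}}' "$1" 2>/dev/null)" = "healthy" ]
}
```

A call equivalent to the example above would be retry 5 1 is_healthy "$(docker ps --filter name=mysql -q)". Passing the check as a command keeps the loop testable without a running docker daemon.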

The problem of "container dependencies" isn't new and there are already some existing solutions out there.

But unfortunately all of them operate by checking the availability of a host:port combination and in the case of mysql that didn't help, because the container was up, the port was reachable but the mysql service in the container was not.

Setup for a "local" CI run

As mentioned under Approach, we want to be able to perform all necessary steps locally and I created a corresponding script at .local-ci.sh:

#!/bin/bash

# fail on any error

# @see https://stackoverflow.com/a/3474556/413531

set -e

make docker-down ENV=ci || true

start_total=$(date +%s)

# STORE GPG KEY

cp secret-protected.gpg.example secret.gpg

# DEBUG

docker version

docker compose version

cat /etc/*-release || true

# SETUP DOCKER

make make-init ENVS="ENV=ci TAG=latest EXECUTE_IN_CONTAINER=true GPG_PASSWORD=12345678"

start_docker_build=$(date +%s)

make docker-build

end_docker_build=$(date +%s)

mkdir -p .build && chmod 777 .build

# START DOCKER

start_docker_up=$(date +%s)

make docker-up

end_docker_up=$(date +%s)

make gpg-init

make secret-decrypt-with-password

# QA

start_qa=$(date +%s)

make qa || FAILED=true

end_qa=$(date +%s)

# WAIT FOR CONTAINERS

start_wait_for_containers=$(date +%s)

bash .docker/scripts/wait-for-service.sh mysql 30 1

end_wait_for_containers=$(date +%s)

# TEST

start_test=$(date +%s)

make test || FAILED=true

end_test=$(date +%s)

end_total=$(date +%s)

# RUNTIMES

echo "Build docker: " `expr $end_docker_build - $start_docker_build`

echo "Start docker: " `expr $end_docker_up - $start_docker_up `

echo "QA: " `expr $end_qa - $start_qa`

echo "Wait for containers: " `expr $end_wait_for_containers - $start_wait_for_containers`

echo "Tests: " `expr $end_test - $start_test`

echo "---------------------"

echo "Total: " `expr $end_total - $start_total`

# CLEANUP

# reset the default make variables

make make-init

make docker-down ENV=ci || true

# EVALUATE RESULTS

if [ "$FAILED" == "true" ]; then echo "FAILED"; exit 1; fi

echo "SUCCESS"
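The start_*/end_* pairs in the script repeat the same pattern for every section. As an optional refactoring (not what the tutorial uses), the measurement could be wrapped in a hypothetical timed helper that runs a command, records the elapsed seconds in a hypothetical RUNTIMES array and passes the command's exit code through:

```bash
#!/usr/bin/env bash
# Hypothetical helper: collects "label: seconds" entries in RUNTIMES.
RUNTIMES=()

# timed LABEL COMMAND [ARGS...]
# Runs COMMAND, records its runtime in whole seconds and returns its exit code.
timed() {
  local label="$1"
  shift
  local start status
  start=$(date +%s)
  "$@"
  status=$?
  RUNTIMES+=("$label: $(( $(date +%s) - start ))")
  return "$status"
}

# Prints all recorded runtimes in the order they were measured.
print_runtimes() {
  printf '%s\n' "${RUNTIMES[@]}"
}
```

Because the exit code is preserved, it still combines with the failure tracking of the script: timed "QA" make qa || FAILED=true does not abort a set -e script when make qa fails, since bash ignores set -e for commands on the left-hand side of ||, including inside functions called there. print_runtimes would then replace the individual echo/expr lines at the end.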

Run details

- as a preparation step, we first ensure that no outdated ci containers are running (this is only necessary locally, because runners on a remote CI system will start "from scratch")

make docker-down ENV=ci || true

- we take some time measurements to understand how long certain parts take via

start_total=$(date +%s)

to store the current timestamp

- we need the secret gpg key in order to decrypt the secrets and simply copy the password-protected example key (in the actual CI systems the key will be configured as a secret value that is injected in the run)

# STORE GPG KEY

cp secret-protected.gpg.example secret.gpg

- I like printing some debugging info in order to understand which exact circumstances we're dealing with (tbh, this is mostly relevant when setting the CI system up or making modifications to it)

# DEBUG

docker version

docker compose version

cat /etc/*-release || true

- for the docker setup, we start with initializing the environment for ci

# SETUP DOCKER

make make-init ENVS="ENV=ci TAG=latest EXECUTE_IN_CONTAINER=true GPG_PASSWORD=12345678"

then build the docker setup

make docker-build

and finally add a .build/ directory to collect the build artifacts

mkdir -p .build && chmod 777 .build

- then, the docker setup is started

# START DOCKER

make docker-up

and gpg is initialized so that the secrets can be decrypted

make gpg-init

make secret-decrypt-with-password

We don't need to pass a GPG_PASSWORD to secret-decrypt-with-password because we have set it up in the previous step as a default value via make-init.

- once the application container is running, the qa tools are run by invoking the qa make target

# QA

make qa || FAILED=true

The || FAILED=true part makes sure that the script will not be terminated if the checks fail. Instead, the fact that a failure happened is "recorded" in the FAILED variable so that we can evaluate it at the end. We don't want the script to stop here because we want the following steps to be executed as well (e.g. the tests).

- to mitigate the "mysql is not ready" problem, we will now apply the wait-for-service.sh script

# WAIT FOR CONTAINERS

bash .docker/scripts/wait-for-service.sh mysql 30 1

- once mysql is ready, we can execute the tests via the test make target and apply the same || FAILED=true workaround as for the qa tools

# TEST

make test || FAILED=true

- finally, all the timers are printed

# RUNTIMES

echo "Build docker: " `expr $end_docker_build - $start_docker_build`

echo "Start docker: " `expr $end_docker_up - $start_docker_up `

echo "QA: " `expr $end_qa - $start_qa`

echo "Wait for containers: " `expr $end_wait_for_containers - $start_wait_for_containers`

echo "Tests: " `expr $end_test - $start_test`

echo "---------------------"

echo "Total: " `expr $end_total - $start_total`

- we clean up the resources (this is only necessary when running locally, because the runner of a CI system would be shut down anyway)

# CLEANUP

make make-init

make docker-down ENV=ci || true

- and finally evaluate if any error occurred when running the qa tools or the tests

# EVALUATE RESULTS

if [ "$FAILED" == "true" ]; then echo "FAILED"; exit 1; fi

echo "SUCCESS"
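The interplay of set -e and || FAILED=true is the core of the script, so here is a stripped-down sketch of it. qa_step and test_step are hypothetical stand-ins for make qa and make test whose exit codes can be controlled via the (equally hypothetical) QA_EXIT and TEST_EXIT variables. Unlike .local-ci.sh, the sketch collects the names of the failing steps instead of a plain true, which is closer to what the Github workflow does with $FAILED.

```bash
#!/usr/bin/env bash
# Hypothetical stand-ins for `make qa` and `make test`; their exit codes can
# be set via QA_EXIT and TEST_EXIT (both default to 0 = success).
qa_step()   { echo "running qa";    return "${QA_EXIT:-0}"; }
test_step() { echo "running tests"; return "${TEST_EXIT:-0}"; }

# The function body runs in a subshell "( ... )" so that `set -e` does not
# leak into the calling shell.
run_pipeline() (
  set -e                               # abort on unguarded errors, like .local-ci.sh
  FAILED=""
  qa_step   || FAILED="$FAILED qa"     # guarded: the failure is recorded, not fatal
  test_step || FAILED="$FAILED test"   # still runs even if qa_step failed
  if [ -n "$FAILED" ]; then
    echo "FAILED:$FAILED"
    exit 1
  fi
  echo "SUCCESS"
)
```

With QA_EXIT=1, the tests still run and the pipeline ends with "FAILED: qa" and exit code 1, which mirrors the EVALUATE RESULTS part of the script.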

Execution example

Executing the script via

bash .local-ci.sh

yields the following (shortened) output:

$ bash .local-ci.sh

Container dofroscra_ci-redis-1 Stopping

# Stopping all other `ci` containers ...

# ...

Client:

Cloud integration: v1.0.22

Version: 20.10.13

# Print more debugging info ...

# ...

Created a local .make/.env file

ENV=ci TAG=latest DOCKER_REGISTRY=docker.io DOCKER_NAMESPACE=dofroscra APP_USER_NAME=application APP_GROUP_NAME=application docker compose -p dofroscra_ci --env-file ./.docker/.env -f ./.docker/docker-compose/docker-compose-php-base.yml build php-base

#1 [internal] load build definition from Dockerfile

# Output from building the docker containers

# ...

ENV=ci TAG=latest DOCKER_REGISTRY=docker.io DOCKER_NAMESPACE=dofroscra APP_USER_NAME=application APP_GROUP_NAME=application docker compose -p dofroscra_ci --env-file ./.docker/.env -f ./.docker/docker-compose/docker-compose.local.ci.yml -f ./.docker/docker-compose/docker-compose.ci.yml up -d

Network dofroscra_ci_network Creating

# Starting all `ci` containers ...

# ...

"C:/Program Files/Git/mingw64/bin/make" -s gpg-import GPG_KEY_FILES="secret.gpg"

gpg: directory '/home/application/.gnupg' created

gpg: keybox '/home/application/.gnupg/pubring.kbx' created

gpg: /home/application/.gnupg/trustdb.gpg: trustdb created

gpg: key D7A860BBB91B60C7: public key "Alice Doe protected <alice.protected@example.com>" imported

# Output of importing the secret and public gpg keys

# ...

"C:/Program Files/Git/mingw64/bin/make" -s git-secret ARGS="reveal -f -p 12345678"

git-secret: done. 1 of 1 files are revealed.

"C:/Program Files/Git/mingw64/bin/make" -j 8 -k --no-print-directory --output-sync=target qa-exec NO_PROGRESS=true

phplint done took 4s

phpcs done took 4s

phpstan done took 8s

composer-require-checker done took 8s

Waiting for service 'mysql' to become healthy, checking every 1 second(s) for max. 30 times

[1/30] ...

SUCCESS

PHPUnit 9.5.19 #StandWithUkraine

........ 8 / 8 (100%)

Time: 00:03.077, Memory: 28.00 MB

OK (8 tests, 15 assertions)

Build docker: 12

Start docker: 2

QA: 9

Wait for containers: 3

Tests: 5

---------------------

Total: 46

Created a local .make/.env file

Container dofroscra_ci-application-1 Stopping

Container dofroscra_ci-mysql-1 Stopping

# Stopping all other `ci` containers ...

# ...

SUCCESS

Setup for Github Actions

- Repository (branch part-7-ci-pipeline-docker-php-gitlab-github)
- CI/CD overview (Actions)

- Example of a successful job

- Example of a failed job

If you are completely new to Github Actions, I recommend starting with the official Quickstart Guide for GitHub Actions and the Understanding GitHub Actions article. In short:

- Github Actions are based on so called Workflows
  - Workflows are yaml files that live in the special .github/workflows directory in the repository
- a Workflow can contain multiple Jobs
- each Job consists of a series of Steps
- each Step either uses an existing action via a uses: element or has a run: element that represents a command that is executed in a new shell
  - multi-line commands that should use the same shell are written as

    - run: |
        echo "line 1"
        echo "line 2"

    See also difference between "run |" and multiple runs in github actions

The Workflow file

Github Actions are triggered automatically based on the files in the .github/workflows directory. I have added the file .github/workflows/ci.yml with the following content:

name: CI build and test

on:
  # automatically run for pull request and for pushes to branch "part-7-ci-pipeline-docker-php-gitlab-github"
  # @see https://stackoverflow.com/a/58142412/413531
  push:
    branches:
      - part-7-ci-pipeline-docker-php-gitlab-github
  pull_request: {}
  # enable to trigger the action manually
  # @see https://github.blog/changelog/2020-07-06-github-actions-manual-triggers-with-workflow_dispatch/
  # CAUTION: there is a known bug that makes the "button to trigger the run" not show up
  # @see https://github.community/t/workflow-dispatch-workflow-not-showing-in-actions-tab/130088/29
  workflow_dispatch: {}

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v1

      - name: start timer
        run: |
          echo "START_TOTAL=$(date +%s)" > $GITHUB_ENV

      - name: STORE GPG KEY
        run: |
          # Note: make sure to wrap the secret in double quotes (")
          echo "${{ secrets.GPG_KEY }}" > ./secret.gpg

      - name: SETUP TOOLS
        run: |
          DOCKER_CONFIG=${DOCKER_CONFIG:-$HOME/.docker}
          # install docker compose
          # @see https://docs.docker.com/compose/cli-command/#install-on-linux
          # @see https://github.com/docker/compose/issues/8630#issuecomment-1073166114
          mkdir -p $DOCKER_CONFIG/cli-plugins
          curl -sSL https://github.com/docker/compose/releases/download/v2.2.3/docker-compose-linux-$(uname -m) -o $DOCKER_CONFIG/cli-plugins/docker-compose
          chmod +x $DOCKER_CONFIG/cli-plugins/docker-compose

      - name: DEBUG
        run: |
          docker compose version
          docker --version
          cat /etc/*-release

      - name: SETUP DOCKER
        run: |
          make make-init ENVS="ENV=ci TAG=latest EXECUTE_IN_CONTAINER=true GPG_PASSWORD=${{ secrets.GPG_PASSWORD }}"
          make docker-build
          mkdir .build && chmod 777 .build

      - name: START DOCKER
        run: |
          make docker-up
          make gpg-init
          make secret-decrypt-with-password

      - name: QA
        run: |
          # Run the tests and qa tools but only store the error instead of failing immediately
          # @see https://stackoverflow.com/a/59200738/413531
          make qa || echo "FAILED=qa" >> $GITHUB_ENV

      - name: WAIT FOR CONTAINERS
        run: |
          # We need to wait until mysql is available.
          bash .docker/scripts/wait-for-service.sh mysql 30 1

      - name: TEST
        run: |
          make test || echo "FAILED=test $FAILED" >> $GITHUB_ENV

      - name: RUNTIMES
        run: |
          echo `expr $(date +%s) - $START_TOTAL`

      - name: EVALUATE
        run: |
          # Check if $FAILED is NOT empty
          if [ ! -z "$FAILED" ]; then echo "Failed at $FAILED" && exit 1; fi

      - name: upload build artifacts
        uses: actions/upload-artifact@v3
        with:
          name: build-artifacts
          path: ./.build

The steps are essentially the same as explained before at Run details for the local run. Some additional notes:

- I want the Action to be triggered automatically only when I push to branch part-7-ci-pipeline-docker-php-gitlab-github OR when a pull request is created (via pull_request). In addition, I want to be able to trigger the Action manually on any branch (via workflow_dispatch).

on:
  push:
    branches:
      - part-7-ci-pipeline-docker-php-gitlab-github
  pull_request: {}
  workflow_dispatch: {}

For a real project, I would let the action only run automatically on long-lived branches like main or develop. The manual trigger is helpful if you just want to test your current work without putting it up for review. CAUTION: There is a known issue that "hides" the "Trigger workflow" button to trigger the action manually.

- a new shell is started for each run: instruction, thus we must store our timer in the "global" environment by writing it to the file referenced by $GITHUB_ENV

- name: start timer
  run: |
    echo "START_TOTAL=$(date +%s)" > $GITHUB_ENV

This will be the only timer we use, because the job uses multiple steps that are timed automatically, so we don't need to take timestamps manually.

- the gpg key is configured as an encrypted secret named GPG_KEY and is stored in ./secret.gpg. The value is the content of the secret-protected.gpg.example file

- name: STORE GPG KEY
  run: |
    echo "${{ secrets.GPG_KEY }}" > ./secret.gpg

Secrets are configured in the Github repository under Settings > Secrets > Actions at

https://github.com/$user/$repository/settings/secrets/actions

e.g.

https://github.com/paslandau/docker-php-tutorial/settings/secrets/actions

- the ubuntu-latest image doesn't contain the docker compose plugin, thus we need to install it manually

- name: SETUP TOOLS
  run: |
    DOCKER_CONFIG=${DOCKER_CONFIG:-$HOME/.docker}
    mkdir -p $DOCKER_CONFIG/cli-plugins
    curl -sSL https://github.com/docker/compose/releases/download/v2.2.3/docker-compose-linux-$(uname -m) -o $DOCKER_CONFIG/cli-plugins/docker-compose
    chmod +x $DOCKER_CONFIG/cli-plugins/docker-compose

- for the make initialization we need the second secret named GPG_PASSWORD, which is configured as 12345678 in our case, see Add a password-protected secret gpg key

- name: SETUP DOCKER
  run: |
    make make-init ENVS="ENV=ci TAG=latest EXECUTE_IN_CONTAINER=true GPG_PASSWORD=${{ secrets.GPG_PASSWORD }}"

- because the runner will be shut down after the run, we need to move the build artifacts to a permanent location, using the actions/upload-artifact@v3 action

- name: upload build artifacts
  uses: actions/upload-artifact@v3
  with:
    name: build-artifacts
    path: ./.build

You can download the artifacts in the Run overview UI.
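Since writing to $GITHUB_ENV is the only way the workflow shares state between steps, it can help to simulate the mechanism locally. The following bash sketch is a rough approximation, not GitHub's implementation: the hypothetical run_step function runs each step in a new bash process with its own env file and afterwards exports every single-line KEY=VALUE entry for the following steps (multi-line values are not handled).

```bash
#!/usr/bin/env bash
# Rough, hypothetical simulation of $GITHUB_ENV (not GitHub's implementation):
# each step runs in a new bash process with its own env file; afterwards,
# every single-line KEY=VALUE entry is exported for all following steps.
run_step() {
  local script="$1"
  local env_file line status=0
  env_file=$(mktemp)
  GITHUB_ENV="$env_file" bash -c "$script" || status=$?
  while IFS= read -r line || [ -n "$line" ]; do
    # skip empty or malformed lines; multi-line values are not supported here
    [[ "$line" == ?*=* ]] || continue
    export "${line%%=*}=${line#*=}"
  done < "$env_file"
  rm -f "$env_file"
  return "$status"
}
```

For example, run_step 'false || echo "FAILED=qa" >> "$GITHUB_ENV"' followed by run_step 'false || echo "FAILED=test $FAILED" >> "$GITHUB_ENV"' leaves FAILED set to "test qa", which is what the EVALUATE step would report. A plain variable assignment inside a step, on the other hand, is lost as soon as that step's shell exits.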

Setup for Gitlab Pipelines

- Repository (branch part-7-ci-pipeline-docker-php-gitlab-github)
- CI/CD overview (Pipelines)

- Example of a successful job

- Example of a failed job

If you are completely new to Gitlab Pipelines, I recommend starting with the official Get started with GitLab CI/CD guide. In short:

- the core concept of Gitlab Pipelines is the Pipeline
  - it is defined in the yaml file .gitlab-ci.yml that lives in the root of the repository
- a Pipeline can contain multiple Stages
- each Stage consists of a series of Jobs
- each Job contains a script section
  - the script section consists of a series of shell commands

The .gitlab-ci.yml pipeline file

Gitlab Pipelines are triggered automatically based on a .gitlab-ci.yml file located at the root of the repository. It has the following content:

stages:
  - build_test

QA and Tests:
  stage: build_test
  rules:
    # automatically run for pull request and for pushes to branch "part-7-ci-pipeline-docker-php-gitlab-github"
    - if: '($CI_PIPELINE_SOURCE == "merge_request_event" || $CI_COMMIT_BRANCH == "part-7-ci-pipeline-docker-php-gitlab-github")'
  # see https://docs.gitlab.com/ee/ci/docker/using_docker_build.html#use-docker-in-docker
  image: docker:20.10.12
  services:
    - name: docker:dind
  script:
    - start_total=$(date +%s)
    ## STORE GPG KEY
    - cp $GPG_KEY_FILE ./secret.gpg
    ## SETUP TOOLS
    - start_install_tools=$(date +%s)
    # "curl" is required to download docker compose
    - apk add --no-cache make bash curl
    # install docker compose
    # @see https://docs.docker.com/compose/cli-command/#install-on-linux
    - mkdir -p ~/.docker/cli-plugins/
    - curl -sSL https://github.com/docker/compose/releases/download/v2.2.3/docker-compose-linux-x86_64 -o ~/.docker/cli-plugins/docker-compose
    - chmod +x ~/.docker/cli-plugins/docker-compose
    - end_install_tools=$(date +%s)
    ## DEBUG
    - docker version
    - docker compose version
    # show linux distro info
    - cat /etc/*-release
    ## SETUP DOCKER
    # Pass default values to the make-init command - otherwise we would have to pass those as arguments to every make call
    - make make-init ENVS="ENV=ci TAG=latest EXECUTE_IN_CONTAINER=true GPG_PASSWORD=$GPG_PASSWORD"
    - start_docker_build=$(date +%s)
    - make docker-build
    - end_docker_build=$(date +%s)
    - mkdir .build && chmod 777 .build
    ## START DOCKER
    - start_docker_up=$(date +%s)
    - make docker-up
    - end_docker_up=$(date +%s)
    - make gpg-init
    - make secret-decrypt-with-password
    ## QA
    # Run the tests and qa tools but only store the error instead of failing immediately
    # @see https://stackoverflow.com/a/59200738/413531
    - start_qa=$(date +%s)
    - make qa ENV=ci || FAILED=true
    - end_qa=$(date +%s)
    ## WAIT FOR CONTAINERS
    # We need to wait until mysql is available.
    - start_wait_for_containers=$(date +%s)
    - bash .docker/scripts/wait-for-service.sh mysql 30 1
    - end_wait_for_containers=$(date +%s)
    ## TEST
    - start_test=$(date +%s)
    - make test ENV=ci || FAILED=true
    - end_test=$(date +%s)
    - end_total=$(date +%s)
    # RUNTIMES
    - echo "Tools:" `expr $end_install_tools - $start_install_tools`
    - echo "Build docker:" `expr $end_docker_build - $start_docker_build`
    - echo "Start docker:" `expr $end_docker_up - $start_docker_up `
    - echo "QA:" `expr $end_qa - $start_qa`
    - echo "Wait for containers:" `expr $end_wait_for_containers - $start_wait_for_containers`
    - echo "Tests:" `expr $end_test - $start_test`
    - echo "Total:" `expr $end_total - $start_total`
    # EVALUATE RESULTS
    # Use if-else constructs in Gitlab pipelines
    # @see https://stackoverflow.com/a/55464100/413531
    - if [ "$FAILED" == "true" ]; then exit 1; fi
  # Save the build artifact, e.g. the JUNIT report.xml file, so we can download it later
  # @see https://docs.gitlab.com/ee/ci/pipelines/job_artifacts.html
  artifacts:
    when: always
    paths:
      # the quotes are required
      # @see https://stackoverflow.com/questions/38009869/how-to-specify-wildcard-artifacts-subdirectories-in-gitlab-ci-yml#comment101411265_38055730
      - ".build/*"
    expire_in: 1 week

The steps are essentially the same as explained before under Run details for the local run. Some additional notes:

- we start by defining the stages of the pipeline, though that's currently just one (build_test)

stages:

- build_test

- then we define the job QA and Tests and assign it to the build_test stage

QA and Tests:
  stage: build_test

- I want the Pipeline to be triggered automatically only when I push to branch part-7-ci-pipeline-docker-php-gitlab-github OR when a merge request is created. Triggering the Pipeline manually on any branch is possible by default.

rules:
  - if: '($CI_PIPELINE_SOURCE == "merge_request_event" || $CI_COMMIT_BRANCH == "part-7-ci-pipeline-docker-php-gitlab-github")'

- since we want to build and run docker images, we need to use a docker base image and activate the docker:dind service. See Use Docker to build Docker images: Use Docker-in-Docker

image: docker:20.10.12
services:
  - name: docker:dind

- we store the secret gpg key as a secret file (using the "file" type) in the CI/CD variables configuration of the Gitlab repository and move it to ./secret.gpg in order to decrypt the secrets later

## STORE GPG KEY

- cp $GPG_KEY_FILE ./secret.gpg

Secrets can be configured under Settings > CI/CD > Variables at

https://gitlab.com/$project/$repository/-/settings/ci_cd

e.g.

https://gitlab.com/docker-php-tutorial/docker-php-tutorial/-/settings/ci_cd

- the docker base image doesn't come with all required tools, thus we need to install the missing ones (make, bash, curl and docker compose)

## SETUP TOOLS

- apk add --no-cache make bash curl

- mkdir -p ~/.docker/cli-plugins/

- curl -sSL https://github.com/docker/compose/releases/download/v2.2.3/docker-compose-linux-x86_64 -o ~/.docker/cli-plugins/docker-compose

- chmod +x ~/.docker/cli-plugins/docker-compose

- for the initialization of make we use the $GPG_PASSWORD variable that we defined in the CI/CD settings

## SETUP DOCKER

- make make-init ENVS="ENV=ci TAG=latest EXECUTE_IN_CONTAINER=true GPG_PASSWORD=$GPG_PASSWORD"

Note: I have marked the variable as "masked" so it won't show up in any logs.

- finally, we store the job artifacts

artifacts:
  when: always
  paths:
    - ".build/*"
  expire_in: 1 week

They can be accessed in the Pipeline overview UI
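In contrast to the Github workflow, the .gitlab-ci.yml can keep plain shell variables like start_total across script entries, because Gitlab runs all script entries of a job in the same shell session and fails the job as soon as one entry fails. The following bash sketch approximates that behavior with a hypothetical run_gitlab_script function that joins the entries into one script and runs it with sh -e; it is a simplification, not how the Gitlab runner is implemented. It also shows why the || FAILED=true suffix is needed to keep the job going after a failing make qa.

```bash
#!/usr/bin/env bash
# Rough, hypothetical approximation of how a Gitlab job runs its script
# entries: all entries are joined into one script that is executed by a
# single shell, which stops at the first failing entry (sh -e).
run_gitlab_script() {
  local joined="" entry
  for entry in "$@"; do
    joined+="$entry"$'\n'
  done
  sh -ec "$joined"
}
```

For example, run_gitlab_script 'start_total=5' 'echo "start=$start_total"' prints start=5, whereas run_gitlab_script 'false' 'echo unreachable' stops after the first entry with a non-zero exit code.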

Performance

Performance isn't an issue right now, because the CI runs take only ~1 min (Github Actions) and ~2 min (Gitlab Pipelines), but that's mostly because we only ship a super minimal application, and those times will go up when things get more complex. For the local setup I used all 8 cores of my laptop. The time breakdown (in seconds) is roughly as follows:

| Step | Gitlab | Github | local without cache | local with cached images | local with cached images + layers |
|---|---|---|---|---|---|
| SETUP TOOLS | 1 | 0 | 0 | 0 | 0 |
| SETUP DOCKER | 33 | 17 | 39 | 39 | 5 |
| START DOCKER | 17 | 11 | 34 | 2 | 1 |
| QA | 17 | 5 | 10 | 13 | 1 |
| WAIT FOR CONTAINERS | 5 | 5 | 3 | 2 | 13 |
| TESTS | 3 | 1 | 3 | 6 | 3 |
| total (excl. runner startup) | 78 | 43 | 97 | 70 | 36 |
| total (incl. runner startup) | 139 | 54 | 97 | 70 | 36 |

Times taken from

- "CI build and test #83" Github Action run
- "Pipeline #511355192" Gitlab Pipeline run
- "local without cache" via bash .local-ci.sh with no local images at all
- "local with cached images" via bash .local-ci.sh with cached images for mysql and redis
- "local with cached images + layers" via bash .local-ci.sh with cached images for mysql and redis and a "warm" layer cache for the application image

Optimizing the performance is out of scope for this tutorial, but I'll at least document my current findings.

The caching problem on CI

A good chunk of time is usually spent on building the docker images. We did our best to optimize the process by leveraging the layer cache and using cache mounts (see section Build stage ci in the php-base image). But those steps are futile on CI systems, because the corresponding runners will start "from scratch" for every CI run - i.e. there is no local cache that they could use. In consequence, the full docker setup is also built "from scratch" on every run.

There are ways to mitigate that, e.g.

- pushing images to a container registry and pulling them before building the images to leverage the layer cache via the cache_from option of docker compose
- exporting and importing the images as tar archives via docker save and docker load, storing them either in the built-in cache of Github or Gitlab - see also the satackey/action-docker-layer-caching@v0.0.11 Github Action and the official actions/cache@v3 Github Action
- using the --cache-from and --cache-to options of buildx

But: None of that worked for me out-of-the-box :( We will take a closer look in an upcoming tutorial. Some reading material that I found valuable so far:

- Caching Docker builds in GitHub Actions: Which approach is the fastest? 🤔 A research.

- Caching strategies for CI systems

- Build images on GitHub Actions with Docker layer caching

- Faster CI Builds with Docker Layer Caching and BuildKit

- Image rebase and improved remote cache support in new BuildKit

Docker changes

As a first step we need to decide which containers are required and how to provide the codebase.

Since our goal is running the qa tools and tests, we only need the application php container. The tests also need a database and a queue, i.e. the mysql and redis containers are required as well - whereas nginx, php-fpm and php-worker are not required. We'll handle that through dedicated docker compose configuration files that only contain the necessary services. This is explained in more detail in section Compose file updates.

In our local setup, we have shared the codebase between the host system and docker - mainly because we wanted our changes to be reflected immediately in docker.

This isn't necessary for the CI use case. In fact we want our CI images as close as possible to our production images - and those should "contain everything to run independently". I.e. the codebase should live in the image - not on the host system. This will be explained in section Use the whole codebase as build context.

Compose file updates

We will not only have some differences between the CI docker setup and the local docker setup (=different containers), but also in the configuration of the individual services. To accommodate that, we will use the following docker compose config files in the .docker/docker-compose/ directory:

- docker-compose.local.ci.yml: holds configuration that is valid for local and ci, trying to keep the config files DRY
- docker-compose.ci.yml: holds configuration that is only valid for ci
- docker-compose.local.yml: holds configuration that is only valid for local

When using docker compose, we then need to make sure to include only the required files, e.g. for ci:

```shell
docker compose -f docker-compose.local.ci.yml -f docker-compose.ci.yml
```

I'll explain the logic for that later in section ENV based docker compose config. In short:

docker-compose.local.yml

When comparing ci with local, for ci

- we don't need to share the codebase with the host system

```yaml
application:
  volumes:
    - ${APP_CODE_PATH_HOST?}:${APP_CODE_PATH_CONTAINER?}
```

- we don't need persistent volumes for the redis and mysql data

```yaml
mysql:
  volumes:
    - mysql:/var/lib/mysql

redis:
  volumes:
    - redis:/data
```

- we don't need to share ports with the host system

```yaml
application:
  ports:
    - "${APPLICATION_SSH_HOST_PORT:-2222}:22"

redis:
  ports:
    - "${REDIS_HOST_PORT:-6379}:6379"
```

- we don't need any settings for local dev tools like xdebug or strace

```yaml
application:
  environment:
    - PHP_IDE_CONFIG=${PHP_IDE_CONFIG?}
  cap_add:
    - "SYS_PTRACE"
  security_opt:
    - "seccomp=unconfined"
  extra_hosts:
    - host.docker.internal:host-gateway
```

So all of those config values will only live in the docker-compose.local.yml file.

docker-compose.ci.yml

In fact, there are only two things that ci needs that local doesn't:

- a bind mount to share only the secret gpg key from the host with the application container

```yaml
application:
  volumes:
    - ${APP_CODE_PATH_HOST?}/secret.gpg:${APP_CODE_PATH_CONTAINER?}/secret.gpg:ro
```

This is required to decrypt the secrets:

> [...] the private key has to be named secret.gpg and put in the root of the codebase, so that the import can be simplified with make targets

The secret files themselves are baked into the image, but the key to decrypt them is only provided at runtime.

- a bind mount to share a .build folder for build artifacts with the application container

```yaml
application:
  volumes:
    - ${APP_CODE_PATH_HOST?}/.build:${APP_CODE_PATH_CONTAINER?}/.build
```

This will be used to collect any files we want to retain from a build (e.g. code coverage information, log files, etc.)

Adding a health check for mysql

When running the tests for the first time on a CI system, I noticed some weird errors related to the database:

```text
1) Tests\Feature\App\Http\Controllers\HomeControllerTest::test___invoke with data set "default" (array(), ' <li><a href="?dispatch=fo...></li>')
PDOException: SQLSTATE[HY000] [2002] Connection refused
```

As it turned out, the mysql container itself was up and running - but the mysql process within the container was not yet ready to accept connections. Locally, this hasn't been a problem, because we usually would not run the tests "immediately" after starting the containers - but on CI this is the case.

Fortunately, docker compose has us covered here and provides a healthcheck configuration option:

> healthcheck declares a check that’s run to determine whether or not containers for this service are "healthy".

Since this healthcheck is also "valid" for local, I defined it in the combined docker-compose.local.ci.yml file:

```yaml
mysql:
  healthcheck:
    # Only mark the service as healthy if mysql is ready to accept connections
    # Check every 2 seconds for 30 times, each check has a timeout of 1s
    test: mysqladmin ping -h 127.0.0.1 -u $$MYSQL_USER --password=$$MYSQL_PASSWORD
    timeout: 1s
    retries: 30
    interval: 2s
```

The script in test was taken from SO: Docker-compose check if mysql connection is ready.

When starting the docker setup, docker ps will now show the health status in the STATUS column:

```text
$ make docker-up
$ docker ps
CONTAINER ID   IMAGE                             STATUS                            NAMES
b509eb2f99c0   dofroscra/application-ci:latest   Up 1 seconds                      dofroscra_ci-application-1
503e52fd9e68   mysql:8.0.28                      Up 1 seconds (health: starting)   dofroscra_ci-mysql-1

# a couple of seconds later
$ docker ps
CONTAINER ID   IMAGE                             STATUS                    NAMES
b509eb2f99c0   dofroscra/application-ci:latest   Up 13 seconds             dofroscra_ci-application-1
503e52fd9e68   mysql:8.0.28                      Up 13 seconds (healthy)   dofroscra_ci-mysql-1
```

Note the (health: starting) and (healthy) info for the mysql service.

We can also get this info from docker inspect (used by our wait-for-service.sh script) via:

```text
$ docker inspect --format "{{json .State.Health.Status }}" dofroscra_ci-mysql-1
"healthy"
```

FYI: We could also use the depends_on property with a condition: service_healthy on the application container so that docker compose would only start the container once the mysql service is healthy:

```yaml
application:
  depends_on:
    mysql:
      condition: service_healthy
```

However, this would "block" the make docker-up until mysql is actually up and running. In our case this is not desirable, because we can do "other stuff" in the meantime (namely: run the qa checks, because they don't require a database) and thus save a couple of seconds on each CI run.
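Since make docker-up deliberately does not block, something has to wait for mysql before the tests run. The tutorial's own wait-for-service.sh script is not shown in this part, so the following is only a minimal, hypothetical sketch of how the docker inspect call shown above could be used for that. The function name wait_for_healthy and its defaults (30 attempts, 1 second apart) are assumptions, not taken from the repository.

```shell
# Hypothetical helper (NOT the tutorial's wait-for-service.sh):
# poll the health status of a container until it is "healthy"
# or the maximum number of attempts is used up.
# Usage: wait_for_healthy <container> [max_tries] [interval_seconds]
wait_for_healthy() {
  container="$1"
  max_tries="${2:-30}"
  interval="${3:-1}"
  status=""
  i=1
  while [ "$i" -le "$max_tries" ]; do
    # Without the json function, the template prints the plain string,
    # e.g. healthy, starting or unhealthy
    status="$(docker inspect --format '{{.State.Health.Status}}' "$container" 2>/dev/null)"
    if [ "$status" = "healthy" ]; then
      echo "$container is healthy"
      return 0
    fi
    sleep "$interval"
    i=$((i + 1))
  done
  echo "$container did not become healthy after $max_tries attempts (last status: ${status:-unknown})" >&2
  return 1
}
```

In the CI setup, a call like wait_for_healthy dofroscra_ci-mysql-1 30 2 right before running the tests would wait about as long as the healthcheck itself is configured to retry, while the qa checks can still run before that.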

Build target: ci

We've already introduced build targets in Environments and build targets and how to "choose" them through make with the ENV variable defined in a shared .make/.env file. Short recap:

- create a .make/.env file via make make-init that contains the ENV, e.g.

```text
ENV=ci
```

- the .make/.env file is included in the main Makefile, making the ENV variable available to make

- configure a $DOCKER_COMPOSE variable that passes the ENV as an environment variable, i.e. via

```text
ENV=$(ENV) docker-compose
```

- use the ENV variable in the docker compose configuration file to determine the build.target property. E.g. in .docker/docker-compose/docker-compose-php-base.yml

```yaml
php-base:
  build:
    target: ${ENV?}
```

- in the Dockerfile of a service, define the ENV as a build stage. E.g. in .docker/images/php/base/Dockerfile

```dockerfile
FROM base as ci
# ...
```

So to enable the new ci environment, we need to modify the Dockerfiles for the php-base and the application image.

Build stage ci in the php-base image

Use the whole codebase as build context

As mentioned in section Docker changes we want to "bake" the codebase into the ci image of the php-base container.

Thus, we must change the context property in .docker/docker-compose/docker-compose-php-base.yml to use the whole codebase instead of only the .docker/ directory. I.e. "don't use ../ but ../../":

```yaml
# File: .docker/docker-compose/docker-compose-php-base.yml
php-base:
  build:
    # pass the full codebase to docker for building the image
    context: ../../
```

Build the dependencies

The composer dependencies must be set up in the image as well, so we introduce a new stage in .docker/images/php/base/Dockerfile. The most trivial solution would look like this:

- copy the whole codebase

- run composer install

```dockerfile
FROM base as ci
COPY . /codebase
# run composer in the copied codebase (the WORKDIR of the base image points elsewhere)
RUN cd /codebase && composer install --no-scripts --no-plugins --no-progress -o
```

However, this approach has some downsides:

- if any file in the codebase changes, the COPY . /codebase layer will be invalidated. I.e. docker cannot use the layer cache for it, which also means that none of the subsequent layers can use the cache either. In consequence, composer install would run every time, even when the composer.json file doesn't change.

- composer itself uses a cache for storing dependencies locally, so it doesn't have to download dependencies that haven't changed. But since we run composer install in Docker, this cache would be "thrown away" every time a build finishes. To mitigate that, we can use --mount=type=cache to define a directory that docker will re-use between builds:

> Contents of the cache directories persists between builder invocations without invalidating the instruction cache.

Keeping those points in mind, we end up with the following instructions:

```dockerfile
# File: .docker/images/php/base/Dockerfile
# ...
FROM base as ci

# By only copying the composer files required to run composer install
# the layer will be cached and only invalidated when the composer dependencies are changed
COPY ./composer.json /dependencies/
COPY ./composer.lock /dependencies/

# use a cache mount to cache the composer dependencies
# this is essentially a cache that lives in Docker BuildKit (i.e. has nothing to do with the host system)
RUN --mount=type=cache,target=/tmp/.composer \
    cd /dependencies && \
    # COMPOSER_HOME=/tmp/.composer sets the home directory of composer that
    # also controls where composer looks for the cache
    # so we don't have to download dependencies again (if they are cached)
    COMPOSER_HOME=/tmp/.composer composer install --no-scripts --no-plugins --no-progress -o

# copy the full codebase
COPY . /codebase

RUN mv /dependencies/vendor /codebase/vendor && \
    cd /codebase && \
    # remove files we don't require in the image to keep the image size small
    rm -rf .docker/ && \
    # we need a git repository for git-secret to work (can be an empty one)
    git init
```

FYI: The COPY . /codebase step doesn't actually copy "everything in the repository", because we have also introduced a .dockerignore file to exclude some files from being included in the build context - see section .dockerignore.

Some notes on the final RUN step:

- rm -rf .docker/ doesn't really save "that much" in the current setup. Please take it more as an example of how to remove any files that shouldn't end up in the final image (e.g. "tests in a production image")

- the git init part is required because we need to decrypt the secrets later - and git-secret requires a git repository (which can be empty). We can't decrypt the secrets during the build, because we do not want decrypted secret files to end up in the image.

When tested locally, the difference between the trivial solution and the one that makes use of layer caching is ~35 seconds, see the results in the Performance section.

Create the final image

As a final step, we will rename the current stage to codebase and copy the "build artifact" from that stage into our final ci build stage:

```dockerfile
FROM base as codebase
# build the composer dependencies and clean up the copied files
# ...

FROM base as ci
COPY --from=codebase --chown=$APP_USER_NAME:$APP_GROUP_NAME /codebase $APP_CODE_PATH
```

Why are we not just using the previous stage directly as ci?

Because using multi-stage builds is a good practice to keep the number of layers in the final image to a minimum: Everything that "happened" in the previous codebase stage will be "forgotten", i.e. not exported as layers.

That does not only save us some layers, but also allows us to get rid of files like the .docker/ directory. We needed that directory in the build context because some files were required in other parts of the Dockerfile (e.g. the php ini files), so we can't exclude it via .dockerignore. But we can remove it in the codebase stage, so it will NOT be copied over and thus not end up in the final image. If we didn't have the codebase stage, the folder would be part of the layer created when COPYing all the files from the build context, and removing it via rm -rf .docker/ would have no effect on the image size.

Currently, that doesn't really matter, because the build step is super simple (just a composer install), but in a growing and more complex codebase you can easily save a couple of MB.

To be concrete, the multistage build has 31 layers and the final layer containing the codebase has a size of 65.1MB.

```text
$ docker image history -H dofroscra/application-ci
IMAGE CREATED CREATED BY SIZE COMMENT
d778c2ee8d5e 17 minutes ago COPY /codebase /var/www/app # buildkit 65.1MB buildkit.dockerfile.v0
^^^^^^
<missing> 17 minutes ago WORKDIR /var/www/app 0B buildkit.dockerfile.v0
<missing> 17 minutes ago COPY /usr/bin/composer /usr/local/bin/compos… 2.36MB buildkit.dockerfile.v0
<missing> 17 minutes ago COPY ./.docker/images/php/base/.bashrc /root… 395B buildkit.dockerfile.v0
<missing> 17 minutes ago COPY ./.docker/images/php/base/.bashrc /home… 395B buildkit.dockerfile.v0
<missing> 17 minutes ago COPY ./.docker/images/php/base/conf.d/zz-app… 196B buildkit.dockerfile.v0
<missing> 17 minutes ago COPY ./.docker/images/php/base/conf.d/zz-app… 378B buildkit.dockerfile.v0
<missing> 17 minutes ago RUN |8 APP_USER_ID=10000 APP_GROUP_ID=10001 … 1.28kB buildkit.dockerfile.v0
<missing> 17 minutes ago RUN |8 APP_USER_ID=10000 APP_GROUP_ID=10001 … 41MB buildkit.dockerfile.v0
<missing> 18 minutes ago ADD https://php.hernandev.com/key/php-alpine… 451B buildkit.dockerfile.v0
<missing> 18 minutes ago RUN |8 APP_USER_ID=10000 APP_GROUP_ID=10001 … 62.1MB buildkit.dockerfile.v0
<missing> 18 minutes ago ADD https://gitsecret.jfrog.io/artifactory/a… 450B buildkit.dockerfile.v0
<missing> 18 minutes ago RUN |8 APP_USER_ID=10000 APP_GROUP_ID=10001 … 4.74kB buildkit.dockerfile.v0
<missing> 18 minutes ago ENV ENV=ci 0B buildkit.dockerfile.v0
<missing> 18 minutes ago ENV ALPINE_VERSION=3.15 0B buildkit.dockerfile.v0
<missing> 18 minutes ago ENV TARGET_PHP_VERSION=8.1 0B buildkit.dockerfile.v0
<missing> 18 minutes ago ENV APP_CODE_PATH=/var/www/app 0B buildkit.dockerfile.v0
<missing> 18 minutes ago ENV APP_GROUP_NAME=application 0B buildkit.dockerfile.v0
<missing> 18 minutes ago ENV APP_USER_NAME=application 0B buildkit.dockerfile.v0
<missing> 18 minutes ago ENV APP_GROUP_ID=10001 0B buildkit.dockerfile.v0
<missing> 18 minutes ago ENV APP_USER_ID=10000 0B buildkit.dockerfile.v0
<missing> 18 minutes ago ARG ENV 0B buildkit.dockerfile.v0
<missing> 18 minutes ago ARG ALPINE_VERSION 0B buildkit.dockerfile.v0
<missing> 18 minutes ago ARG TARGET_PHP_VERSION 0B buildkit.dockerfile.v0
<missing> 18 minutes ago ARG APP_CODE_PATH 0B buildkit.dockerfile.v0
<missing> 18 minutes ago ARG APP_GROUP_NAME 0B buildkit.dockerfile.v0
<missing> 18 minutes ago ARG APP_USER_NAME 0B buildkit.dockerfile.v0
<missing> 18 minutes ago ARG APP_GROUP_ID 0B buildkit.dockerfile.v0
<missing> 18 minutes ago ARG APP_USER_ID 0B buildkit.dockerfile.v0
<missing> 2 days ago /bin/sh -c #(nop) CMD ["/bin/sh"] 0B
<missing> 2 days ago /bin/sh -c #(nop) ADD file:5d673d25da3a14ce1… 5.57MB
```

The non-multistage build has 32 layers and the final layer(s) containing the codebase have a combined size of 65.15MB (60.3MB + 4.85MB).

```text
$ docker image history -H dofroscra/application-ci
IMAGE CREATED CREATED BY SIZE COMMENT
94ba50438c9a 2 minutes ago RUN /bin/sh -c COMPOSER_HOME=/tmp/.composer … 60.3MB buildkit.dockerfile.v0
<missing> 2 minutes ago COPY . /var/www/app # buildkit 4.85MB buildkit.dockerfile.v0
^^^^^^
<missing> 31 minutes ago WORKDIR /var/www/app 0B buildkit.dockerfile.v0
<missing> 31 minutes ago COPY /usr/bin/composer /usr/local/bin/compos… 2.36MB buildkit.dockerfile.v0
<missing> 31 minutes ago COPY ./.docker/images/php/base/.bashrc /root… 395B buildkit.dockerfile.v0
<missing> 31 minutes ago COPY ./.docker/images/php/base/.bashrc /home… 395B buildkit.dockerfile.v0
<missing> 31 minutes ago COPY ./.docker/images/php/base/conf.d/zz-app… 196B buildkit.dockerfile.v0
<missing> 31 minutes ago COPY ./.docker/images/php/base/conf.d/zz-app… 378B buildkit.dockerfile.v0
<missing> 31 minutes ago RUN |8 APP_USER_ID=10000 APP_GROUP_ID=10001 … 1.28kB buildkit.dockerfile.v0
<missing> 31 minutes ago RUN |8 APP_USER_ID=10000 APP_GROUP_ID=10001 … 41MB buildkit.dockerfile.v0
<missing> 31 minutes ago ADD https://php.hernandev.com/key/php-alpine… 451B buildkit.dockerfile.v0
<missing> 31 minutes ago RUN |8 APP_USER_ID=10000 APP_GROUP_ID=10001 … 62.1MB buildkit.dockerfile.v0
<missing> 31 minutes ago ADD https://gitsecret.jfrog.io/artifactory/a… 450B buildkit.dockerfile.v0
<missing> 31 minutes ago RUN |8 APP_USER_ID=10000 APP_GROUP_ID=10001 … 4.74kB buildkit.dockerfile.v0
<missing> 31 minutes ago ENV ENV=ci 0B buildkit.dockerfile.v0
<missing> 31 minutes ago ENV ALPINE_VERSION=3.15 0B buildkit.dockerfile.v0
<missing> 31 minutes ago ENV TARGET_PHP_VERSION=8.1 0B buildkit.dockerfile.v0
<missing> 31 minutes ago ENV APP_CODE_PATH=/var/www/app 0B buildkit.dockerfile.v0
<missing> 31 minutes ago ENV APP_GROUP_NAME=application 0B buildkit.dockerfile.v0
<missing> 31 minutes ago ENV APP_USER_NAME=application 0B buildkit.dockerfile.v0
<missing> 31 minutes ago ENV APP_GROUP_ID=10001 0B buildkit.dockerfile.v0
<missing> 31 minutes ago ENV APP_USER_ID=10000 0B buildkit.dockerfile.v0
<missing> 31 minutes ago ARG ENV 0B buildkit.dockerfile.v0
<missing> 31 minutes ago ARG ALPINE_VERSION 0B buildkit.dockerfile.v0
<missing> 31 minutes ago ARG TARGET_PHP_VERSION 0B buildkit.dockerfile.v0
<missing> 31 minutes ago ARG APP_CODE_PATH 0B buildkit.dockerfile.v0
<missing> 31 minutes ago ARG APP_GROUP_NAME 0B buildkit.dockerfile.v0
<missing> 31 minutes ago ARG APP_USER_NAME 0B buildkit.dockerfile.v0
<missing> 31 minutes ago ARG APP_GROUP_ID 0B buildkit.dockerfile.v0
<missing> 31 minutes ago ARG APP_USER_ID 0B buildkit.dockerfile.v0
<missing> 2 days ago /bin/sh -c #(nop) CMD ["/bin/sh"] 0B
<missing> 2 days ago /bin/sh -c #(nop) ADD file:5d673d25da3a14ce1… 5.57MB
```

Again: It is expected that the differences aren't big, because the only size savings come from the .docker/ directory with a size of ~70KB:

```text
$ du -hd 0 .docker
73K .docker
```

Finally, we are also using the --chown option of the COPY instruction to ensure that the copied files are owned by the application user and group.

Build stage ci in the application image

There is actually "nothing" to be done here. We don't need SSH any longer because it is only required for the SSH Configuration of PhpStorm. So the build stage is simply "empty":

```dockerfile
ARG BASE_IMAGE
FROM ${BASE_IMAGE} as base

FROM base as ci

FROM base as local
# ...
```

Though there is one thing to keep in mind: In the local image we used sshd as the entrypoint, i.e. we had a long-running process that would keep the container running. To keep the ci application container running, we must

- start it via the -d flag of docker compose (already done in the make docker-up target)

```makefile
.PHONY: docker-up
docker-up: validate-docker-variables
	$(DOCKER_COMPOSE) up -d $(DOCKER_SERVICE_NAME)
```

- allocate a tty via tty: true in the docker-compose.local.ci.yml file

```yaml
application:
  tty: true
```

.dockerignore

The .dockerignore file is located in the root of the repository and ensures that certain files are kept out of the Docker build context. This will

- speed up the build (because fewer files need to be transmitted to the docker daemon)

- keep images smaller (because irrelevant files are kept out of the image)

The syntax is quite similar to the .gitignore file. In fact, I've found that the contents of the .gitignore file are quite often a subset of the .dockerignore file. This makes sense, because you typically wouldn't want files that are excluded from the repository to end up in a docker image (e.g. unencrypted secret files). This has also been noticed by others, see e.g.

- Reddit: Any way to copy .gitignore contents to .dockerignore

- SO: Should .dockerignore typically be a superset of .gitignore?

but to my knowledge there is currently (2022-04-24) no way to "keep the two files in sync".

CAUTION: The behavior of the two files is NOT identical! The documentation says

> Matching is done using Go’s filepath.Match rules. A preprocessing step removes leading and trailing whitespace and eliminates . and .. elements using Go’s filepath.Clean. Lines that are blank after preprocessing are ignored.
>
> Beyond Go’s filepath.Match rules, Docker also supports a special wildcard string `**` that matches any number of directories (including zero). For example, `**/*.go` will exclude all files that end with .go that are found in all directories, including the root of the build context.
>
> Lines starting with ! (exclamation mark) can be used to make exceptions to exclusions.

Please note the part regarding `**/*.go`: In .gitignore it would be sufficient to write `*.go` to match any file ending in .go, regardless of the directory. In .dockerignore you must specify it as `**/*.go`!

In our case, the content of the .dockerignore file looks like this:

```text
# gitignore
!.env.example
**/*.env
.idea
.phpunit.result.cache
vendor/
secret.gpg
.gitsecret/keys/random_seed
.gitsecret/keys/pubring.kbx~
!*.secret
passwords.txt
.build

# additionally ignored files
.git
```
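Since there is no built-in way to keep both files in sync, a small check could at least flag drift, e.g. as an extra qa step. The following is a naive, hypothetical sketch (the function name missing_dockerignore_entries is made up): it prints every non-comment .gitignore line that appears in .dockerignore neither verbatim nor with a leading `**/` (the rewrite needed for patterns like `*.go` above). It does not evaluate the matching rules of either format, so treat its output as hints, not proof.

```shell
# Hypothetical drift check between .gitignore and .dockerignore.
# Prints the .gitignore entries without a counterpart and returns 1 if there are any.
# Usage: missing_dockerignore_entries [gitignore_file] [dockerignore_file]
missing_dockerignore_entries() {
  gitignore="${1:-.gitignore}"
  dockerignore="${2:-.dockerignore}"
  status=0
  while IFS= read -r line || [ -n "$line" ]; do
    # Skip blank lines and comments
    case "$line" in ''|'#'*) continue ;; esac
    # Accept the entry verbatim or rewritten with a leading **/ (fixed-string, whole-line match)
    if ! grep -Fxq -e "$line" -e "**/$line" "$dockerignore"; then
      printf '%s\n' "$line"
      status=1
    fi
  done < "$gitignore"
  return "$status"
}
```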


## Makefile changes


### Initialize the shared variables

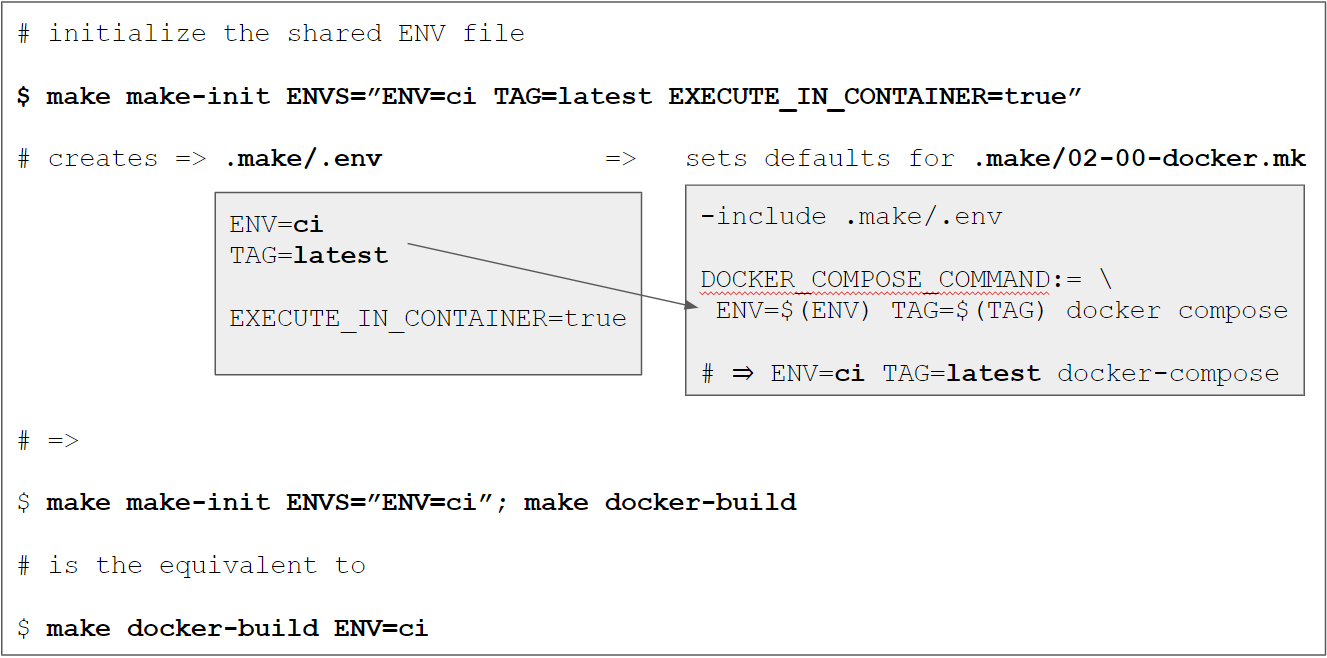

We have introduced the concept of [shared variables via `.make/.env`](https://www.pascallandau.com/blog/docker-from-scratch-for-php-applications-in-2022/#shared-variables-make-env) previously. It allows us to **define variables in one place** (=single source of truth) that are then used as "defaults" so we **don't have to define them explicitly** when invoking certain `make` targets (like `make docker-build`). We'll make use of this concept by setting the environment to `ci` via `ENV=ci` and thus making sure that all docker commands use `ci` "automatically" as well.


In addition, I made a small modification by **introducing a second file at `.make/variables.env`** that is also included in the main `Makefile` and **holds the "default" shared variables**. Those are neither "secret" nor are they likely to be changed for environment adjustments. The file is NOT ignored by `.gitignore` and is basically just the previous `.make/.env.example` file without the environment-specific variables:

```text
# File .make/variables.env
DOCKER_REGISTRY=docker.io
DOCKER_NAMESPACE=dofroscra
APP_USER_NAME=application
APP_GROUP_NAME=application
```

The .make/.env file is still .gitignored and can be initialized with the make-init target using the ENVS variable:

```shell
make make-init ENVS="ENV=ci SOME_OTHER_DEFAULT_VARIABLE=foo"
```

which would create a .make/.env file with the content

```text
ENV=ci
SOME_OTHER_DEFAULT_VARIABLE=foo
```

If necessary, we could also override variables defined in the .make/variables.env file, because the .make/.env is included last in the Makefile:

```makefile
# File: Makefile
# ...

# include the default variables
include .make/variables.env

# include the local variables
-include .make/.env
```

The default value for ENVS is ENV=local TAG=latest to retain the same default behavior as before when ENVS is omitted. The corresponding make-init target is defined in the main Makefile and now looks like this:

```makefile
ENVS?=ENV=local TAG=latest

.PHONY: make-init
make-init: ## Initializes the local .make/.env file with ENV variables for make. Use via ENVS="KEY_1=value1 KEY_2=value2"
	@$(if $(ENVS),,$(error ENVS is undefined))
	@rm -f .make/.env
	for variable in $(ENVS); do \
	  echo $$variable | tee -a .make/.env > /dev/null 2>&1; \
	done
	@echo "Created a local .make/.env file"
```
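Stripped of the make syntax, the recipe is just a loop over the whitespace-separated ENVS value. The following hypothetical write_make_env function (its name and the optional second parameter are made up for illustration) does the same in plain shell and makes it easy to see that every KEY=value pair ends up on its own line of .make/.env.

```shell
# Hypothetical plain-shell equivalent of the make-init recipe
# Usage: write_make_env "KEY_1=value1 KEY_2=value2" [target_file]
write_make_env() {
  envs="$1"
  target="${2:-.make/.env}"
  # Same guard as $(if $(ENVS),,$(error ENVS is undefined))
  if [ -z "$envs" ]; then
    echo "ENVS is undefined" >&2
    return 1
  fi
  # Start from scratch, like @rm -f .make/.env
  rm -f "$target"
  # $envs is intentionally unquoted so that it is split on whitespace
  for variable in $envs; do
    printf '%s\n' "$variable" >> "$target"
  done
  echo "Created a local $target file"
}
```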

ENV based docker compose config

As mentioned in section Compose file updates we need to select the "correct" docker compose configuration files based on the ENV value. This is done in .make/02-00-docker.mk:

```makefile
# File .make/02-00-docker.mk
# ...
DOCKER_COMPOSE_DIR:=...
DOCKER_COMPOSE_COMMAND:=...

DOCKER_COMPOSE_FILE_LOCAL_CI:=$(DOCKER_COMPOSE_DIR)/docker-compose.local.ci.yml
DOCKER_COMPOSE_FILE_CI:=$(DOCKER_COMPOSE_DIR)/docker-compose.ci.yml
DOCKER_COMPOSE_FILE_LOCAL:=$(DOCKER_COMPOSE_DIR)/docker-compose.local.yml

# we need to "assemble" the correct combination of docker-compose.yml config files
ifeq ($(ENV),ci)
    DOCKER_COMPOSE_FILES:=-f $(DOCKER_COMPOSE_FILE_LOCAL_CI) -f $(DOCKER_COMPOSE_FILE_CI)
else ifeq ($(ENV),local)
    DOCKER_COMPOSE_FILES:=-f $(DOCKER_COMPOSE_FILE_LOCAL_CI) -f $(DOCKER_COMPOSE_FILE_LOCAL)
endif

DOCKER_COMPOSE:=$(DOCKER_COMPOSE_COMMAND) $(DOCKER_COMPOSE_FILES)
```

When we now take a look at a full recipe when using ENV=ci with a docker target (e.g. docker-up), we can see that the correct files are chosen, e.g.

```text
$ make docker-up ENV=ci -n
ENV=ci TAG=latest DOCKER_REGISTRY=docker.io DOCKER_NAMESPACE=dofroscra APP_USER_NAME=application APP_GROUP_NAME=application docker compose -p dofroscra_ci --env-file ./.docker/.env -f ./.docker/docker-compose/docker-compose.local.ci.yml -f ./.docker/docker-compose/docker-compose.ci.yml up -d
# =>
# -f ./.docker/docker-compose/docker-compose.local.ci.yml
# -f ./.docker/docker-compose/docker-compose.ci.yml
```
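To reason about the file selection outside of make, the ifeq/else ifeq block can be mirrored in plain shell. This is a hypothetical sketch: the function name compose_files_for_env is made up, and the default directory is taken from the make -n output above. There is one deliberate difference: in the Makefile, an ENV other than ci or local simply leaves DOCKER_COMPOSE_FILES empty, whereas this sketch fails loudly.

```shell
# Hypothetical shell mirror of the ENV-based selection in .make/02-00-docker.mk
# Usage: compose_files_for_env <ci|local> [compose_dir]
compose_files_for_env() {
  env="$1"
  dir="${2:-./.docker/docker-compose}"
  case "$env" in
    ci)
      printf '%s\n' "-f $dir/docker-compose.local.ci.yml -f $dir/docker-compose.ci.yml" ;;
    local)
      printf '%s\n' "-f $dir/docker-compose.local.ci.yml -f $dir/docker-compose.local.yml" ;;
    *)
      # The Makefile would silently add no -f flags here; fail instead
      echo "unsupported ENV '$env' (expected 'ci' or 'local')" >&2
      return 1 ;;
  esac
}
```

The output is meant to be word-split into separate arguments of docker compose, just like $(DOCKER_COMPOSE_FILES) is in the Makefile.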

Codebase changes

Add a test for encrypted files

We've introduced git-secret in the previous tutorial Use git-secret to encrypt secrets in the repository and used it to store the file passwords.txt encrypted in the codebase. To make sure that the decryption works as expected on the CI systems, I've added a test at tests/Feature/EncryptionTest.php to check if the file exists and if the content is correct.

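```php
<?php

namespace Tests\Feature;

// assumption: a plain PHPUnit TestCase suffices for this test;
// the project may use its own base TestCase instead
use PHPUnit\Framework\TestCase;

class EncryptionTest extends TestCase
{
    public function test_ensure_that_the_secret_passwords_file_was_decrypted()
    {
        $pathToSecretFile = __DIR__."/../../passwords.txt";

        $this->assertFileExists($pathToSecretFile);

        $expected = "my_secret_password\n";
        $actual = file_get_contents($pathToSecretFile);

        $this->assertEquals($expected, $actual);
    }
}
```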

Of course this doesn't make sense in a "real world scenario", because the secret value would now be exposed in a test - but it suffices for now as proof of a working secret decryption.

Add a password-protected secret gpg key

I've mentioned in Scenario: Decrypt file that it is also possible to use a password-protected secret gpg key for an additional layer of security. I have created such a key and stored it in the repository at secret-protected.gpg.example (in a "real world scenario" I wouldn't do that - but since this is a public tutorial I want you to be able to follow along completely). The password for that key is 12345678.

The corresponding public key is located at .dev/gpg-keys/alice-protected-public.gpg and belongs to the email address alice.protected@example.com. I've added this email address and re-encrypted the secrets afterwards via

```shell
make gpg-init
make secret-add-user EMAIL="alice.protected@example.com"
make secret-encrypt
```

When I now import the secret-protected.gpg.example key, I can decrypt the secrets. However, I cannot use the usual secret-decrypt target but must use secret-decrypt-with-password instead:

```shell
make secret-decrypt-with-password GPG_PASSWORD=12345678
```

or store the GPG_PASSWORD in the .make/.env file when it is initialized for CI

```shell
make make-init ENVS="ENV=ci TAG=latest EXECUTE_IN_CONTAINER=true GPG_PASSWORD=12345678"
make secret-decrypt-with-password
```

Create a JUnit report from PhpUnit

In the .make/01-02-application-qa.mk file, I've added the --log-junit option to the phpunit arguments of the test make target in order to create an XML report in the .build/ directory:

```makefile
# File: .make/01-02-application-qa.mk
# ...
PHPUNIT_CMD=php vendor/bin/phpunit
PHPUNIT_ARGS= -c phpunit.xml --log-junit .build/report.xml
```

I.e. each run of the tests will now create a JUnit XML report at .build/report.xml. The file is used as an example of a build artifact, i.e. "something that we would like to keep" from a CI run.
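To surface the result directly in the job log, the report can be summarized with a few lines of shell. This is a rough, hypothetical sketch (junit_summary and junit_attr are made-up names): it reads the tests, failures and errors attributes of the first `<testsuite>` element with grep and sed instead of a real XML parser, so it assumes that each `<testsuite ...>` opening tag sits on a single line.

```shell
# Hypothetical helper: print the value of attribute $2 from the XML tag in $1
junit_attr() {
  printf '%s\n' "$1" | sed -n "s/.* $2=\"\([^\"]*\)\".*/\1/p"
}

# Hypothetical summary of a JUnit report; returns non-zero on failures or errors
# Usage: junit_summary [report_file]
junit_summary() {
  report="${1:-.build/report.xml}"
  if [ ! -f "$report" ]; then
    echo "report not found: $report" >&2
    return 1
  fi
  # The first <testsuite ...> opening tag is the top-level suite that aggregates all tests
  suite="$(grep -o '<testsuite [^>]*>' "$report" | head -n 1)"
  if [ -z "$suite" ]; then
    echo "no <testsuite> element found in $report" >&2
    return 1
  fi
  failures="$(junit_attr "$suite" failures)"
  errors="$(junit_attr "$suite" errors)"
  echo "tests=$(junit_attr "$suite" tests) failures=$failures errors=$errors"
  [ "${failures:-0}" = "0" ] && [ "${errors:-0}" = "0" ]
}
```

Because of its exit code, such a helper could also act as an explicit check after the test run, independent of how phpunit itself exits.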

Wrapping up

Congratulations, you made it! If some things are not completely clear by now, don't hesitate to leave a comment. You should now have a working CI pipeline for Github (via Github Actions) and/or Gitlab (via Gitlab pipelines) that runs automatically on each push.

In the next part of this tutorial, we will create a VM on GCP and provision it to run dockerized applications.

Please subscribe to the RSS feed or via email to get automatic notifications when this next part comes out :)
