Caching improves the performance and responsiveness of web applications by avoiding repeated fetches of the same data or resources. The two most common approaches are client-side caching and server-side caching:

Client-Side Caching: Client-side caching stores responses or assets on the user’s device, typically in the web browser’s cache. Subsequent requests for the same resources can then be served from the cache, often without another round trip to the server. Here’s a deeper dive into client-side caching:

HTTP Cache-Control: Client-side caching is governed by HTTP headers such as Cache-Control and Expires, which specify how long a resource may be cached and when it still counts as fresh. When a response carries both, the max-age directive of Cache-Control takes precedence over Expires.
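To make the freshness rule concrete, here is a minimal Python sketch of how a cache could read a Cache-Control value and decide whether a stored response is still fresh. The function names (cache_control_header, parse_max_age, is_fresh) are made up for this illustration, and the logic only looks at max-age; a real browser cache also handles directives such as no-store and no-cache, plus revalidation.

```python
from datetime import datetime, timedelta
from typing import Optional


def cache_control_header(max_age_seconds: int, public: bool = True) -> str:
    """Build a Cache-Control value such as 'public, max-age=3600'."""
    scope = "public" if public else "private"
    return f"{scope}, max-age={max_age_seconds}"


def parse_max_age(header: str) -> Optional[int]:
    """Return the max-age directive in seconds, or None if it is absent."""
    for directive in header.split(","):
        name, _, value = directive.strip().partition("=")
        if name.lower() == "max-age" and value.isdigit():
            return int(value)
    return None


def is_fresh(header: str, stored_at: datetime, now: datetime) -> bool:
    """A stored response is fresh while its age is below max-age."""
    max_age = parse_max_age(header)
    if max_age is None:
        # Assumption for this sketch: no explicit lifetime means stale.
        return False
    return now - stored_at < timedelta(seconds=max_age)
```

With a header built by cache_control_header(3600), a response stored at noon is fresh at 12:30 and stale at 14:00, so the second request would have to go back to the server.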

Advantages:

Decreased Latency: Cached resources load faster because they are read locally instead of being fetched over the network.

Lightened Server Load: Caching reduces the number of requests the server receives for identical resources.

Enhanced User Experience: Faster load times make for a smoother user experience.

Examples of Client-Side Caching:

Browser Cache: Web browsers automatically cache resources such as images, stylesheets, and scripts, and HTTP headers control this caching behavior.

Service Workers: Progressive web apps (PWAs) use service workers to cache assets and provide offline capabilities.

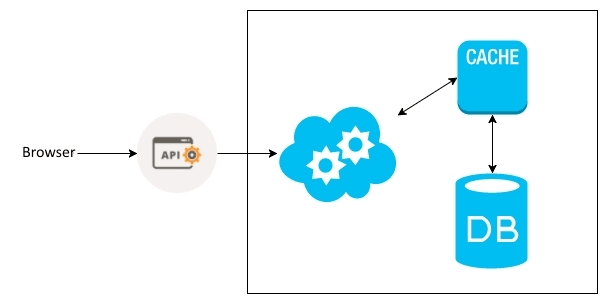

Server-Side Caching: Server-side caching stores frequently requested data or entire responses on the server or on an intermediary server (e.g., a proxy or CDN). This reduces the time and resources needed to generate responses, which can substantially improve application performance. Here’s an exploration of server-side caching:

Caching Mechanisms: Several mechanisms are used for server-side caching, including in-memory caching, distributed caching, reverse proxy caching, and content delivery networks (CDNs).

Cache Invalidation: Cached data stays accurate only if it is invalidated properly. Entries should be refreshed or evicted when they become outdated or when the source data changes.
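The two invalidation triggers described here, data going out of date and the source data changing, can be sketched with a small time-to-live (TTL) cache in Python. This is an illustrative sketch, not a production cache: the TTLCache class and its clock parameter are names invented for the example, and the clock is injectable only so that expiry is easy to demonstrate.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Tiny in-process cache whose entries expire after ttl_seconds."""

    def __init__(
        self,
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be simulated
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def set(self, key: str, value: Any) -> None:
        """Store a value together with the time at which it expires."""
        self._entries[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[Any]:
        """Return the cached value, or None if it is missing or expired."""
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Time-based invalidation: the entry is outdated, so drop it.
            del self._entries[key]
            return None
        return value

    def invalidate(self, key: str) -> None:
        """Explicit invalidation: call this when the source data changes."""
        self._entries.pop(key, None)
```

A typical pattern would be to call invalidate(key) right after writing the new value to the database, so the next read repopulates the cache instead of returning the old value until the TTL runs out.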

Advantages:

Expedited Response Times: Cached responses are returned quickly, so users wait less for data to load.

Lighter Server Burden: Caching reduces the application server’s load because identical responses do not have to be regenerated.

Scalability: Caching helps applications scale more efficiently, because cached responses absorb much of the load that would otherwise reach the application server.

Examples of Server-Side Caching:

In-Memory Caches: Tools such as Redis and Memcached are commonly used to cache frequently accessed data in memory.

Reverse Proxy Caches: Servers like Nginx and Varnish can be configured as reverse proxies with integrated caching capabilities.

Content Delivery Networks (CDNs): CDNs cache static assets, such as images and scripts, at many locations around the world for faster delivery to end users.
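One common way an application uses an in-memory cache like the ones listed above is the cache-aside pattern: check the cache first, and only on a miss compute or load the value and store it. The Python sketch below uses a plain dict as a stand-in for Redis or Memcached, and CacheAside and slow_lookup are hypothetical names for this example; with a real cache server, the dict operations would become calls to that server's client library.

```python
from typing import Callable, Dict


class CacheAside:
    """Cache-aside lookup backed by a dict (stand-in for Redis/Memcached)."""

    def __init__(self, loader: Callable[[str], str]) -> None:
        self._loader = loader  # slow source of truth, e.g. a DB query
        self._store: Dict[str, str] = {}
        self.misses = 0  # counts how often the loader had to run

    def get(self, key: str) -> str:
        if key in self._store:
            # Cache hit: skip the expensive loader entirely.
            return self._store[key]
        # Cache miss: load from the source, then remember the result.
        self.misses += 1
        value = self._loader(key)
        self._store[key] = value
        return value


def slow_lookup(key: str) -> str:
    """Hypothetical expensive operation standing in for a database read."""
    return f"value-for-{key}"
```

Calling get("a") twice on CacheAside(slow_lookup) runs slow_lookup only once; the second call is answered from the dict. This sketch has no expiry or invalidation, so in practice it would be combined with a TTL or explicit invalidation.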

Caching is a powerful strategy for optimizing web applications and APIs: it cuts latency and improves overall performance. However, effective cache management is required to keep cached data current and accurate, which means balancing performance gains against data freshness.

Follow me:

linkedin.com/in/laetitia-christelle-ouelle-89373121b/

twitter.com/OuelleLaetitia

dev.to/ouelle

Happy coding! 😏
