In the next few posts we're going to look at different types of shading, starting with the most primitive and moving toward the more granular. Most games don't bother with these primitive types anymore, since everything is per-pixel in the age of pixel shaders, but it's interesting to explore how we might do something else.

Flat Shading

Credit: http://gamemaniacosblog.blogspot.com/2013/09/old-games-virtua-fighter-o-pai-dos.html

"Flat shading" is perhaps the most primitive type of lighting we have. To be more specific, we can call it "per-poly lighting" or "per-face lighting" because the light only changes based on the normal of the triangle or polygonal surface. It was more popular before 3D hardware was available, perhaps because it's a little less intense to compute; modern hardware does interpolation for us, so we actually get a bit more for free. Still, I think it has some artistic value. On a purely flat object with no curvature it's not obviously different from per-vertex lighting, at least when dealing with spotlights.

When dealing with shaders the highest-level concept is the vertex, not the polygon, so how can we even calculate something for a triangle? Well, we have to do a bit of that outside the shader and pass the information in. Since the info is valid per triangle it must be repeated for all 3 vertices, and this means that, as with our UV and normal problems, we are not allowed to share position vertices between triangles; they must be duplicated. We'll start with a shape I'm calling a "facetQuad" (I'm not sure if there's an official name for it, but it's a quad in which all triangles have distinct vertices).

export const facetQuad = {

positions: [

-0.5, -0.5, 0,

0.5, -0.5, 0,

0.5, 0.5, 0,

-0.5, -0.5, 0,

0.5, 0.5, 0,

-0.5, 0.5, 0

],

colors: [

1, 0, 0,

1, 0, 0,

1, 0, 0,

1, 0, 0,

1, 0, 0,

1, 0, 0

],

uvs: [

0, 1,

1, 1,

1, 0,

0, 1,

1, 0, //same corner as vertex 2, so it gets the same UV

0, 0

],

normals: [

0, 0, -1,

0, 0, -1,

0, 0, -1,

0, 0, -1,

0, 0, -1,

0, 0, -1,

],

triangles: [

0, 1, 2,

3, 4, 5

],

textureName: "smile"

};
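The duplication described above can also be done mechanically. The following is a minimal sketch, assuming a flat positions array with 3 floats per vertex and a flat index array; `unshareVertices` and `sharedQuad` are hypothetical names used only for illustration and are not part of the original code.

```javascript
// Hypothetical helper (not from the original code): expands an indexed mesh
// so that every triangle gets its own three vertices.
function unshareVertices(positions, triangles) {
  const newPositions = [];
  const newTriangles = [];
  for (let i = 0; i < triangles.length; i++) {
    const vertexIndex = triangles[i];
    // copy the x, y, z of the referenced vertex
    newPositions.push(
      positions[vertexIndex * 3],
      positions[vertexIndex * 3 + 1],
      positions[vertexIndex * 3 + 2]
    );
    // each copied vertex is referenced exactly once, in order
    newTriangles.push(i);
  }
  return { positions: newPositions, triangles: newTriangles };
}

// A quad with 4 shared vertices and 2 triangles
const sharedQuad = {
  positions: [
    -0.5, -0.5, 0,
    0.5, -0.5, 0,
    0.5, 0.5, 0,
    -0.5, 0.5, 0
  ],
  triangles: [0, 1, 2, 0, 2, 3]
};

const unshared = unshareVertices(sharedQuad.positions, sharedQuad.triangles);
console.log(unshared.positions.length / 3); // 6 vertices instead of 4
console.log(unshared.triangles); // [0, 1, 2, 3, 4, 5]
```

The resulting positions match the facetQuad positions listed above. Other attributes (colors, UVs) could be expanded the same way with their own component counts.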

Now that we have that, we can get to the light calculation. What do we need?

1) A normal

2) A position (to get the direction of the light)

Let's start with 1. For a quad this is trivial, it just points away from the surface, and we already did it above. Truly though we want the normal of the triangle so if you chose a different shape just remember that all vertices must share the same value.

Now for 2. What is the position of the polygon? There are a number of ways we could define this, but I'm going to say it's the center (specifically the centroid) of the triangle. This will of course give some interesting results that aren't physically accurate, but this is more about exploring old limitations and their results.

export function triangleCentroid(pointA, pointB, pointC){

return [

(pointA[0] + pointB[0] + pointC[0]) / 3,

(pointA[1] + pointB[1] + pointC[1]) / 3,

(pointA[2] + pointB[2] + pointC[2]) / 3,

];

}

It's just an average of the points. In this case we can just hardcode it for the 6 vertices (each value is ±1/6, rounded to 0.17):

//facetQuad

centroids: [

0.17, -0.17, 0,

0.17, -0.17, 0,

0.17, -0.17, 0,

-0.17, 0.17, 0,

-0.17, 0.17, 0,

-0.17, 0.17, 0,

],
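As a quick check on the hard-coded values, here is a small sketch that derives them from the facetQuad positions. `centroidOf` is a hypothetical stand-in for the averaging function described above, and the rounding to two decimals is only there to compare against the values in the listing.

```javascript
// Average of three points. The parentheses matter: the whole sum is divided by 3.
function centroidOf(pointA, pointB, pointC) {
  return [
    (pointA[0] + pointB[0] + pointC[0]) / 3,
    (pointA[1] + pointB[1] + pointC[1]) / 3,
    (pointA[2] + pointB[2] + pointC[2]) / 3
  ];
}

// Same positions as facetQuad: 2 triangles, 3 vertices each, 3 floats per vertex
const positions = [
  -0.5, -0.5, 0,
  0.5, -0.5, 0,
  0.5, 0.5, 0,
  -0.5, -0.5, 0,
  0.5, 0.5, 0,
  -0.5, 0.5, 0
];

const centroids = [];
for (let i = 0; i < positions.length; i += 9) {
  const a = positions.slice(i, i + 3);
  const b = positions.slice(i + 3, i + 6);
  const c = positions.slice(i + 6, i + 9);
  const centroid = centroidOf(a, b, c);
  // repeat the centroid once per vertex of the triangle
  centroids.push(...centroid, ...centroid, ...centroid);
}

const rounded = centroids.map(value => Number(value.toFixed(2)));
console.log(rounded);
```

The first three entries come out as 0.17, -0.17, 0 and the entries for the second triangle as -0.17, 0.17, 0, which agrees with the hard-coded list.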

Now we've done attribute binding a zillion times so we can just make this a helper. I even added an extra check that if the attribute isn't used it doesn't bind saving you from console warning spam:

//gl-helper.js

export function bindAttribute(context, attributes, attributeName, size){

const attributeLocation = context.getAttribLocation(context.getParameter(context.CURRENT_PROGRAM), attributeName);

if(attributeLocation === -1) return; //bail if it doesn't exist in the shader

const attributeBuffer = context.createBuffer();

context.bindBuffer(context.ARRAY_BUFFER, attributeBuffer);

context.bufferData(context.ARRAY_BUFFER, attributes, context.STATIC_DRAW);

context.enableVertexAttribArray(attributeLocation);

context.vertexAttribPointer(attributeLocation, size, context.FLOAT, false, 0, 0);

}

We can now throw out a lot of duplicated code:

//wc-geo-gl.js

bindMesh(mesh){

bindAttribute(this.context, mesh.positions, "aVertexPosition", 3);

bindAttribute(this.context, mesh.colors, "aVertexColor", 3);

bindAttribute(this.context, mesh.uvs, "aVertexUV", 2);

bindAttribute(this.context, mesh.normals, "aVertexNormal", 3);

if(mesh.centroids){

bindAttribute(this.context, mesh.centroids, "aVertexCentroid", 3);

}

this.bindIndices(mesh.triangles);

this.bindUniforms(mesh.getModelMatrix());

this.bindTexture(mesh.textureName);

}

Also we need to add the centroid property to Mesh to make sure it's available for use. Since this is really esoteric and only works for faceted meshes I might delete it later but it's very useful here.

Anyway we do the lighting calculation in the vertex shader:

//vertex shader

uniform mat4 uProjectionMatrix;

uniform mat4 uModelMatrix;

uniform mat4 uViewMatrix;

uniform lowp mat4 uLight1;

attribute vec3 aVertexPosition;

attribute vec3 aVertexColor;

attribute vec2 aVertexUV;

attribute vec3 aVertexNormal;

attribute vec3 aVertexCentroid;

varying mediump vec4 vColor;

varying mediump vec2 vUV;

varying mediump vec3 vNormal;

varying mediump vec3 vPosition;

void main(){

mediump vec3 normalCentroid = vec3(uModelMatrix * vec4(aVertexCentroid, 1.0));

mediump vec3 normalNormal = normalize(vec3(uModelMatrix * vec4(aVertexNormal, 0.0))); //w = 0.0 so model translation doesn't skew the direction

mediump vec3 toLight = normalize(uLight1[0].xyz - normalCentroid);

mediump float light = dot(normalNormal, toLight);

gl_Position = uProjectionMatrix * uViewMatrix * uModelMatrix * vec4(aVertexPosition, 1.0);

vUV = aVertexUV;

vColor = vec4(aVertexColor, 1.0) * uLight1[2] * vec4(light, light, light, 1);

vNormal = vec3(uModelMatrix * vec4(aVertexNormal, 0.0));

vPosition = vec3(uModelMatrix * vec4(aVertexPosition, 1.0));

}

This is the same basic calculation as last time except we use the centroid as the starting position to the light. We also need to take into account the model matrix so the attributes respond to model transforms.
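To get a feel for the numbers, the same calculation can be mirrored on the CPU. This is only a sketch: it assumes an identity model matrix, uses the facetQuad centroids (±1/6), and the helper names (`subtract`, `normalize`, `dot`, `faceLight`) are made up for illustration.

```javascript
function subtract(a, b) {
  return [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
}

function normalize(v) {
  const length = Math.hypot(v[0], v[1], v[2]);
  return [v[0] / length, v[1] / length, v[2] / length];
}

function dot(a, b) {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Mirrors the shader: light = dot(normal, normalize(lightPosition - centroid))
function faceLight(normal, centroid, lightPosition) {
  const toLight = normalize(subtract(lightPosition, centroid));
  return dot(normalize(normal), toLight);
}

const normal = [0, 0, -1];
const lowerRightCentroid = [1 / 6, -1 / 6, 0]; // first triangle of facetQuad
const upperLeftCentroid = [-1 / 6, 1 / 6, 0]; // second triangle of facetQuad

const centeredLight = [0, 0, -1.5];
const rightLight = [0.5, 0, -1.5];

const centeredA = faceLight(normal, lowerRightCentroid, centeredLight);
const centeredB = faceLight(normal, upperLeftCentroid, centeredLight);
const rightA = faceLight(normal, lowerRightCentroid, rightLight);
const rightB = faceLight(normal, upperLeftCentroid, rightLight);

console.log(centeredA, centeredB); // equal by symmetry
console.log(rightA, rightB); // the lower-right triangle is brighter
```

With the light centered both triangles receive the same value, and with the light moved to x = 0.5 the triangle whose centroid is nearer the light gets the larger one.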

//fragment shader

varying mediump vec4 vColor;

void main() {

gl_FragColor = vColor;

}

Nothing interesting here, just output the color.

If the light is in the center (x = 0, y = 0, z = -1.5) it'll look like this:

But if we move it a little to the right (x = 0.5, y = 0, z = -1.5):

The closer triangle gets lit more. Now, in the image above, Virtua Fighter looks to be using quads as the geometry primitive. We could do that too. Instead of taking the centroid of the triangle we'd just take the centroid of the quad:

//facetQuad

centroids: [

0, 0, 0,

0, 0, 0,

0, 0, 0,

0, 0, 0,

0, 0, 0,

0, 0, 0

],

Centered light (x = 0, y = 0, z = -1.5):

To the right (x = 0.5, y = 0, z = -1.5):

It's hard to tell, but it's slightly dimmer, and uniformly so across the whole quad.

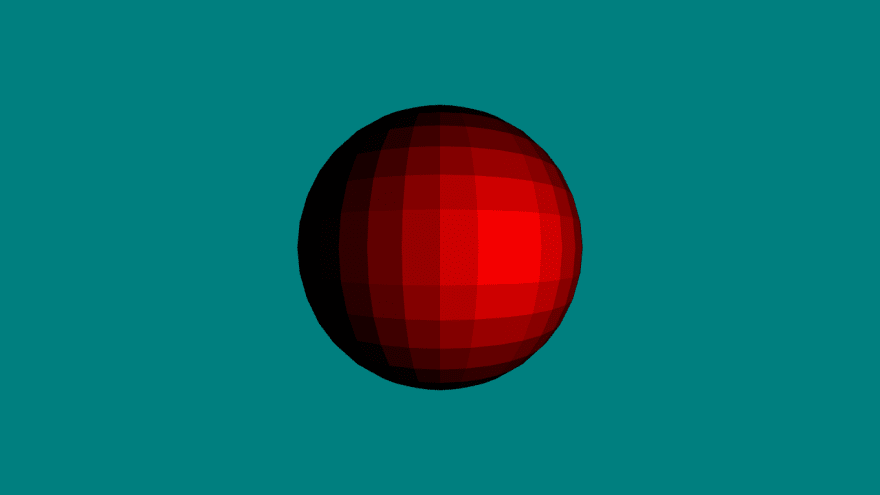

Facet Sphere

One way to show this better is to generate a new mesh I'm calling a "facet sphere". In the previous post's example the sphere was generated with normals facing outward at each vertex, but to simulate per-poly lighting we can instead have every vertex of a triangle share the same normal, which makes the surface look faceted. I did this by taking a UV sphere, iterating over its triangles, and replacing the vertices so that they are duplicated per triangle and their normals face the same direction. To get the normal of a triangle we take the cross product of two edge vectors that start at the same vertex.

//order matters! CCW from bottom to top

export function triangleNormal(pointA, pointB, pointC){

const vector1 = subtractVector(pointC, pointA);

const vector2 = subtractVector(pointB, pointA);

return normalizeVector(crossVector(vector1, vector2));

}

If you get the order wrong, the normal will point in the opposite direction.
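The vector helpers used by triangleNormal aren't shown in this post, so here is a self-contained sketch with assumed implementations of `subtractVector`, `crossVector`, and `normalizeVector` (the real ones may differ). It runs the first triangle of the facetQuad through the function in both winding orders.

```javascript
// Assumed implementations of the vector helpers (not shown in this post)
function subtractVector(a, b) {
  return [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
}

function crossVector(a, b) {
  return [
    a[1] * b[2] - a[2] * b[1],
    a[2] * b[0] - a[0] * b[2],
    a[0] * b[1] - a[1] * b[0]
  ];
}

function normalizeVector(v) {
  const length = Math.hypot(v[0], v[1], v[2]);
  return [v[0] / length, v[1] / length, v[2] / length];
}

// Same body as the triangleNormal function above
function triangleNormal(pointA, pointB, pointC) {
  const vector1 = subtractVector(pointC, pointA);
  const vector2 = subtractVector(pointB, pointA);
  return normalizeVector(crossVector(vector1, vector2));
}

// First triangle of the facetQuad
const a = [-0.5, -0.5, 0];
const b = [0.5, -0.5, 0];
const c = [0.5, 0.5, 0];

const forward = triangleNormal(a, b, c);
const flipped = triangleNormal(a, c, b); // swapped winding order

console.log(forward[2]); // -1, matching the quad's hard-coded normals
console.log(flipped[2]); // 1, the opposite direction
```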

To make the facet sphere:

export function facetSphere(density, options){

const sphere = uvSphere(density, options);

const rawTriangles = arrayChunk(sphere.triangles, 3);

const rawPositions = arrayChunk(sphere.positions, 3);

const rawUVs = arrayChunk(sphere.uvs, 2);

const rawColors = arrayChunk(sphere.colors, 3);

const positions = [];

const uvs = [];

const normals = [];

const colors = [];

const triangles = [];

const centroids = [];

let index = 0;

for(const tri of rawTriangles){

positions.push(rawPositions[tri[0]], rawPositions[tri[1]], rawPositions[tri[2]]);

uvs.push(rawUVs[tri[0]], rawUVs[tri[1]], rawUVs[tri[2]]);

colors.push(rawColors[tri[0]], rawColors[tri[1]], rawColors[tri[2]]);

const normal = triangleNormal(rawPositions[tri[0]], rawPositions[tri[1]], rawPositions[tri[2]]);

normals.push(normal, normal, normal);

const centroid = triangleCentroid(rawPositions[tri[0]], rawPositions[tri[1]], rawPositions[tri[2]]);

centroids.push(centroid, centroid, centroid);

triangles.push([index, index + 1, index + 2]);

index += 3;

}

return {

positions: positions.flat(),

colors: colors.flat(),

triangles: triangles.flat(),

uvs: uvs.flat(),

normals: normals.flat(),

centroids: centroids.flat(),

textureName: sphere.textureName

}

}

arrayChunk is just a function to group the array into subarrays of a specific length:

export function arrayChunk(array, lengthPerChunk) {

const result = [];

let chunk = array.length > 0 ? [array[0]] : []; //avoid producing [[undefined]] for an empty array

for (let i = 1; i < array.length; i++) {

if (i % lengthPerChunk === 0) {

result.push(chunk);

chunk = [];

}

chunk.push(array[i]);

}

if (chunk.length > 0) result.push(chunk);

return result;

}
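To illustrate the expected behavior, here is an alternative sketch of the same idea based on `slice`. `chunkBySlice` is a hypothetical name and not the implementation used in the repo; it assumes lengthPerChunk is a positive integer.

```javascript
// Alternative sketch (not the article's implementation): chunk by slicing.
// Assumes lengthPerChunk is a positive integer.
function chunkBySlice(array, lengthPerChunk) {
  const result = [];
  for (let i = 0; i < array.length; i += lengthPerChunk) {
    result.push(array.slice(i, i + lengthPerChunk));
  }
  return result;
}

const indexChunks = chunkBySlice([0, 1, 2, 3, 4, 5], 3);
const unevenChunks = chunkBySlice([0, 0, 1, 0, 1, 1, 0], 2);
const emptyChunks = chunkBySlice([], 3);

console.log(indexChunks); // [[0, 1, 2], [3, 4, 5]]
console.log(unevenChunks); // [[0, 0], [1, 0], [1, 1], [0]]
console.log(emptyChunks); // []
```

A trailing partial chunk is kept rather than dropped, which is the same choice the loop-based version makes.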

We can try again with the sphere:

It's an interesting effect but maybe not exactly what one might expect. What we probably want for our flat shading is "per-face" lighting. This means that the shape might be a triangle, but it might also be a quad. This is a tad more sophisticated and we'll have to work a little harder. The first part is changing facetSphere. We were able to work off the original data generated from a UV sphere, but from here it's hard to figure out if a group of triangles is a triangle or a quad without redoing a lot of math, so we might as well re-implement the whole thing:

export function facetSphere(density, { color, uvOffset } = {}) {

const radsPerUnit = Math.PI / density;

const sliceVertCount = density * 2;

//positions and UVs

const rawPositions = [];

let rawUvs = [];

let latitude = -Math.PI / 2;

//latitude

for (let i = 0; i <= density; i++) {

const v = inverseLerp(-QUARTER_TURN, QUARTER_TURN, -latitude);

let longitude = 0;

let vertLength = sliceVertCount + ((i > 0 && i < density) ? 1 : 0); //middle rings need extra vert for end U value

//longitude

for (let j = 0; j < vertLength; j++) {

rawPositions.push(latLngToCartesian([1, latitude, longitude]));

rawUvs.push([inverseLerp(0, TWO_PI, longitude), v]);

longitude += radsPerUnit;

}

latitude += radsPerUnit;

}

if (uvOffset) {

rawUvs = rawUvs.map(uv => [(uv[0] + uvOffset[0]) % 1, (uv[1] + uvOffset[1]) % 1]);

}

//triangles

const triangles = [];

const positions = [];

const centroids = [];

const colors = [];

const normals = [];

const uvs = [];

const vertexColor = color ?? [1,0,0];

let index = 0;

let ringStartP = 0;

for (let ring = 0; ring < density; ring++) {

const vertexBump = (ring > 0 ? 1 : 0);

for (let sliceVert = 0; sliceVert < sliceVertCount; sliceVert++) {

const thisP = ringStartP + sliceVert;

const nextP = ringStartP + sliceVert + 1;

const nextRingP = thisP + sliceVertCount + vertexBump;

const nextRingNextP = nextP + sliceVertCount + vertexBump;

if (ring === 0) {

positions.push(rawPositions[thisP], rawPositions[nextRingNextP], rawPositions[nextRingP]);

uvs.push(rawUvs[thisP], rawUvs[nextRingNextP], rawUvs[nextRingP]);

colors.push(vertexColor, vertexColor, vertexColor);

const centroid = polyCentroid([rawPositions[thisP], rawPositions[nextRingNextP], rawPositions[nextRingP]]);

centroids.push(centroid, centroid, centroid);

const normal = normalizeVector(centroid);

normals.push(normal, normal, normal);

triangles.push([index, index + 1, index + 2]);

index += 3;

}

if (ring === density - 1) {

positions.push(rawPositions[thisP], rawPositions[nextP], rawPositions[nextRingP]);

uvs.push(rawUvs[thisP], rawUvs[nextP], rawUvs[nextRingP]);

colors.push(vertexColor, vertexColor, vertexColor);

const centroid = polyCentroid([rawPositions[thisP], rawPositions[nextP], rawPositions[nextRingP]]);

centroids.push(centroid, centroid, centroid);

const normal = normalizeVector(centroid);

normals.push(normal, normal, normal);

triangles.push([index, index + 1, index + 2]);

index += 3;

}

if (ring > 0 && ring < density - 1 && density > 2) {

positions.push(

rawPositions[thisP], rawPositions[nextRingNextP], rawPositions[nextRingP],

rawPositions[thisP], rawPositions[nextP], rawPositions[nextRingNextP]

);

uvs.push(

rawUvs[thisP], rawUvs[nextRingNextP], rawUvs[nextRingP],

rawUvs[thisP], rawUvs[nextP], rawUvs[nextRingNextP]

);

colors.push(vertexColor, vertexColor, vertexColor, vertexColor, vertexColor, vertexColor);

const centroid = polyCentroid([rawPositions[thisP], rawPositions[nextP], rawPositions[nextRingNextP], rawPositions[nextRingP]]);

centroids.push(centroid, centroid, centroid, centroid, centroid, centroid);

const normal = normalizeVector(centroid);

normals.push(normal, normal, normal, normal, normal, normal);

triangles.push([index, index + 1, index + 2], [index + 3, index + 4, index + 5]);

index += 6;

}

}

if (ring === 0) {

ringStartP += sliceVertCount;

} else {

ringStartP += sliceVertCount + 1;

}

}

return {

positions: positions.flat(),

colors: colors.flat(),

centroids: centroids.flat(),

triangles: triangles.flat(),

uvs: uvs.flat(),

normals: normals.flat(),

textureName: "earth"

};

}

The same principle applies. When we generate each triangle, instead of using the points from the cloud we generate 3 new points in a new cloud that share a normal and centroid unique to that face. Getting the centroid was a post all to itself, so I'll let you reference that if you need to, but polyCentroid can get the centroid of any arbitrary flat polygon, and the normal is just the normalized centroid (centered spheres make this so easy!). Just mind the API for polyCentroid: it takes an array of points, which is easy to forget when you are writing similar lines of code.
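To make the "normal is the normalized centroid" shortcut concrete, here is a rough sketch. It does not use the real polyCentroid: `averageCentroid` is a simplified, hypothetical stand-in that just averages the vertices, which is exact for triangles but only an approximation for other polygons. Like polyCentroid, it takes a single array of points. The quad corners are made-up points on a unit sphere centered at the origin.

```javascript
// Simplified stand-in for polyCentroid (an assumption, not the real one):
// averages the vertices. Takes ONE array of points, like polyCentroid.
function averageCentroid(points) {
  const sum = [0, 0, 0];
  for (const point of points) {
    sum[0] += point[0];
    sum[1] += point[1];
    sum[2] += point[2];
  }
  return sum.map(value => value / points.length);
}

function normalizeVector(v) {
  const length = Math.hypot(v[0], v[1], v[2]);
  return [v[0] / length, v[1] / length, v[2] / length];
}

// Four corners of a hypothetical face on a unit sphere, arranged around the +x axis
const corners = [
  normalizeVector([1, 0.2, 0.2]),
  normalizeVector([1, -0.2, 0.2]),
  normalizeVector([1, -0.2, -0.2]),
  normalizeVector([1, 0.2, -0.2])
];

const centroid = averageCentroid(corners); // note: one array argument
const normal = normalizeVector(centroid);

console.log(centroid); // sits slightly inside the sphere, on the x axis
console.log(normal); // points straight out along +x
```

Because the sphere is centered at the origin, the direction from the origin to the face's centroid is also the direction the face points, so no cross product is needed.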

The per-face lighting while slightly less granular does look a bit better.

Sanity check: Why centroids?

So our hack to get polygon information to the vertices was to calculate a centroid, the center of the polygon. But did old games have to do something similar to calculate lighting?

Well, no. What we are building is far more sophisticated. A simple software renderer back then likely wouldn't have used point lighting; it would have been expensive, since you would need to calculate it for every object in the scene. More likely there was a single light source, whatever the prevailing light in the scene was: a lamp in a room, or the sun or moon outdoors. That removes the need to calculate where the light is relative to the object, so we don't need positional coordinates. We can do pure directional lighting too:

uniform mat4 uProjectionMatrix;

uniform mat4 uModelMatrix;

uniform mat4 uViewMatrix;

uniform lowp mat4 uLight1;

attribute vec3 aVertexPosition;

attribute vec3 aVertexColor;

attribute vec2 aVertexUV;

attribute vec3 aVertexNormal;

attribute vec3 aVertexCentroid;

varying mediump vec4 vColor;

varying mediump vec2 vUV;

varying mediump vec3 vNormal;

varying mediump vec3 vPosition;

void main(){

bool isPoint = uLight1[3][3] == 1.0;

if(isPoint){

mediump vec3 normalCentroid = vec3(uModelMatrix * vec4(aVertexCentroid, 1.0));

mediump vec3 normalNormal = normalize(vec3(uModelMatrix * vec4(aVertexNormal, 0.0))); //w = 0.0 so model translation doesn't skew the direction

mediump vec3 toLight = normalize(uLight1[0].xyz - normalCentroid);

mediump float light = dot(normalNormal, toLight);

vColor = vec4(aVertexColor, 1.0) * uLight1[2] * vec4(light, light, light, 1);

} else {

mediump vec3 normalNormal = normalize(vec3(uModelMatrix * vec4(aVertexNormal, 0.0))); //w = 0.0 so model translation doesn't skew the direction

mediump float light = dot(normalNormal, uLight1[1].xyz);

vColor = vec4(aVertexColor, 1.0) * uLight1[2] * vec4(light, light, light, 1);

}

gl_Position = uProjectionMatrix * uViewMatrix * uModelMatrix * vec4(aVertexPosition, 1.0);

vUV = aVertexUV;

vNormal = vec3(uModelMatrix * vec4(aVertexNormal, 0.0));

vPosition = vec3(uModelMatrix * vec4(aVertexPosition, 1.0));

}
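The shader reads everything about the light out of a single mat4. The sketch below shows one way the JavaScript side might pack it. The layout is inferred from how the shader indexes uLight1 (column 0 is position, column 1 is direction, column 2 is color, element [3][3] is 1.0 for a point light); `packLight` is a hypothetical helper, and using 0 as the flag for a directional light is an assumption.

```javascript
// Hypothetical helper: packs a light into the 16 floats of a mat4 uniform.
// Layout inferred from the shader (column-major, as WebGL expects):
//   column 0 = position, column 1 = direction, column 2 = color,
//   element [3][3] = 1 for a point light (0 for directional is an assumption).
function packLight({
  position = [0, 0, 0],
  direction = [0, 0, 0],
  color = [1, 1, 1, 1],
  isPoint = false
} = {}) {
  return new Float32Array([
    position[0], position[1], position[2], 0,
    direction[0], direction[1], direction[2], 0,
    color[0], color[1], color[2], color[3],
    0, 0, 0, isPoint ? 1 : 0
  ]);
}

const pointLight = packLight({ position: [0.5, 0, -1.5], isPoint: true });
const directionalLight = packLight({ direction: [0, 0, -1] });

console.log(pointLight[15]); // 1, so the shader takes the point-light branch
console.log(directionalLight[15]); // 0, so the shader takes the directional branch

// In a WebGL program this could then be uploaded with something like:
// context.uniformMatrix4fv(lightLocation, false, pointLight);
```

Packing the light this way keeps it to one uniform, at the cost of the layout being a convention that the shader and the JavaScript both have to agree on.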

You can find the sample code for this demo here:

https://github.com/ndesmic/geogl/tree/v5
