Introduction

Unless you live under a rock, you have most probably heard about ChatGPT, since it has been the main topic of the internet recently. It is an artificial intelligence chatbot developed by OpenAI with which you can hold a conversation about almost any subject and get coherent, human-like responses. It is built on top of one of the largest language models trained so far, which was trained on massive amounts of text data from all over the internet and beyond. It was launched on November 30, 2022 and drew attention for its coherent, correct and articulate answers across many domains of knowledge.

Besides the main functionality of answering questions with coherent responses, OpenAI provides a way to customize its models to answer questions and generate text for inputs they have never seen before. This feature is called fine-tuning and allows anyone to use GPT-3 technology to train the original model on examples from a dataset. The result is a personalized model that answers questions for your specific scenarios. With this model, the quality of the responses for your specific cases can improve and the latency of the requests can decrease.

With that tool in hand I decided to try it out and train a custom model to see how it works. After looking for open datasets I found one that caught my attention: a Fake News dataset. This dataset is composed of fake and real news, and it looked like an interesting and bold scenario for testing the potential of the GPT-3 fine-tuning feature.

Fine Tuning Fake-GPT

Gather and sanitize your dataset

First of all I searched for an open dataset that would fit the fine-tuning process. I was looking for a dataset that could fit into the format GPT-3 uses: question and answer, or prompt and completion. After several hours of searching I finally found a promising dataset of Fake News. The dataset is composed of thousands of examples of real news and fake news. It is divided into two CSV files, one with fake news and the other with real news. Each file has the following properties for each news article: title, text body, subject and date. You can see an example of the dataset below (fake_news_dataset.csv):

title,text,subject,date

News Title 1,News body 1,politicsNews,31/12/22

News Title 2,News body 2,politicsNews,09/10/22

News Title 3,News body 3,worldNews,17/11/22

. . .

The idea is to identify whether a news article is fake or real based on the news title and the text body. With the raw dataset in hand, it's time to format the data in a way that fits the fine-tuning process. The file format should be CSV and must contain two properties: "prompt" and "completion". The prompt holds the news title and the text body appended together. The completion is simply one word, fake or true. The resulting file should be structured like the example below (formated_dataset.csv):

prompt,completion

"News Title: News body ... end","fake"

"News Title: News body ... end","true"

"News Title: News body ... end","fake"

. . .

To convert the raw CSV data into the format for fine-tuning I used the csvkit CLI tool for the sake of simplicity. You can use other tools, like pandas for Python or even Spark for large amounts of data. Once you have the data together, sanitized and formatted, you are ready to start the fine-tuning process.
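For readers who prefer Python to csvkit, the formatting step could be sketched roughly as follows. This is a minimal sketch and not the commands I actually ran: the function name `to_prompt_completion` and the in-memory sample rows are assumptions made for illustration, and the "Title: body" join simply mirrors the example above.

```python
import csv
import io


def to_prompt_completion(rows, label):
    """Turn raw news rows (dicts with 'title' and 'text') into prompt/completion rows."""
    formatted = []
    for row in rows:
        prompt = f"{row['title'].strip()}: {row['text'].strip()}"
        formatted.append({"prompt": prompt, "completion": label})
    return formatted


# Illustrative stand-ins for the two raw CSV files (hypothetical content).
fake_csv = "title,text,subject,date\nNews Title 1,News body 1,politicsNews,31/12/22\n"
true_csv = "title,text,subject,date\nNews Title 2,News body 2,worldNews,17/11/22\n"

fake_rows = list(csv.DictReader(io.StringIO(fake_csv)))
true_rows = list(csv.DictReader(io.StringIO(true_csv)))

dataset = to_prompt_completion(fake_rows, "fake") + to_prompt_completion(true_rows, "true")

# Write the combined rows as CSV; quoting every field keeps commas in the text safe.
output = io.StringIO()
writer = csv.DictWriter(output, fieldnames=["prompt", "completion"], quoting=csv.QUOTE_ALL)
writer.writeheader()
writer.writerows(dataset)
formatted_csv = output.getvalue()
print(formatted_csv)
```

With real files you would replace the `io.StringIO` objects with files opened using `newline=""`, as the `csv` module documentation recommends.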

Prerequisites for Fine Tuning

First of all I installed the OpenAI Python library using pip (pypi.org/project/OpenAI):

pip install --upgrade openai

Then I had to set the OpenAI API key, which allows you to send commands to OpenAI on behalf of your account. I took the API key for my account from the api-keys page and set the environment variable that authorizes commands to OpenAI with the following command:

export OPENAI_API_KEY=<your_key>

Format dataset

The OpenAI fine-tuning process accepts only files in JSONL format, but don't worry: it provides a tool to transform other formats, such as the CSV file above, to JSONL. So I ran the following command:

openai tools fine_tunes.prepare_data -f formated_dataset.csv

The command failed because a dependency was missing, so I installed it with the following command and tried again (see the linked page for more details):

pip install OpenAI[dependency]

The openai prepare_data command analyzed my dataset and showed me a list of suggestions, with important information about the dataset and some options for the fine-tuning. Based on that analysis it suggested some actions, such as removing duplicates, adding a suffix separator to the prompts, and adding a whitespace character at the beginning of each completion. I applied all recommended actions since they all fit my dataset.
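To make those suggested actions more concrete, here is a rough Python sketch of what they amount to. It is not the implementation of the OpenAI tool: the helper names `prepare_examples` and `to_jsonl`, the sample rows, and the separator string are all assumptions chosen for illustration, and you should use whatever separator the tool proposes for your data.

```python
import json

# Assumed prompt suffix for illustration; the tool suggests one for your dataset.
SEPARATOR = "\n\n###\n\n"


def prepare_examples(rows, separator=SEPARATOR):
    """Drop exact duplicates, append a separator to prompts, prefix completions with a space."""
    seen = set()
    prepared = []
    for row in rows:
        key = (row["prompt"], row["completion"])
        if key in seen:
            continue  # skip rows already seen
        seen.add(key)
        prepared.append({
            "prompt": row["prompt"] + separator,
            "completion": " " + row["completion"].strip(),
        })
    return prepared


def to_jsonl(examples):
    """Serialize one JSON object per line (the JSONL format)."""
    return "\n".join(json.dumps(example) for example in examples) + "\n"


# Hypothetical rows in the prompt/completion format, including one duplicate.
rows = [
    {"prompt": "News Title 1: News body 1", "completion": "fake"},
    {"prompt": "News Title 1: News body 1", "completion": "fake"},
    {"prompt": "News Title 2: News body 2", "completion": "true"},
]

prepared = prepare_examples(rows)
jsonl = to_jsonl(prepared)
print(jsonl)
```

The leading space in the completion is why the positive class appears as " true", with a space, in the fine-tuning command.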

One important action is to "split the data into training and validation sets", which makes it possible to generate assessment results after the training process. It produces two files of examples: a training set to be used for fine-tuning (around 95% of the examples) and a validation set to be used to assess the performance of the trained model (around 5% of the examples). This action allows OpenAI to generate detailed results about the model performance later on.
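The idea behind such a split can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the function name `split_dataset`, the fixed seed and the generated sample data are hypothetical, and the OpenAI tool may choose the validation size differently.

```python
import random


def split_dataset(examples, valid_fraction=0.05, seed=42):
    """Shuffle a copy of the examples and hold out a fraction for validation."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_valid = max(1, round(len(shuffled) * valid_fraction))
    return shuffled[n_valid:], shuffled[:n_valid]


# Hypothetical dataset of 100 prepared examples.
examples = [
    {"prompt": f"News {i}", "completion": " fake" if i % 2 else " true"}
    for i in range(100)
]

train, valid = split_dataset(examples)
print(len(train), len(valid))
```

Keeping the validation examples out of training is what makes the later accuracy numbers meaningful, since the model is then measured on articles it has not seen.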

Finally, I had two JSONL files in the right format to be used in the fine tuning. Note that when the command finishes it will give you some tips and recommendations on how to run the fine tuning command. In this case the suggested command was:

openai api fine_tunes.create -t "dataset_train.jsonl" -v "dataset_valid.jsonl" -m "ada" --compute_classification_metrics --classification_positive_class " true"

This command will be used to create the trained model and will be explained in the next section.

Run Fine-tuning

Now that I have the data correctly formatted in JSONL format I am ready to run the fine tuning process to train the custom model. Note that this is a paid functionality and the price varies based on the size of your dataset and the base model you use for the training (see pricing here).

I ran the "fine_tunes.create" command suggested previously. This command has several options that you can set or change based on your needs (check out the fine-tune command details here). For example, the "-m" option can be set to "ada", "babbage", "curie" or "davinci", which are the base models OpenAI provides, each with different trade-offs in performance, accuracy, price, etc. (see more about the models OpenAI provides).

openai api fine_tunes.create \
  -m "ada" \
  -t "prepared_dataset_train.jsonl" \
  -v "prepared_dataset_valid.jsonl" \
  --compute_classification_metrics \
  --classification_positive_class " true"

Note that the command can change depending on your dataset. For example, for classification problems, where each prompt should be assigned to one of a set of predefined classes, I had to set the option "--compute_classification_metrics". Since there were only two classes I also had to set '--classification_positive_class " true"'. If there are more than two classes you have to set "--classification_n_classes" to the number of classes instead (for example, "--classification_n_classes 5"). See the linked examples.

The options explained:

- -m: the base model used in the fine-tuning

- -t: file name of the training dataset

- -v: file name of the validation dataset

- --compute_classification_metrics: generates classification metrics on the validation set

- --classification_positive_class: the class treated as positive when computing the metrics for a two-class problem (here " true")
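To show why a positive class has to be named for a two-class problem, here is a small sketch of the usual metric definitions. It is my own illustration, not OpenAI's code: the function name `classification_metrics` and the sample labels are made up, and the service may report more metrics than these.

```python
def classification_metrics(expected, predicted, positive_class=" true"):
    """Compute accuracy, precision, recall and F1 relative to one positive class."""
    pairs = list(zip(expected, predicted))
    tp = sum(1 for e, p in pairs if e == positive_class and p == positive_class)
    fp = sum(1 for e, p in pairs if e != positive_class and p == positive_class)
    fn = sum(1 for e, p in pairs if e == positive_class and p != positive_class)
    correct = sum(1 for e, p in pairs if e == p)

    accuracy = correct / len(pairs) if pairs else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up validation labels and model outputs.
expected = [" true", " true", " fake", " fake"]
predicted = [" true", " fake", " fake", " true"]

metrics = classification_metrics(expected, predicted)
print(metrics)
```

Accuracy is the same whichever class is called positive, but precision and recall change, which is why the option is needed.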

When you execute the command it uploads the dataset file(s) to OpenAI servers and prints a fine-tune id such as "ft-FpsHdkc836TLMi53FidOabcF". I saved this id for later reference. At this point the request has been received by OpenAI servers and the fine-tuning is queued for execution. The fine-tuning took some hours because of the dataset size and the chosen model. I didn't have to wait for the process to finish, so I killed the local process (ctrl-c) without hesitation and came back later to check the progress of the job. I checked the status of the job by running the following command with the id I had received previously:

openai api fine_tunes.get -i ft-FpsHdkc836TLMi53FidOabcF

When the fine-tuning finished, the status of my fine-tune process was shown as "processed", meaning the custom model training was done and the model was ready to be used. To use my trained model I had to get its name, which I found in the property called "fine_tuned_model" (ex.: "fine_tuned_model": "ada:ft-personal-2023-01-18-19-50-00").
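If you save the JSON printed by the status command, the two fields mentioned above can be pulled out with a few lines of Python. This is a minimal sketch: the helper name `summarize_fine_tune` is hypothetical, and the sample responses are trimmed, made-up stand-ins containing only the fields discussed in this article, not the full output of the command.

```python
import json


def summarize_fine_tune(response_text):
    """Return (status, fine_tuned_model) from the JSON text of a fine-tune job."""
    job = json.loads(response_text)
    return job.get("status"), job.get("fine_tuned_model")


# Trimmed, illustrative responses (real output has many more fields).
finished = (
    '{"id": "ft-FpsHdkc836TLMi53FidOabcF", "status": "processed", '
    '"fine_tuned_model": "ada:ft-personal-2023-01-18-19-50-00"}'
)
unfinished = '{"id": "ft-FpsHdkc836TLMi53FidOabcF", "status": "pending", "fine_tuned_model": null}'

status, model_name = summarize_fine_tune(finished)
print(status, model_name)

# While the job is still queued or running there is no model name yet.
print(summarize_fine_tune(unfinished))
```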

To use the trained model when sending prompts to OpenAI I had to set the "-m" option to the name of my model, as shown below:

openai api completions.create -m ada:ft-personal-2023-01-18-19-50-00 -p "your prompt here!"
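The same request can also be sent from Python. The sketch below assumes the legacy (pre-1.0) openai Python library that matches the CLI commands in this article, where `openai.Completion.create` is available; the helper names `build_request` and `classify`, the separator, and the choice of `max_tokens=1` (which assumes each label fits in a single token) are my own assumptions, and the separator must match whatever was used in your training data.

```python
def build_request(model, title, body, separator="\n\n###\n\n"):
    """Build completion parameters for one article (separator is an assumption)."""
    return {
        "model": model,
        "prompt": f"{title}: {body}{separator}",
        "max_tokens": 1,    # assumes the label (" true" or " fake") is one token
        "temperature": 0,   # pick the most likely label instead of sampling
    }


def classify(model, title, body):
    """Send the request with the legacy (pre-1.0) openai library and return the label."""
    import openai  # imported here so the rest of the sketch runs without the package

    # The library reads the key from the OPENAI_API_KEY environment variable.
    response = openai.Completion.create(**build_request(model, title, body))
    return response["choices"][0]["text"].strip()


request = build_request(
    "ada:ft-personal-2023-01-18-19-50-00", "News Title", "News body"
)
print(request)
```

Calling `classify(...)` with your own model name would return the predicted label as plain text, provided the API key is set and the model is still available to your account.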

I was also able to use the trained model in the Playground of my account. Now I'm free to play with, check, test and assess my custom trained model to make sure it responds with the expected answer for my scenario. In this case I can send a news article and it returns whether the article is fake or not! See the results below.

Performance assessment results (Bonus)

Remember that we generated two files for the dataset, one for training and the other for validation. OpenAI used the first one to train the model and the second one to run assessment procedures that measure stats such as accuracy and loss. I could see the stats using the following command:

openai api fine_tunes.results -i ft-FpsHdkc836TLMi53FidOabcF > results.csv

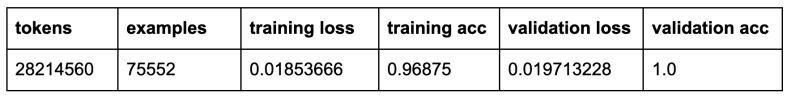

The output is in CSV format by default and can be saved to a file for easier viewing. Each line of the CSV file represents one step of the training process and shows valuable information about that step, such as the number of examples processed, the number of tokens processed, training accuracy, training loss, validation accuracy, validation loss, and much more. The results for this case are shown in the image above.

See more about results in this link.
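Once the results are saved to a file, the last reported value of any metric can be read with the standard csv module. This is a sketch under assumptions: the helper name `last_reported` is hypothetical, the column names in the sample are my assumption about the results file and should be checked against your own header, and the numbers are made up rather than taken from my run.

```python
import csv
import io


def last_reported(rows, column):
    """Return the last non-empty value of a column as a float, or None if absent."""
    for row in reversed(rows):
        value = row.get(column, "")
        if value not in ("", None):
            return float(value)
    return None


# Made-up sample; metrics may be blank on steps where they were not computed.
sample_results = (
    "step,training_loss,classification/accuracy\n"
    "1,0.52,\n"
    "2,0.31,0.90\n"
    "3,0.25,\n"
)

rows = list(csv.DictReader(io.StringIO(sample_results)))
final_accuracy = last_reported(rows, "classification/accuracy")
final_loss = last_reported(rows, "training_loss")
print(final_accuracy, final_loss)
```

For the real file, replace the `io.StringIO` object with `open("results.csv", newline="")`.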

Conclusion

OpenAI is bringing one of the most revolutionary technological disruptions of recent times with the creation of GPT-3, GPT-4, and ChatGPT. The best part is that anyone can get access to this incredible tool. Besides that, OpenAI provides the tools anyone needs to create personalized models that serve a specific scenario, and it makes this fine-tuning process easily available to everyone. Fine-tuning gives access to the cutting-edge machine learning technology that OpenAI uses in GPT. This opens up endless possibilities to improve human-computer interaction for companies, their employees and their customers.

Fine-tuning allows anyone to create customized models for a specific scenario. In this article's case, the result was a Fake News classifier. Imagine how useful it would be to have something that alerts you when an article you're reading is probably fake!

Other examples can be:

- Create a chatbot that interacts with customers in a conversational manner to answer specific questions about your company, your services and your products.

- Help employees find valuable information quickly and easily in a large knowledge base with many text articles, where it's hard and time-consuming to find what you need.

The scenarios involving a high volume of text where it can help are countless.

If you have any questions, comments or suggestions feel free to contact me: https://www.linkedin.com/in/marceloax/
