This is a Plain English Papers summary of a research paper called Small AI Model Matches GPT-4's Performance Using High-Quality Training Data. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

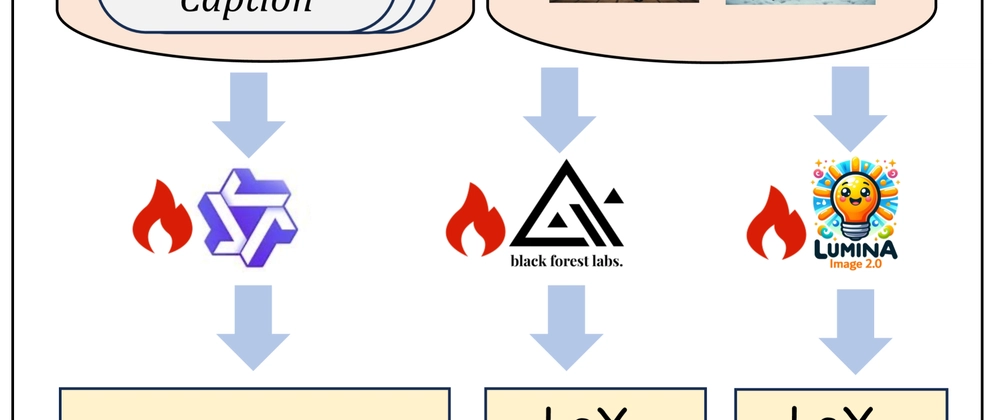

- LeX-Art is a framework for high-quality text generation data synthesis

- Creates the LeX-10K dataset with 10,208 expert-level responses across 9 domains

- Trains the LeX-1B model (1.3B parameters), which outperforms much larger models

- Achieves GPT-4-level performance on text generation tasks with vastly fewer parameters

- Demonstrates a scalable approach to building specialized text generation systems

Plain English Explanation

Creating high-quality AI systems for text generation typically requires massive amounts of data and computing resources. The LeX-Art approach tackles this problem differently by focusing on quality rather than quantity.

Think of it like cooking. Most AI approaches gather huge ...
