This is a Plain English Papers summary of a research paper called Learnable Neural Attention Boosts Vision Transformer Performance While Using Less Computing Power. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

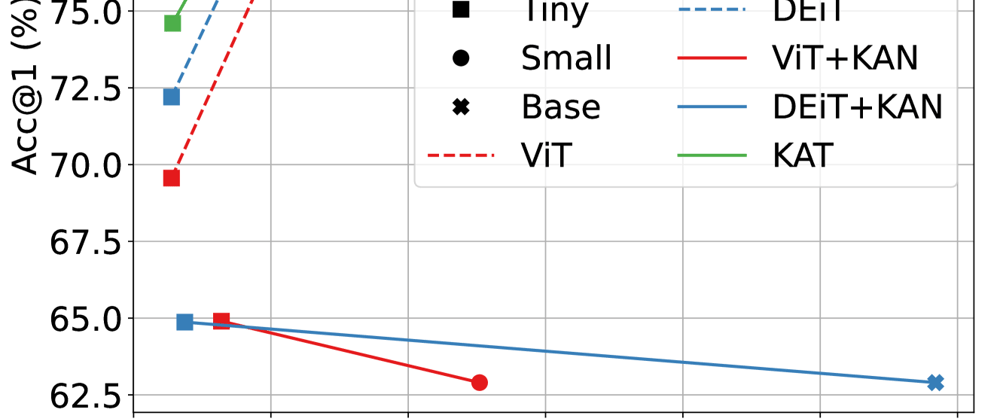

- Researchers introduce Kolmogorov-Arnold Attention (KA-Attention), a learnable alternative to standard attention in Vision Transformers

- KA-Attention replaces the fixed softmax function with trainable neural networks

- Improves performance across multiple computer vision tasks and datasets

- Reduces computational complexity while maintaining or improving accuracy

- Shows greater robustness to adversarial attacks and out-of-distribution data
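To make the second point concrete, here is a minimal sketch of swapping the fixed softmax for a trainable function inside a single attention step. It is an illustration under stated assumptions, not the paper's formulation: this summary does not describe KA-Attention's actual architecture, so the `LearnableScoreFn` class, its tiny one-hidden-layer network, the softplus output, and the sum-to-one normalization are all hypothetical choices. The parameters are randomly initialized and no training loop is shown.

```python
import math
import random


def softmax(scores):
    """Fixed normalization used by standard attention."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class LearnableScoreFn:
    """Hypothetical trainable stand-in for exp(): a tiny one-hidden-layer
    network applied to each score independently. Not the paper's design."""

    def __init__(self, hidden=4, seed=0):
        rng = random.Random(seed)
        # In a real model these parameters would be learned by gradient descent.
        self.w1 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.b1 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.w2 = [rng.uniform(-1, 1) for _ in range(hidden)]

    def __call__(self, score):
        h = sum(
            w2 * math.tanh(w1 * score + b1)
            for w1, b1, w2 in zip(self.w1, self.b1, self.w2)
        )
        return math.log1p(math.exp(h))  # softplus keeps the output positive


def learnable_weights(scores, fn):
    """Assumed normalization: apply fn to each score, then divide by the sum."""
    vals = [fn(s) for s in scores]
    total = sum(vals)
    return [v / total for v in vals]


def attend(query, keys, values, weight_fn):
    """Single-query attention: score the keys, weight them, mix the values."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = weight_fn(scores)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

fn = LearnableScoreFn()
fixed_out = attend(query, keys, values, softmax)
learned_out = attend(query, keys, values, lambda s: learnable_weights(s, fn))
```

Both calls go through the same `attend` function; only the weighting function differs. That is the sense in which a learnable component can replace softmax without changing the rest of the attention computation.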

Plain English Explanation

Think of attention in transformers like a spotlight system at a concert. Traditional transformer attention uses a fixed method (softmax) to decide where to shine these spotlights - it's like having a pre-programmed lighting system that can't adapt to different performers or sta...
