This is a Plain English Papers summary of a research paper called Insights into LLM Long-Context Failures: When Transformers Know but Don't Tell. If you like these kinds of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

• This paper investigates the challenges large language models (LLMs) face when processing long input contexts, and why they sometimes fail to utilize relevant information that is available in the context.

• The researchers find that LLMs can often "know" the correct answer based on the provided context, but fail to output it due to biases and limitations in the models.

Plain English Explanation

• Large language models (LLMs) like GPT-3 and BERT have become incredibly powerful at understanding and generating human language. However, they can sometimes struggle when presented with long input contexts, failing to fully utilize all the relevant information.

• This paper dives into the reasons behind these "long-context failures". The researchers discover that LLMs can actually "know" the right answer based on the full context, but for various reasons don't end up outputting that information. This suggests the models have biases and limitations that prevent them from fully leveraging the available context.

• By understanding these issues, the researchers hope to guide future work in making LLMs better at long-context reasoning and mitigating positional biases that can lead to long-context failures.

Technical Explanation

• The paper presents a series of experiments and analyses to investigate why LLMs sometimes struggle with long input contexts, even when they appear to "know" the correct answer based on the full information provided.

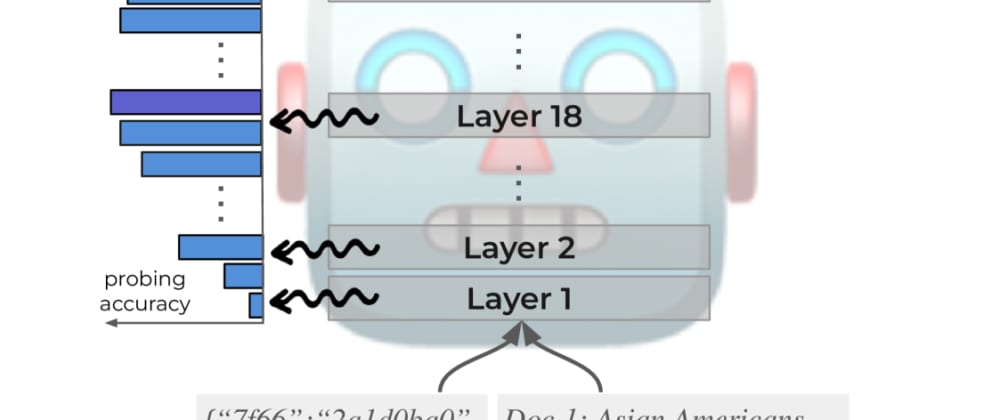

• The researchers design a task where LLMs are given long passages of text and asked to answer questions about the content. By probing the internal representations of the models, they find that the models do encode the relevant knowledge to answer the questions correctly.

• However, the models often fail to output the right answer, due to biases towards information located at the beginning or end of the input as discussed in this related work. The paper also explores how limitations in the models' ability to effectively utilize long contexts contribute to these failures.

• Through additional experiments and analyses, the researchers gain deeper insights into the nature of these long-context failures, and how models' struggles with long-form summarization may be connected.

Critical Analysis

• The paper provides a thoughtful and rigorous analysis of a crucial issue facing modern large language models - their limitations in effectively leveraging long input contexts. The researchers do a commendable job of designing targeted experiments to uncover the underlying causes of these failures.

• That said, the paper acknowledges that the experiments are conducted on a relatively narrow set of tasks and models. More research would be needed to fully generalize the findings and understand how they apply across a wider range of LLM architectures and use cases.

• Additionally, while the paper offers potential explanations for the long-context failures, there may be other factors or model biases at play that are not explored in depth. Further investigation into the root causes could lead to more comprehensive solutions.

• Overall, this is an important contribution that sheds light on a significant limitation of current LLMs. The insights provided can help guide future research in improving context utilization and mitigating positional biases to create more robust and capable language models.

Conclusion

• This paper offers valuable insights into the challenges large language models face when processing long input contexts, even when they appear to have the necessary knowledge to answer questions correctly.

• The researchers uncover biases and limitations in LLMs that prevent them from fully leveraging all the relevant information available in the provided context, leading to "long-context failures". Understanding these issues is crucial for developing more capable and contextually-aware language models in the future.

• By building on this work and addressing the underlying causes of long-context failures, researchers can work towards language models that are better able to understand and reason about complex, long-form information. This could have significant implications for a wide range of language-based applications and tasks.

If you enjoyed this summary, consider subscribing to the AImodels.fyi newsletter or following me on Twitter for more AI and machine learning content.

Top comments (0)