This is a Plain English Papers summary of a research paper called AI Can Now Generate 20-Second Videos with Better Quality, Using New LongCon Method. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

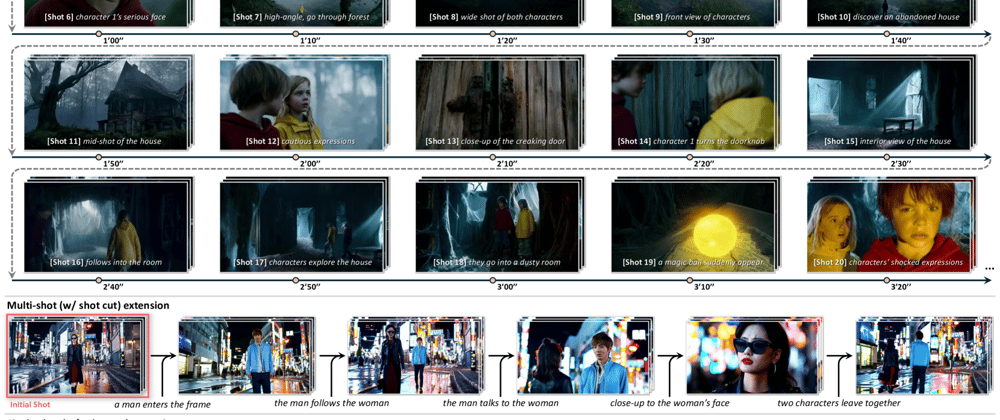

- LongCon introduces long context tuning for extended video generation

- Synthesizes 5-20 second videos, versus the 3-4 seconds typical of standard models

- Uses sliding window approach similar to language model techniques

- Introduces Video Window Token method for temporal consistency

- Achieves 2x longer videos with better quality than autoregressive methods

- Works with any single-shot video diffusion model

- Requires only 1-2 days of lightweight fine-tuning

Plain English Explanation

Video generation models today have a frustrating limitation: they can only create short clips of about 3-4 seconds. This happens because these AI systems have a fixed "attention span" - they can only process a certain number of video frames at once.
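To make the fixed "attention span" concrete, here is a minimal sketch of how a sliding window could cover a clip that is longer than the model can process at once. It is an illustration only, not the paper's implementation: the function name `sliding_windows`, the window size, the stride, and the frame counts are all hypothetical values chosen for the example.

```python
def sliding_windows(num_frames, window_size, stride):
    """Return (start, end) frame-index pairs that cover num_frames.

    Hypothetical helper for illustration. Each window holds at most
    window_size frames (the model's fixed "attention span"), and
    consecutive windows overlap when stride < window_size.
    """
    if window_size <= 0 or stride <= 0:
        raise ValueError("window_size and stride must be positive")
    if stride > window_size:
        raise ValueError("stride larger than window_size would skip frames")

    # A short clip fits in a single window.
    if num_frames <= window_size:
        return [(0, num_frames)]

    windows = []
    start = 0
    while start + window_size < num_frames:
        windows.append((start, start + window_size))
        start += stride

    # Align the final window with the end of the clip so no frame is missed.
    windows.append((num_frames - window_size, num_frames))
    return windows


# Toy example: 10 frames, a model that sees 4 frames at a time, stride of 2.
print(sliding_windows(10, 4, 2))  # [(0, 4), (2, 6), (4, 8), (6, 10)]
```

The overlap between neighboring windows is what gives each chunk some view of the frames before it. How information is actually shared across windows in LongCon is not something this sketch attempts to reproduce.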

The researchers behind this...
