Let’s start by defining the “Dark” mentioned in the title. It could refer to a cluster that needs minimal or no access to the internet, or it could be a home Kubernetes cluster. The example I will be using in this post is a K3S cluster deployed in my home network. I do not have a static IP address from my ISP, and I would like others to be able to connect to my cluster for collaboration, or for something around data management that we will get to later.

What is the problem?

How do you access dark sites over the internet?

How do you access dark Kubernetes clusters over the internet? Not to be confused with dark deployment or A/B testing.

Do you really want to put a full-blown VPN configuration in place?

If you are collaborating amongst multiple developers, do you want KUBECONFIGS shared everywhere?

My main concern, and the reason for writing this post, is how Kasten K10 Multi-Cluster would access a dark site Kubernetes cluster to provide data management for that cluster and its data.

What is Inlets?

First, I went looking for a solution. I could have implemented a VPN so that people could connect to my entire network and then get to the K3D cluster I have locally, but that seems like an overkill and complicated way to give access. It’s a much bigger opening than is needed.

Anyway, Inlets enables “Self-hosted tunnels, to connect anything.”

Another important advantage of Inlets is that it replaces opening firewall ports, setting up VPNs, managing IP ranges, and keeping track of port-forwarding rules.

I was looking for something that would provide a secure public endpoint for my Kubernetes cluster (6443) and Kasten K10 deployment (8080), which would not otherwise be publicly reachable.

You can find a lot more information about Inlets at https://inlets.dev/. Later in this post I am also going to share some very good blog posts that helped me along the way.

Let’s now paint the picture

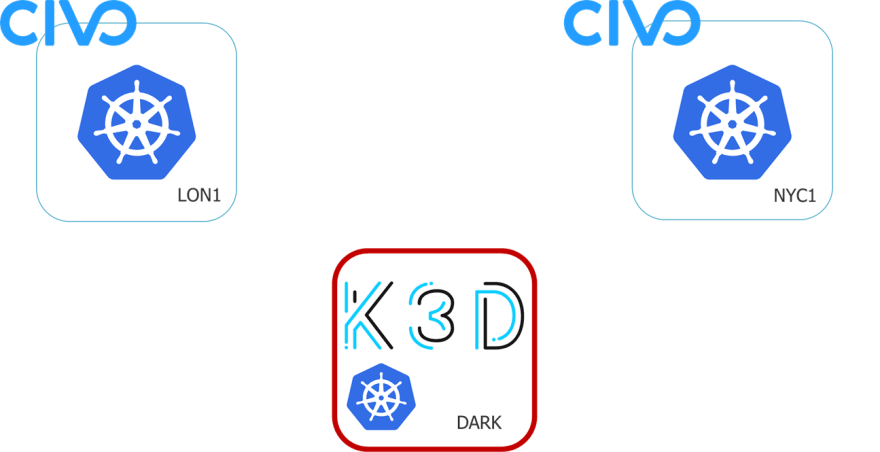

What if we have some public cloud clusters, but we also have some private clusters, maybe running locally on our laptops or even in dark sites? For this example I am using CIVO. In my last post I went through how I used the UI and CLI to create these clusters, and as they were still there, I wanted to take advantage of them. As you can also see, we have our K3D cluster running locally within my network. With the CIVO clusters we have our KUBECONFIG files available with a public IP for access. Managed service offerings make it much simpler to have that public IP ingress to your cluster. It is a little different when you are on home ISP-backed internet, but you still have the same requirement.

My local K3D Cluster

If you were not on my network, you would have no access from the internet to my cluster. For one, that stops any collaboration, but it also stops me from using Kasten K10 to protect my stateful workloads within this cluster.

Now for the steps to change this access

There are 6 steps to get this up and running:

- Install inletsctl on the dev machine to deploy the exit-server (taken from https://docs.inlets.dev/#/ – The remote server is called an “exit-node” or “exit-server” because that is where traffic from the private network appears. The user’s laptop has gained a “VirtualIP” and users on the Internet can now connect to it using that IP.)

- Inlets-Pro Server droplet deployed in Digital Ocean using inletsctl (I am using Digital Ocean but there are other options – https://docs.inlets.dev/#/?id=exit-servers)

- License file obtained from Inlets.dev, monthly or annual subscriptions

- Export the TCP ports (6443) and define the upstream of the local Kubernetes cluster (localhost). For Kasten K10 I also exposed 8080, which is used for the ingress service for the multi-cluster functionality.

- Confirm access with curl -k https://Inlets-ProServerPublicIPAddress:6443

- Update the KUBECONFIG to access the cluster through the WebSocket from the internet

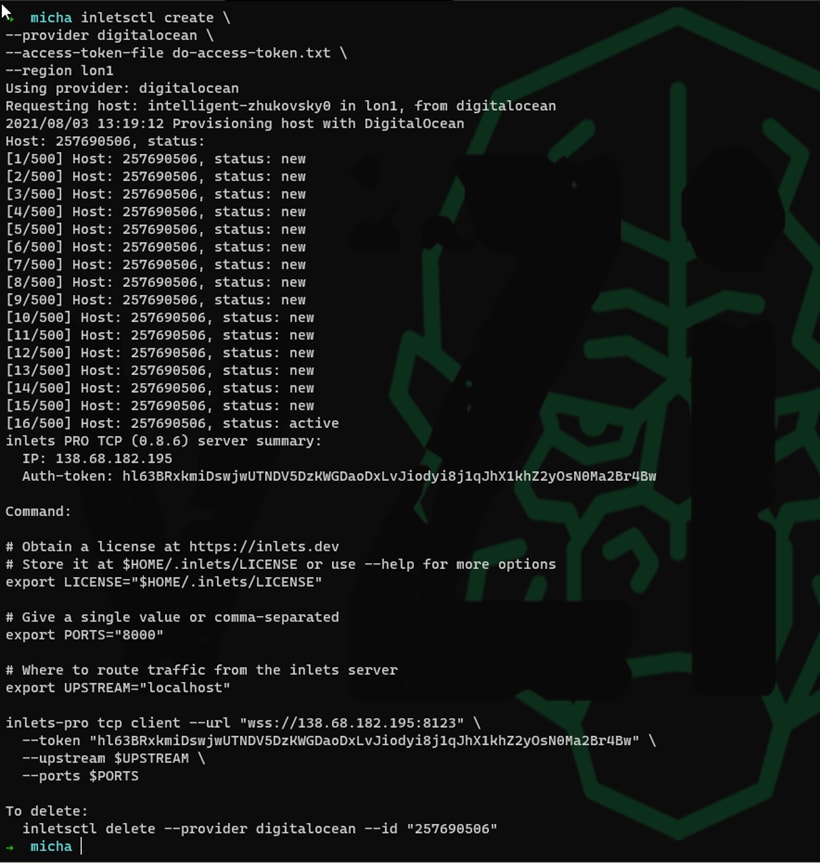

Deploying your exit-server

I used Arkade to install inletsctl; more can be found here. The first step once you have the CLI is to get your exit-server deployed. I created a droplet in Digital Ocean to act as the exit-server, but it could be in many other locations, as mentioned and shown in the link above. The following command is what I used to create my exit-server.

inletsctl create \
  --provider digitalocean \
  --access-token-file do-access-token.txt \
  --region lon1
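A missing or empty access-token file is an easy way for the create step to fail, so here is a minimal sketch of a wrapper that checks the file before calling inletsctl. The function names `check_token_file` and `create_exit_server` are hypothetical helpers of my own. The inletsctl flags are only the ones used in the command above.

```shell
# Hypothetical helper: succeed only if the token file exists,
# is readable, and is not empty.
check_token_file() {
  [ -r "$1" ] && [ -s "$1" ]
}

# Hypothetical wrapper around the inletsctl command shown above.
# $1 = path to the Digital Ocean access token file
# $2 = region (defaults to lon1, the region used in this post)
create_exit_server() {
  token_file=$1
  region=${2:-lon1}
  if ! check_token_file "$token_file"; then
    echo "access token file '$token_file' is missing, unreadable, or empty" >&2
    return 1
  fi
  inletsctl create \
    --provider digitalocean \
    --access-token-file "$token_file" \
    --region "$region"
}
```

Calling `create_exit_server do-access-token.txt` would then run the same command as above, but only after the token file has passed the check.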

Define Ports and Local (Dark Network IP)

You can see from the above screenshot that the tool also gives you handy tips on the commands you now need to run to configure your inlets-pro exit-server within Digital Ocean. We now have to define our ports, which for us will be 6443 (Kubernetes API) and 8080 (Kasten K10 ingress). We also need to define the IP address on our local network.

export TCP_PORTS="6443,8080" # Kubernetes API server (6443) and Kasten K10 ingress (8080)

export UPSTREAM="localhost" # my local network address; for ease, localhost works

inlets-pro tcp client --url "wss://209.97.177.194:8123" \
  --token "S8Qdc8j5PxoMZ9GVajqzbDxsCn8maxfAaonKv4DuraUt27koXIgM0bnpnUMwQl6t" \
  --upstream "$UPSTREAM" \
  --ports "$TCP_PORTS" \
  --license "$LICENSE"

Image note – I had to go back and add export TCP_PORTS="6443,8080" for the Kasten dashboard to be exposed.


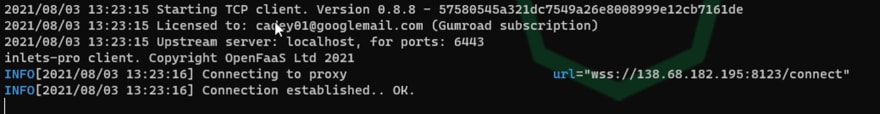
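Since the port list is typed by hand, a stray space or trailing comma is easy to introduce. The sketch below is a small pre-flight check that could be run before starting the client. `validate_ports` is a hypothetical helper, not part of inlets, and I am not assuming anything about how inlets-pro itself treats a malformed list.

```shell
# Hypothetical pre-flight check for a comma-separated TCP port list.
# Accepts e.g. "6443,8080"; rejects empty input, whitespace, empty
# fields, non-numeric entries, and ports outside 1-65535.
validate_ports() {
  list=$1
  case $list in
    '' | *[!0-9,]* | ,* | *, | *,,*)
      echo "invalid port list: '$list'" >&2
      return 1
      ;;
  esac
  # At this point the list contains only digits and single commas,
  # so splitting on commas is safe.
  for port in $(printf '%s' "$list" | tr ',' ' '); do
    if [ "${#port}" -gt 5 ] || [ "$port" -lt 1 ] || [ "$port" -gt 65535 ]; then
      echo "port out of range: '$port'" >&2
      return 1
    fi
  done
  return 0
}
```

One way to use it is `validate_ports "$TCP_PORTS" || exit 1` just before the inlets-pro client command, so a typo stops the script before the tunnel is opened.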

Secure WebSocket is now established

When you run the commands above to configure inlets-pro, you will see the following if everything is configured correctly. Leave this open in a terminal, as this is the connection between the exit-server and your local network.

Confirm access with curl

As we are using the Kubernetes API, we are not expecting a fully authorised response via curl, but the following command does show that you have external connectivity.

curl -k https://178.128.38.160:6443
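To make the result of that check easier to read, here is a rough sketch that turns the HTTP status code into a short verdict. `interpret_status` and `probe_endpoint` are hypothetical names. The sketch assumes an unauthenticated request to the API server comes back as 401 or 403, and it relies on curl reporting `000` for `%{http_code}` when no HTTP response was received.

```shell
# Hypothetical helper: map an HTTP status code to a short verdict.
interpret_status() {
  case $1 in
    '' | 000)  echo "unreachable" ;;
    401 | 403) echo "reachable (authentication required)" ;;
    2??)       echo "reachable" ;;
    *)         echo "reachable (HTTP $1)" ;;
  esac
}

# Hypothetical helper: probe https://HOST:PORT through the tunnel.
# -k skips certificate verification, as in the curl command above.
probe_endpoint() {
  host=$1
  port=$2
  code=$(curl -k -s -o /dev/null --max-time 5 \
    -w '%{http_code}' "https://$host:$port") || true
  interpret_status "$code"
}
```

For example, `probe_endpoint 203.0.113.10 6443` (a placeholder address standing in for the exit-server IP) would print one of the verdicts above. For a cluster behind the tunnel, "reachable (authentication required)" is the result that indicates the tunnel is working.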

Updating KubeConfig with Public IP

We already had our KUBECONFIG for our local K3D deployment. For the record, I used the following command to create my cluster. If you do not specify the API port as 6443, a random high port will be used, which will skew everything we have done at this stage.

k3d cluster create darksite --api-port 0.0.0.0:6443

Anyway, back to updating the kubeconfig file. You will currently have the following in there, which is fine for local access from the same host.

Change that to the public-facing IP of the exit-server.

Then locally you can confirm you still have access.
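The edit can also be scripted rather than made by hand. The sketch below rewrites the `server:` entry and writes the result to a new file, so the original local-only kubeconfig stays untouched. `set_kubeconfig_server` is a hypothetical helper, and it assumes the file describes only the one K3D cluster, since it rewrites every `server:` line it finds.

```shell
# Hypothetical helper: copy a kubeconfig, pointing its "server:" entry
# at the exit-server instead of the local address.
# $1 = source kubeconfig   $2 = destination file
# $3 = exit-server IP      $4 = API port (defaults to 6443)
set_kubeconfig_server() {
  src=$1
  dest=$2
  host=$3
  port=${4:-6443}
  sed -E "s#^([[:space:]]*server:[[:space:]]*)https://[^[:space:]]+#\1https://${host}:${port}#" \
    "$src" > "$dest"
}
```

A possible usage, with placeholder file names and address: `set_kubeconfig_server ~/.kube/config darksite-public.yaml 203.0.113.10`, followed by `kubectl --kubeconfig darksite-public.yaml get nodes` to confirm access. Depending on how the cluster certificate was issued, kubectl may complain that the certificate is not valid for the public IP. Handling that is outside this sketch.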

Overview of Inlets configuration

Now we have a secure WebSocket configured and external access to our hidden or dark Kubernetes cluster. You can see below how this looks.

At this stage we can share the KUBECONFIG file, and we have shared access to our K3D cluster within our private network.

I am going to end this post here. In the next post we will cover how I configured Kasten K10 multi-cluster so that I can manage my two CIVO clusters and my K3D cluster from a data management perspective, using Inlets to provide that secure WebSocket.
