TLDR

A machine learning (ML) model is only as good as its input data. In a world of inequity, developers must ensure they build responsible models that are free of bias.

Outline

- Introduction

- What is algorithmic bias?

- Societal harm

- Closing thoughts

Introduction

As artificial intelligence (AI) becomes part of daily life, it’s essential that the technology be created for equitable and ethical use. Failing to build a responsible product can have very real consequences. While neutral in theory, algorithms are at risk of becoming as biased as the world they were created from.

What is algorithmic bias?

Algorithmic bias occurs when computers make systematically biased and unfair decisions based on the data they're given. Built responsibly, AI has the power to be a tool for advancing diversity and inclusion in many facets of life, such as increasing workplace diversity and improving access to healthcare.

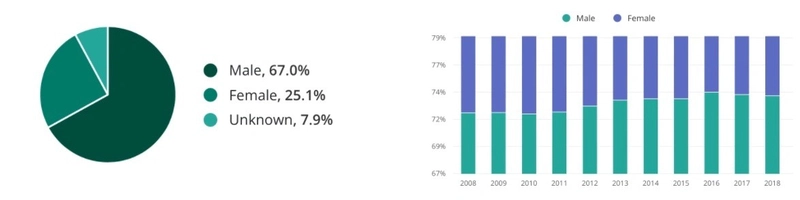

When looking to create more inclusive AI, we must look at who is creating the algorithms. According to Zippia, only 25% of software developers in the US identify as female. Furthermore, over half of the 57% male developers are white. Even when programmers are well intentioned, a lack of diversity among them increases the chances that the algorithms they create don’t reflect the experiences of the entire population.

“SOFTWARE ENGINEER STATISTICS BY GENDER” via Zippia

When creating AI models, developers either curate a training set or choose an existing one to feed into the algorithm. Problems can arise when input data departs from the norm established by the training set, or when the training set itself was curated from biased data. Developers cannot address problems that they aren’t aware of, and with a very homogeneous group of people creating these models, many biases go unnoticed.
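One simple way to make this kind of blind spot visible is to count how each group is represented in a training set before using it. The sketch below is only an illustration: the `group` field, the toy records, and the 20% threshold are assumptions made up for this example, not a standard.

```python
from collections import Counter


def group_shares(records, key):
    """Return each group's share of the records, keyed by group label."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def underrepresented(shares, threshold=0.2):
    """List groups whose share falls below the (arbitrary) threshold."""
    return sorted(group for group, share in shares.items() if share < threshold)


# Hypothetical toy training set: 9 records from group "A", 1 from group "B".
training_set = [{"group": "A"}] * 9 + [{"group": "B"}]

shares = group_shares(training_set, "group")
print(shares)
print(underrepresented(shares))
```

A check like this says nothing about whether the labels themselves are biased, but it does flag groups the model will see very little of.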

Societal harm

Can an algorithm be prejudiced? While algorithms are a neutral force in theory, biases in the data and among developers often appear in the final product. This can have very real implications for those who use or are affected by AI.

Apple Credit Card

In partnership with Goldman Sachs, Apple launched its first credit card in 2019. Problems arose when David Heinemeier Hansson tweeted that when he and his wife applied for the Apple credit card, he was given a credit limit 20 times higher than hers. This was echoed many times over, including by Apple co-founder Steve Wozniak.

Goldman Sachs issued a statement explaining that gender wasn’t an input variable when determining credit limits. Experts have said that this could be part of the problem, because omitting a variable can leave developers unaware of disparities tied to it. Will Knight of Wired explained that “a gender-blind algorithm could end up biased against women as long as it’s drawing on any input or inputs that happen to correlate with gender.” As companies like Apple continue to make business decisions based on algorithms, rigorous testing and trials must be done to ensure that human bias doesn’t make its way into machines.
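To see how a gender-blind rule can still produce gendered outcomes, consider a toy example. Everything here is hypothetical: the applicants, the `solo_credit_years` feature, and the limit rule are invented for illustration and have nothing to do with how the Apple Card was actually underwritten. The rule never reads gender, but the feature it does read happens to correlate with gender in this made-up data.

```python
from statistics import mean

# Hypothetical applicants. "gender" is kept only so the outcome can be
# audited afterwards; the limit rule below never reads it.
applicants = [
    {"gender": "F", "solo_credit_years": 2},
    {"gender": "F", "solo_credit_years": 3},
    {"gender": "F", "solo_credit_years": 10},
    {"gender": "M", "solo_credit_years": 9},
    {"gender": "M", "solo_credit_years": 12},
    {"gender": "M", "solo_credit_years": 4},
]


def credit_limit(applicant):
    """Gender-blind toy rule: 1,000 per year of individual credit history."""
    return 1000 * applicant["solo_credit_years"]


def average_limit_by_group(people, group_key):
    """Average the assigned limit within each group, for auditing."""
    groups = {}
    for person in people:
        groups.setdefault(person[group_key], []).append(credit_limit(person))
    return {group: mean(limits) for group, limits in groups.items()}


averages = average_limit_by_group(applicants, "gender")
print(averages)
```

In this toy data the average limit comes out lower for the "F" group even though gender is never an input. The audit is only possible because gender was recorded alongside the inputs, which is the point the experts above were making.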

Over-policing

Example of PredPol’s predictive policing software via Wabe

AI is currently being used for law enforcement and policing. Some worry that the technology could exacerbate biases and racial stereotypes. PredPol is one company using algorithms to predict where crimes are most likely to happen, and its software is currently deployed in police stations around the world.

PredPol and other AI policing technologies have left many concerned that the technology will only perpetuate existing racial bias. Racial disparities in criminal justice laws and the over-policing of certain neighborhoods could be factors in the data that would direct police back to these same neighborhoods. With a greater police presence, a vicious cycle of over-policing could continue.
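The cycle described above can be sketched with a deliberately oversimplified simulation. This is not PredPol's algorithm, which this post does not describe; the dispatch rule, the two areas, and all of the numbers are assumptions chosen only to show how a feedback loop can form when incidents are recorded only where police are sent.

```python
def simulate_feedback(recorded, true_rate, patrols_per_round, rounds):
    """Send every patrol to the area with the most recorded incidents.

    Each patrol records `true_rate` incidents in the area it visits, so an
    area's record grows only when police are sent there.
    """
    recorded = dict(recorded)  # copy so the caller's data is untouched
    for _ in range(rounds):
        target = max(recorded, key=recorded.get)
        recorded[target] += patrols_per_round * true_rate
    return recorded


# Hypothetical starting point: both areas have the same underlying rate,
# but area "A" starts with more recorded incidents from past policing.
history = {"A": 12, "B": 10}
result = simulate_feedback(history, true_rate=1, patrols_per_round=5, rounds=10)
print(result)  # {'A': 62, 'B': 10}
```

Both areas have the same underlying rate in this sketch, yet a small gap in the historical record grows every round because the data keeps confirming where police were already sent.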

PredPol looks for patterns to dispatch police in neighborhoods

PredPol’s CEO, Brian MacDonald, has claimed that data is a much more reliable source for making decisions than human judgement, and some use cases from police officers have backed up the company’s claims. Still, policing software tools have the power to make determinations about people’s lives. While algorithms can make life easier, we should be confident that they are free from the biased data of the past before using them to make future decisions.

Closing thoughts

The rapid rate of development for AI has led to great innovation. It’s essential that ethical practices are integrated into technology to ensure equitable use. Next week’s blog covers responsible AI in practice and how to check for algorithmic bias.

Interested in reducing social biases in AI? See how Mage can show you where bias appears and suggest ways to lower it. Sign up for Early Access.
