The incident

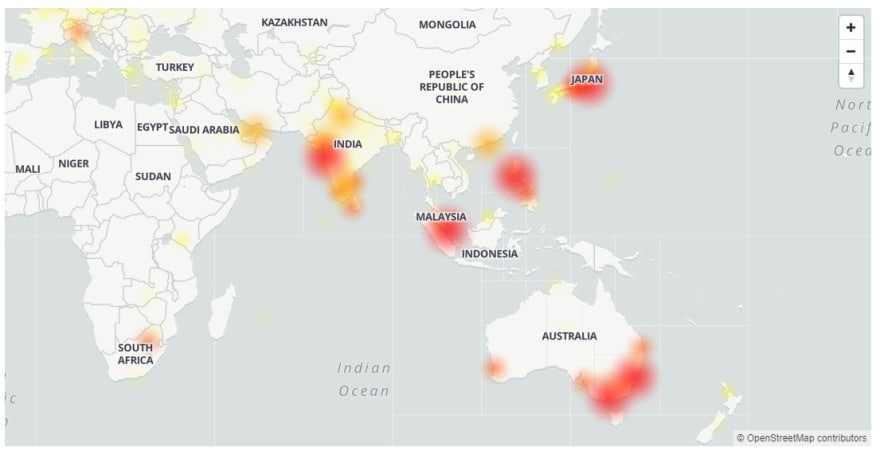

If you've been following the news, you may have seen that Gmail had a global outage today.

Users complained that they were unable to send emails or attach files to them.

The error showed “the operation couldn’t be completed”.

Why it's bad

It's safe to say that most of us would not be seriously affected if we couldn't use our personal Gmail accounts for a few hours.

The real damage is done to companies that rely on G Suite, Google's suite of business products.

As you might imagine, not all of them have a procedure in place for when G Suite goes down.

It's not just Google, it's a trend

Sadly, data shows that there has been a significant increase in the overall number of issues and outages related to cloud services over the last 3 years.

At Endtest, we invest a lot of time in studying data and trends in the software world.

Most of these studies are done to help us improve our products and adapt them to the ever-changing needs of the users.

Ignoring good practices

If you have been in the software development industry for more than 7 years, you might have noticed that the workflows for verifying product quality have changed.

It used to be common to have Quality Assurance specialists in charge of testing.

These testers would develop complex testing plans and strategies, designed to automatically verify the product from the perspective of a real user.

In the software testing field, there is an established set of good practices and bad practices.

For example, it's considered a bad practice for someone who developed a feature to also be in charge of testing it.

But how many of us take that into consideration nowadays?

How many of us develop testing plans and strategies?

A strange desire to over-complicate things

Some architectural decisions can increase complexity without adding any real benefit.

For example, if you run a restaurant with a basic website built with JavaScript, PHP and MySQL, there would be little benefit in introducing technologies such as Gatsby, ReactJS or Docker.

Yet there are countless examples of basic websites built with such technologies simply because someone thought it would be cool, without anyone calculating the ROI.

This increases the cost of developing and maintaining that basic website, as well as the risk of service interruptions.

Scale that example up and you will see the same thing happening at bigger companies.

The excuse for over-complicating things is usually the potential for scalability.

But most of the time, that potential is overestimated, and the work is not split accordingly into multiple phases.

If you want to build a house, you won't start by building a foundation for a skyscraper.

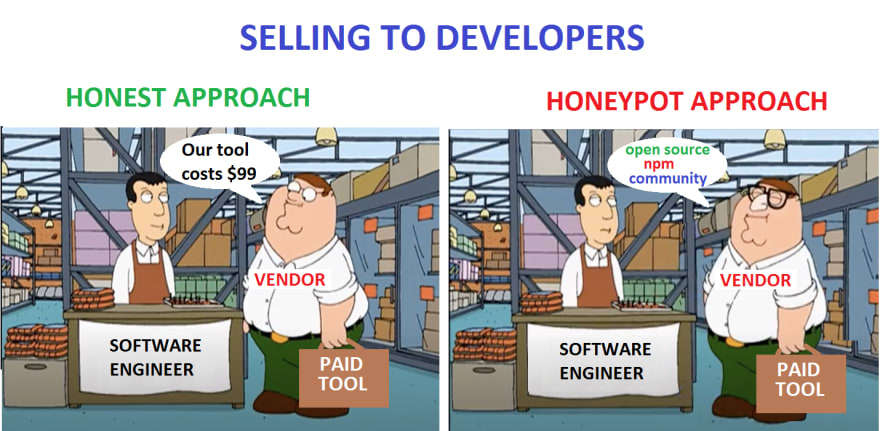

What's fueling this desire to over-complicate things?

Surprisingly, a large number of R&D departments do not calculate the ROI of adopting new technologies.

Most of them hop on the hype train, which might be fueled by the Marketing departments of the for-profit companies behind those new technologies.

Let's look at a random example.

You might have read a blog post about how cool Gatsby is: a modern open-source technology that can help you build fast websites.

Behind that open-source technology, you will find a for-profit company with $46.8M in funding.

No, it's not just a bunch of cool developers working on open-source software in their free time.

That blog post that you read may have been written by someone paid by their Marketing department.

I'm not saying that Gatsby isn't great; I'm just saying that a lot of companies are trying to influence the decisions we make as developers.

Here's a fun challenge.

Look at the latest open-source technology that you added to the tech stack of your project.

Do some research and see if that open-source technology is owned by a for-profit company.

Even our own desire to be cool developers can lead us to adopt a new technology that greatly increases the cost of maintaining our project, without our ever properly calculating the ROI.

Maybe we spend 3 months introducing it into our tech stack, and the result is that our pages load 5 ms faster, which has zero effect on the business.

And when asked about the benefits, our response always starts with:

"Yes, but in the future..."

I'm not saying that you shouldn't adopt new technologies; I'm just saying that you should calculate the ROI and plan accordingly.
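As a rough illustration, the usual formula is ROI = (gain − cost) / cost. The minimal sketch below applies it to the 3-month, 5 ms scenario described earlier. The cost of an engineer-month and the value of the alternative project are hypothetical numbers chosen only to make the arithmetic concrete; plug in your own estimates.

```python
# Hypothetical fully loaded cost of one engineer-month (an assumption).
MONTHLY_COST = 10_000


def roi(gain: float, cost: float) -> float:
    """Return on investment as a fraction: (gain - cost) / cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (gain - cost) / cost


# Scenario from the text: 3 months spent so pages load 5 ms faster,
# with no measurable effect on the business (gain assumed to be 0).
migration_cost = 3 * MONTHLY_COST
migration_roi = roi(gain=0, cost=migration_cost)

# Hypothetical alternative: the same effort, assumed to bring in 45,000.
alternative_roi = roi(gain=45_000, cost=migration_cost)

print(f"migration: {migration_roi:.0%}, alternative: {alternative_roi:.0%}")
```

With these assumed numbers, the script prints `migration: -100%, alternative: 50%`: the migration loses everything that was invested, while the alternative returns half of its cost on top.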

The temptation to over-simplify testing

Testing has been labelled a bottleneck.

The problem is not that testing is the bottleneck. The problem is that you don’t know what’s in the bottle.

That's an actual quote from Michael Bolton.

It's perfectly natural for testing to occupy a significant amount of time.

Just look at the current development of the COVID-19 vaccines.

Trying to speed things up doesn't always lead to the best outcome.

Before we jump to blame stakeholders for pushing us to release faster, we have to admit that we, as developers and testers, are part of the problem.

I've seen developers using only Jest to validate that a React application works as expected.

Jest gives you access to the DOM through jsdom, which only approximates how a browser works; for example, jsdom does not perform layout or rendering.

Relying only on those tests will not tell you whether your web application works for your users.

The same goes for unit tests. We sure love to see that green output.

But those unit tests aren't going to tell us whether our product actually works.

As for those super-fly e2e tests that you just implemented: if you can't run them on Safari and Internet Explorer, they're a bad investment.

End-to-end tests should work on all the major browsers.
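One way to keep e2e checks honest is to run the same check in every browser you claim to support and treat the run as green only if all of them pass. The sketch below assumes Selenium's Python bindings (`selenium.webdriver`), which can drive Chrome, Firefox, Safari and Internet Explorer; the `smoke_check` and `all_browsers_pass` helpers are hypothetical names for illustration, not part of Selenium or Endtest.

```python
"""Sketch: run one end-to-end smoke check in several real browsers."""

# Browsers the check must pass in. Safari runs only on macOS (with
# "Allow Remote Automation" enabled) and Internet Explorer only on Windows
# (with IEDriverServer), so these usually run on different machines.
BROWSERS = ("chrome", "firefox", "safari", "ie")


def start_driver(name: str):
    """Start a local WebDriver session for the named browser."""
    # Imported lazily so the pure helper below works without Selenium.
    from selenium import webdriver

    factories = {
        "chrome": webdriver.Chrome,
        "firefox": webdriver.Firefox,
        "safari": webdriver.Safari,
        "ie": webdriver.Ie,
    }
    return factories[name]()


def smoke_check(url: str, expected_title: str, browsers=BROWSERS) -> dict:
    """Open `url` in each browser and record whether the title matches."""
    results = {}
    for name in browsers:
        driver = start_driver(name)
        try:
            driver.get(url)
            results[name] = expected_title in driver.title
        finally:
            driver.quit()  # always close the browser, even if the check fails
    return results


def all_browsers_pass(results: dict, required=BROWSERS) -> bool:
    """A run only counts as green if every required browser passed."""
    return all(results.get(name, False) for name in required)
```

For example, on a Mac you might call `smoke_check("https://example.com", "Example Domain", browsers=("chrome", "firefox", "safari"))` and run the Internet Explorer leg on a Windows machine. Note that `all_browsers_pass` treats a missing browser as a failure, so skipping a browser can't silently turn the run green.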

Assumptions are risky when you're in charge of testing something.

I'm not saying that testing should be painful; I'm simply saying that you can't skip certain steps and not expect things to blow up in your face.

Bad Automation Attempts

I've seen testers reinventing the wheel, spending months or even years building an over-complicated internal test automation framework by stitching together different open-source libraries.

Their only reasoning was:

"Those libraries are open source, it doesn't cost us anything."

Of course, they never took into account the thousands of hours they spent trying to build something useful with those libraries.

The ROI for such internal solutions is usually terrible.

At some point in your career, you have probably met someone who was always working on an over-complicated internal test automation tool that was perpetually almost ready.

Most of the time, their team would end up having to do manual testing.

That's one of the many reasons why we built Endtest.

Applying pseudoscience to our testing

To make matters worse, while trying to work smarter, we just end up applying pseudoscience to our workflows, including testing.

The Testing Pyramid:

You might have seen this in several biased blog posts praising a certain open-source e2e testing solution owned by yet another for-profit company.

It's a relevant example of pseudoscience.

Following that simple principle will not lead to any real, tangible benefits.

Top comments (8)

"Use the cloud", they said. "You won't have downtime anymore", they said...

Funny enough: when I started working with the cloud, it was the first time I encountered truly unpredictable reliability in real life. Some instances worked for months without any issues, while others died within minutes of starting. Of course, a lot has changed since then (that was about 10 years ago). Fortunately, this behavior helped us build a system able to survive in such a harsh environment :)

I also have unpleasant memories of instances going into Erred state.

Facts

Hmmm, so scalability and testing go hand in hand?

My opinion is that they are just separate concepts and one should not affect the other.

Uh, I see. Thanks for the clarification.

Now that I think about it, the fact that we can simply overlook this problem as devs is kind of disappointing.
