TL;DR

- The pull-request review process is a pain. Data shows this is a major bottleneck.

- Pull-request review speed & quality can be improved by adding context to pull requests, like estimated time to review

- Review automation tools like gitStream can add context for reviewers

Data shows an insane bottleneck in code reviews

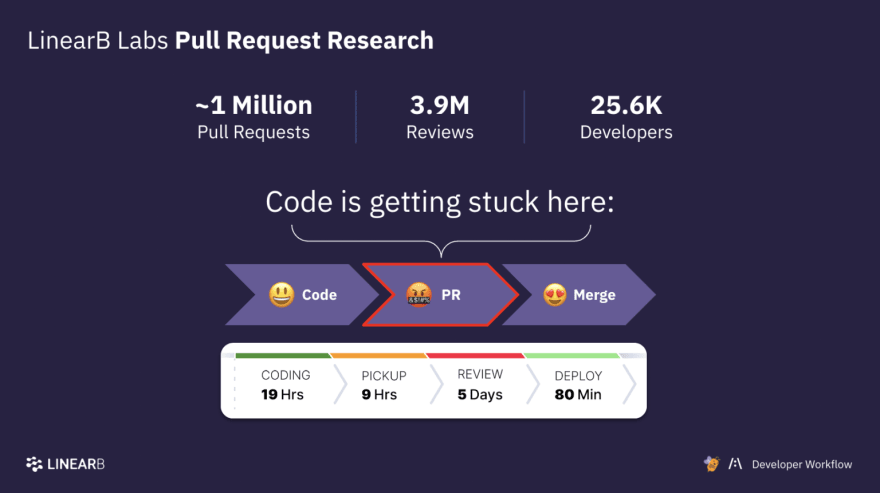

The code review and pull-request process continues to be a major bottleneck for developers and dev teams. In a recent study, LinearB inspected ~1,000,000 PRs and found that pull requests wait, on average, 4+ days before being picked up.

Studies show pull requests & code review pickups are the no. 1 bottleneck in cycle time

Although there have been significant gains in coding times and deployment times, the time it takes to start a pull-request review and the time it takes to complete one continue to disappoint.

The good news? There are things we can all do to break this bottleneck.

Context that allows developers to easily pick up pull requests is a game-changer

One key – and there are many keys – to improving both pull-request review speed and quality is providing as much up-front context to the reviewer as possible.

Examples of context proven to improve pickup time:

- Estimated Review Time

- Labeling low-risk changes like Documentation or Test changes only

- Labeling high-risk changes like Core services, API, Database, or Security

- Ticket or Issue links

- Test-coverage impact

What happens when estimated time of review (ETR) is added to pull requests?

Plain and simple: when a pull request is labeled with how long it will take to review, be it 5 minutes or 60 minutes, developers:

- Are more likely to pick up pull requests quickly

- Are more likely to have their reviews completed quickly

The longer a developer assumes a code review will take, the longer they will take to respond to it.

Why do pull requests with ETR get picked up faster and reviewed quickly?

Developers are knowledge workers, not cogs in a machine. By simply adding estimated time to review, you accommodate cognitive biases we all have. Specifically:

- Knowing the review time allows the reviewer to schedule the best time to start the review. The key is to find a time that is free of interruptions.

- Cognitive reload occurs when a PR review is started but not completed, typically due to an interruption. The reviewer then has to restart close to the beginning, which extends the review time.

- Conversely, the review can be rushed at the expense of quality. Fitting a 30-minute review into a 15-minute window results in quality gaps.

Two ways to add ETR to your pull requests

To get started, install gitStream on your repository from the GitHub Marketplace (https://docs.gitstream.cm/github-installation/) and add 2 workflow files to your repo:

- gitstream.cm

- GitHub Actions file

The default "gitstream.cm" file has estimated time to review already set up for you, but let’s dig into it a bit to see what it’s actually doing:

    automations:
      estimated_time_to_review:
        if:
          - true
        run:
          - action: add-label@v1
            args:
              label: "{{ branch | estimatedReviewTime }} min review"

Let’s look at this snippet of YAML in the gitstream.cm file. The "automations" keyword sets up the section where all your automations live, and you can have an unlimited number of them. The next line names the automation, in this case "estimated_time_to_review", which adds an estimated-time-to-review label to every PR run through gitStream.

Next is our conditional. In this case we just need it to be true, but you can use regular expressions, line counts, and a lot more here to check the code in the PR and then act on it.

After the conditional, we list the actions to run when the conditions are met.

In this case, we run the add-label@v1 action, which adds a label to the PR based on the arguments we give it. In the arguments (args), we provide a string that inserts the estimated time to review into the label text.

How does gitStream determine ETR?

The estimated review time is predicted using a machine-learning model developed at LinearB and trained on millions of PRs. It incorporates features including:

- The size of the PR, including details of additions, deletions, etc.

- The file types that were modified, and the extent of the changes on these files

- The codebase (repository) involved, and the familiarity of the PR issuer with the codebase

The review time prediction is then bucketed into a useful time range (e.g. 15-30 minutes) to provide the final estimate.
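As a rough illustration of what bucketing a prediction can look like, here is a minimal Python sketch. The bucket edges (5, 15, 30, and 60 minutes) and the function name `bucket_review_time` are assumptions made up for this example; this post does not say which ranges the LinearB model actually uses.

```python
from bisect import bisect_right

# Hypothetical bucket edges in minutes (an assumption for illustration only).
BUCKET_EDGES = [5, 15, 30, 60]


def bucket_review_time(predicted_minutes: float) -> str:
    """Map a raw predicted review time to a human-readable range."""
    if predicted_minutes < 0:
        raise ValueError("predicted_minutes must be non-negative")
    # Count how many edges are <= the prediction to find its bucket.
    idx = bisect_right(BUCKET_EDGES, predicted_minutes)
    if idx == 0:
        return f"under {BUCKET_EDGES[0]} minutes"
    if idx == len(BUCKET_EDGES):
        return f"{BUCKET_EDGES[-1]}+ minutes"
    return f"{BUCKET_EDGES[idx - 1]}-{BUCKET_EDGES[idx]} minutes"


if __name__ == "__main__":
    for minutes in (3, 22, 75):
        print(minutes, "->", bucket_review_time(minutes))
```

With these made-up edges, a raw prediction of 22 minutes lands in the "15-30 minutes" range, matching the kind of estimate described above.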

Now that we have our estimated time to review, let’s make it easier to see at a glance what kind of PR this is by adding some color to the labels.

Color-coded labels give reviewers a visual cue. Let’s also add some code to hold the estimated time to review so the label stays up to date. The code below adds this functionality. By adding the "calc" key, we get the estimatedReviewTime as it is updated for this branch.

    automations:
      estimated_time_to_review:
        if:
          - true
        run:
          - action: add-label@v1
            args:
              label: "{{ calc.etr }} min review"
              color: {{ 'E94637' if (calc.etr >= 20) else ('FBBD10' if (calc.etr >= 5) else '36A853') }}

    # To simplify the automation, this calculation is placed under a unique YAML key.
    # The result is assigned to `calc.etr` which is used in the automation above.
    # You can add as many keys as you like.
    calc:
      etr: {{ branch | estimatedReviewTime }}
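The nested conditional in the `color` expression can be hard to read at a glance, so here is the same threshold logic as a small Python sketch. The thresholds and hex codes are taken from the config above; the function names `label_color` and `review_label` are hypothetical and are not part of gitStream.

```python
def label_color(etr_minutes: float) -> str:
    """Return the label color for an estimated review time in minutes."""
    if etr_minutes >= 20:
        return "E94637"  # red: 20 minutes or more
    if etr_minutes >= 5:
        return "FBBD10"  # yellow: 5 to under 20 minutes
    return "36A853"  # green: under 5 minutes


def review_label(etr_minutes: int) -> dict:
    """Build the label text and color the automation would apply."""
    return {
        "label": f"{etr_minutes} min review",
        "color": label_color(etr_minutes),
    }


if __name__ == "__main__":
    for minutes in (3, 15, 45):
        print(review_label(minutes))
```

The checks run from the highest threshold down, which is why the Jinja expression tests `>= 20` before `>= 5`.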

Now you know how to add context to your PRs with gitStream. This article just scratches the surface of what is possible, so please check out the gitStream docs to learn more.

In future posts we’ll dive into using gitStream to do code review automation and having gitStream help find the right reviewers for your PR with reviewer automation.
