Cover Photo by Trent Pickering on Unsplash

Disclaimer: I work at Keyrock but views expressed here are my own. However, if you like the views, do go check out the company as it is full of awesome people and I have learned much whilst working there, including Rust.

If you haven't read Part 1, I strongly encourage you to do that first. It covers:

- Syntax

- Separation of Data and Code

- Type safety

- Declarative Programming and Functional Composition features

In this part we are going to cover:

- Evolved package management

- Concurrency ready std lib and great async support

- Clear, helpful compiler errors

- Zero cost abstractions (Optimisation)

- Energy efficiency

- WASM

- Tauri - High performance, secure, cross-platform desktop computing

Evolved package management

Here's something I don't see many articles noting in the "why Rust" conversation: package management. I remember when I was first learning C++ over 20 years ago: you had to create folders, add header files, reference the folders in compiler params/settings, go through manual linking, and work through a bunch of initially confusing activities like non-standard build tools that depended on the library creators' choices. Later it felt like cumbersome yak shaving that was just waiting for some automation.

Today C++ developers can use some nascent package management tools - however from the looks of it (I haven't used them - happy to hear more from today's C++ devs) it's still a painful area of the language. There's an interesting conversation here.

What about other languages? Well, I used Pip (Python) for many years and battled circular/changing dependencies and the (still) extra step of managing virtual environments. It was OK but left a lot to be desired [edit: I changed my mind - I recently completed the Udacity ML for Trading course and I spent more time fixing dependency issues than learning. Working with Pip is still a load of arse]. JavaScript was a small step forward but the solution there seemed to be "pile all the dependent code into a folder and compile everything". It evolved over time (tree-shaking!) but today "10k files in node_modules" is a well-known pain point and a running joke.

For me, Go-lang was the first language with well thought out package management and compilation tooling, with some foresight around name-spacing dependencies and linking (easily) to existing tools like GitHub. It was flexible, simple, and made it very easy to cross-compile for one target platform from another (a feature now spreading to other tool-chains). I have a friend who swears by Go because of its simple, effective tooling (and now there are package management options as the language evolves). Newer languages like Elixir now set the standard with package managers like Hex that have batteries included and make building and publishing packages easy.

The Rust language developers have made some excellent choices around tooling and fortunately learned from the history of package management pain in other languages. Cargo, Rust's build tool and package manager, handles downloading dependencies from crates.io, compiling, running tests, and publishing open-source crates, and it slots neatly into CI tool chains; for the most part the tooling is a help rather than a hindrance.

More info:

https://doc.rust-lang.org/book/ch01-03-hello-cargo.html

https://medium.com/blackode/how-to-write-elixir-packages-and-publish-to-hex-pm-8723038ebe76

https://news.ycombinator.com/item?id=19989188

Concurrency ready std lib and great async support

The Rust blog has a great article that lays out the Rust approach to concurrency, called Fearless Concurrency. It covers architectural and language principles and it's a good place to start towards understanding the features of Rust that support concurrent programming.

To try and summarise the fabulous concurrency toolkit is quite a challenge but here goes:

The std lib contains "concurrency primitives" made up of both tooling and types (threads, channels, Mutex, Arc, atomics, and the Send and Sync marker traits the compiler uses to check what may cross thread boundaries). They are built from the ground up to support the principles of fearless concurrency and to be thread-safe for those wanting to experiment with alternative methods/patterns of concurrent (or even parallel) programming.
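To make that a little more concrete, here is a minimal sketch using only the standard library: a few threads, a shared counter behind Arc<Mutex<...>>, and an mpsc channel for reporting back. The function name run_workers is purely illustrative. If you tried to share the counter through Rc instead of Arc, the compiler would refuse to build it, because Rc is not Send; that compile-time check is the "fearless" part.

```rust
use std::sync::mpsc;
use std::sync::{Arc, Mutex};
use std::thread;

/// Hypothetical helper: spawns `workers` threads that each add their id to a
/// shared counter and send their id back over a channel.
/// Returns (final counter value, sorted ids received).
fn run_workers(workers: usize) -> (usize, Vec<usize>) {
    // Arc gives shared ownership across threads; Mutex gives exclusive access.
    let counter = Arc::new(Mutex::new(0usize));
    // Multi-producer, single-consumer channel from the standard library.
    let (tx, rx) = mpsc::channel();

    let mut handles = Vec::new();
    for id in 0..workers {
        let counter = Arc::clone(&counter);
        let tx = tx.clone();
        // `move` transfers ownership of these clones into the new thread.
        handles.push(thread::spawn(move || {
            *counter.lock().unwrap() += id;
            tx.send(id).unwrap();
        }));
    }
    // Drop the original sender so the receiver's iterator ends
    // once every worker's sender has been dropped.
    drop(tx);

    let mut received: Vec<usize> = rx.iter().collect();
    for handle in handles {
        handle.join().unwrap();
    }
    received.sort();
    let total = *counter.lock().unwrap();
    (total, received)
}

fn main() {
    let (total, ids) = run_workers(4);
    println!("total = {total}, ids = {ids:?}");
}
```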

Two major projects (not in the std lib but extremely commonly used) stand out in the area of async programming: async-std and Tokio, no doubt familiar to anyone who has turned an eye towards Rust for a second too long. Async architecture in general is likely very familiar to JavaScript programmers, but in Rust there are some extra considerations (like ownership of the data that is moved into an async function). Tokio is fast becoming a heavily supported and road-tested async framework, with a thread-scheduling runtime "baked in" that has learned from the history of the Go, Erlang, and Java thread schedulers.

Tokio is a "take what you need" framework, whilst async-std started as an "everything in the box" solution. Today both have a lot of crossover, with micro async runtimes like smol becoming the foundation of one framework and optionally usable in the other. The ability to rip out a small sub-crate (dependent package) like smol and use it independently with ease never gets boring, by the way. It's a great way to include a test runtime in an async library without forcing the inclusion of a giant async framework.

Both have rich documentation and both should be explored as a learning point for those new to Rust.
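Part of those "extra considerations" is that a Rust future does nothing until something polls it, and that something is the runtime. Below is a deliberately tiny, hand-rolled block_on executor built only on std (it assumes a reasonably recent stable toolchain and the 2018 edition or later). It is nowhere near what Tokio or async-std do (no I/O reactor, no timers, no scheduling of many tasks), but it shows the moving parts, and how async move hands ownership of data to the future. The names block_on, ThreadWaker and total_len are illustrative.

```rust
use std::future::Future;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};
use std::thread::{self, Thread};

/// Waker that unparks the thread blocked in `block_on`.
struct ThreadWaker(Thread);

impl Wake for ThreadWaker {
    fn wake(self: Arc<Self>) {
        self.0.unpark();
    }
}

/// Toy executor: drives a single future to completion on the current thread.
fn block_on<F: Future>(future: F) -> F::Output {
    // Futures must be pinned before they can be polled.
    let mut future = Box::pin(future);
    let waker = Waker::from(Arc::new(ThreadWaker(thread::current())));
    let mut cx = Context::from_waker(&waker);
    loop {
        match future.as_mut().poll(&mut cx) {
            Poll::Ready(output) => return output,
            // Sleep until a waker calls `unpark`; a spurious wake-up just polls again.
            Poll::Pending => thread::park(),
        }
    }
}

/// An async fn that takes ownership of its input.
async fn total_len(words: Vec<String>) -> usize {
    words.iter().map(|w| w.len()).sum()
}

fn main() {
    let words = vec!["fearless".to_string(), "concurrency".to_string()];
    // `async move` moves `words` into the future: using `words` after this
    // line would be a compile error, because the future now owns the data.
    let future = async move { total_len(words).await };
    // Nothing has run yet; the future only makes progress when polled.
    let n = block_on(future);
    println!("{n}"); // prints 19
}
```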

However, this isn't even 50% of what's out there: Need raw parallel power (and maybe don't need an async runtime)? Check out Rayon. Need simple Actors for concurrent processing? Check out Actix. Need a larger Actor system for fault tolerance/CQRS messaging? Check out Riker. Damn, I sound like a YouTube advert 🤦 - for real though, this is the tip of the concurrency iceberg. There is so much more, and it's growing.
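Rayon's pitch is that changing .iter() to .par_iter() spreads the work across a thread pool. As a rough, std-only approximation of that idea (not Rayon's implementation, which adds a work-stealing pool and adaptive splitting), the sketch below sums chunks of a slice on scoped threads, which are stable since Rust 1.63. parallel_sum is a hypothetical helper, not a Rayon API.

```rust
use std::thread;

/// Hypothetical helper: splits `data` into roughly equal chunks and sums
/// each chunk on its own scoped thread, then adds up the partial sums.
fn parallel_sum(data: &[u64], threads: usize) -> u64 {
    if data.is_empty() {
        return 0;
    }
    let threads = threads.max(1);
    // Ceiling division so every element lands in some chunk (chunk_size >= 1).
    let chunk_size = (data.len() + threads - 1) / threads;
    // Scoped threads may borrow `data` because they are guaranteed to be
    // joined before `thread::scope` returns.
    thread::scope(|s| {
        let handles: Vec<_> = data
            .chunks(chunk_size)
            .map(|chunk| s.spawn(move || chunk.iter().sum::<u64>()))
            .collect();
        handles
            .into_iter()
            .map(|h| h.join().unwrap())
            .sum::<u64>()
    })
}

fn main() {
    let data: Vec<u64> = (1..=1_000).collect();
    println!("{}", parallel_sum(&data, 4)); // prints 500500
}
```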

More info:

https://blog.rust-lang.org/2015/04/10/Fearless-Concurrency.html

https://tokio.rs/blog/2019-10-scheduler

Clear, helpful compiler errors

(Image from: https://www.reddit.com/r/ProgrammerHumor/comments/8nwyma/c_is_a_beautiful_language/)

I remember wondering what "Syntax Error" meant when I was about 11, as it was the response to pretty much anything I typed into my Commodore 64. I didn't know what a "syntax" was, but it was fun to see this beige box respond to anything I typed, as if I was meeting a digital creature speaking an alien language and was at least having some kind of dialogue, in the hope it would one day understand me.

Later, as I learned various programming languages I learned the art of reading errors and stack traces and how to decipher the junk that compilers spewed out when everything went wrong. It was a strange "normal" and a part of learning to code in many languages, especially low level languages.

When I first came across Evan Czaplicki's "Compiler Errors for Humans" article and approach (expressed in his landmark FP front-end language, Elm) I was gobsmacked. I had never realised how, or why, I had grown to accept the ridiculous, complicated, and often unintelligible junk that passed for compiler "messaging" as the norm.

It feels like applying the common sense of user-experience-centric design to compiler (or interpreter) error messages became a tidal wave in new languages. I remember that when I was learning Elixir, the community forums were discussing the desire for Elm-like errors (today Elixir's error messages are wonderful too, by the way).

Rust has very much adopted this approach from the ground up, and its error information is extremely helpful and constantly improving. The complexities of the language, which generally stem from its vast menu of features, need this type of support, and while the initial experience can be frustrating, like a nagging, fusty computer science pedant lecturing you on every save, eventually you will become friends with it and it will be... emotional (I promise).

This is, however, an inadequate summary of the errors, notes, critique, and alternative solutions the compiler suggests. One of the best reactions came from a developer I gave an initial exercise to help him learn Rust. He had a background in Go-lang, PHP, JavaScript and more. When we had a catch-up early on he said "It's only been 3 days and I'm in love with the compiler." That's a more adequate summary.

What's creating this reaction is that, in Rust, the absence of compiler errors carries an implicit guarantee: as long as there is no badly managed unsafe code, your code is type-safe and memory-safe, and that extends to thread safety in the sense that safe code cannot contain data races when data is passed between threads (it won't save you from deadlocks or logic bugs, though). This increases confidence in the dev and makes refactoring far less dangerous. I even have interns straight out of university working on critical production code, and I know that the compiler has got our backs.
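As a small illustration of the kind of mistake the borrow checker catches: holding a reference into a Vec while pushing to it could leave that reference dangling if the vector reallocates, so rustc rejects it with error E0502. In the sketch below the commented-out line is the rejected version; the rest compiles because the borrow has ended before the push. first_then_push is a made-up example function.

```rust
/// Made-up example: returns the first score (if any) and the list after
/// appending `extra`.
fn first_then_push(mut scores: Vec<u32>, extra: u32) -> (Option<u32>, Vec<u32>) {
    // `first` is an Option<&u32> that borrows `scores` immutably.
    let first = scores.first();
    // Uncommenting the next line fails to compile with error[E0502]:
    // cannot borrow `scores` as mutable because it is also borrowed as immutable.
    // (Pushing may reallocate the buffer and leave `first` dangling.)
    // scores.push(extra);
    let first_value = first.copied(); // last use of the immutable borrow
    scores.push(extra); // accepted: the borrow has already ended
    (first_value, scores)
}

fn main() {
    let (first, scores) = first_then_push(vec![10, 20, 30], 40);
    println!("{first:?} {scores:?}"); // prints Some(10) [10, 20, 30, 40]
}
```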

More info:

https://ferrous-systems.com/blog/the-compiler-is-your-friend/

https://www.reddit.com/r/rust/comments/8lse7e/rust_compiler_errors_are_appreciated_apparently_d/

Example advanced response:

https://twitter.com/b0rk/status/954366146505052160?s=20&t=Ot4RtYCeRDbotl-TpoaPog

Zero cost abstractions

I was very sad to hear of the death of Joe Armstrong in 2019. He was without doubt a pioneer in our wider industry of software engineering. Joe had some classic quotes he is known for and I love them all. However, I have a bone to pick with this one:

"Make it work, then make it beautiful, then if you really, really have to, make it fast. 90 percent of the time, if you make it beautiful, it will already be fast. So really, just make it beautiful!"

— Joe Armstrong, quoted by Martin Logan in Erlang and OTP in Action, 2010, Manning

I'm going to say this quote works in Erlang or Elixir and maybe some other contexts but it's not a generalisation we can apply to all languages, especially imperative languages (see 'Declarative Programming and Functional Composition features' in Part 1). Try making "beautiful" optimised code in C++ or Go-lang that still maintains the implicit knowledge in the code required for long term maintenance by a team of devs. Usually there will be some "cheats" or alternative methods of looping, buffering data, sometimes referencing data in an unsafe way, or forced, dangerous tail-recursion, where the language doesn't support this feature in an efficient way. Even watching experts writing manually optimised code in some languages, the outcome is not intuitive and its ugliness is often hidden behind a veil of "that's the pattern to use there". The worst is often branchless-code fanatics trying to wedge a transformation into a 0 or 1 outcome to avoid a "costly" if branch ( 🤦 ).

Added to this general lack of aesthetic focus in low-level code is the overwhelming cognitive load added to the codebases where this optimisation has taken place. I can hear the responses already (in fact I heard this in an interview once): "but there are tools to alleviate this and the way I write code means I don't have that problem." I'm not going to respond much there except to say that as soon as a person is thinking about the code they write, rather than code that can be written and understood by a team of people with different levels of experience, we're already on different pages regarding teamwork and scalable productivity.

Rust helps to alleviate the dangers of this minefield of optimisation and yak-shaving boiler-plate coding by providing an ever-evolving set of high-level "primitive" functions (especially around functional declarative features) that are said to be "zero cost abstractions" (a concept that will be familiar to C++ devs, in whose world the term originated).

What does this mean? In general, it means that in the vast majority of cases you will not be able to write something more efficient by hand. There are some exceptions and they will be addressed over time, but in general it works this way: the compiler looks at code that uses a loop or a high-level function and "unfolds" it to be as fast as it can be. For example, a loop that runs twice may be unrolled into the loop body repeated twice, or a generic function may be generated once per concrete type at compile time (monomorphisation) rather than resolved dynamically at runtime (a dev can also explicitly choose dynamic dispatch in the code). And as the language evolves, the standard methods to collate, parse, or (by default) sort/search data improve over time, and we get that optimisation for free (zero cost).
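Here is a small sketch of the two ideas in that paragraph. The iterator chain and the hand-written loop compute the same thing, and in optimised builds the compiler can typically reduce the iterator version to an equivalent plain loop (worth checking the generated assembly, e.g. on Compiler Explorer, before relying on it for a specific hot path). The generic total_area is monomorphised (one copy per concrete type, static dispatch), while total_area_dyn opts into a single copy with runtime dispatch through a vtable via dyn. The Area trait and the shapes are invented for illustration.

```rust
/// Declarative version: filter/map/sum iterator adaptors.
fn sum_even_squares(values: &[u64]) -> u64 {
    values.iter().filter(|&&v| v % 2 == 0).map(|&v| v * v).sum()
}

/// Imperative equivalent, for comparison.
fn sum_even_squares_loop(values: &[u64]) -> u64 {
    let mut total = 0;
    for &v in values {
        if v % 2 == 0 {
            total += v * v;
        }
    }
    total
}

trait Area {
    fn area(&self) -> f64;
}

struct Square(f64);
struct Rect(f64, f64);

impl Area for Square {
    fn area(&self) -> f64 {
        self.0 * self.0
    }
}

impl Area for Rect {
    fn area(&self) -> f64 {
        self.0 * self.1
    }
}

/// Static dispatch: the compiler generates a separate copy of this function
/// for each concrete `T` it is called with (monomorphisation).
fn total_area<T: Area>(shapes: &[T]) -> f64 {
    shapes.iter().map(|s| s.area()).sum()
}

/// Dynamic dispatch, chosen explicitly with `dyn`: one copy of the function,
/// each `area` call resolved through a vtable at runtime.
fn total_area_dyn(shapes: &[Box<dyn Area>]) -> f64 {
    shapes.iter().map(|s| s.area()).sum()
}

fn main() {
    let values: [u64; 6] = [1, 2, 3, 4, 5, 6];
    // Both print 56 (4 + 16 + 36).
    println!("{} {}", sum_even_squares(&values), sum_even_squares_loop(&values));

    let squares = [Square(1.0), Square(2.0)];
    let mixed: Vec<Box<dyn Area>> = vec![Box::new(Square(2.0)), Box::new(Rect(2.0, 3.0))];
    println!("{} {}", total_area(&squares), total_area_dyn(&mixed)); // prints 5 10
}
```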

What's the outcome? We get to maintain lovely high-level declarative code without having to muddy the waters with imperative optimisation cheats (that will need maintaining and reviewing in the future), which often impede the transfer of the author's intent to the reader of the code. It increases the ability to code like a systems thinker (yes, that's a self-plug link but I think it's fairly relevant and interesting here, as I wrote that article before I learned Rust).

I often see imperative developers diving into the abstractions, finding issues with efficiency or making changes, and pasting the modified code into their own projects as if they have achieved something great with this approach. That thinking is a little obtuse at best and a behavioural Not Invented Here mindset problem at worst. There are exceptions to every rule but in general, code is written for humans: to communicate an intent towards solving a data processing problem. This implies that code is written for knowledge transmission, and therefore we should embrace high-level abstractions and cleaner code wherever possible. Rust enthusiastically embraces this philosophy.

More info:

https://boats.gitlab.io/blog/post/zero-cost-abstractions/

https://blog.rust-lang.org/2015/05/11/traits.html

Energy efficiency

With eye-catching headline figures like "98% more efficient than Python" and "23% less energy than C++", Rust is paving the way towards a greener future with the ability to reduce energy usage in server farms on a gigantic scale. A 30% reduction in the electricity bill for your Raspberry Pi might seem borderline pointless, but imagine a 30% saving for a web services business running on tight margins. That's considerable, especially with the oncoming energy crisis hitting some Western European countries.

But is it all about money? For business maybe, but consider this: it was recently claimed (and much debated) that, based on today's technology requirements, code, architecture, sources of energy, etc., watching a 30-minute show on Netflix could consume a small but significant amount of energy. I'm avoiding stating the amount because of the intense debates it creates; go read about it yourself.

Regardless of the exact amount, the implication is thought-provoking: when I use a digital service, I am increasing my carbon footprint by amounts that can differ enormously depending on the language and architectural choices of the digital service provider!

Note: I wrote the section above as the Russian invasion of Ukraine was just getting started. Today we see spiking energy prices and a looming energy crisis in the UK, where I live most of the time. The ability of a new technology to drastically lower energy consumption in today's world is not merely an added extra; it's a competitive factor that will drive a switch to Rust in many industries, and for tooling across the board (see 'Everybody else's tooling' in Part 3).

More info:

https://greenlab.di.uminho.pt/wp-content/uploads/2017/10/sleFinal.pdf

https://haslab.github.io/SAFER/scp21.pdf

https://www.theregister.com/2021/11/30/aws_reinvent_rust/

https://energyinnovation.org/2020/03/17/how-much-energy-do-data-centers-really-use/

WASM

In JavaScript, there is a beautiful, elegant, highly expressive language that is buried under a steaming pile of good intentions and blunders.

— Douglas Crockford, JavaScript: The Good Parts (ed. 2008)

JavaScript, or ECMAScript (its true name, which around 0% of people use), is my guilty pleasure. It really is an expressive tool that allows free-flowing thought to bleed into the editor, with functional composition features, easy-to-use async, and simple baked-in handling of JSON; what's not to like? Well... a lot, actually. There are many ways to discuss this, but the simplest is also the most frightening: I once read somewhere that anything that can be structured and written in a programming language will eventually appear in the code (I can't remember the reference; I'll add it when I find it one day). This means all the crazy stuff that's possible with JavaScript (e.g. confusing usage of syntax, accidental mathematical errors, parsing quirks, implicit type conversion to the wrong type, overly flexible architecture, silent errors, missing semi-colon issues etc.) will eventually turn up as bugs in JS code everywhere.

Anyone who has spent time working with JS knows there are ways to work around these issues that come with experience, but in general this sounds like 😱, and I feel it goes some way towards explaining why front-end development is 10x more complicated than any back-end-only dev already thinks it might be!

However, the JavaScript engines in nearly all major browsers can also run WebAssembly (WASM), a compact, low-level binary instruction format that runs alongside JavaScript in the same engine. Today, languages other than JavaScript can compile to WebAssembly and ship it to the browser, and it already works great everywhere. I mean really great:

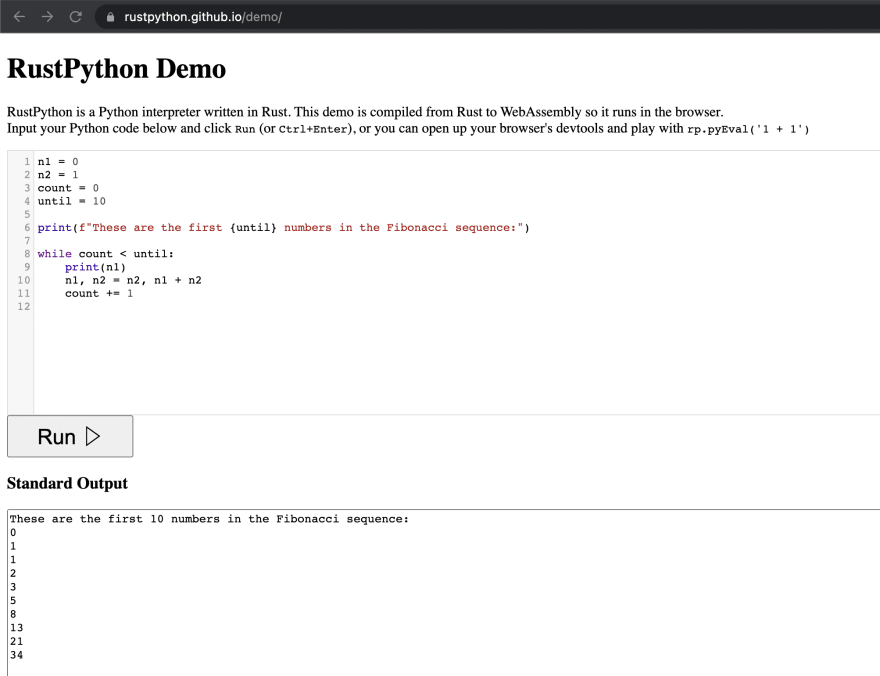

For example, this is a Python interpreter, written completely in Rust, then cross-compiled to WASM, and loaded into the browser as a component: https://rustpython.github.io/demo/.

So the question is this: if all the benefits of Rust can also be applied to frontend code as the frameworks in this area develop, why would people continue to choose JavaScript frameworks for web apps?

What am I saying? That Rust will end the era of JS in the browser? I don't know - probably not. JS can be really fun too. But there already is an Angular Rust project, WASM integration into some desktop application frameworks, and several rapidly growing WASM projects, so things are moving in an interesting direction. At my current work we were able to compile an entire backend Rust service into a WASM module that gets loaded into a Vue-based UI. We then stream the context that the backend service is receiving to the WASM module, and we can "see" the output the service will be producing in (throttled) real time, all in the browser, without the backend service itself having to deliver that data. It's a very interesting application of Rust and provokes thoughts of new alternative architectures.
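For readers wondering what "compiling Rust to WASM" looks like at its very simplest, here is a hedged sketch that avoids extra tooling: a plain function exported with the C ABI. Assuming a library crate with crate-type = ["cdylib"] in Cargo.toml, built with cargo build --target wasm32-unknown-unknown --release (after rustup target add wasm32-unknown-unknown), the function should show up in the module's exports, and JavaScript could call it after loading the module with WebAssembly.instantiateStreaming. mid_price is a hypothetical name, not the service described above, and real projects usually reach for wasm-bindgen once strings, structs, or callbacks need to cross the boundary.

```rust
// Hypothetical exported function; `mid_price` is an illustrative name only.
// `#[no_mangle]` keeps the symbol name stable so it appears as-is in the
// module's exports. (In the Rust 2024 edition this is written `#[unsafe(no_mangle)]`.)
#[no_mangle]
pub extern "C" fn mid_price(bid: f64, ask: f64) -> f64 {
    (bid + ask) / 2.0
}

// `main` is only here so the sketch also runs natively; a cdylib built for
// wasm32-unknown-unknown would just export `mid_price`.
fn main() {
    println!("{}", mid_price(100.0, 101.0)); // prints 100.5
}
```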

More info:

https://www.decipherzone.com/blog-detail/front-end-developer-roadmap-2021

https://webassembly.org/roadmap/

https://github.com/MoonZoon/MoonZoon

Tauri - High performance, secure, cross-platform desktop computing

Enabled by the emergence of the webview library, Tauri opens a door that, until now, was half closed unless we were willing to sacrifice some security, performance, choice of tooling, performance, memory management and often performance... Cross-platform desktop applications have been a veritable holy grail, just out of reach for those not prepared to sacrifice language choice, speed, concurrency features, security, and memory usage. Yes, I'm talking about that incredible laptop-fan-stimulating heap of JS junk: Electron.js (Hey, it's just an opinion. Leave me alone). Electron was a great idea and is still a good option for desktop applications where security or performance is not a major concern, like the WhatsApp desktop client (😇). In fact it's used for a considerable number of apps that you are probably using right now, including one used by many Rust developers, including me, because it's pretty awesome: Visual Studio Code 😞.

Today, however, we have Tauri. I'm not going to fly the Tauri flag too much here, except to say that it's an extremely interesting project. Grab your beverage of choice and join Denjell from the Tauri Core Team at their fireside video where he presents what they are working on. Seriously, it's another Rust revolution driver: at the time of writing it's the fourth highest ranked Rust project, even above projects you may know well such as rust-analyzer, Actix, and Tokio.

I'll say it explicitly for those skim-reading and missing the point: rapid, localised desktop Rust applications, with a high-speed UI that leverages the skills of almost any front-end framework developer, are about to become a reality. It won't be surprising to see every front-end dev adding Rust to their already ridiculously overweight tool-belts.

More info:

https://evanli.github.io/Github-Ranking/Top100/Rust.html

Creating Tiny Desktop Apps With Tauri and Vue.js, Smashing Magazine, Kelvin Omereshone, July 2020

Thanks for reading up to this point. If this article is interesting, or even infuriating, I can promise more of the same in Part 3 where I will cover:

- Macros!

- Cryptocurrency and Blockchains

- Everybody else's tooling

- Speed

- No garbage collection

- Memory safety features
