This post was originally published on Notion. Click here if you prefer to read it on the Notion page, which has better readability.

Introduction

This blog post illustrates a development cycle using a Django app container. I assume readers are already somewhat familiar with Docker and docker-compose. Although I used Django for app development, the approach is language-agnostic since the post is about containerized application deployment.

The walkthrough is divided into three parts, one for each of three environments. The first part describes the architecture of the app (API and async worker) and how it is deployed in a local environment. The second part shows how to deploy the Docker containers to the cloud on a single EC2 instance as a staging environment. The third part illustrates how to convert the traditional EC2 deployment into ECS using Fargate, with GitHub Actions, for the prod environment.

local - run docker containers on desktop/laptop with sqlite and redis server using docker-compose

staging - run docker containers on single ec2 instance with mysql RDS and ElastiCache

prod - convert staging setup to ECS Fargate

For part1, click here.

How to deploy django app to ECS Fargate Part1

Jinwook Baek ・ Jul 3 ・ 4 min read

You can skip to part3 if you are already familiar with AWS architectures.

How to deploy django app to ECS Fargate part3

Jinwook Baek ・ Jul 3 ・ 12 min read

Staging Infra Setup

Before going straight to ECS deployment, we will set up a staging env to test the application using an ec2 compute instance and other AWS cloud services. If you are familiar with AWS infra and confident with ECS setup, you can skip this part. However, I will be using the same VPC, redis and mysql for the production env, so it is worthwhile to take a look at the setup. (You should use a different VPC, redis and mysql for your actual production deployment.)

The staging cloud architecture consists of the following AWS services.

- VPC with public and private subnet

- EC2 instance (in public subnet)

- ALB in front of ec2 instance

- RDS mysql (in private subnet)

- ElastiCache - Redis (in private subnet)

Refer to the CloudFormation template for detailed configurations.

kokospapa8/ecs-fargate-sample-app


Preview

Network - VPC

"VpcEcsSample": {
  "Type": "AWS::EC2::VPC",
  "Properties": {
    "CidrBlock": "172.10.0.0/16",
    "InstanceTenancy": "default",
    "EnableDnsSupport": "true",
    "EnableDnsHostnames": "true",
    "Tags": [ { "Key": "Name", "Value": "ecs-sample" } ]
  }
},
"EcsSamplePrivate1": {
  "Type": "AWS::EC2::Subnet",
  "Properties": {
    "CidrBlock": "172.10.11.0/24",
    "AvailabilityZone": "us-west-2a",
    "VpcId": { "Ref": "VpcEcsSample" },
    "Tags": [ { "Key": "Name", "Value": "ecs-sample-private 1" } ]
  },
  "DependsOn": "VpcEcsSample"
},
"EcsSamplePublic1": {
  "Type": "AWS::EC2::Subnet",
  "Properties": {
    "CidrBlock": "172.10.1.0/24",
    "AvailabilityZone": "us-west-2a",
    "VpcId": { "Ref": "VpcEcsSample" },
    "Tags": [ { "Key": "Name", "Value": "ecs-sample-public 1" } ]
  },
  "DependsOn": "VpcEcsSample"
},
"IgwEcsSample": {
  "Type": "AWS::EC2::InternetGateway",
  "Properties": {
    "Tags": [ { "Key": "Name", "Value": "ecs-sample IGW" } ]
  },
  "DependsOn": "VpcEcsSample"
},
"NATGateway": {
  "Type": "AWS::EC2::NatGateway",
  "Properties": {
    "AllocationId": { "Fn::GetAtt": [ "EipNat", "AllocationId" ] },
    "SubnetId": { "Ref": "EcsSamplePublic1" }
  },
  "DependsOn": [ "EcsSamplePublic1", "EipNat", "IgwEcsSample", "GatewayAttachment" ]
},

RDS

"RDSCluster": {
  "Type": "AWS::RDS::DBCluster",
  "Properties": {
    "MasterUsername": { "Ref": "DBUsername" },
    "MasterUserPassword": { "Ref": "DBPassword" },
    "DBClusterIdentifier": "ecs-sample",
    "Engine": "aurora",
    "EngineVersion": "5.6.10a",
    "EngineMode": "serverless",
    "ScalingConfiguration": {
      "AutoPause": true,
      "MinCapacity": 4,
      "MaxCapacity": 8,
      "SecondsUntilAutoPause": 1000
    }
  }
},

Redis

"cacheecssample001": {
  "Type": "AWS::ElastiCache::CacheCluster",
  "Properties": {
    "AutoMinorVersionUpgrade": "true",
    "AZMode": "single-az",
    "CacheNodeType": "cache.t2.micro",
    "Engine": "redis",
    "EngineVersion": "5.0.6",
    "NumCacheNodes": "1",
    "PreferredAvailabilityZone": "us-west-1b",
    "PreferredMaintenanceWindow": "thu:02:30-thu:03:30",
    "CacheSubnetGroupName": { "Ref": "cachesubnetecssampleredissubnetgroup" },
    "VpcSecurityGroupIds": [ { "Fn::GetAtt": [ "sgecssampleredis", "GroupId" ] } ]
  }
},

This template is preconfigured for the us-west-2 region.

Migrate docker images to ECR

Before setting up the infrastructure, I will move the docker images to Elastic Container Registry (ECR). If you still want to use Docker Hub or another private docker image repository, you can skip this part.

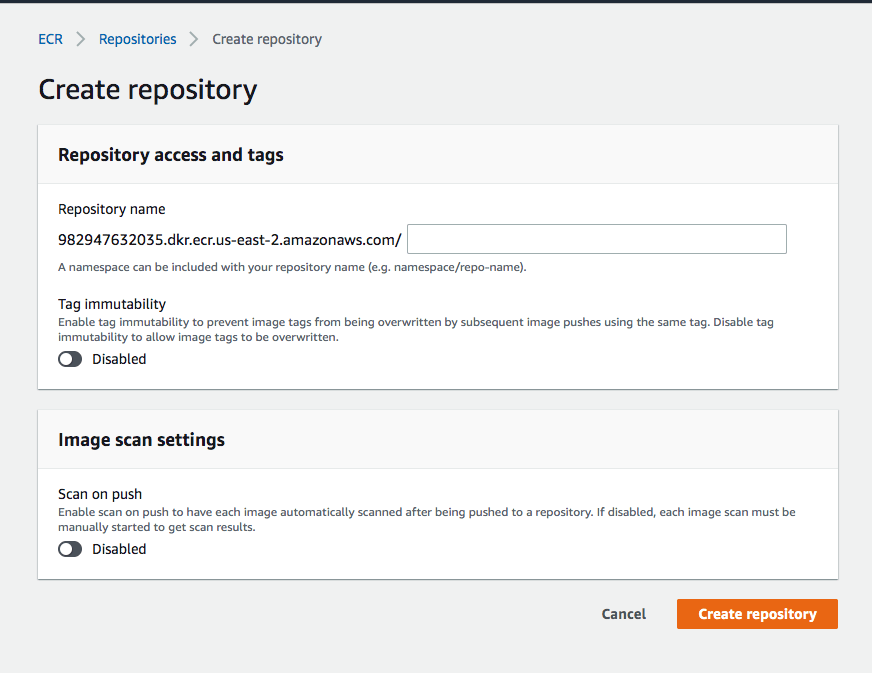

ecr setup

Getting started with Amazon ECR using the AWS CLI

log into ecr

$ aws ecr get-login-password --region us-east-2 | docker login --username AWS --password-stdin <aws_account_id>.dkr.ecr.us-east-2.amazonaws.com

> Login Succeeded

Create repository with cli

$ aws ecr create-repository --repository-name ecs-sample-nginx --region us-east-2
$ aws ecr create-repository --repository-name ecs-sample-api --region us-east-2
$ aws ecr create-repository --repository-name ecs-sample-worker --region us-east-2

or create repository on the console

Build the docker images if you have not built them in the previous post

```bash

$ docker build -f config/app/Docker_base -t ecs-fargate-sample_base:latest .

$ docker build -f config/app/Docker_app -t ecs-fargate-sample_app:latest .

$ docker build -f config/app/Docker_worker -t ecs-fargate-sample_worker:latest .

```

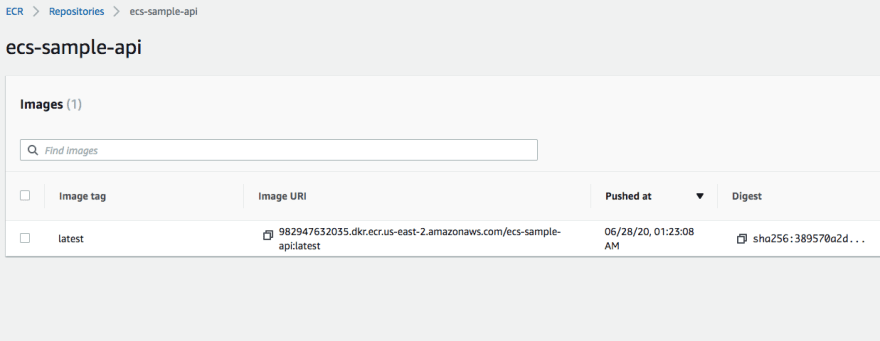

Tag and push images

```bash

# the registry region must match the region where the repositories were created (us-east-2 above)
$ docker tag ecs-fargate-sample_base:latest <aws_account_id>.dkr.ecr.us-east-2.amazonaws.com/ecs-sample-api:base
$ docker push <aws_account_id>.dkr.ecr.us-east-2.amazonaws.com/ecs-sample-api:base
$ docker tag ecs-fargate-sample_app:latest <aws_account_id>.dkr.ecr.us-east-2.amazonaws.com/ecs-sample-api:latest
$ docker push <aws_account_id>.dkr.ecr.us-east-2.amazonaws.com/ecs-sample-api:latest
$ docker tag ecs-fargate-sample_worker:latest <aws_account_id>.dkr.ecr.us-east-2.amazonaws.com/ecs-sample-worker:latest
$ docker push <aws_account_id>.dkr.ecr.us-east-2.amazonaws.com/ecs-sample-worker:latest

```

Check the console for the images
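The tag and push commands above all follow the same URI pattern. As a small sketch of that pattern (the helper names `ecr_image_uri` and `tag_and_push_commands` and the account ID `123456789012` are placeholders of mine, not part of the sample repository), the commands could be generated like this:

```python
from typing import List


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str = "latest") -> str:
    """Build the full ECR image URI used by `docker tag` and `docker push`."""
    registry = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    return f"{registry}/{repository}:{tag}"


def tag_and_push_commands(local_image: str, remote_uri: str) -> List[str]:
    """Return the two shell commands needed to publish a local image."""
    return [
        f"docker tag {local_image} {remote_uri}",
        f"docker push {remote_uri}",
    ]


if __name__ == "__main__":
    # 123456789012 is a placeholder account ID; use your own.
    uri = ecr_image_uri("123456789012", "us-east-2", "ecs-sample-api")
    for command in tag_and_push_commands("ecs-fargate-sample_app:latest", uri):
        print(command)
```

Keeping the region as a parameter makes it harder to log in to one region and push to another by accident.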

IAM role

Your ec2 instances need access to ECR. Instead of embedding an AWS access key in the instance, I will attach an IAM role.

ecs-sample-ec2_role (optional)

- I will give permission to read from ECR

Network

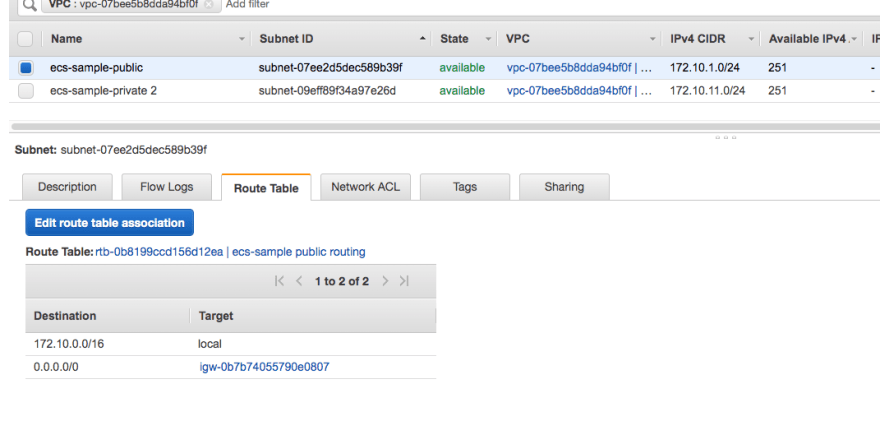

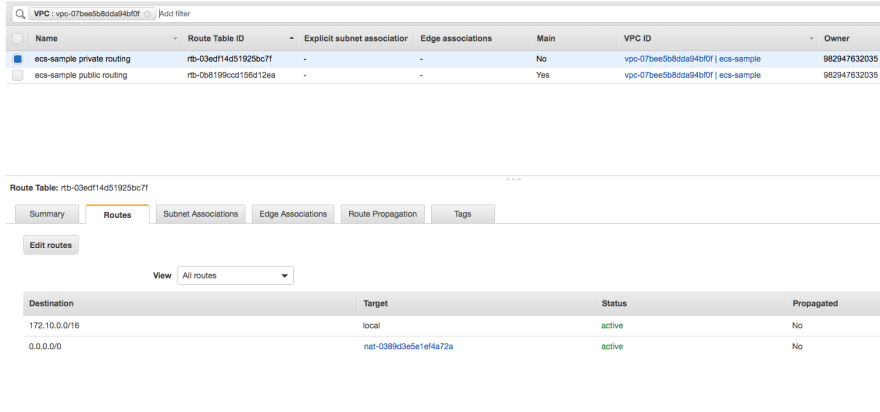

VPC

I have created a VPC with CIDR 172.10.0.0/16; refer to the attached images. For production, I advise you to create more subnets across multiple AZs for better availability. For the sake of the post, I have used just two Availability Zones (serverless mysql needs at least two AZs).

- Public subnet

CIDR - 172.10.1.0/24, 172.10.2.0/24

Components

- ec2 instance for `api`

- NAT gateway for `worker` instance

- Private subnet

CIDR - 172.10.11.0/24, 172.10.12.0/24

Components

- mysql

- ElastiCache

- ec2 instance for `worker`
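A quick way to sanity-check a subnet layout like the one above is Python's standard `ipaddress` module. This is only a sketch: the subnet labels (`public-1`, `private-1`, and so on) and the function name `validate_subnets` are made up for illustration, while the CIDR values are the ones listed above.

```python
import ipaddress

VPC_CIDR = "172.10.0.0/16"
SUBNETS = {
    "public-1": "172.10.1.0/24",
    "public-2": "172.10.2.0/24",
    "private-1": "172.10.11.0/24",
    "private-2": "172.10.12.0/24",
}


def validate_subnets(vpc_cidr, subnets):
    """Return a list of problems; an empty list means the layout is consistent."""
    vpc = ipaddress.ip_network(vpc_cidr)
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in subnets.items()}
    problems = []
    # Every subnet has to sit inside the VPC range.
    for name, net in networks.items():
        if not net.subnet_of(vpc):
            problems.append(f"{name} ({net}) is outside {vpc}")
    # No two subnets may share addresses.
    names = sorted(networks)
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if networks[first].overlaps(networks[second]):
                problems.append(f"{first} overlaps {second}")
    return problems


if __name__ == "__main__":
    print(validate_subnets(VPC_CIDR, SUBNETS) or "subnet layout looks consistent")
```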

Security group

I have added minimal security measures for the sake of the post. This is just a sample; you should not use it for production.

Setup

ecs-sample-VPC

- Opened all ports to public (not safe)

ecs-sample-loadbalancer

- 80 and 443 to public

- 80 with ec2-api instance

ecs-sample-mysql

- 3306 with ec2

ecs-sample-ec2-api and ecs-sample-ec2-worker (they should be different, but I used a single security group for both ec2 instances; port 80 access should be blocked for the instance that does not need it)

- 3306 mysql

- 6379 redis

- 80 for ALB and public ip access

- 22 for ssh

ecs-sample-redis

- 6379 with ec2 ### ALB

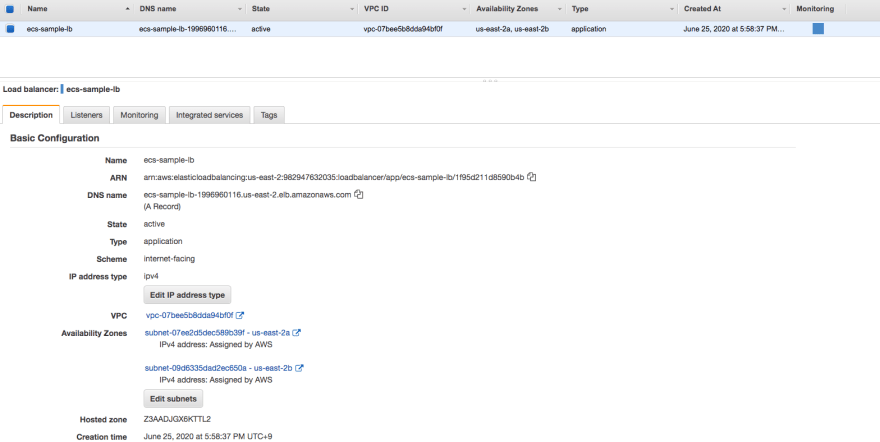

Created Alb with simple setup attached to public subnet. We don't have instance yet so just created target group with no instance attached yet.

- configuration

- name: ecs-sample-lb

- Listener: port 80

- Security group: ecs-sample-lb

- VPC and subnet - public subnet created above

Database

RDS - Mysql

I will use serverless mysql instead of a traditional mysql instance to minimize cost. (Serverless mysql only supports mysql engine versions up to 5.6.)

- configuration

- database - sample

- username - admin

- network - VPC and private subnet created above

Take note of your mysql endpoint and password; we will use them later for the env variable setup.

ElastiCache - Redis

Take note of your primary endpoint; we will use it later for the env variable setup.

Compute instances

We will start two ec2 instances: one for the api in a public subnet and another for the worker in a private subnet.

EC2 instance - API

- configurations

- AMI : Ubuntu image 20.04 LTS

- Network: public subnet

- auto-assign ip : enabled

Ec2 instance - worker

This instance is basically the same as the api instance, but it needs to be in a private subnet. I advise making an AMI from the api instance and launching it in the private subnet. Since there will be no public ip attached, you need to tunnel through the api or a bastion instance to connect to the instance via its private ip. (You can use the following method to connect directly since we have a NAT gateway deployed.)

Deployment

Once the instance is up and running, we are ready to deploy docker containers.

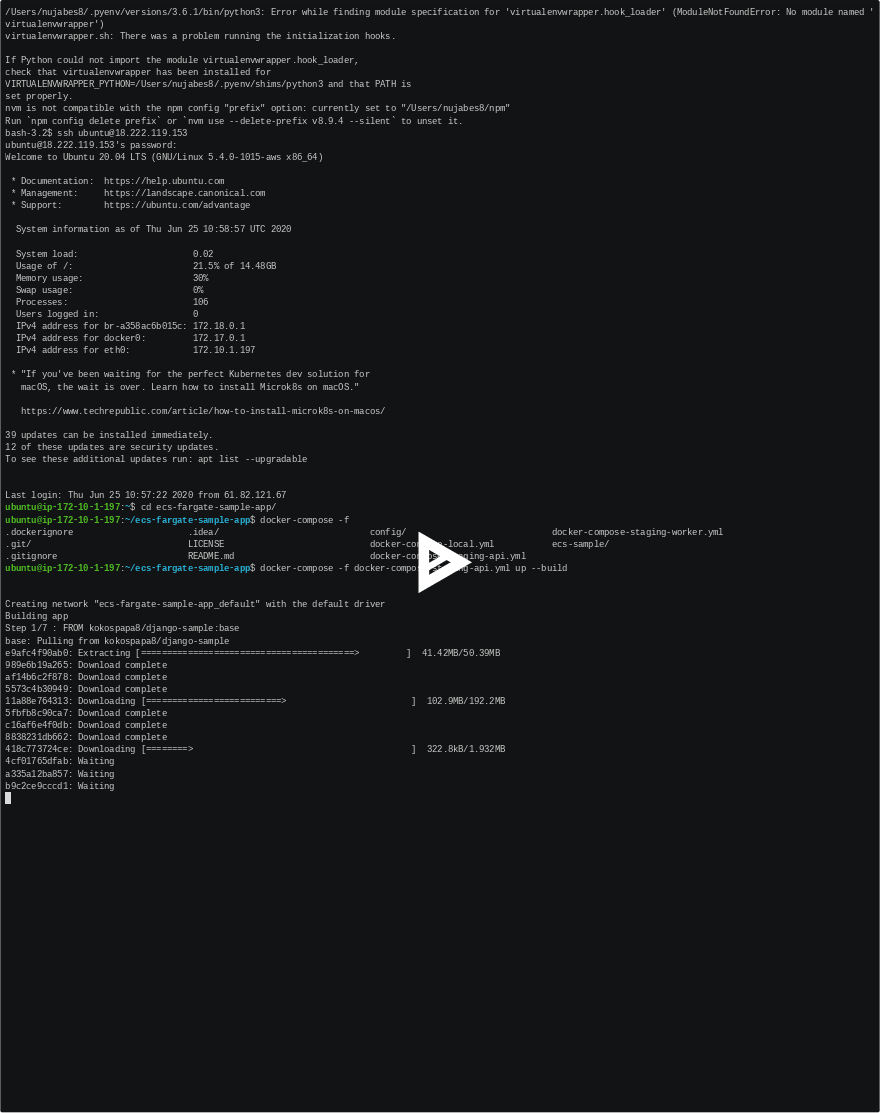

Let's ssh into the ec2 instance.

ssh -i "/path/to/keyfile/" ubuntu@<public_ip>

Docker setup

Next, you need to install Docker Engine and docker-compose. Refer to the following links.

install steps

Install Docker Engine on Ubuntu

Post-installation steps for Linux

# docker engine
$ sudo apt-get update
$ sudo apt-get install \
    apt-transport-https \
    ca-certificates \
    curl \
    gnupg-agent \
    software-properties-common
$ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -
$ sudo add-apt-repository \
    "deb [arch=amd64] https://download.docker.com/linux/ubuntu \
    $(lsb_release -cs) \
    stable"
$ sudo apt-get update
$ sudo apt-get install docker-ce docker-ce-cli containerd.io

# docker-compose
$ sudo curl -L \
    "https://github.com/docker/compose/releases/download/1.26.0/docker-compose-$(uname -s)-$(uname -m)" \
    -o /usr/local/bin/docker-compose
# make the downloaded binary executable
$ sudo chmod +x /usr/local/bin/docker-compose

Environment variables

You need to set a couple of environment variables for staging since we are using redis and mysql.

Please take a closer look at settings/staging.py for DB_USER and DB_NAME. If they are different, update the file accordingly.

export SECRET_KEY=""

export DB_HOST=""

export DB_PASSWORD=""

export REDIS_HOST=""
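As a rough illustration of how these four variables might be consumed, here is a sketch of a settings helper. It is not the actual `settings/staging.py` from the repository: the function name `build_staging_settings`, the `REDIS_URL` key, and the idea of overriding `DB_NAME` and `DB_USER` through the environment are my assumptions. The defaults `sample` and `admin` come from the RDS configuration above.

```python
import os


def build_staging_settings(env=os.environ):
    """Assemble database and Redis settings from environment variables."""
    required = ("SECRET_KEY", "DB_HOST", "DB_PASSWORD", "REDIS_HOST")
    missing = [name for name in required if not env.get(name)]
    if missing:
        # Fail early instead of starting the app with a half-configured backend.
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {
        "SECRET_KEY": env["SECRET_KEY"],
        "DATABASES": {
            "default": {
                "ENGINE": "django.db.backends.mysql",
                "HOST": env["DB_HOST"],
                "PORT": "3306",
                "NAME": env.get("DB_NAME", "sample"),  # assumed override, default from the RDS setup
                "USER": env.get("DB_USER", "admin"),   # assumed override, default from the RDS setup
                "PASSWORD": env["DB_PASSWORD"],
            }
        },
        # Hypothetical key; adapt it to however your cache or task queue is configured.
        "REDIS_URL": f"redis://{env['REDIS_HOST']}:6379/0",
    }
```

Failing at startup when a variable is empty is a deliberate choice here: a container that crashes immediately is easier to diagnose than one that serves errors later.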

Run containers

Check out sources from git and run the docker-compose

$ git clone https://github.com/kokospapa8/ecs-fargate-sample-app.git

$ cd ecs-fargate-sample-app

$ docker-compose -f docker-compose-staging-api.yml up --build

# add the -d option for detached mode; I explicitly left it out to see access logs

# if you get an error running docker-compose or docker, you probably don't have privileges to run docker as your user,

# refer to https://docs.docker.com/engine/install/linux-postinstall/

# instead of building the images you can pull them from ECR - https://docs.docker.com/compose/reference/pull/

# in order to pull the images from the repository, make sure you add the image field to each docker-compose file - https://docs.docker.com/compose/compose-file/#image

$ docker-compose -f docker-compose-staging-api.yml pull

$ docker-compose -f docker-compose-staging-api.yml up

Once the docker containers are up and running with no errors shown, access the public ip of your app instance.

Attach instance behind ALB

Attach your instance to the ALB's target group; you will then start seeing the ALB's health checks in the log.
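Before relying on the ALB, it can help to probe the instance yourself the way a health check would. The sketch below uses only the standard library; the function name `is_healthy` is mine, and treating any 2xx response as healthy is an assumption (check the success codes configured on your target group).

```python
import urllib.error
import urllib.request


def is_healthy(url, timeout=5.0):
    """Return True if a GET request to `url` answers with a 2xx status code."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (urllib.error.URLError, OSError):
        # Covers refused connections, timeouts, DNS failures and HTTP error statuses.
        return False


if __name__ == "__main__":
    # Replace <public_ip> with the public ip of your api instance.
    print(is_healthy("http://<public_ip>/"))
```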

For worker instance

Follow the same docker setup steps as before, or create an AMI from the api instance and start a new instance from it in the private subnet.

$ git clone https://github.com/kokospapa8/ecs-fargate-sample-app.git

$ cd ecs-fargate-sample-app

$ docker-compose -f docker-compose-staging-worker.yml up --build

Caveats

Everything checks out, but in order to use this setup at production level, there are a couple of problems we need to address.

- Scaling services

- Updating sources for further development

Scaling services

We can create a launch configuration and attach it to an Auto Scaling group for automatic scaling.

We need to prepare a couple of things for this to work:

- Each instance must have environment variables injected on startup. There are a couple of ways to do this

- Pull new source

- Run docker-compose on start

There are various ways to accomplish this; you can search for other methods.

Aws Ec2 run script program at startup
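One possible way to cover the three steps above is an EC2 user data script attached to the launch configuration. The sketch below only renders such a script as text. The function name `render_user_data` and the path `/home/ubuntu/ecs-fargate-sample-app` are assumptions (the path presumes the repository is already cloned in the AMI). Keep in mind that user data is readable from the instance metadata, so a secrets store may be a better home for passwords.

```python
import shlex


def render_user_data(env_vars, repo_dir, compose_file):
    """Render a shell script suitable for use as EC2 user data."""
    lines = ["#!/bin/bash", "set -euo pipefail"]
    # 1. inject environment variables (values are shell-quoted)
    for name, value in sorted(env_vars.items()):
        lines.append(f"export {name}={shlex.quote(value)}")
    lines += [
        # 2. pull new source
        f"cd {shlex.quote(repo_dir)}",
        "git pull",
        # 3. run docker-compose on start, detached so the boot can finish
        f"docker-compose -f {shlex.quote(compose_file)} up --build -d",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_user_data(
        {"DB_HOST": "<mysql_endpoint>", "REDIS_HOST": "<redis_endpoint>"},
        "/home/ubuntu/ecs-fargate-sample-app",  # hypothetical checkout location
        "docker-compose-staging-api.yml",
    ))
```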

Updating sources for further development

- Configuration changes - environment

- pulling new src from git

Brute force

Log in to each instance, update the source and build the image manually.

- ssh into each ec2 instance

$ ssh -i "/path/to/keyfile/" ubuntu@<public_ip>

$ git pull

$ docker-compose -f docker-compose-staging-api.yml up --build

Obviously this is not a great idea if you have multiple ec2 instances running. Also, ip addresses change if instances are running behind an Auto Scaling group.

Fabric

You can use Fabric to alleviate this painful process. It's a library designed to execute shell commands remotely over SSH.

I have provided a sample fabfile for accessing ec2 instances in public subnets.

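import os

import boto.exception
from boto.ec2 import connect_to_region


def _get_ec2_instances():
    """Return the staging ec2 instances tagged Name=ecs-sample-api."""
    connection = connect_to_region(
        region_name="ap-northeast-2",  # TODO beta env
        aws_access_key_id=os.getenv("AWS_ACCESS_KEY_ID"),
        aws_secret_access_key=os.getenv("AWS_SECRET_ACCESS_KEY"),
    )
    instances = []
    try:
        reservations = connection.get_all_reservations(
            filters={"tag:Name": "ecs-sample-api", "tag:env": "staging"}
        )
        # Each reservation can hold several instances; flatten them into one list.
        for reservation in reservations:
            instances.extend(reservation.instances)
    except boto.exception.EC2ResponseError as e:
        print(e)
        return []  # return an empty list so callers can still iterate
    # Drop empty entries; a real list (not a lazy filter object) also works on Python 3.
    return [instance for instance in instances if instance]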

kokospapa8/ecs-fargate-sample-app

When you run the fabfile, it uses the boto library to find ec2 instances whose Name tag is ecs-sample-api. Therefore you need to set the Name tag to ecs-sample-api on each ec2 instance (the filter above also requires the env tag to be staging).

# you need to install the fabric and boto libraries

$ pip install fabric boto

# edit fabfile.py

# SSH_KEY= "PATH/TO/YOUR/SSH_KEY"

# set env for AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY with ec2 describe policies

$ fab --list

$ fab gitpull

$ fab docker-restart

Wrap up

We are done with the staging environment setup. We now have docker containers running in a cloud environment. However, there are a couple of concerns I would like to address.

- Accessing each instance manually to update the source is not secure

- I would like to bring immutability to container deployment.

- I would like to fully utilize my compute nodes for containers.

Let's move to part3

How to deploy django app to ECS Fargate part3

Jinwook Baek ・ Jul 3 ・ 12 min read

Reference

Save yourself a lot of pain (and money) by choosing your AWS Region wisely

VPC with public and private subnets (NAT)

Securely Connect to Linux Instances Running in a Private Amazon VPC | Amazon Web Services
