In the previous post, I looked into how to optimize telemetry and alerts.

In this article, I look into the offline scenario.

Offline Environment

I tend to assume that 99.99% of applications can talk to the internet all the time, but that is not always true. An application may go offline from time to time, or be completely offline due to security concerns.

I found similar questions on Stack Overflow, too. One example is:

Stack Overflow: Can Application Insights be used off-line

48-hour limitation

It sounds like Application Insights has a 48-hour limit when uploading old data. This makes sense to me, as it is a telemetry analytics platform where near real-time data has more meaning. So where can I store more "log"-type data for historical analysis?
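To make the limit concrete, here is a minimal sketch of a hypothetical helper, partitionByAge, that splits saved telemetry into items that may still fall inside the 48-hour window and items that are too old. It assumes each item carries an ISO 8601 time property (Node.js SDK envelopes have one), and the 48-hour figure comes from the limitation described above, not from an official SDK constant.

```javascript
// Hypothetical helper: split saved telemetry by age.
// Assumption: each item has an ISO 8601 "time" property, like SDK envelopes.
const INGESTION_WINDOW_MS = 48 * 60 * 60 * 1000; // 48 hours in milliseconds

function partitionByAge(items, now = new Date()) {
  const recent = [];
  const stale = [];
  for (const item of items) {
    const ageMs = now.getTime() - new Date(item.time).getTime();
    // Inside the window: could still go to Application Insights.
    // Older (or unparseable, which yields NaN): needs another destination.
    (ageMs <= INGESTION_WINDOW_MS ? recent : stale).push(item);
  }
  return { recent, stale };
}
```

Anything that ends up in stale is the kind of data the rest of this article tries to keep somewhere else.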

Azure Log Analytics Workspace

One strong candidate is Log Analytics Workspace.

There are several reasons why this may be good fit.

- This is also Azure Monitor family

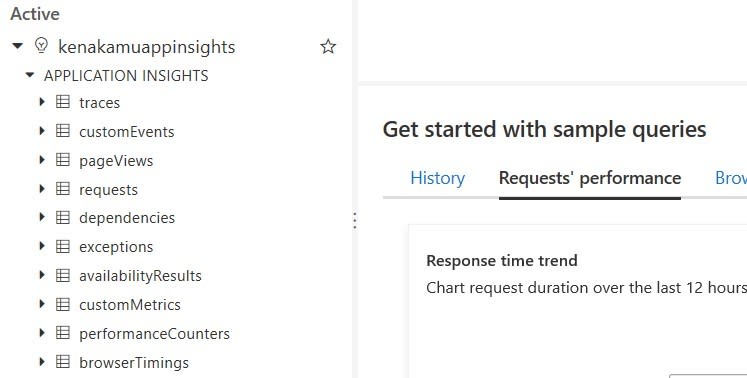

- I can use same Kusto Query experience

- Alert or any other common feature of Azure Monitor works

- It has API as well as agent to load the log

Collect Application Insights logs

One of the great points of Application Insights is that it provides an SDK that "auto-collects" many things. See the Application Insights SDK for Node.js series for details.

There are several ways to save the log to disk.

- Implement Telemetry Processor

- Modify SDK

- Send telemetry to local server

Implement Telemetry Processor

Application Insights for Node.js lets me preprocess data with telemetry processors (see "Preprocess data with Telemetry Processors"), so I can run my own logic before data is sent to the Application Insights server.

By returning false from the processor, I can stop the telemetry from being sent to the cloud.

1. Add my own telemetry processor.

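```javascript
const appInsights = require("applicationinsights");
// Assumes the SDK has already been configured with setup() and start().

appInsights.defaultClient.addTelemetryProcessor((envelope) => {
  envelope.tags["ai.cloud.role"] = "frontend";
  envelope.tags["ai.cloud.roleInstance"] = "frontend-1";
  // Keep a local copy of the telemetry.
  saveToDisc(envelope);
  // Returning false stops the SDK from sending this item to the cloud.
  return false;
});
```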

2. Add a function to save data to the local disk.

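```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

function saveToDisc(payload) {
  const directory = path.join(os.tmpdir(), "applicationinsights");
  try {
    if (!fs.existsSync(directory)) {
      fs.mkdirSync(directory, { recursive: true });
    }
    // One file per day (e.g. 2024-01-03.json), one JSON envelope per line.
    const fileName = new Date().toISOString().split("T")[0] + ".json";
    const fileFullPath = path.join(directory, fileName);
    fs.appendFile(fileFullPath, JSON.stringify(payload) + os.EOL, { mode: 0o600 }, (error) => {
      // Only log when the write actually failed.
      if (error) {
        console.log(error.message);
      }
    });
  } catch (error) {
    console.log(error.message);
  }
}
```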

There are several points to consider if I take this approach.

- Buffer data in memory to improve performance, rather than writing to disk every time.

- Flush data to disk when the buffer reaches a threshold or a certain amount of time has passed.

- Write synchronously when Node.js is exiting or crashing, because asynchronous writes may not complete.

The SDK already has these capabilities internally, and the following code is just an example I wrote after researching the SDK.

```javascript
const appInsights = require("applicationinsights");
const fs = require("fs");
const os = require("os");
const path = require("path");

const _maxItemInBuffer = 100;      // flush when this many items are buffered
const _getBatchIntervalMs = 10000; // ...or after 10 seconds, whichever comes first
let _timeoutHandle = null;
const _buffer = [];

// ...

appInsights.defaultClient.addTelemetryProcessor((envelope) => {
  envelope.tags["ai.cloud.role"] = "myapp";
  envelope.tags["ai.cloud.roleInstance"] = "myapp1";
  savePayload(envelope);
  return false; // keep the item local; do not send it to the cloud
});

// ...

function flush() {
  saveToDiscSync();
  process.exit();
}

function savePayload(payload) {
  _buffer.push(payload);
  if (_buffer.length >= _maxItemInBuffer) {
    saveToDisc();
  } else if (!_timeoutHandle) {
    // Start one timer per batch, not one timer per item.
    _timeoutHandle = setTimeout(saveToDisc, _getBatchIntervalMs);
  }
}

function getFileFullPath() {
  const directory = path.join(os.tmpdir(), "applicationinsights");
  if (!fs.existsSync(directory)) {
    fs.mkdirSync(directory, { recursive: true });
  }
  const fileName = new Date().toISOString().split("T")[0] + ".json";
  return path.join(directory, fileName);
}

// Empty the buffer into one newline-delimited JSON string and cancel the timer.
function takeBatch() {
  if (_timeoutHandle) {
    clearTimeout(_timeoutHandle);
    _timeoutHandle = null;
  }
  const batch = _buffer.map((x) => JSON.stringify(x)).join(os.EOL);
  _buffer.length = 0;
  return batch;
}

// Asynchronous write for normal operation.
function saveToDisc() {
  if (_buffer.length === 0) return;
  const batch = takeBatch();
  try {
    fs.appendFile(getFileFullPath(), batch + os.EOL, { mode: 0o600 }, (error) => {
      if (error) console.log(error.message);
    });
  } catch (error) {
    console.log(error.message);
  }
}

// Synchronous write for shutdown: async I/O does not complete in an "exit" handler.
function saveToDiscSync() {
  if (_buffer.length === 0) return;
  const batch = takeBatch();
  try {
    fs.appendFileSync(getFileFullPath(), batch + os.EOL, { mode: 0o600 });
  } catch (error) {
    console.log(error.message);
  }
}

process.on("SIGINT", flush);
process.on("SIGTERM", flush);
process.on("SIGHUP", flush);
// The process is already exiting here, so only save; don't call process.exit() again.
process.on("exit", saveToDiscSync);
```
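Before uploading anything, the daily files written by saveToDisc or saveToDiscSync have to be read back. Here is a minimal sketch of a hypothetical readSavedTelemetry helper, assuming one JSON-serialized envelope per line as in the code above. It accepts both "\n" and "\r\n" line endings, since os.EOL differs between platforms.

```javascript
const fs = require("fs");

// Hypothetical helper: parse a newline-delimited JSON telemetry file.
function readSavedTelemetry(fileFullPath) {
  return fs
    .readFileSync(fileFullPath, "utf8")
    .split(/\r?\n/)                         // handle both "\n" and "\r\n"
    .filter((line) => line.trim() !== "")  // skip blank lines
    .map((line) => JSON.parse(line));
}
```

Reading synchronously keeps the sketch simple; for very large files a streaming reader (for example, the readline module) would use less memory.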

Modify SDK

I cannot strongly recommend this either, but it is the easiest way to fully utilize the SDK's capabilities. The drawback is that I need to repeat the change every time I move to a new SDK version.

Sender.ts has a send function, which is where the SDK sends data to the cloud, so that is the point to tweak. If you reuse the existing save-to-disk mechanism, you should also consider changing MAX_BYTES_ON_DISK, which limits how much data the SDK stores on disk.

Send telemetry to local server

Unfortunately, Azure Stack doesn't support Application Insights, but you can send the data to a local server and store it in a database, just as we can save it to disk. I won't say whether saving to disk or to a database is better, as there are many points to consider.
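As a rough illustration, here is a minimal sketch of a local server that receives telemetry and appends it to a file. Several things are assumptions I have not verified against every SDK version: that the SDK is redirected here through the IngestionEndpoint part of its connection string, that it POSTs newline-delimited JSON envelopes, that the body may be gzip-compressed, and that the JSON reply shape keeps the SDK from retrying. decodeTelemetryBody and createTelemetryServer are hypothetical names.

```javascript
const fs = require("fs");
const http = require("http");
const zlib = require("zlib");

// Turn a raw request body into one string per telemetry envelope.
function decodeTelemetryBody(body, contentEncoding) {
  const text = contentEncoding === "gzip"
    ? zlib.gunzipSync(body).toString("utf8")
    : body.toString("utf8");
  return text.split("\n").filter((line) => line.trim() !== "");
}

// Create (but do not start) a server that appends every POSTed envelope to filePath.
function createTelemetryServer(filePath) {
  return http.createServer((req, res) => {
    const chunks = [];
    req.on("data", (chunk) => chunks.push(chunk));
    req.on("end", () => {
      try {
        const lines = decodeTelemetryBody(Buffer.concat(chunks), req.headers["content-encoding"]);
        if (lines.length > 0) {
          fs.appendFileSync(filePath, lines.join("\n") + "\n");
        }
        // Report all items as accepted (assumed response shape).
        res.writeHead(200, { "Content-Type": "application/json" });
        res.end(JSON.stringify({ itemsReceived: lines.length, itemsAccepted: lines.length, errors: [] }));
      } catch (error) {
        res.writeHead(400);
        res.end(error.message);
      }
    });
  });
}
```

To try it, start the server with createTelemetryServer("telemetry.json").listen(8080) and point the SDK at it with a connection string such as InstrumentationKey=<your key>;IngestionEndpoint=http://localhost:8080/. Writing to a file keeps the example dependency-free; swapping appendFileSync for a database insert is where the disk-versus-database trade-off comes in.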

Upload saved log to Log Analytics Workspace

I can use either the Log Analytics agent or the HTTP Data Collector API.

See Custom logs in Azure Monitor and Send log data to Azure Monitor with the HTTP Data Collector API for more detail.
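For the API route, the main work is signing each request. The following is a minimal sketch based on the request format documented for the HTTP Data Collector API: a SharedKey authorization header computed as an HMAC-SHA256 over a fixed string-to-sign, keyed with the base64-decoded workspace key. workspaceId, sharedKey and logType are placeholders you supply, and buildSignature, buildRequest and send are hypothetical helper names. Microsoft has since announced the deprecation of this API in favor of the Logs Ingestion API, so check the current documentation before relying on it.

```javascript
const crypto = require("crypto");
const https = require("https");

// Authorization header value for the Data Collector API.
function buildSignature(workspaceId, sharedKey, date, contentLength) {
  const stringToSign = `POST\n${contentLength}\napplication/json\nx-ms-date:${date}\n/api/logs`;
  const hash = crypto
    .createHmac("sha256", Buffer.from(sharedKey, "base64"))
    .update(stringToSign, "utf8")
    .digest("base64");
  return `SharedKey ${workspaceId}:${hash}`;
}

// Build request options and body; logType becomes the custom table name (with "_CL").
function buildRequest(workspaceId, sharedKey, logType, records, date = new Date()) {
  const body = JSON.stringify(records);
  const contentLength = Buffer.byteLength(body, "utf8"); // bytes, not characters
  const rfc1123Date = date.toUTCString();                // e.g. "Wed, 03 Jan 2024 00:00:00 GMT"
  return {
    options: {
      hostname: `${workspaceId}.ods.opinsights.azure.com`,
      path: "/api/logs?api-version=2016-04-01",
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        "Content-Length": contentLength,
        "Log-Type": logType,
        "x-ms-date": rfc1123Date,
        Authorization: buildSignature(workspaceId, sharedKey, rfc1123Date, contentLength),
      },
    },
    body,
  };
}

// Send the request; resolves with the HTTP status code (200 means accepted).
function send({ options, body }) {
  return new Promise((resolve, reject) => {
    const req = https.request(options, (res) => {
      res.resume(); // discard the response body
      res.on("end", () => resolve(res.statusCode));
    });
    req.on("error", reject);
    req.end(body);
  });
}
```

Usage would look like send(buildRequest(workspaceId, sharedKey, "AppTelemetry", envelopes)), after which the records appear in a custom table named AppTelemetry_CL.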

What I gain and lose by doing this?

Gain:

- I can upload Application Insight data which is older than 48 hours

- I can use any log tools to manipulate data before sending to cloud Lose: I lose all Application Insight specific features

- Clear category in Log. All logs comes into one collection if I simply upload the file, but Application Insight creates separate collection per category.

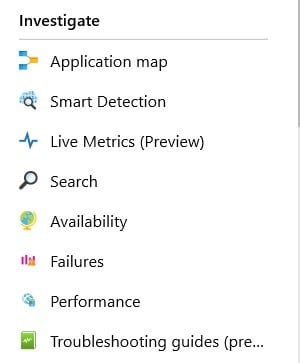

- Investigate features

I still have all the common Azure Monitor features, such as KQL and alerts.
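One way to soften the loss of clear categories is to group the saved envelopes by telemetry type before uploading, and send each group with its own Log-Type so it lands in its own custom table. This is a minimal sketch, assuming the envelopes look like the Node.js SDK's, with data.baseType set to values such as "RequestData" or "ExceptionData". groupByCategory and the "App..." naming scheme are hypothetical.

```javascript
// Hypothetical helper: group envelopes by telemetry type.
// Assumption: envelope.data.baseType holds values like "RequestData".
function groupByCategory(envelopes) {
  const groups = new Map();
  for (const envelope of envelopes) {
    const baseType = (envelope.data && envelope.data.baseType) || "Unknown";
    // Hypothetical naming: "RequestData" -> "AppRequest". Log-Type values
    // may contain only letters, numbers and underscores.
    const logType = "App" + baseType.replace(/Data$/, "").replace(/[^A-Za-z0-9_]/g, "");
    if (!groups.has(logType)) {
      groups.set(logType, []);
    }
    groups.get(logType).push(envelope);
  }
  return groups;
}
```

Each entry in the map can then be uploaded separately, for example with the HTTP Data Collector API using the map key as the Log-Type header, which gives tables such as AppRequest_CL and AppException_CL. This recovers the separation, not the Application Insights features built on top of it.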

Summary

As far as my research this time goes, I cannot say that Application Insights supports the offline scenario, but it does support the scenario of intermittent internet connection loss.
