How do I know whether my team is producing quality outcomes and whether they are improving as they march forward?

It’s doable with a smart and sweet KPI (Key Performance Indicator), like the one below. It lets me quickly and regularly understand product quality and identify areas for further improvement. KPIs are simply extracted from metrics, with a narrower focus.

Let me explain what metrics and KPIs are, how they help, and how they relate to each other.

Software quality metrics are quantifiable measures used to track, monitor, and assess the success or failure of various software quality processes.

In the world of software quality metrics, many statistics can be measured to understand the overall health of the development process as well as the product under development.

For example, think of vehicle quality metrics: suspension, gearbox, ignition, plugs, wiring, and so on, a whole lot of features and processes. A typical car garage would measure all of these to make sure they are within their normal ranges before issuing a fitness certificate. Let's call this whole calibration the car quality metrics.

On the other hand, a KPI is the single most important piece to watch. For example, a car's mileage KPI (a single indicator) is what matters to a driver (the end user, or in our case a product manager responsible for product success). It tells the driver whether everything is fine or whether a deeper investigation by an expert may be needed. Let's call it a car KPI.

A KPI is something we keep an eye on all the time in software development and testing, too. For example, I can collect many metrics, but monitoring all of them daily with the same level of accuracy isn't easy. One must add some context to the collected metrics to make them meaningful. Am I looking at the right metric?

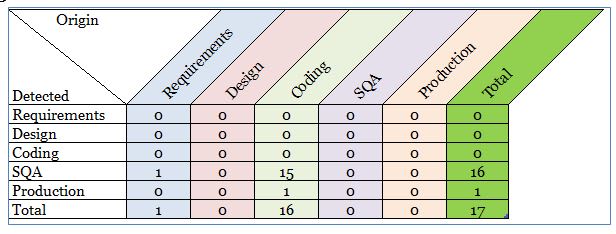

The Bugs discovery scorecard, shown above, comes in handy. It's measurable and quantifiable, and it tells you whether there is a problem and where the problem is.

To construct this efficient, easily understandable KPI for any product, we need to understand two key ingredients:

Origin: It defines when a bug was injected into the system.

Detected: It defines at what stage a bug was caught or identified.

Usually, I obtain this scoring by adding two custom fields to my favorite bug tracking system: one to record the bug origin and one to record the bug detection phase. It’s also good material for post-release sessions and discussions such as a release retrospective.
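Once every bug carries those two fields, the scorecard is just a count per (origin, detected) pair. Here is a minimal Python sketch, assuming the phases are named REQ, DESIGN, CODING, SQA, and PROD in that order; the `Phase`, `Bug`, `build_scorecard`, and `print_scorecard` names are hypothetical and not tied to any particular bug tracker's export format.

```python
from collections import Counter
from dataclasses import dataclass
from enum import IntEnum


class Phase(IntEnum):
    """Lifecycle phases in order (assumed names, not taken from the scorecard image)."""
    REQ = 0
    DESIGN = 1
    CODING = 2
    SQA = 3
    PROD = 4


@dataclass(frozen=True)
class Bug:
    """A hypothetical bug record carrying the two custom fields."""
    bug_id: str
    origin: Phase    # phase in which the bug was injected
    detected: Phase  # phase in which the bug was caught


def build_scorecard(bugs):
    """Return {(origin, detected): count} for every origin/detected pair."""
    bugs = list(bugs)  # allow generators, since we iterate twice
    for bug in bugs:
        if bug.detected < bug.origin:
            raise ValueError(f"{bug.bug_id}: detected before it originated")
    counts = Counter((bug.origin, bug.detected) for bug in bugs)
    return {(o, d): counts[(o, d)] for o in Phase for d in Phase}


def print_scorecard(card):
    """Print origins as rows and detection phases as columns."""
    print("origin/detected".ljust(16) + "".join(p.name.rjust(8) for p in Phase))
    for o in Phase:
        cells = "".join(str(card[(o, d)]).rjust(8) for d in Phase)
        print(o.name.ljust(16) + cells)


if __name__ == "__main__":
    # Hypothetical records, echoing the kinds of cases discussed in this post.
    bugs = [
        Bug("BUG-1", Phase.CODING, Phase.PROD),
        Bug("BUG-2", Phase.REQ, Phase.SQA),
        Bug("BUG-3", Phase.DESIGN, Phase.DESIGN),
    ]
    print_scorecard(build_scorecard(bugs))
```

Using an `IntEnum` keeps the phases ordered, so a record claiming a bug was detected before it was injected can be rejected up front.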

Some examples of getting meaning from this data

There are multiple ways to benefit from this scorecard.

For example, it’s perfectly fine for a bug to originate in the “Requirements” phase, but it’s surely not right to discover it as late as Production. Finding a requirement- or design-level issue late in the game may warrant a lot of rework or lead to the failure of the product or a feature.
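One way to flag these late discoveries is to count how many phases a bug slipped past between its origin and its detection. The sketch below assumes the same ordered phase names (REQ, DESIGN, CODING, SQA, PROD); the `phases_escaped` and `late_finds` helpers and the default threshold of 2 are hypothetical illustrations, not rules from this post.

```python
from enum import IntEnum


class Phase(IntEnum):
    """Lifecycle phases in order (assumed names)."""
    REQ = 0
    DESIGN = 1
    CODING = 2
    SQA = 3
    PROD = 4


def phases_escaped(origin, detected):
    """How many phases a bug slipped past; 0 means caught where it was injected."""
    if detected < origin:
        raise ValueError("a bug cannot be detected before it originates")
    return int(detected - origin)


def late_finds(bugs, threshold=2):
    """Return the ids of bugs that slipped past at least `threshold` phases.

    `bugs` is an iterable of (bug_id, origin, detected) tuples; the default
    threshold is an arbitrary illustration.
    """
    return [bug_id for bug_id, origin, detected in bugs
            if phases_escaped(origin, detected) >= threshold]


if __name__ == "__main__":
    bugs = [
        ("BUG-7", Phase.REQ, Phase.PROD),    # requirement issue found by customers
        ("BUG-8", Phase.REQ, Phase.REQ),     # caught where it started
        ("BUG-9", Phase.CODING, Phase.SQA),  # one phase late
    ]
    print(late_finds(bugs))  # ['BUG-7']
```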

The Bugs discovery scorecard matrix isn't just for the development or QA team. It helps teams at all levels, from analysts, designers, developers, and QA testers to management, measure quality, identify gaps, and improve continuously. In the image above, for example, one coding bug was caught in PROD (by customers) and one REQ bug was caught in SQA (but not in the coding phase).

From my experience of managing teams, I look for ways to praise my team too. For example, if a design bug is caught in the development phase rather than the design phase, it needs significant rework, but that rework is still less than it would be if the bug were caught in the SQA or PROD phase.

I can easily gauge the maturity level of my teams working in the requirements, design, and development phases, and I can measure the quality standard of the testing team.
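To put a number on that maturity, one common approach (sometimes called phase containment) is the share of bugs injected in a phase that were also caught in that phase; for the testing team, one option is the share of bugs reaching SQA or later that SQA caught before production. This is a sketch under assumptions: the phase names and both helper functions are hypothetical, and the scorecard itself does not prescribe these formulas.

```python
from collections import defaultdict
from enum import IntEnum


class Phase(IntEnum):
    """Lifecycle phases in order (assumed names)."""
    REQ = 0
    DESIGN = 1
    CODING = 2
    SQA = 3
    PROD = 4


def containment_by_origin(bugs):
    """For each origin phase, the share of its bugs caught in that same phase.

    `bugs` is an iterable of (origin, detected) pairs.
    """
    total = defaultdict(int)
    contained = defaultdict(int)
    for origin, detected in bugs:
        total[origin] += 1
        if detected == origin:
            contained[origin] += 1
    return {phase: contained[phase] / total[phase] for phase in total}


def sqa_catch_rate(bugs):
    """Of the bugs detected in SQA or PROD, the share that SQA caught.

    Returns None when no bug reached SQA.
    """
    reached = [detected for _, detected in bugs if detected >= Phase.SQA]
    if not reached:
        return None
    return sum(1 for d in reached if d == Phase.SQA) / len(reached)


if __name__ == "__main__":
    # Hypothetical (origin, detected) pairs.
    bugs = [
        (Phase.CODING, Phase.CODING),
        (Phase.CODING, Phase.PROD),
        (Phase.REQ, Phase.SQA),
        (Phase.REQ, Phase.REQ),
    ]
    print(containment_by_origin(bugs))
    print(sqa_catch_rate(bugs))
```

A low containment rate for an origin phase points at the team that owns that phase, which is exactly where origin data earns its keep.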

Top comments (5)

This is a fantastic idea, very well explained. I'm actually going to implement a form of this for my team.

There are a couple of possible improvements, depending on team needs:

Origin Usefulness

For some teams, the origin may not be particularly useful. It certainly can take a good deal of work to track down in some cases ("Wait, this bug got into prod, was it from coding or design stage, or perhaps a bug fix?"). The point is, wherever the bug came from, it didn't get caught until prod.

Now, it may be useful to figure out where one bug, or even a swarm of bugs, originated, so we can figure out what part of the workflow sprang a leak. However, as a rule, it might not always be worth tracking down at the time of reporting. Given a halfway decent workflow and VCS, you can always figure out the origin later.

That said, some teams might need the full origin/detected scheme. More power to 'em.

Weight

On the scorecard, one might get a slightly more useful single-number metric by assigning different weights to the various "Detected" stages.

For example, catching in the "Requirements" or "Design" stage should only have a multiplier of x0 [explained in a moment]. It indicates that the development team was able to anticipate the issue before coding even began.

By contrast, catching in "Production" should have a multiplier of, say, x3.

This might make more practical sense given an example. Let's look at two (simplified, no origin) scorecards.

PROJECT 1

PROJECT 2

Both have a TOTAL of 10. Sure, we can tell that Project 2 is having a harder time because of all the bugs getting to Prod (4!!). But imagine if we applied the weight multipliers.

PROJECT 1

PROJECT 2

To boil that down...

PROJECT 1: 10/10

PROJECT 2: 10/16

Yow! Project 2 is obviously having serious issues - its bugs are being caught far later than Project 1. We have the scorecard handy for the analysis, but we don't need to read it through to know the overall health. We now have a quick two-number metric that summarizes the critical information.

So, why the 0-multiplier in practice? Easy: what would a score of "10/1" tell you? Simply that, of the 10 bugs caught in the project, only one survived past the design phase.
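The two-number summary can be sketched in a few lines of Python. The multipliers follow this thread (Requirements and Design x0, Coding x1, Production x3); the x2 for SQA is an assumption, since it isn't stated, and the stage keys and function names are hypothetical.

```python
# Detection-stage multipliers from the thread: Requirements and Design x0,
# Coding x1, Production x3. SQA x2 is an assumption.
WEIGHTS = {"REQ": 0, "DESIGN": 0, "CODING": 1, "SQA": 2, "PROD": 3}


def weighted_score(detected_counts):
    """Return (total bugs, weighted sum) for a {detected stage: bug count} mapping."""
    total = sum(detected_counts.values())
    weighted = sum(WEIGHTS[stage] * count for stage, count in detected_counts.items())
    return total, weighted


def format_score(detected_counts):
    """Render the two-number summary as 'total/weighted'."""
    total, weighted = weighted_score(detected_counts)
    return f"{total}/{weighted}"


if __name__ == "__main__":
    # The "10/1" case: ten bugs, only one of which got past design
    # (assumed here to be caught in coding).
    print(format_score({"DESIGN": 9, "CODING": 1}))  # 10/1
```

Because early stages weigh zero, the second number only grows with bugs that escaped design, which is what makes "10/1" read as healthy at a glance.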

BTW, you could clearly expand this to fit the origin/detected format, for the teams that need that.

I like the idea of introducing the weights. Origin would still be needed, though; let me explain. From my experience of managing teams, I look for ways to praise my team too. For example, if a design bug is caught in the development phase rather than the design phase, it needs significant rework, but that rework is still less than when a bug is caught in the SQA or PROD phase. Do you see the idea? I'm fine with bugs as long as they are found early enough in the development lifecycle. Finding a requirement- or design-level issue late in the game may warrant a lot of rework or lead to the failure of the product or a feature.

For large products, origin helps identify the right team (the design team or any other team) to fine-tune in order to uplift product quality.

We need to see how we might extend the weights to origin in terms of level of effort; it usually takes longer and is harder to fix a design- or requirement-level bug once it is sitting in prod.

Ahh, I see the inherent difference between our teams! I can agree that origins would be crucial for yours, since you have different Design, Coding, and SQA teams. By contrast, at my company, one team covers the entire process.

I can also see how origin might be useful to my team in limited situations, but in general, we don't have the time to figure out origin on every bug. That aside, I'll make room in my bug tracker for an optional origin field.

As to weights, if I'm understanding this, the longer a bug lives, the higher the score it needs, yes? If so, it's actually pretty simple, although I can't really set up the table here.

Using the same multipliers...

If a bug is caught in Prod (3) and originated at Code (1), then

SCORE = CAUGHT - ORIGIN = 3 - 1 = 2.

Similarly, if a bug is caught in Prod (3) and originated at Design (0), then

SCORE = 3 - 0 = 3.

Like that?
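The CAUGHT - ORIGIN rule can be sketched per bug and summed per project. The stage multipliers follow this thread (Design x0, Code x1, Prod x3); REQ x0 comes from the earlier "Requirements or Design" multiplier, SQA x2 is an assumption, and `bug_score` and `project_score` are hypothetical names.

```python
# Stage multipliers from the thread; SQA = 2 is an assumption.
WEIGHTS = {"REQ": 0, "DESIGN": 0, "CODING": 1, "SQA": 2, "PROD": 3}


def bug_score(origin, caught):
    """Score one bug as CAUGHT - ORIGIN: the longer it lived, the higher the score."""
    score = WEIGHTS[caught] - WEIGHTS[origin]
    if score < 0:
        raise ValueError(f"a {origin} bug cannot be caught earlier, in {caught}")
    return score


def project_score(bugs):
    """Sum the per-bug scores for an iterable of (origin, caught) pairs."""
    return sum(bug_score(origin, caught) for origin, caught in bugs)


if __name__ == "__main__":
    print(bug_score("CODING", "PROD"))  # 2
    print(bug_score("DESIGN", "PROD"))  # 3
    print(project_score([("CODING", "PROD"), ("DESIGN", "PROD")]))  # 5
```

One consequence of these weights: REQ and DESIGN share x0, so a requirements bug caught during design scores 0, the same as a bug caught where it started. Teams that want to distinguish those cases would need distinct weights for the early phases.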

Ahh, nice math!

Yes, the longer a bug lives, the higher the score.

I don't know if I ever told you, but I absorbed this idea into Quantified Task Management: standards.mousepawmedia.com/qtm.html

I also talked about it here recently: legacycode.rocks/podcast-1/episode...